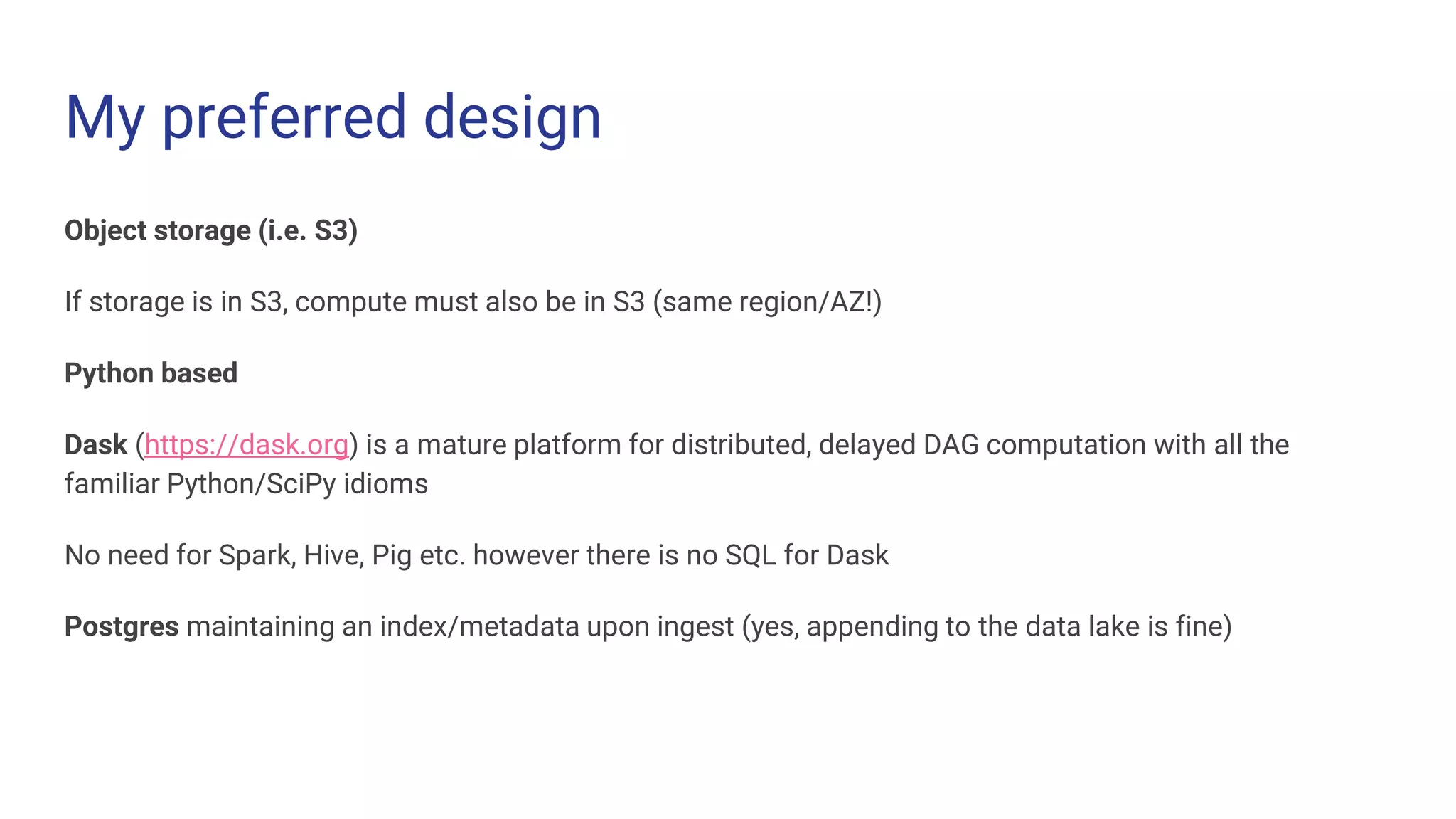

This document summarizes benchmarks for reading data stored in different file formats in a data lake. Scanning 1.2 GB of text data stored as JSON, CSV, and Parquet was fastest with Parquet, at 8.6 seconds. Retrieving a single row was also fastest from Parquet, at 1.75 seconds, because Parquet supports predicate pushdown, which limits how much data has to be read. The document concludes that Parquet is the best choice even for row-oriented data when that data is accessed through a distributed system such as a data lake.
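Predicate pushdown works because a Parquet file stores per-row-group statistics (such as the min and max of each column), so a reader can skip row groups that cannot contain a match without fetching them. The following is a simplified in-memory model of that idea in plain Python. It is not the Parquet format or any library's API: `RowGroup`, `make_row_groups`, and `find_by_id` are hypothetical names, and the rows are assumed to be sorted by `id`, which is the best case for skipping.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class RowGroup:
    """Hypothetical stand-in for a Parquet row group: rows plus min/max stats for 'id'."""
    rows: List[dict]
    id_min: int
    id_max: int


def make_row_groups(rows: List[dict], group_size: int) -> List[RowGroup]:
    """Split rows into fixed-size groups and record min/max 'id' per group, as a writer would."""
    groups = []
    for start in range(0, len(rows), group_size):
        chunk = rows[start:start + group_size]
        ids = [r["id"] for r in chunk]
        groups.append(RowGroup(chunk, min(ids), max(ids)))
    return groups


def find_by_id(groups: List[RowGroup], target: int) -> Tuple[List[dict], int]:
    """Return the matching rows and how many row groups actually had to be scanned."""
    matches, scanned = [], 0
    for g in groups:
        # Pushdown: the statistics alone prove this group cannot satisfy id == target,
        # so its rows are never read.
        if target < g.id_min or target > g.id_max:
            continue
        scanned += 1
        matches.extend(r for r in g.rows if r["id"] == target)
    return matches, scanned


rows = [{"id": i, "text": f"row {i}"} for i in range(10_000)]
groups = make_row_groups(rows, group_size=1_000)
hits, scanned = find_by_id(groups, 1234)
print(len(groups), scanned, hits)  # prints: 10 1 [{'id': 1234, 'text': 'row 1234'}]
```

Only one of the ten groups is scanned here. If the ids were shuffled across groups, each group's min/max range would cover most ids and little could be skipped, so how the data is laid out on write matters as much as the format.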

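![Methodology](https://image.slidesharecdn.com/datalakes-181231194327/75/Big-Data-Lakes-Benchmarking-2018-13-2048.jpg)

Methodology

Not tremendously scientific...

- Files stored in S3
- Compute nodes run in a Kubernetes pod (same region, same availability zone)
- Timings are wall-clock time, since CPU time is much smaller than network read time

In Dask (with `dask.dataframe` imported as `dd` in an earlier cell):

```python
%%timeit -n 4
# Build a lazy Dask DataFrame over every Parquet file matching the S3 glob
df = dd.read_parquet('s3://my-bucket/*.parquet', engine='fastparquet')
# Filter to a single id; .compute() triggers the actual read and returns a pandas DataFrame
df[df['id'] == 1234567890].compute()
```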
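The methodology reports wall time because these reads are I/O-bound: the process spends most of its time waiting on the network rather than computing. The sketch below is a stdlib-only illustration of that distinction, assuming `time.sleep` as a stand-in for waiting on an S3 response; `fake_network_read` and `timed` are hypothetical helpers, and this is not how the benchmark itself was timed (it used `%%timeit`).

```python
import time


def timed(fn, *args):
    """Run fn once and return (wall_seconds, cpu_seconds) for the current process."""
    wall0, cpu0 = time.perf_counter(), time.process_time()
    fn(*args)
    return time.perf_counter() - wall0, time.process_time() - cpu0


def fake_network_read(seconds: float) -> bytes:
    """Hypothetical stand-in for an S3 GET: the process mostly waits and uses little CPU."""
    time.sleep(seconds)
    return b"x" * 1024


wall, cpu = timed(fake_network_read, 0.2)
print(f"wall={wall:.2f}s cpu={cpu:.4f}s")
```

On a call like this, `cpu` stays near zero while `wall` tracks the wait, which is why CPU time would understate the real cost of reading each format from S3.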