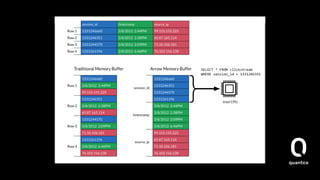

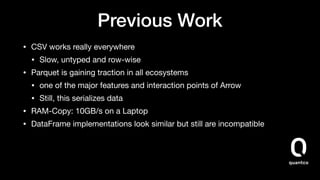

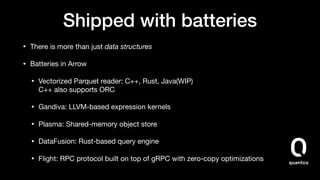

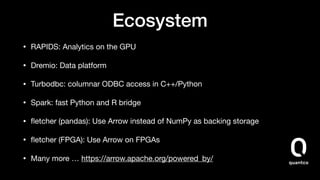

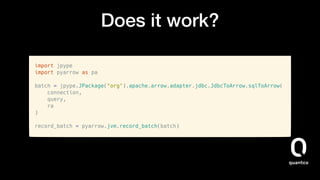

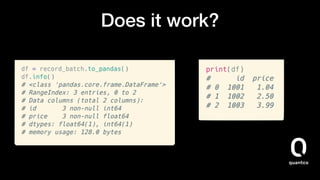

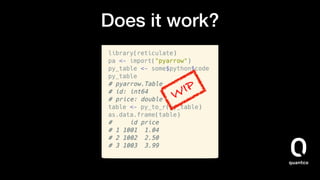

The presentation discusses the challenges of integrating separate data ecosystems in analytics and science, and presents Apache Arrow as a solution built on a common columnar data representation. It highlights Arrow’s support for multiple programming languages and ecosystem components such as vectorized readers and query engines. It closes by emphasizing the need for community collaboration to improve data analytics pipelines and interoperability across languages and platforms.
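
To make the idea of a columnar representation concrete, here is a minimal sketch using only the Python standard library. It is not Arrow's actual memory format or API; the sample records, the `to_columns` helper, and the field names are hypothetical and chosen purely for illustration. It shows the general principle: each field is stored in its own contiguous typed buffer, so a scan over one field does not touch the others, and the buffer can be shared without copying.

```python
from array import array

# Hypothetical sample records in row-oriented form (one dict per record).
rows = [
    {"id": 1, "score": 0.5},
    {"id": 2, "score": 1.5},
    {"id": 3, "score": 2.0},
]


def to_columns(records):
    """Pivot row-oriented records into one contiguous typed buffer per field.

    Illustrative helper only; this is not part of any Arrow library.
    """
    return {
        "id": array("q", (r["id"] for r in records)),        # 64-bit signed ints
        "score": array("d", (r["score"] for r in records)),  # 64-bit floats
    }


columns = to_columns(rows)

# A scan over one field reads only that field's buffer.
total_score = sum(columns["score"])

# The buffer protocol exposes the column without copying it, loosely
# analogous to handing a column to another consumer in the same process.
view = memoryview(columns["score"])
```

In this sketch the zero-copy `memoryview` stands in for the broader goal described above: if every tool agrees on one in-memory layout, data can move between them without a conversion step.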