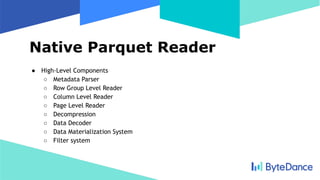

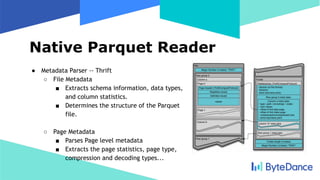

The document discusses the architecture and key features of ByteDance's native Parquet reader, a tool designed for efficient reading and processing of Parquet file format data. It highlights the advantages of columnar storage, compression algorithms, and various data types, along with detailed descriptions of the reader's components including metadata parsing, row group reading, and data materialization. Additionally, it addresses performance features like batch reading, filter push-down, and flexible data materialization to enhance efficiency in data processing.