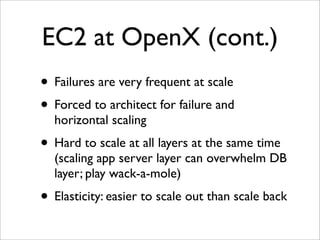

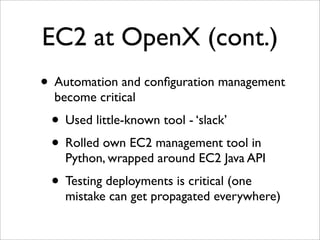

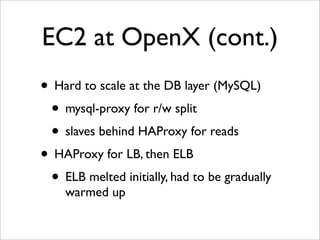

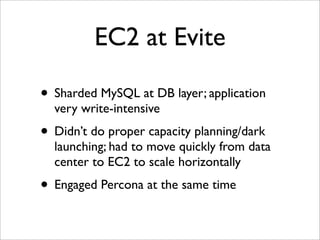

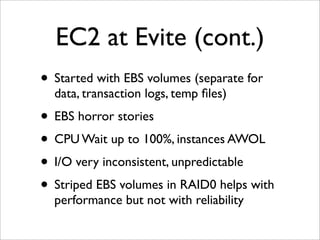

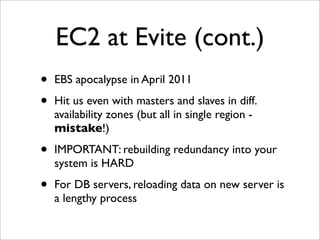

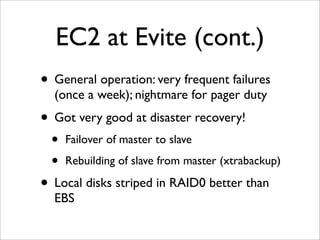

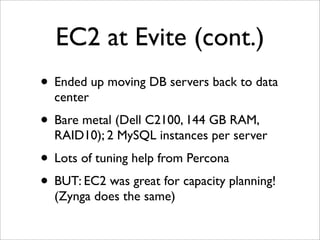

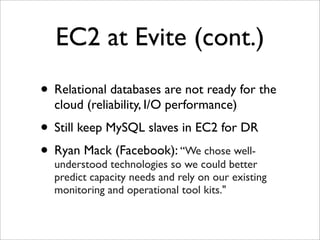

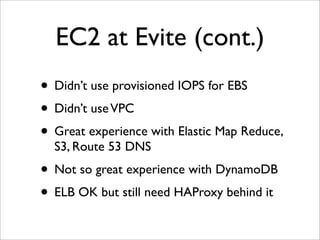

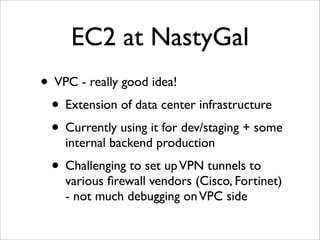

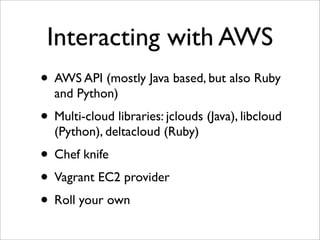

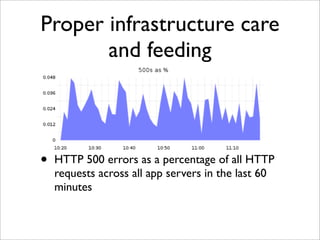

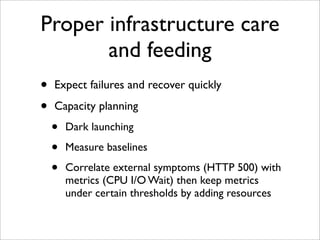

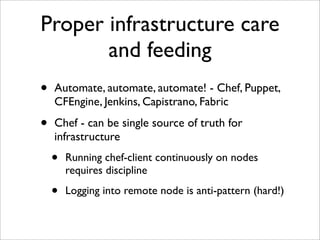

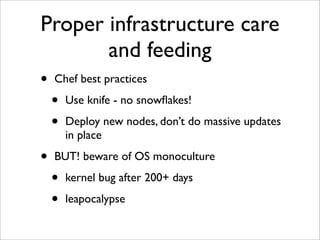

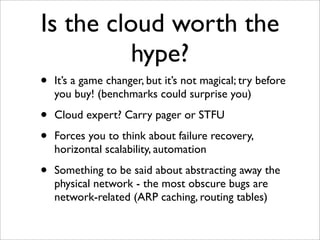

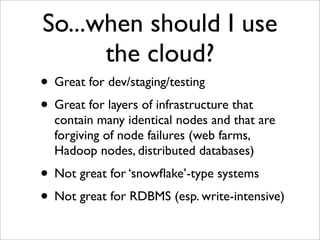

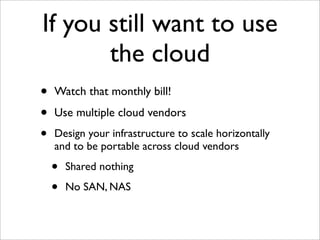

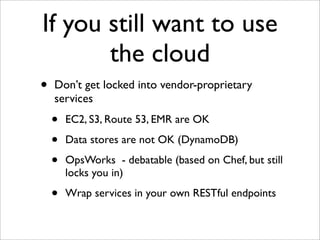

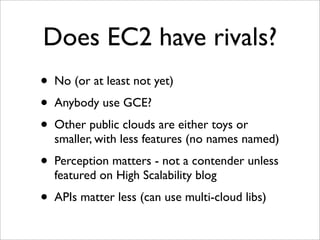

The document recounts the author's several years of experience with Amazon EC2, chronicling the challenges faced and lessons learned while running large-scale cloud infrastructure at several companies. Key topics include frequent failures when scaling, the importance of automation and configuration management, and the need for careful capacity planning, particularly for database systems. It weighs the advantages and limitations of cloud services such as EC2 and stresses the need for monitoring and disaster-recovery strategies.