This document summarizes DreamHost's presentation on building a public cloud with OpenStack. Key points:

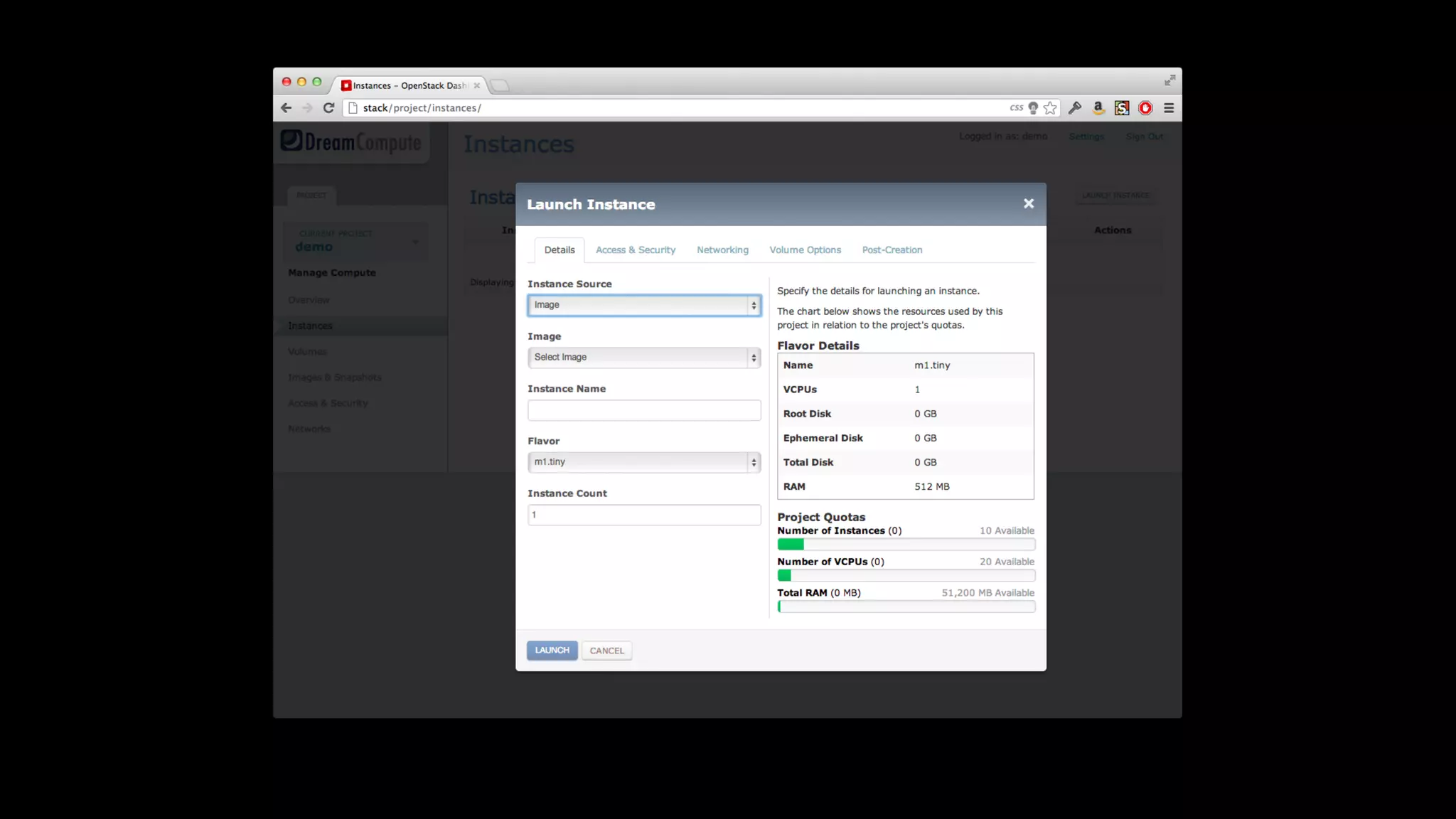

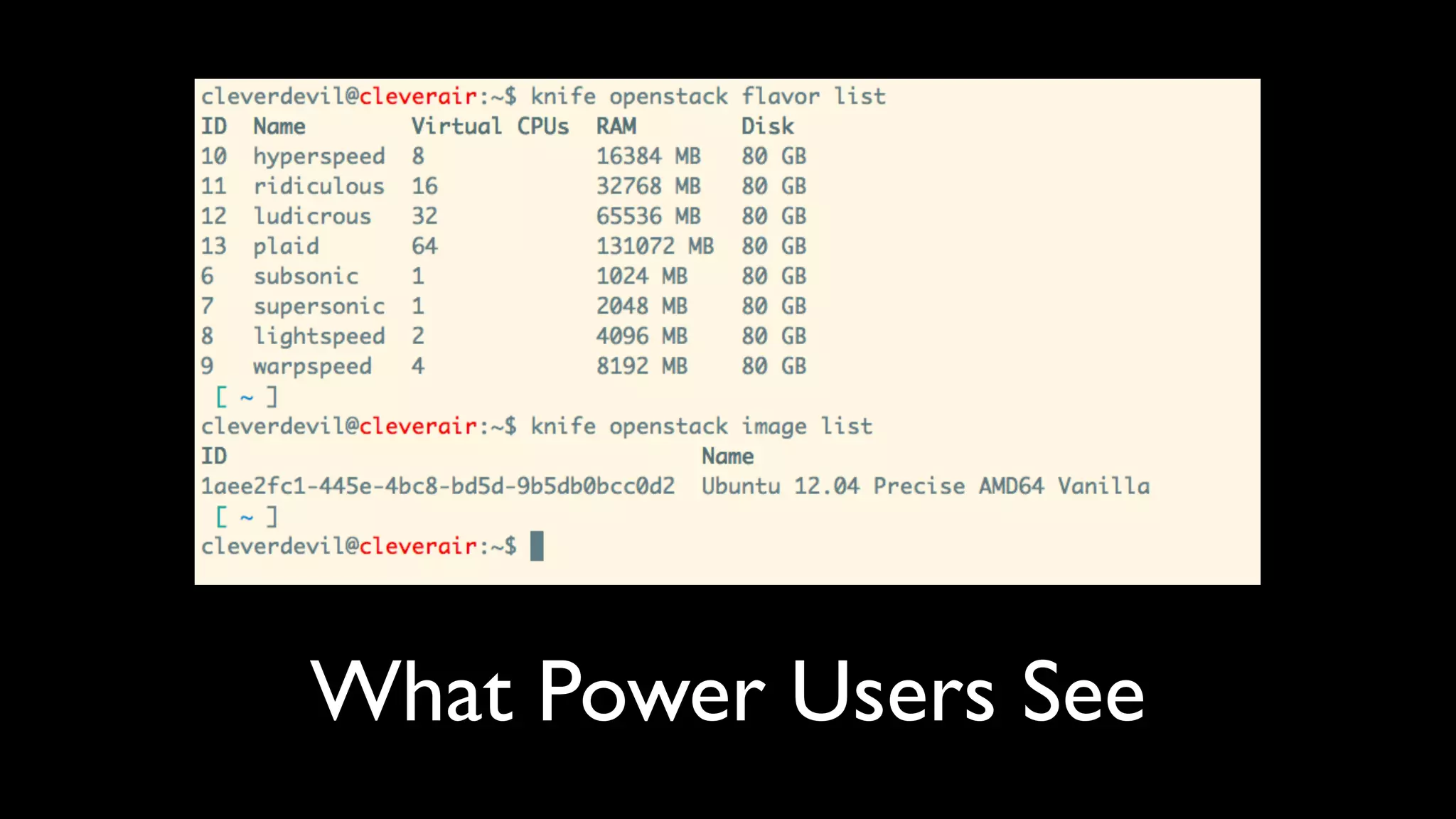

- DreamHost is using OpenStack for compute, storage, and networking in their public cloud offering called DreamCompute.

- For storage, they chose Ceph, which provides shared, scalable block and object storage.

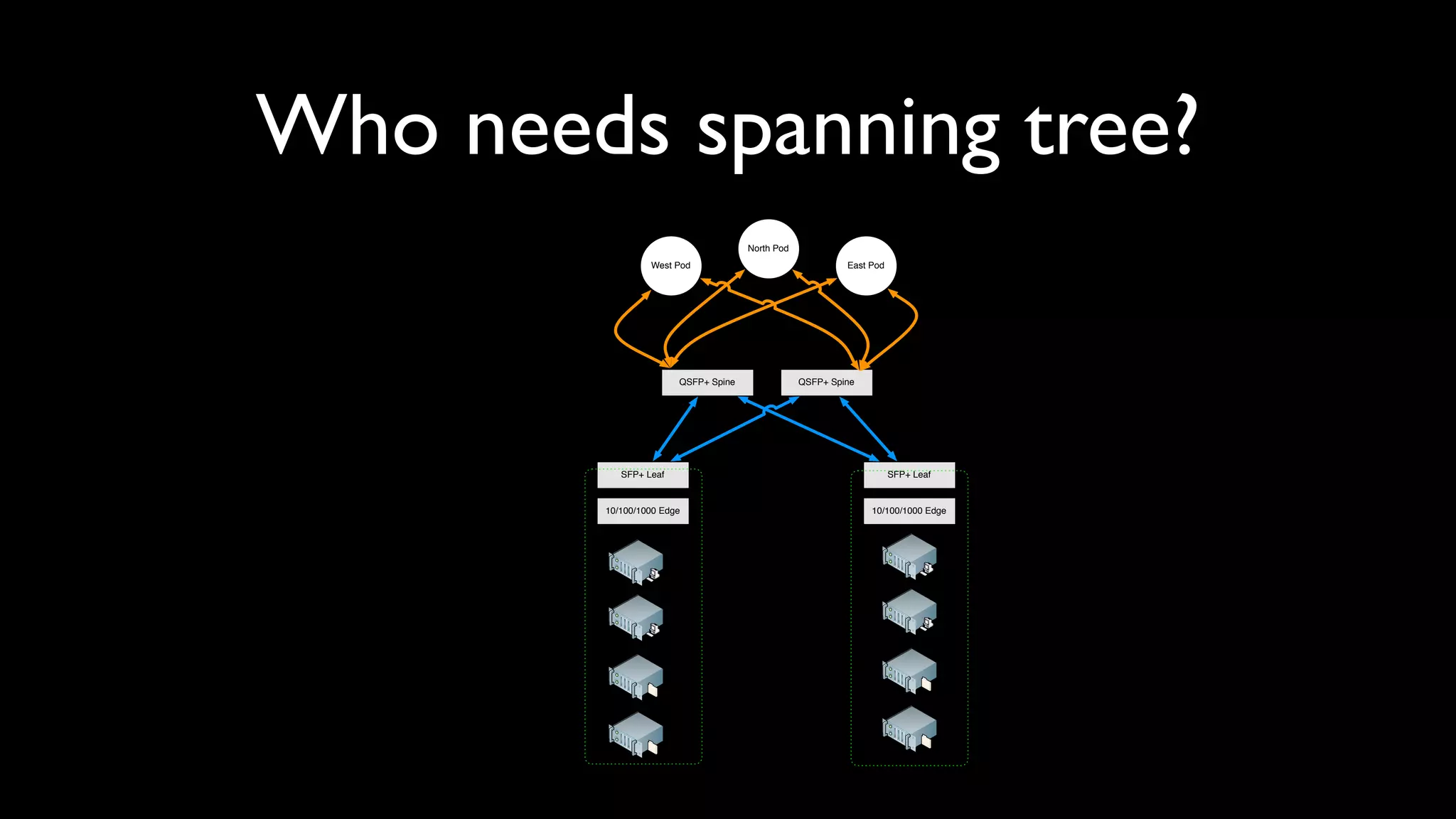

- Their network architecture uses 10 Gb switches in a spine-leaf topology, with logical networking software providing tenant isolation.

- Automation is key to managing the cloud infrastructure and providing services to customers.

- DreamHost discussed the considerations and challenges in building the cloud, such as scalability, speed, monitoring, security, and cost-effectiveness.
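
To make the spine-leaf point above more concrete, here is a minimal Python sketch of the general idea: every leaf switch links to every spine switch, so any two leaves are exactly two hops apart, with one equal-cost path per spine. The switch counts and the names `build_spine_leaf` and `leaf_to_leaf_paths` are hypothetical and chosen for illustration; they are not taken from DreamHost's presentation and do not describe their actual fabric.

```python
from itertools import product


def build_spine_leaf(num_spines, num_leaves):
    """Build a generic spine-leaf fabric (illustrative sizes, not DreamHost's)."""
    spines = [f"spine{i}" for i in range(num_spines)]
    leaves = [f"leaf{i}" for i in range(num_leaves)]
    # Every leaf has one uplink to every spine; leaves never link to each other.
    links = {(leaf, spine) for leaf, spine in product(leaves, spines)}
    return spines, leaves, links


def leaf_to_leaf_paths(src, dst, spines, links):
    """Return all two-hop paths between two leaves, one per shared spine."""
    return [
        (src, spine, dst)
        for spine in spines
        if (src, spine) in links and (dst, spine) in links
    ]


# Hypothetical fabric: 2 spines, 4 leaves.
spines, leaves, links = build_spine_leaf(num_spines=2, num_leaves=4)
paths = leaf_to_leaf_paths("leaf0", "leaf3", spines, links)

print(len(links))  # number of leaf-to-spine uplinks
for path in paths:
    print(" -> ".join(path))
```

In this model, adding a spine adds another equal-cost path between every pair of leaves, and adding a leaf adds rack capacity without changing the hop count, which is one reason the topology is commonly associated with scalability.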