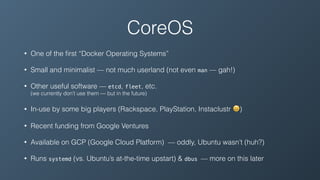

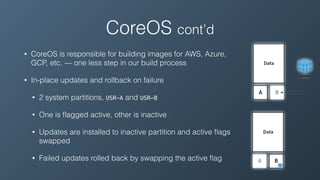

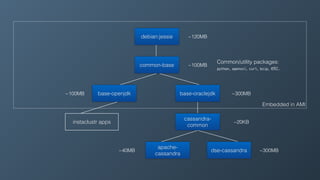

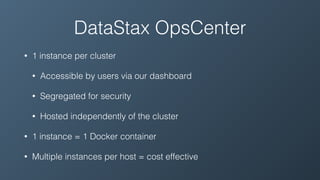

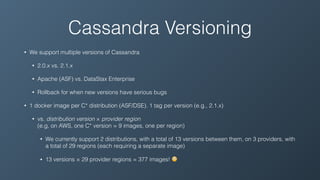

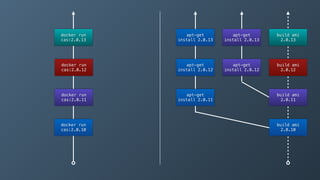

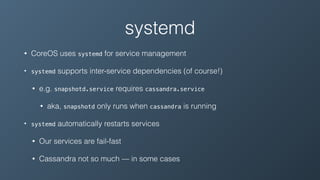

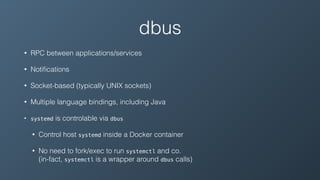

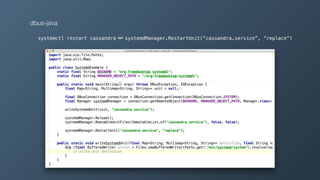

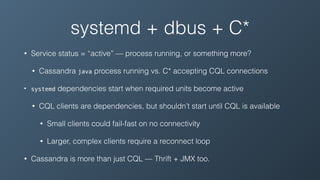

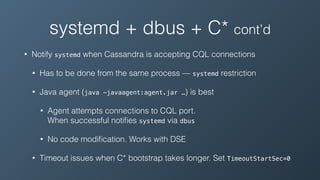

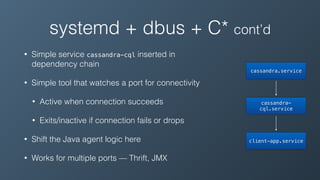

Instaclustr provides managed Apache Cassandra and DataStax Enterprise clusters in the cloud. The company initially ran Cassandra on custom Ubuntu images but moved to CoreOS for its immutable, self-updating design. Running Docker on CoreOS lets Cassandra live in immutable containers while CoreOS handles OS-level updates. Integrating the Cassandra containers with systemd, the CoreOS init system, provides reliable automatic restarts and a way to signal when Cassandra is ready, using D-Bus inter-process communication. Together these give a robust way to run and update Cassandra in production clusters.
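
The readiness signal described above can be illustrated with a minimal Python sketch. It is not Instaclustr's implementation: the summary mentions D-Bus, whereas this sketch swaps in systemd's simpler notification-socket protocol (a `READY=1` datagram sent to the socket named by `NOTIFY_SOCKET`, as used by `Type=notify` units). The helper names `wait_for_port`, `sd_notify`, and `notify_when_ready` are hypothetical, and treating an open CQL port (9042, Cassandra's default native transport port) as "ready" is an assumption.

```python
import os
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 60.0,
                  interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Not accepting connections yet; back off briefly and retry.
            time.sleep(interval)
    return False


def sd_notify(state: str) -> bool:
    """Send a state string such as "READY=1" to systemd's notification socket.

    Returns False when NOTIFY_SOCKET is unset, which is the case when the
    process is not supervised by a Type=notify unit.
    """
    path = os.environ.get("NOTIFY_SOCKET")
    if not path:
        return False
    # A leading "@" denotes a Linux abstract-namespace socket.
    addr = b"\0" + path[1:].encode() if path.startswith("@") else path
    with socket.socket(socket.AF_UNIX, socket.SOCK_DGRAM) as sock:
        sock.sendto(state.encode("utf-8"), addr)
    return True


def notify_when_ready(host: str = "127.0.0.1", port: int = 9042,
                      timeout: float = 300.0) -> bool:
    """Assumed readiness check: report READY once the CQL port accepts connections."""
    if wait_for_port(host, port, timeout=timeout):
        return sd_notify("READY=1")
    return False
```

A wrapper started alongside the Cassandra container could call `notify_when_ready()` so that systemd marks the unit as started only after the database accepts client connections, which lets dependent units wait on it.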