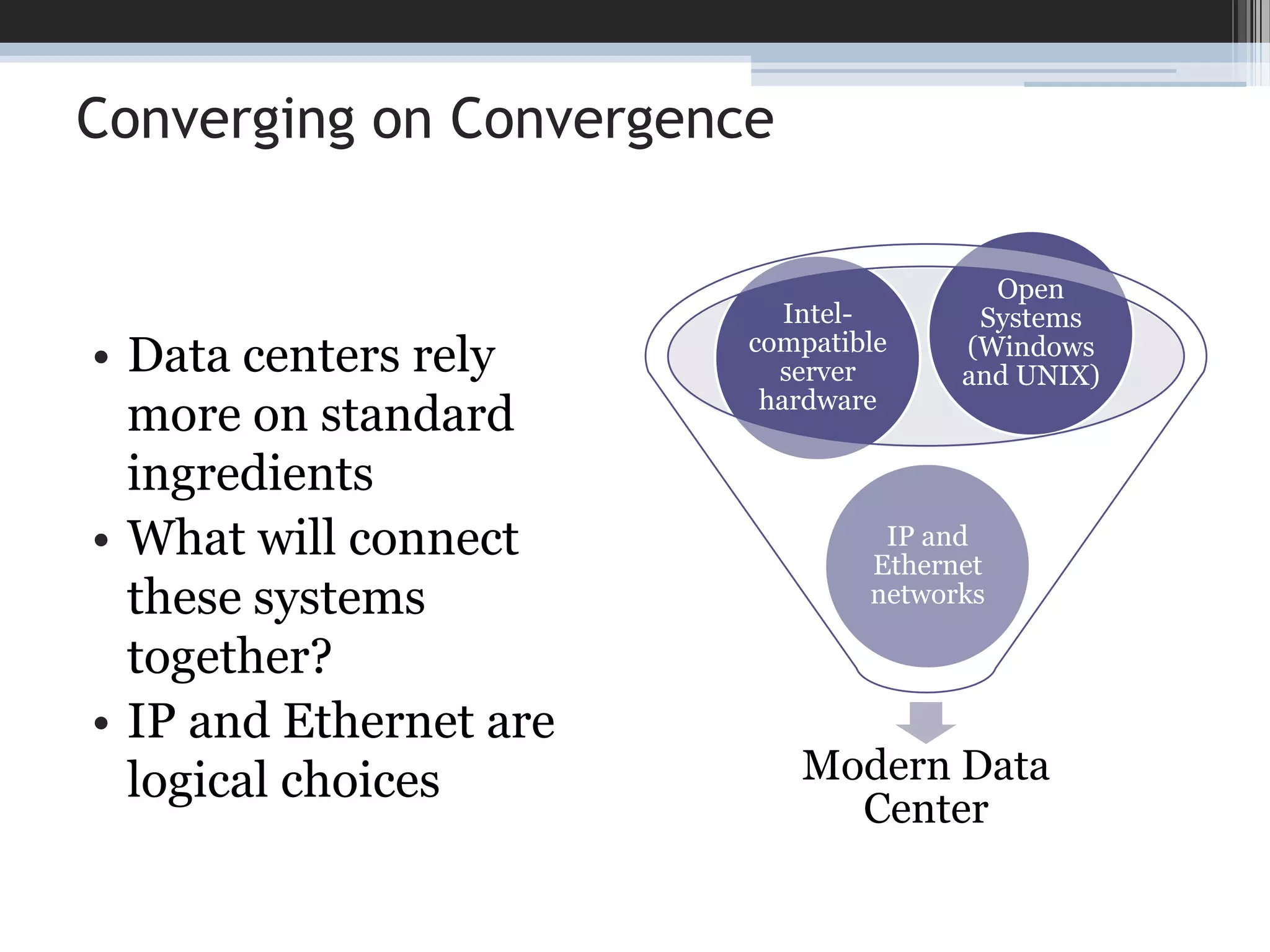

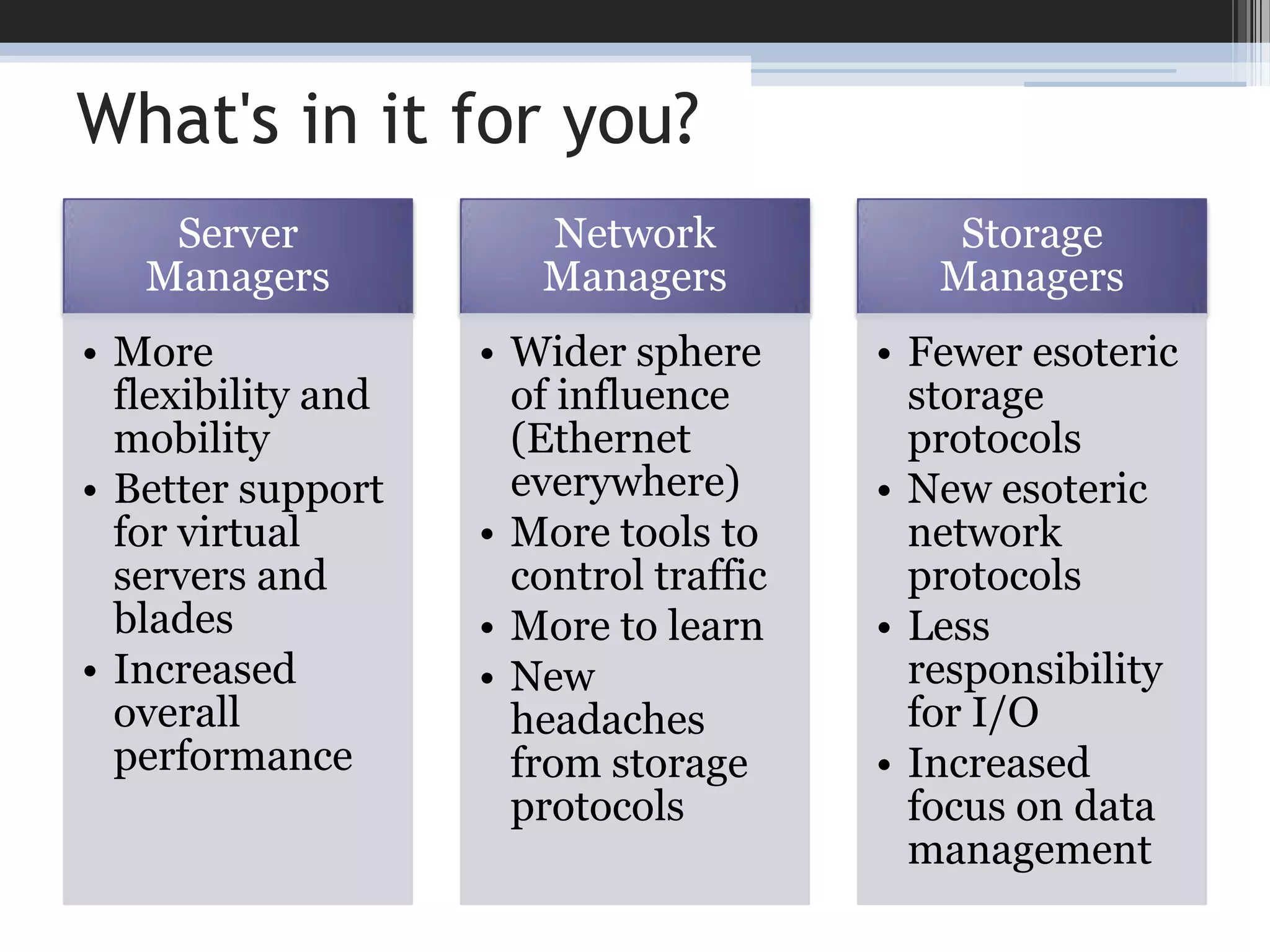

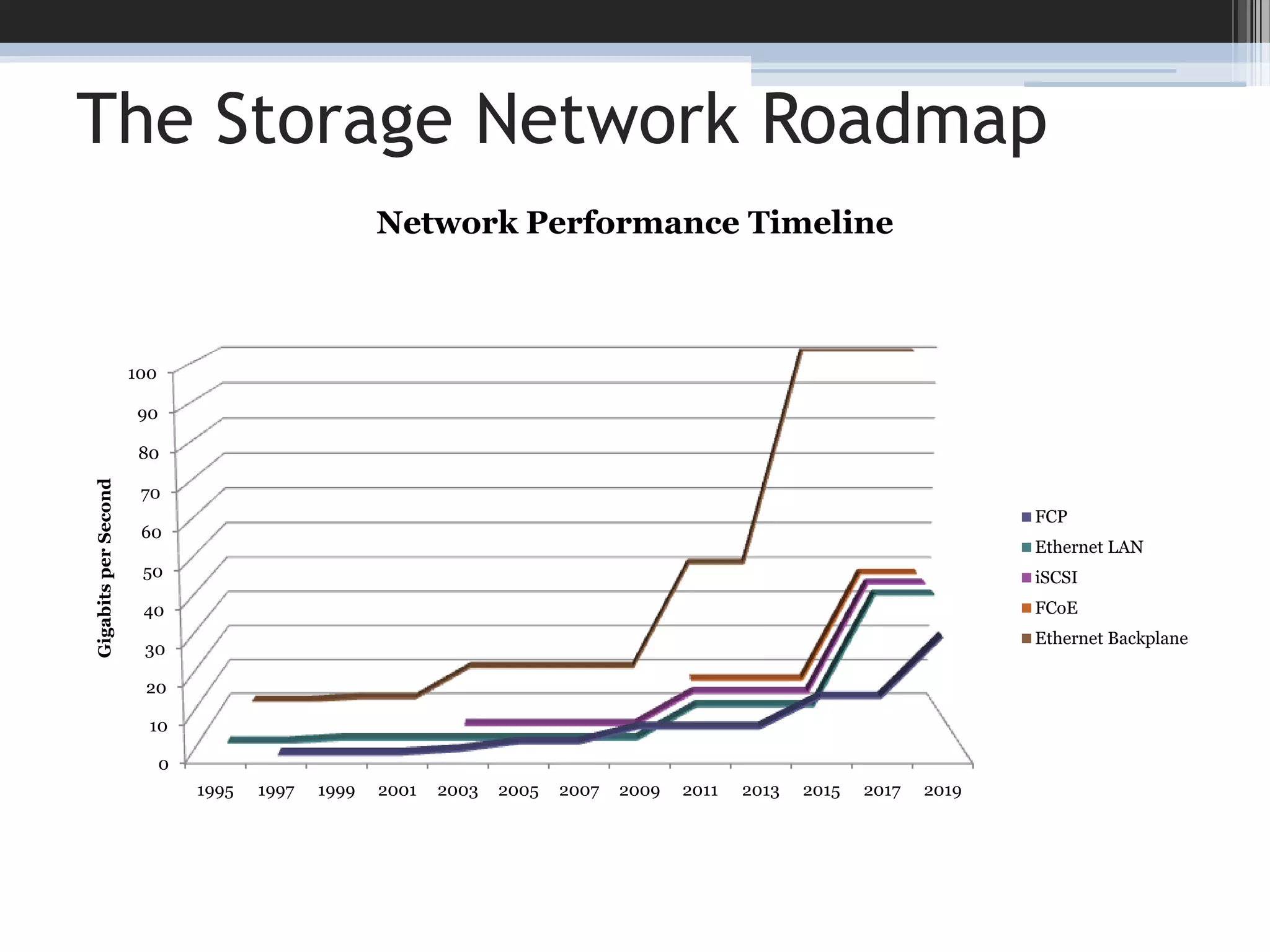

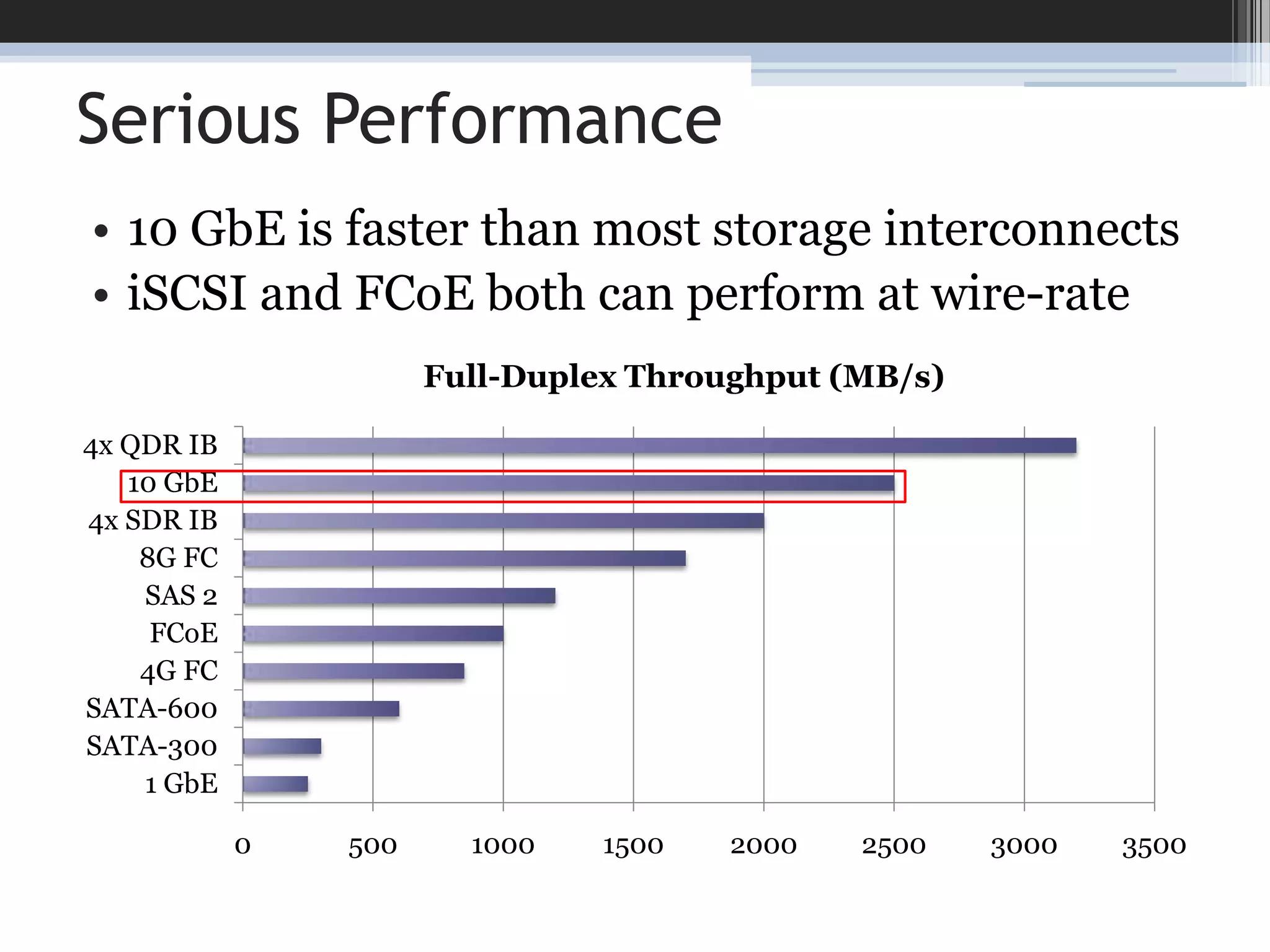

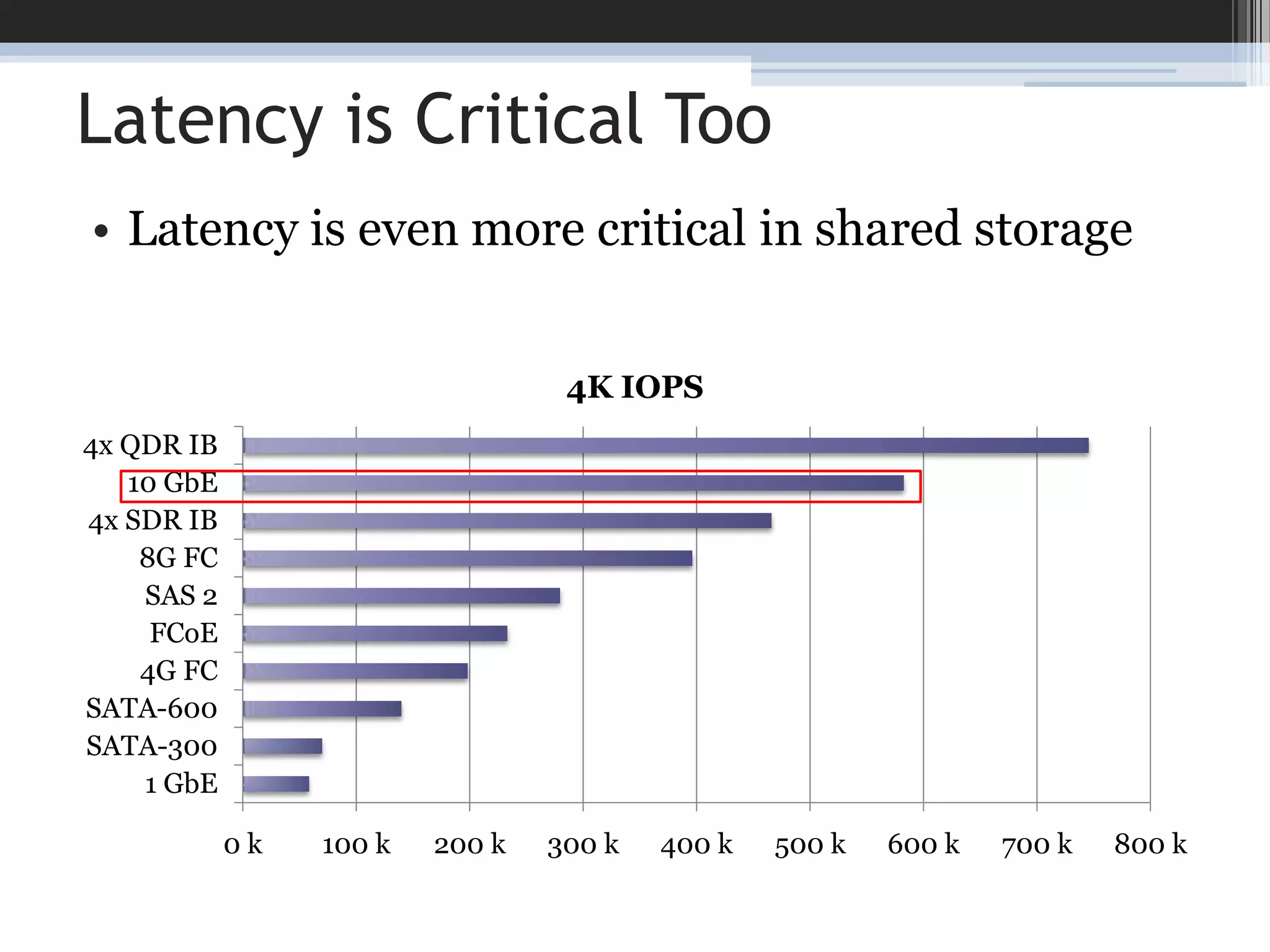

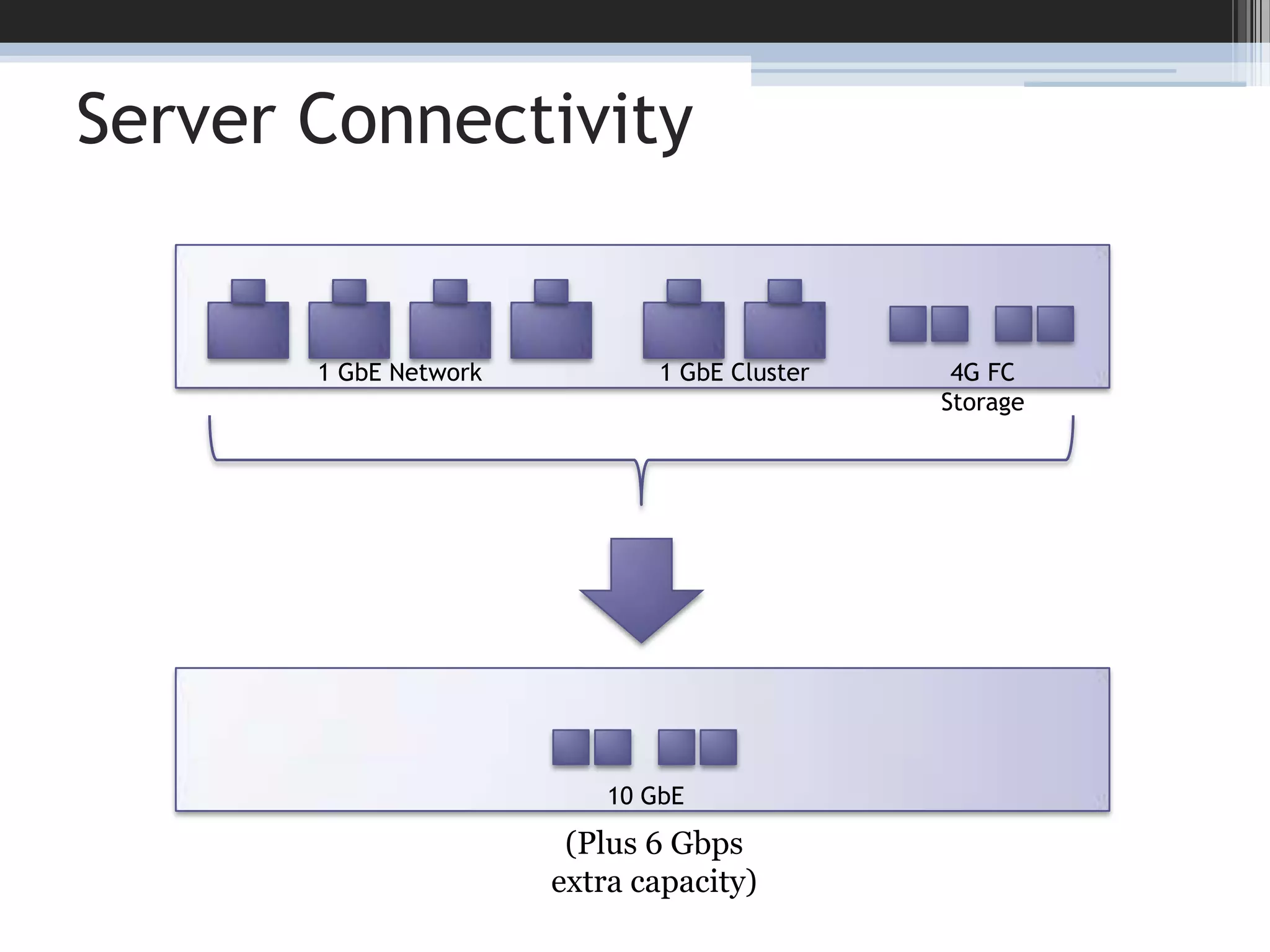

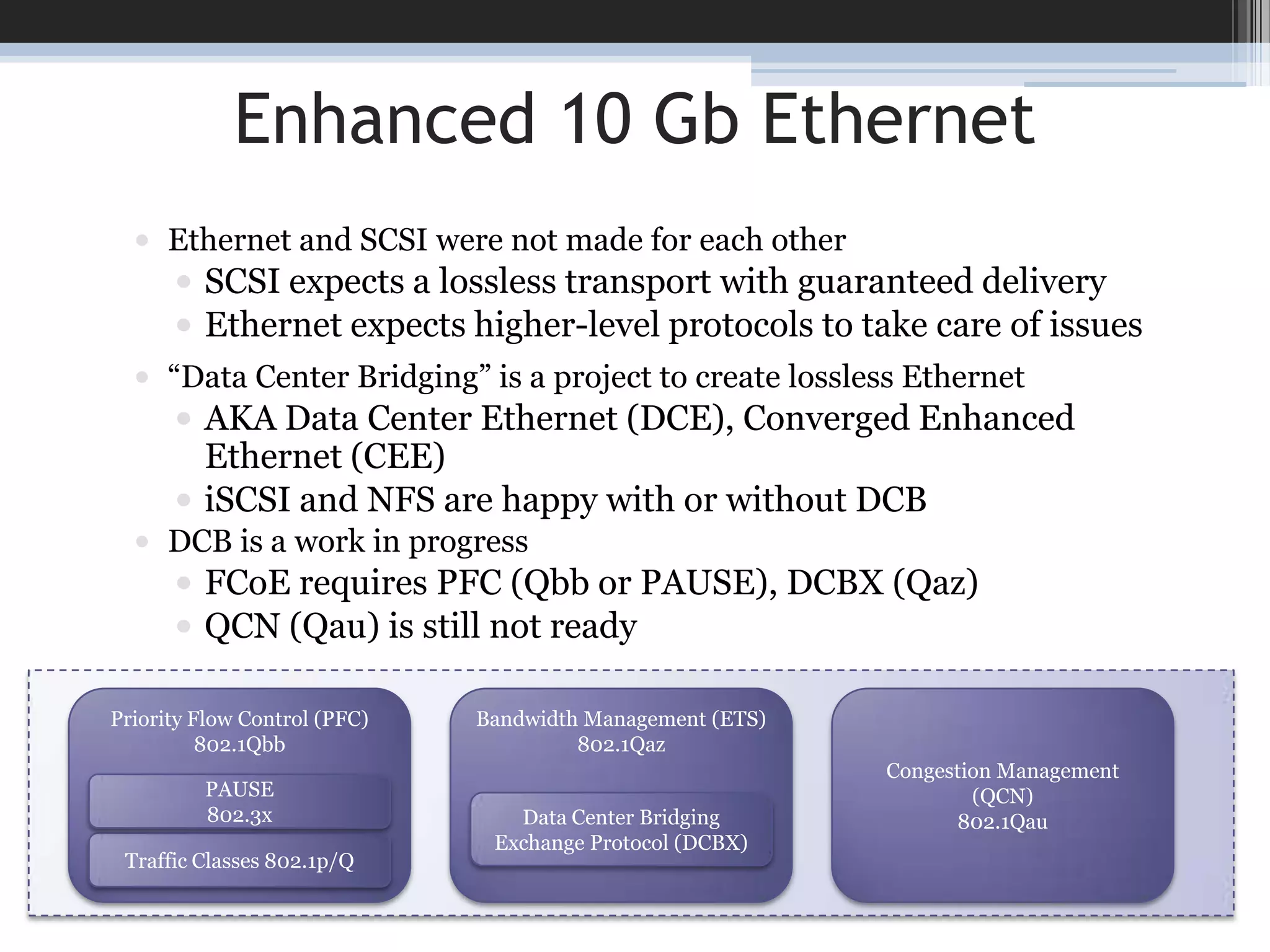

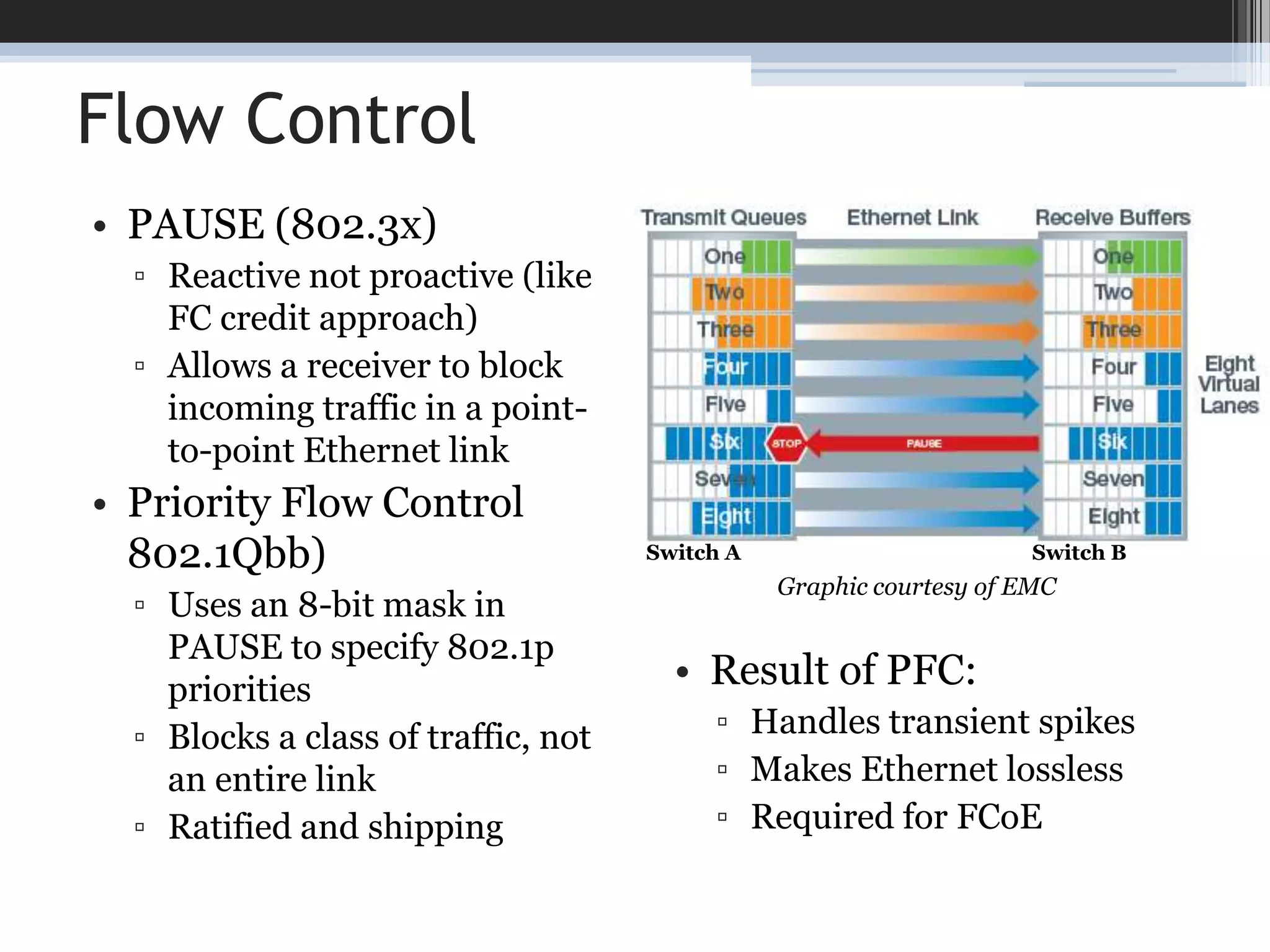

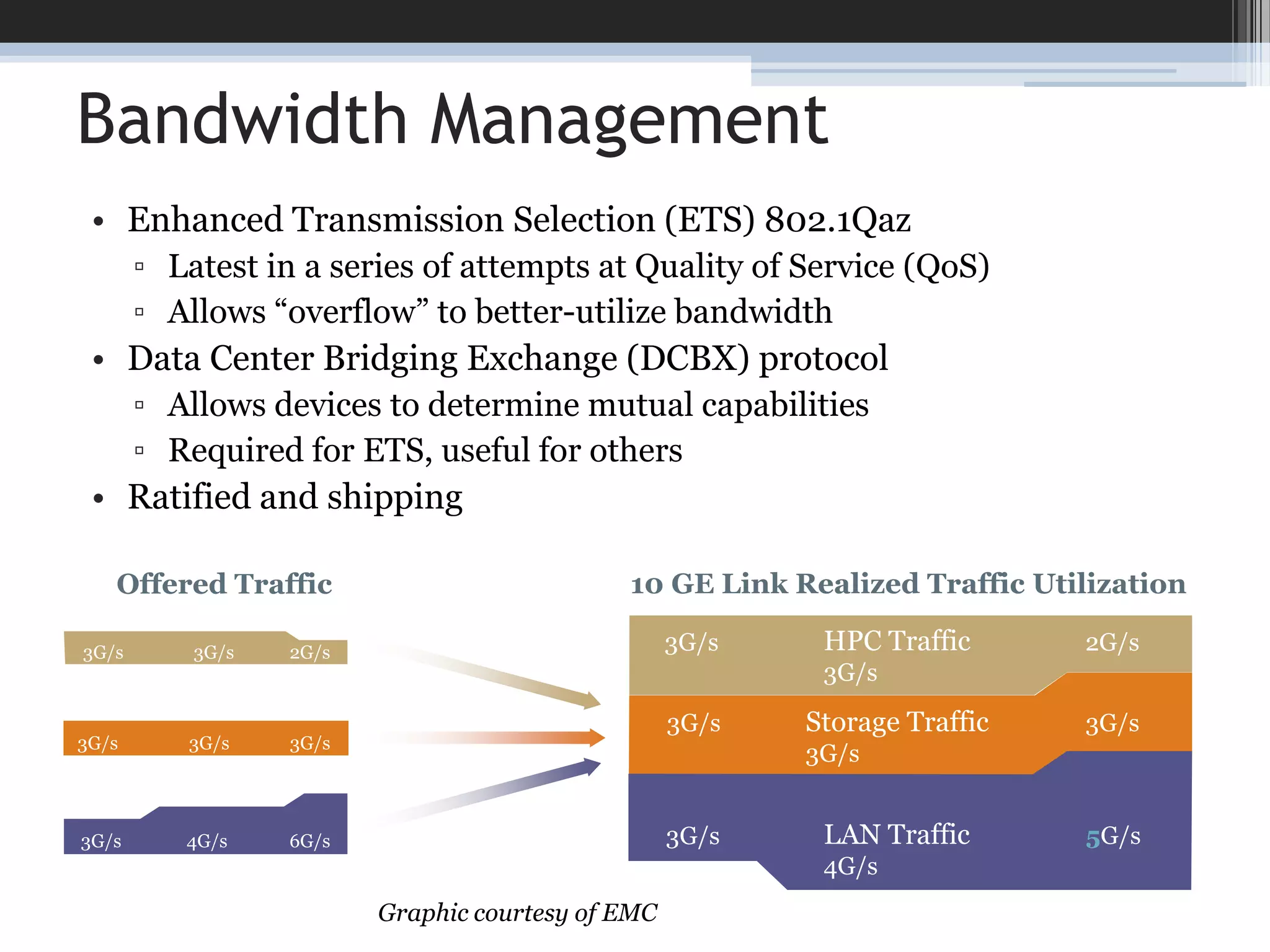

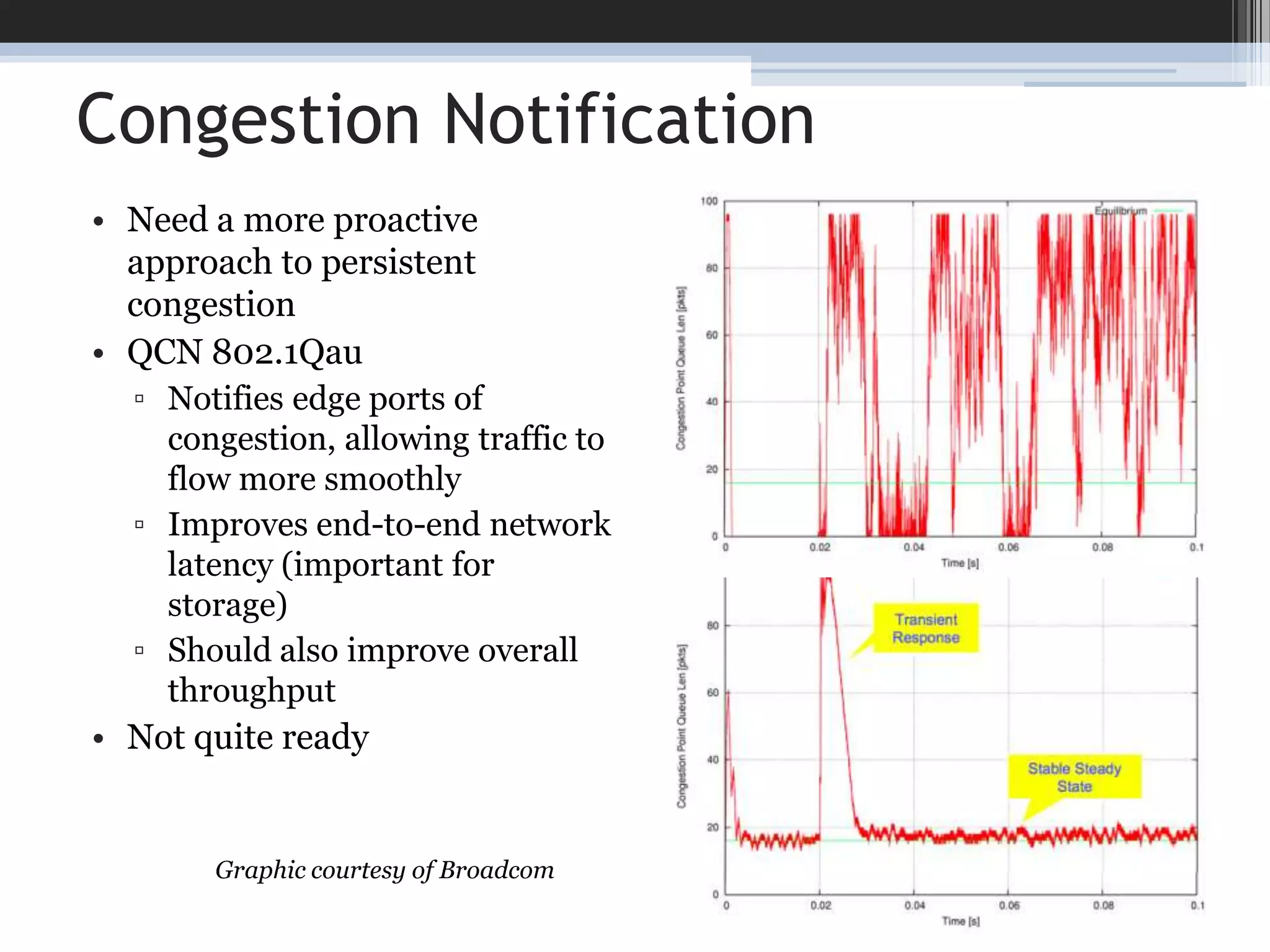

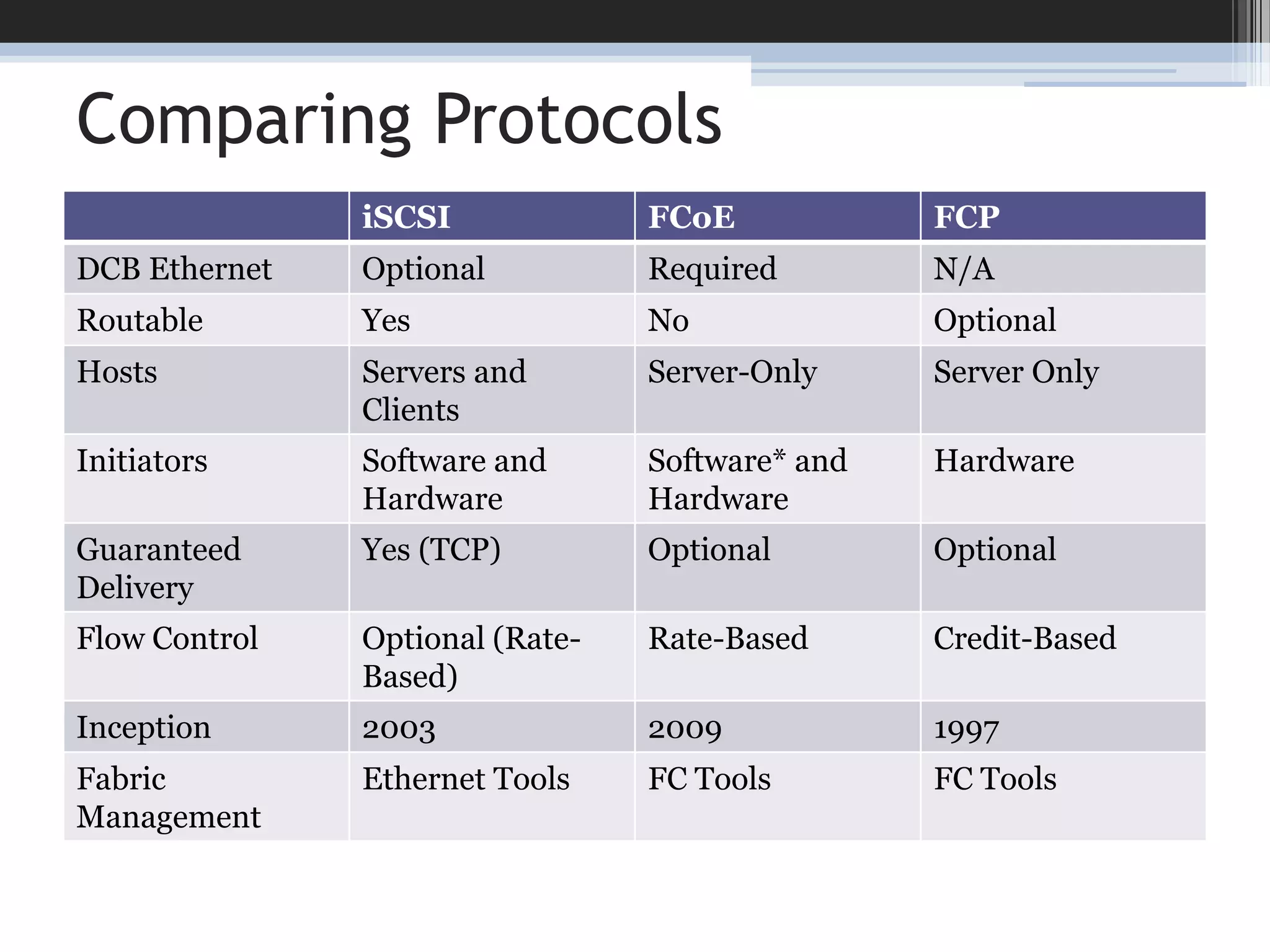

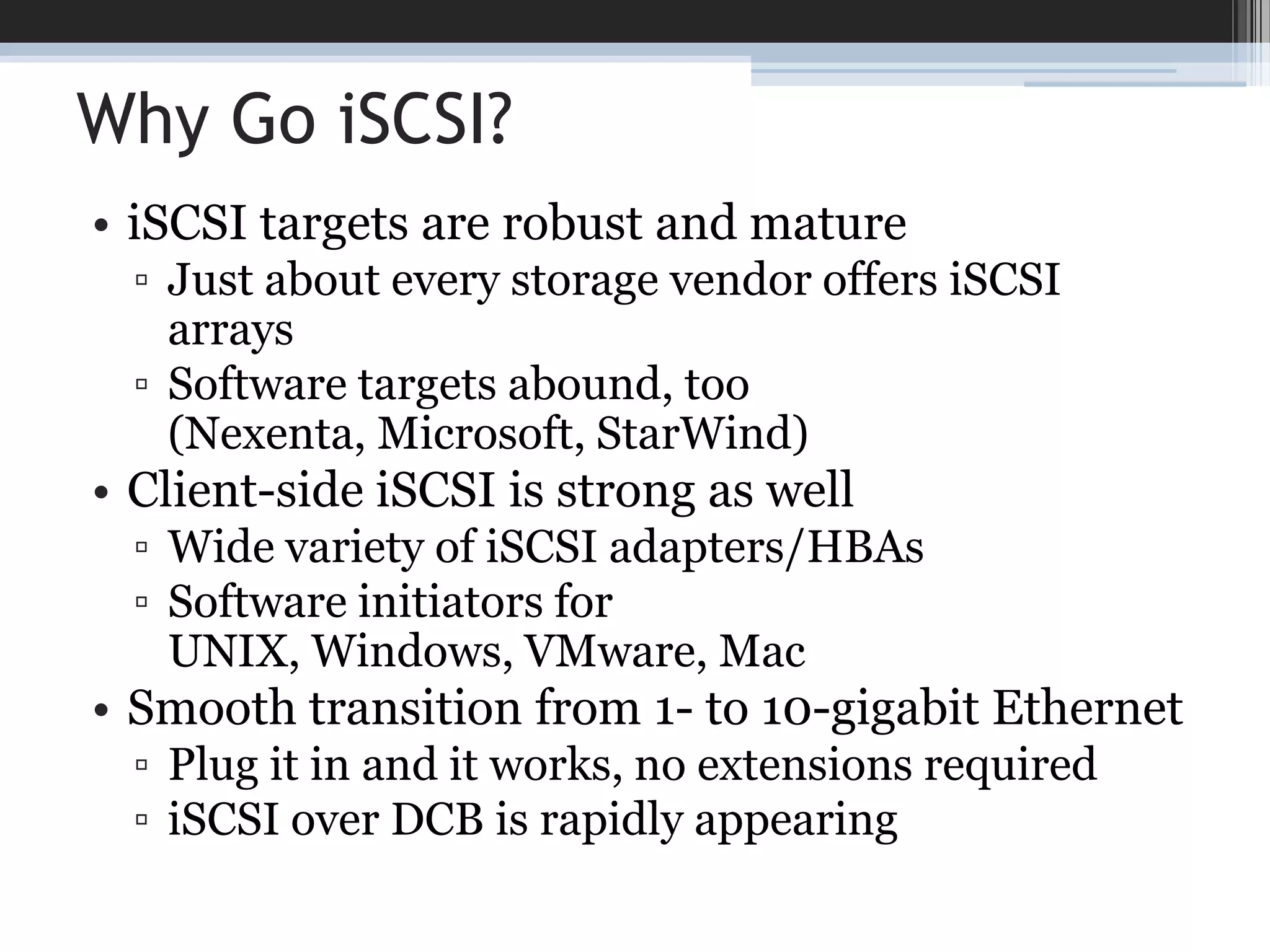

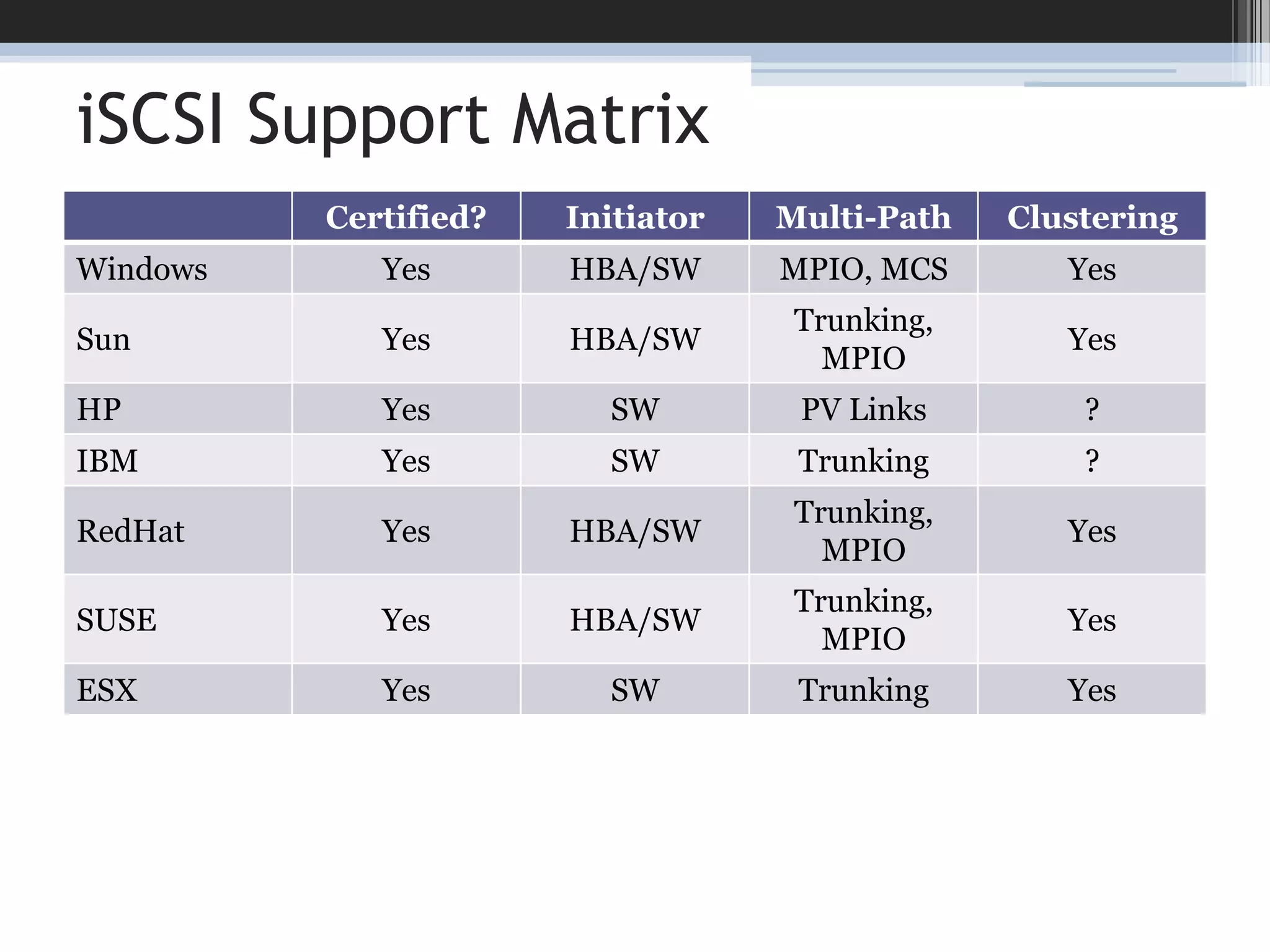

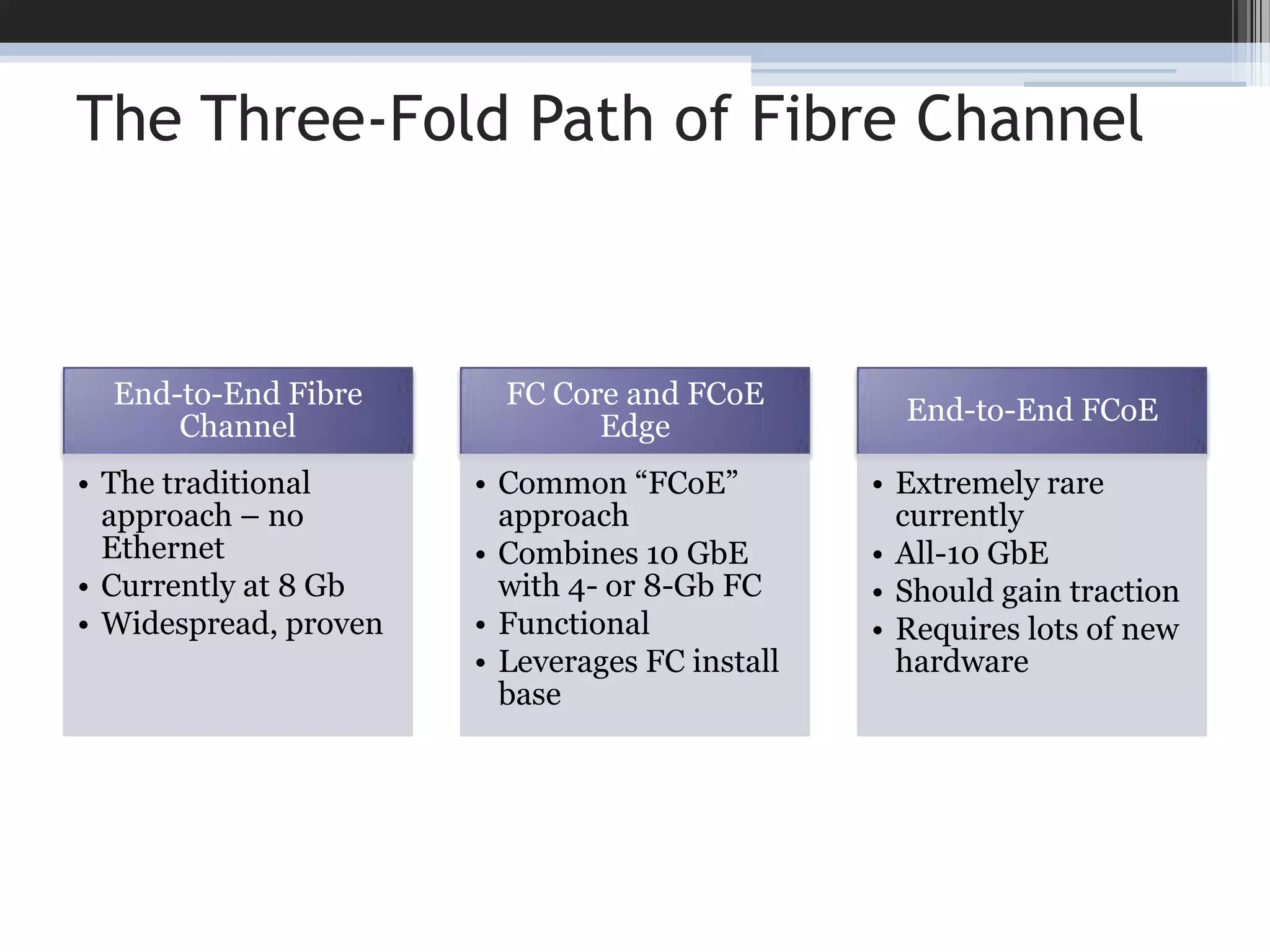

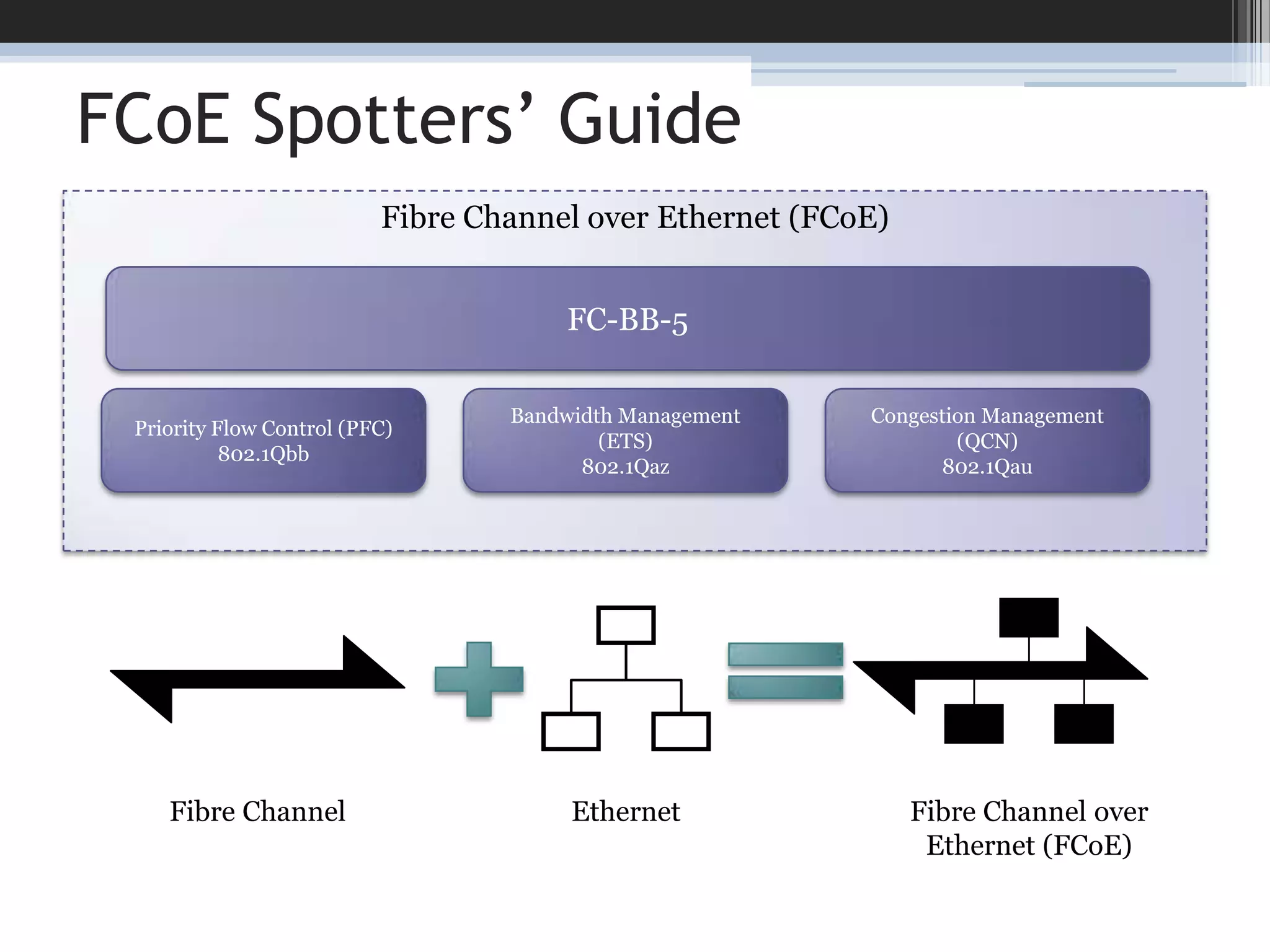

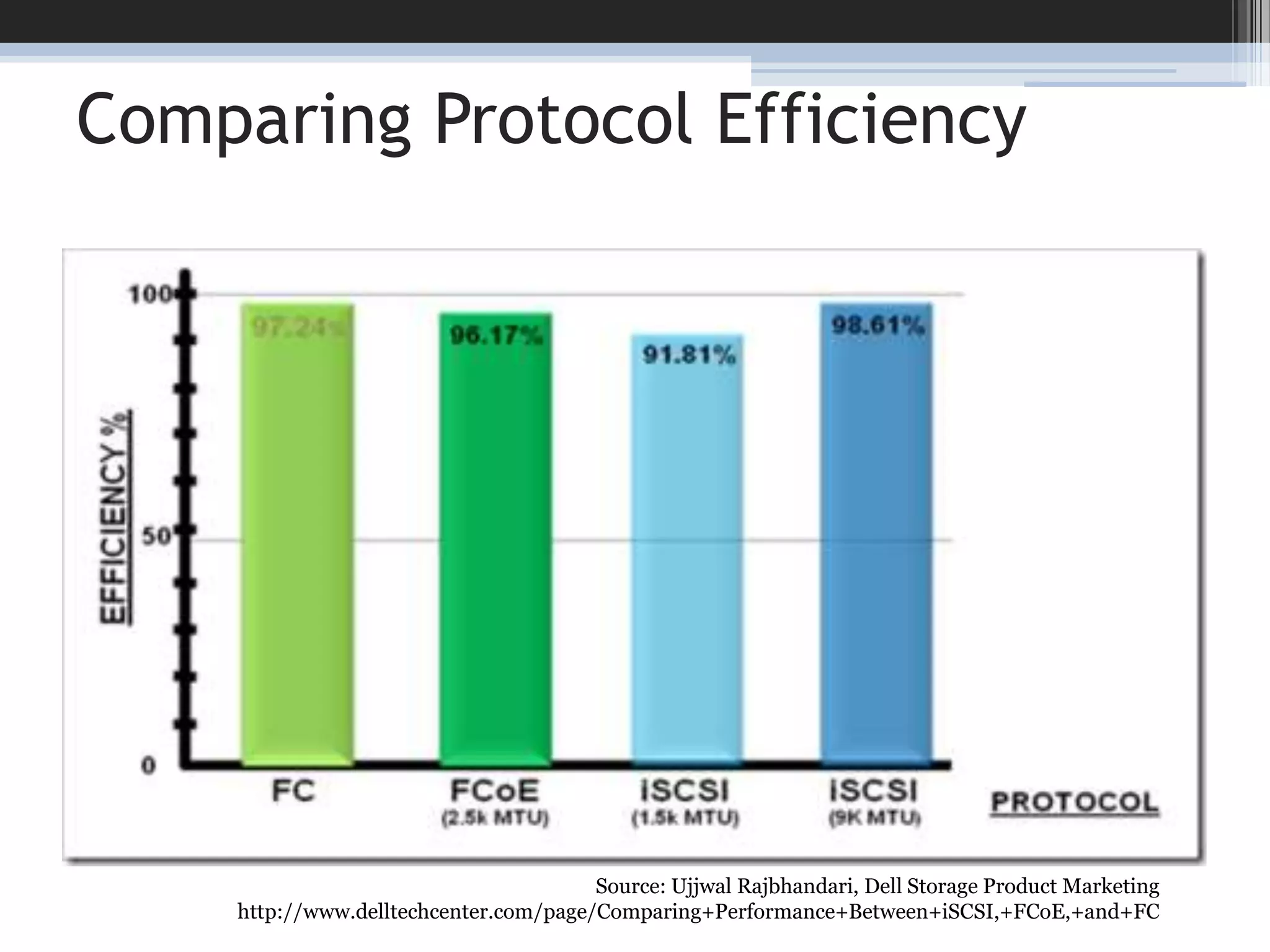

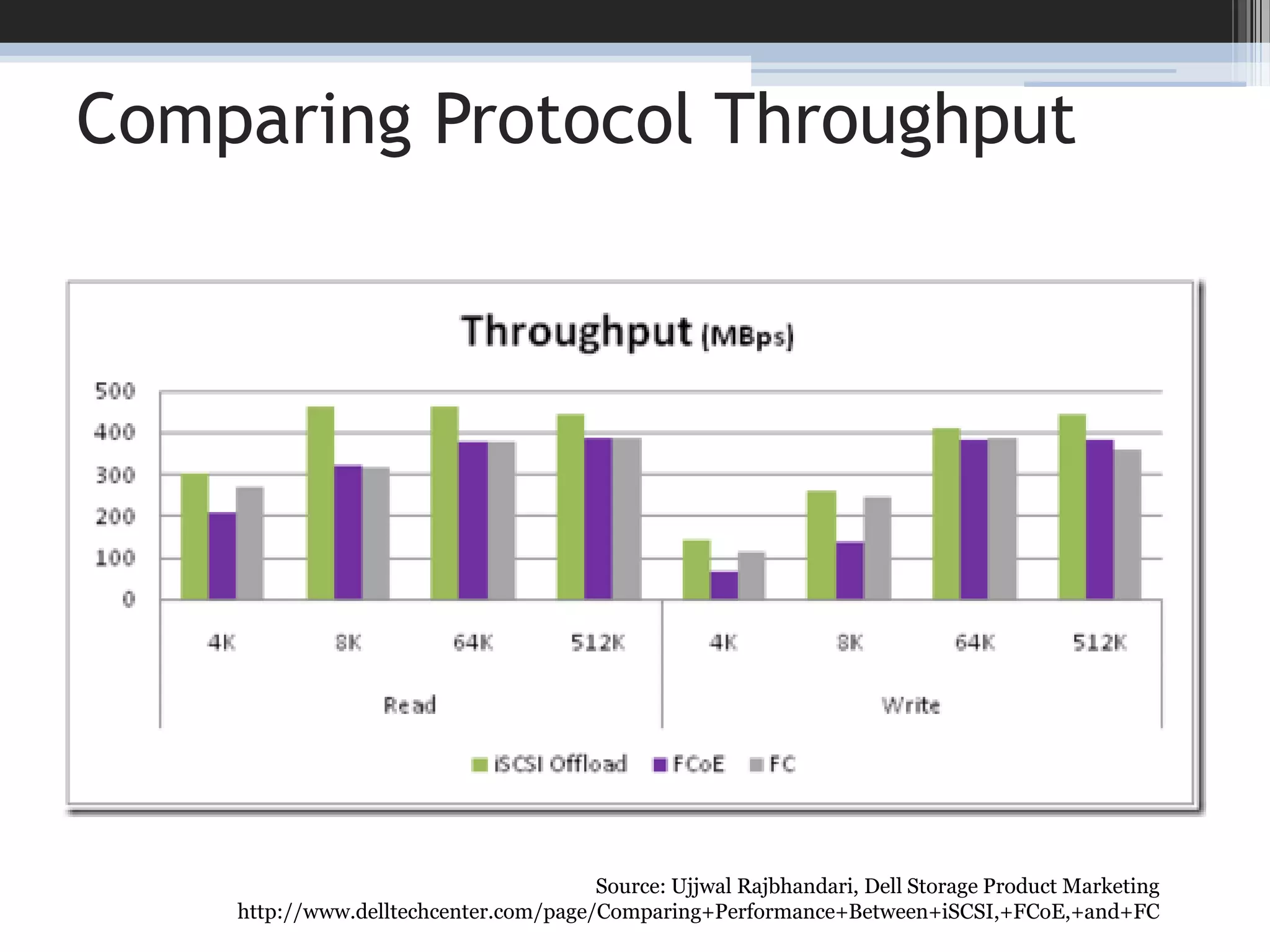

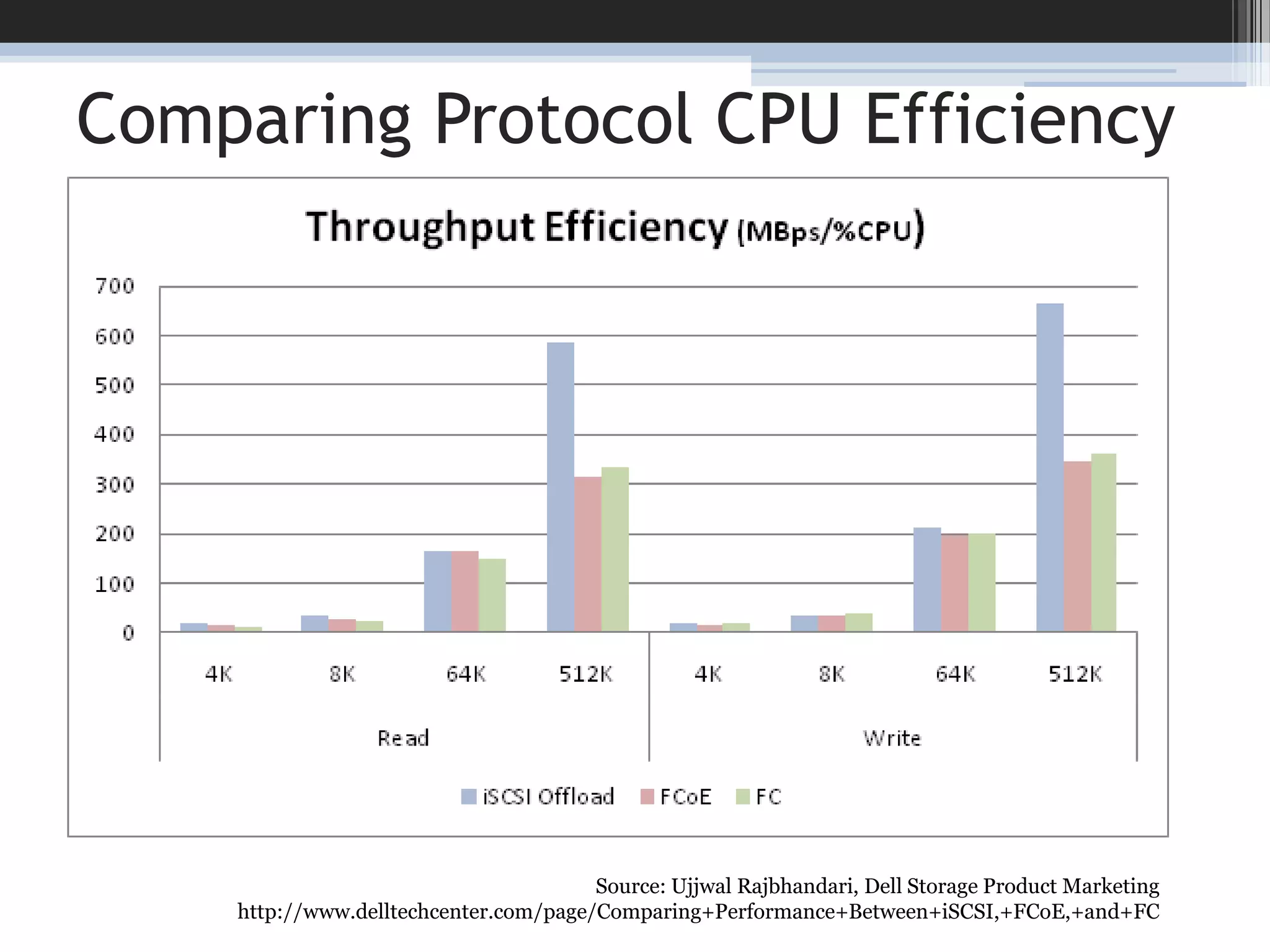

The document compares FCoE (Fibre Channel over Ethernet) and iSCSI (Internet Small Computer Systems Interface) in the context of data center convergence and performance. It discusses what each protocol offers in throughput, latency, simplified connectivity, and virtualization support, and what each implies for network architecture. The author emphasizes the growing importance of Ethernet infrastructure and notes that either protocol can be advantageous, depending on existing system investments and operational needs.