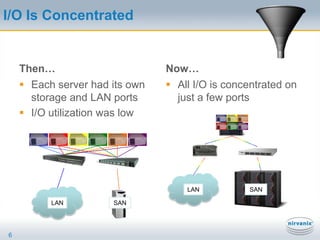

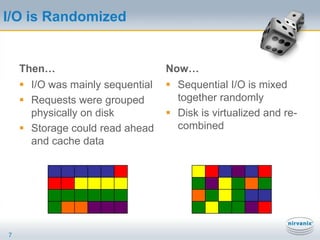

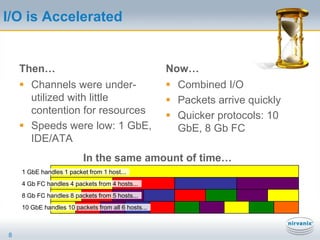

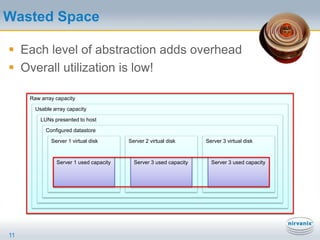

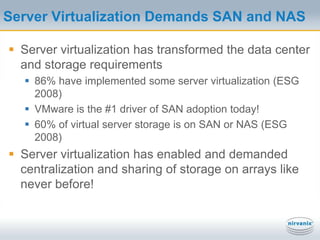

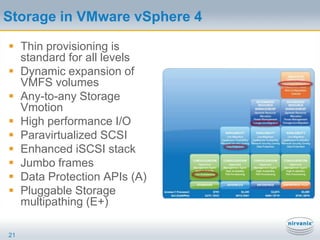

Virtualization changes storage in three key ways:

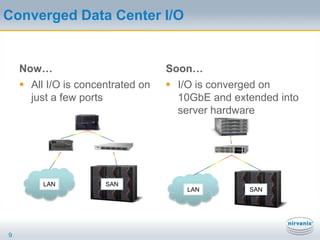

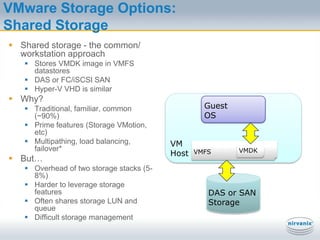

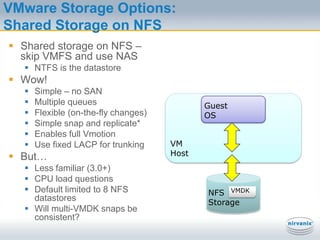

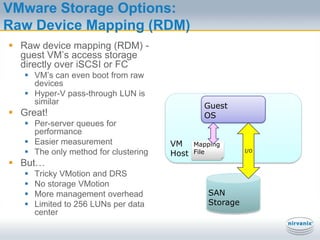

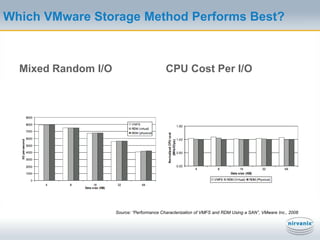

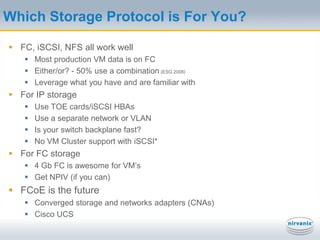

1) I/O is concentrated on fewer host ports, and the combined traffic from many virtual machines reaches the physical disks as a largely random access pattern.
2) Storage utilization tends to be low because of the overhead added by the abstraction layers.
3) The storage options are shared storage on a SAN or NAS, raw device mapping, and NFS, each with its own pros and cons.

Effective virtualized storage requires balancing these changes.
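The first point can be illustrated with a toy model. The sketch below is not taken from the slides. It assumes eight virtual machines that each read their own disk region strictly sequentially, and a shared host port that interleaves their requests round-robin. The function names, the region layout, and the round-robin policy are all hypothetical simplifications; real hypervisor schedulers and array caches behave differently.

```python
def sequential_fraction(requests):
    """Fraction of requests whose block address directly follows the previous one."""
    if len(requests) < 2:
        return 1.0
    hits = sum(1 for prev, cur in zip(requests, requests[1:]) if cur == prev + 1)
    return hits / (len(requests) - 1)


def vm_stream(vm_index, blocks_per_vm, region_size):
    """One VM reading its own disk region sequentially (hypothetical layout)."""
    start = vm_index * region_size
    return [start + i for i in range(blocks_per_vm)]


def blend(streams):
    """Interleave per-VM streams round-robin (assumed scheduling policy)."""
    blended = []
    for batch in zip(*streams):
        blended.extend(batch)
    return blended


# Eight VMs, each issuing 100 sequential block reads in a separate region.
streams = [vm_stream(v, blocks_per_vm=100, region_size=10_000) for v in range(8)]

single = sequential_fraction(streams[0])      # one VM viewed in isolation
shared = sequential_fraction(blend(streams))  # the same traffic behind one port

print(f"sequential fraction, single VM: {single:.2f}")
print(f"sequential fraction, blended:   {shared:.2f}")
```

In this model every individual stream is fully sequential, yet no two consecutive requests in the blended stream are adjacent, which is why consolidated workloads tend to look random to the disks even when each guest behaves well.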