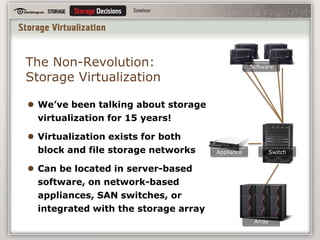

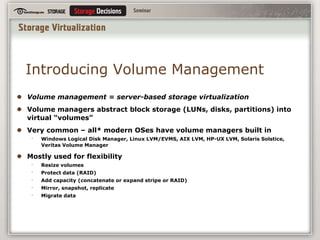

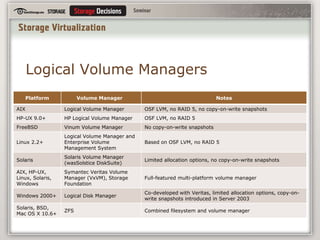

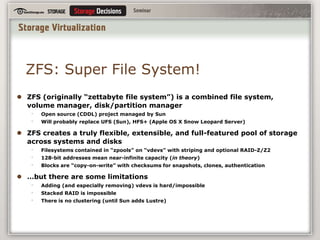

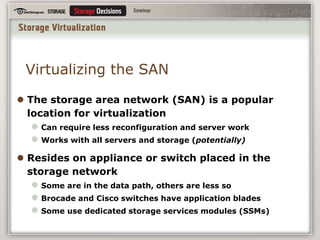

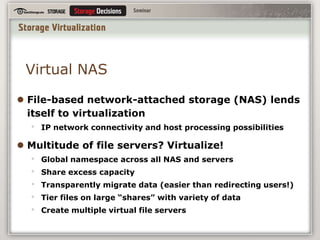

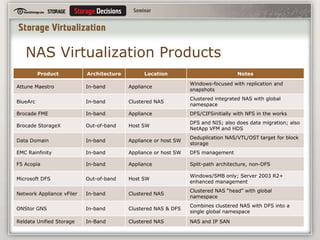

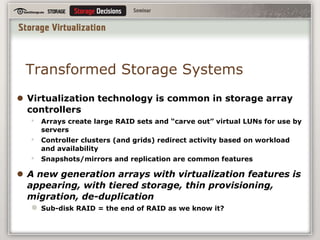

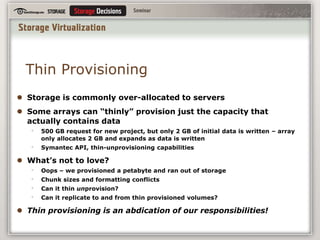

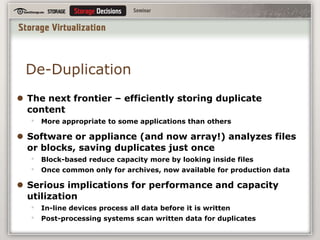

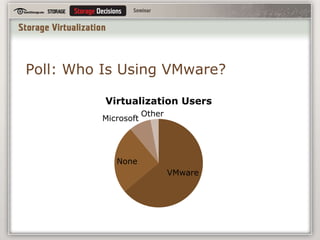

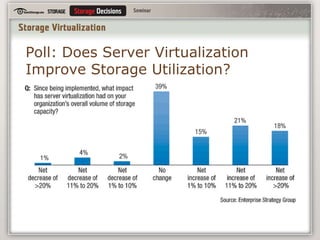

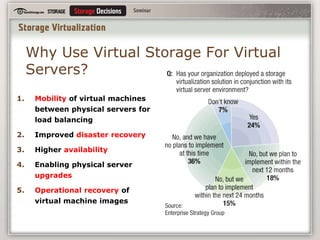

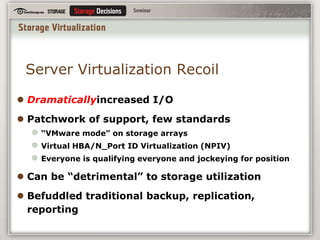

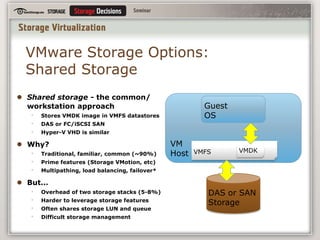

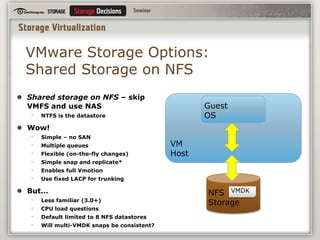

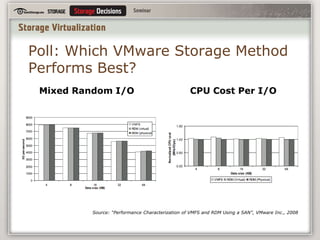

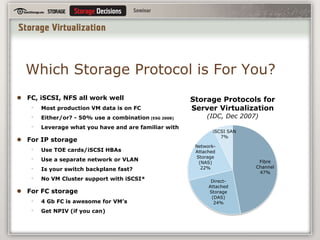

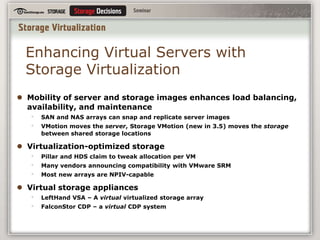

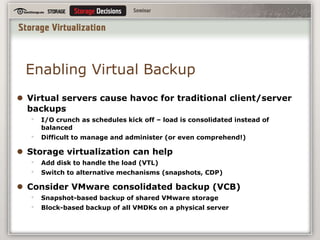

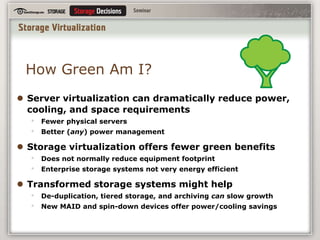

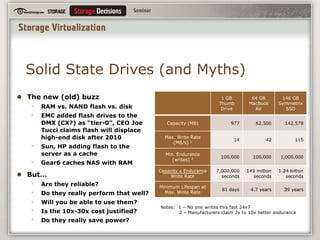

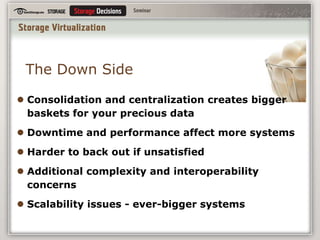

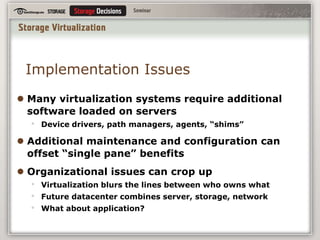

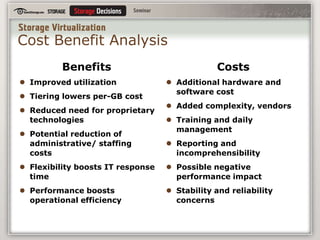

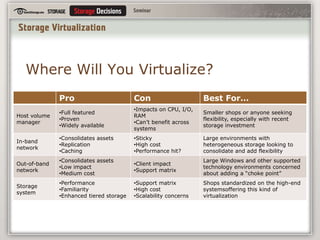

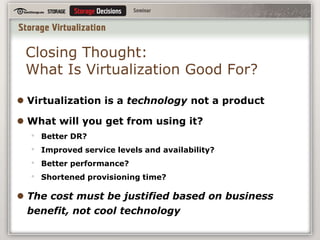

This storage virtualization seminar discusses how virtualization breaks the connection between physical and logical storage. It covers volume management, file systems, and virtualization of the SAN and NAS, and it polls attendees on their own use of virtualization. The benefits discussed include pooling for efficiency and scalability, improved performance and availability through technologies such as snapshots and replication, and disaster recovery of virtual machine images. The downsides and costs of virtualization are also considered.
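The core idea behind volume management and pooling is that a logical volume is only a table of extents, and each extent can live on any physical disk in the pool. The toy sketch below illustrates that idea under our own assumptions. The class names, the 4-block extent size, and the round-robin allocation policy are hypothetical and do not come from the slides or from any real volume manager such as LVM.

```python
from dataclasses import dataclass, field

# Hypothetical extent size in blocks, kept tiny for illustration.
EXTENT_SIZE = 4


@dataclass
class PhysicalDisk:
    name: str
    extents: int                     # total extents on this disk
    free: list = field(init=False)   # indices of unallocated extents

    def __post_init__(self):
        self.free = list(range(self.extents))


class StoragePool:
    """Aggregates several physical disks into one pool of free extents."""

    def __init__(self, disks):
        self.disks = {d.name: d for d in disks}

    def free_extents(self):
        return sum(len(d.free) for d in self.disks.values())

    def allocate(self, count):
        """Return `count` (disk, physical extent) pairs, spread round-robin."""
        if count > self.free_extents():
            raise ValueError("pool exhausted")
        mapping = []
        while len(mapping) < count:
            for d in self.disks.values():
                if d.free and len(mapping) < count:
                    mapping.append((d.name, d.free.pop(0)))
        return mapping


class LogicalVolume:
    """A logical volume is just a table of extents drawn from the pool."""

    def __init__(self, pool, size_extents):
        self.map = pool.allocate(size_extents)

    def translate(self, lba):
        """Map a logical block address to (disk name, physical block)."""
        extent, offset = divmod(lba, EXTENT_SIZE)
        if extent >= len(self.map):
            raise IndexError("LBA beyond end of volume")
        disk, pext = self.map[extent]
        return disk, pext * EXTENT_SIZE + offset


pool = StoragePool([PhysicalDisk("disk0", 3), PhysicalDisk("disk1", 2)])
vol = LogicalVolume(pool, 4)
print(vol.translate(5))     # ('disk1', 1)
print(pool.free_extents())  # 1
```

Because the host only ever sees logical block addresses, extents could in principle be moved between disks by updating the table, without the host noticing. That indirection is what makes pooling, and features built on it such as snapshots, possible.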