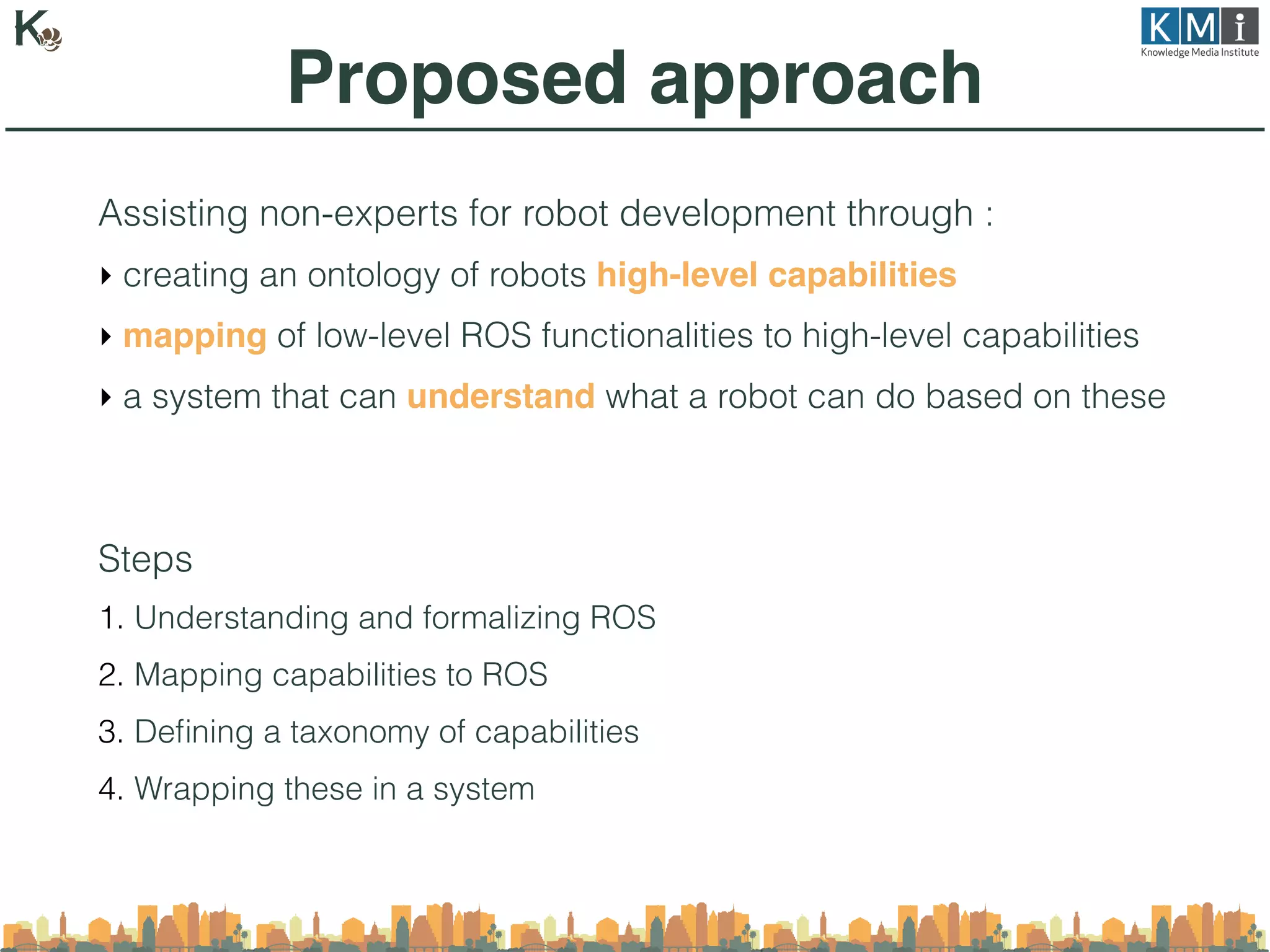

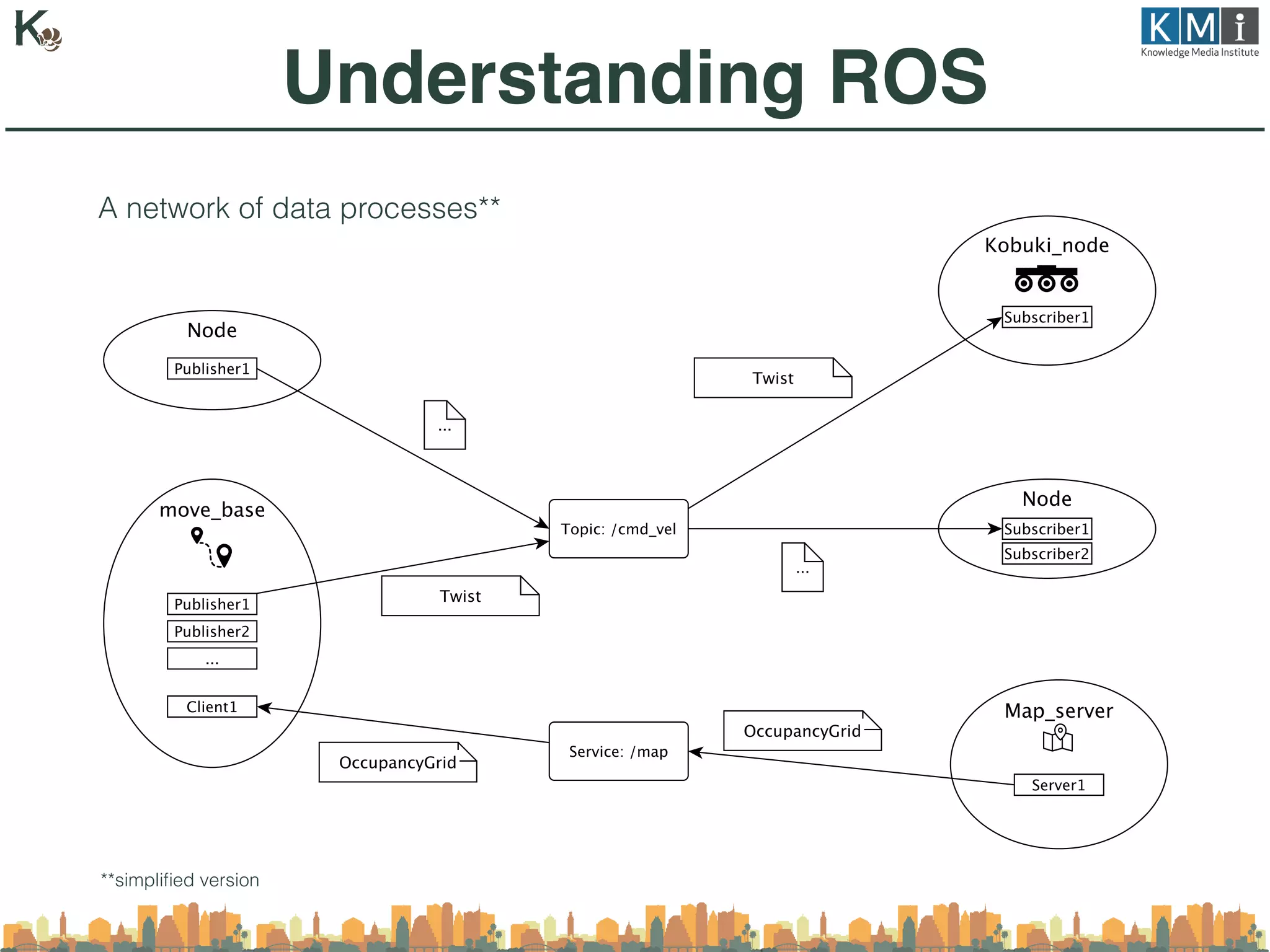

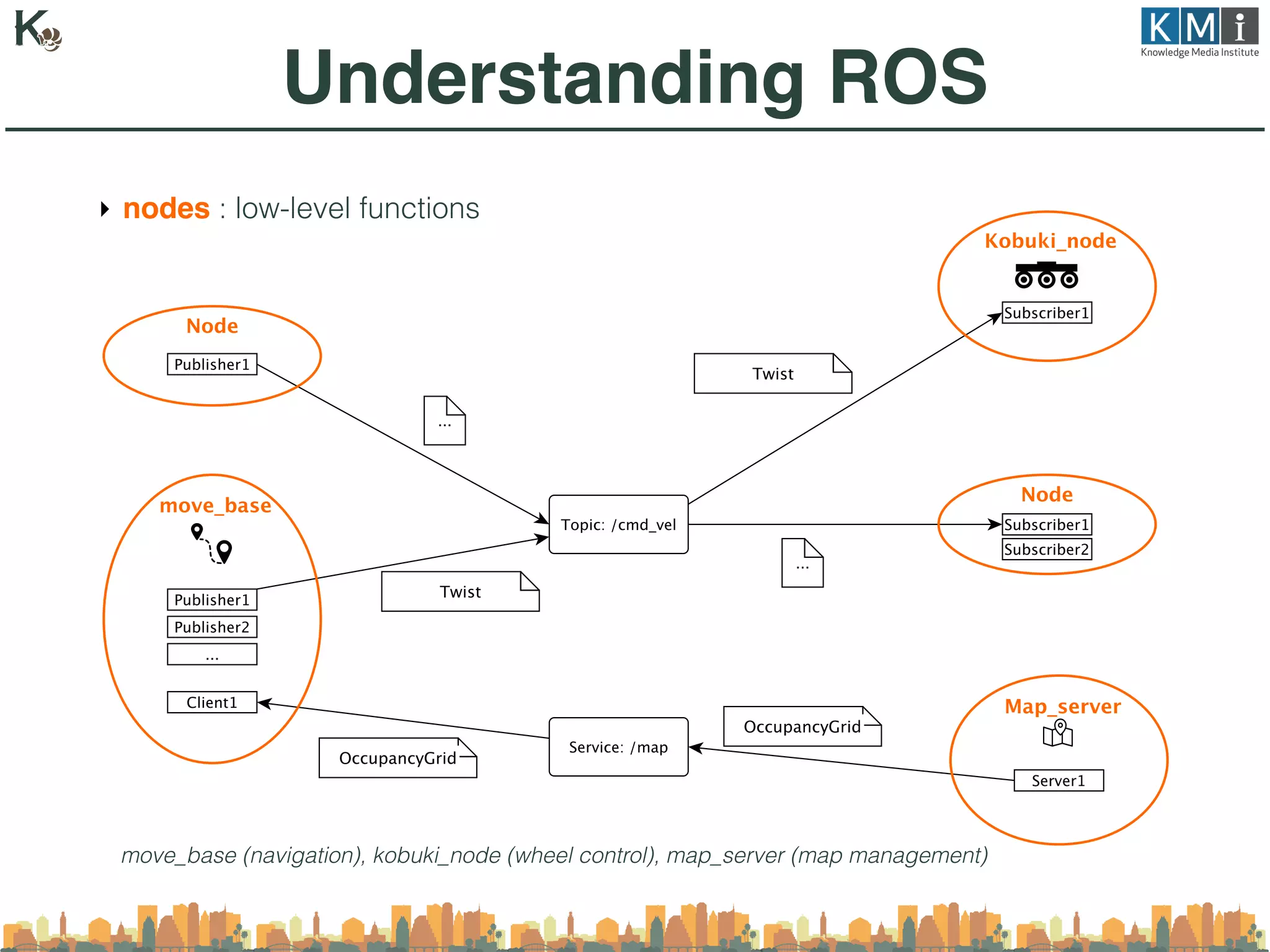

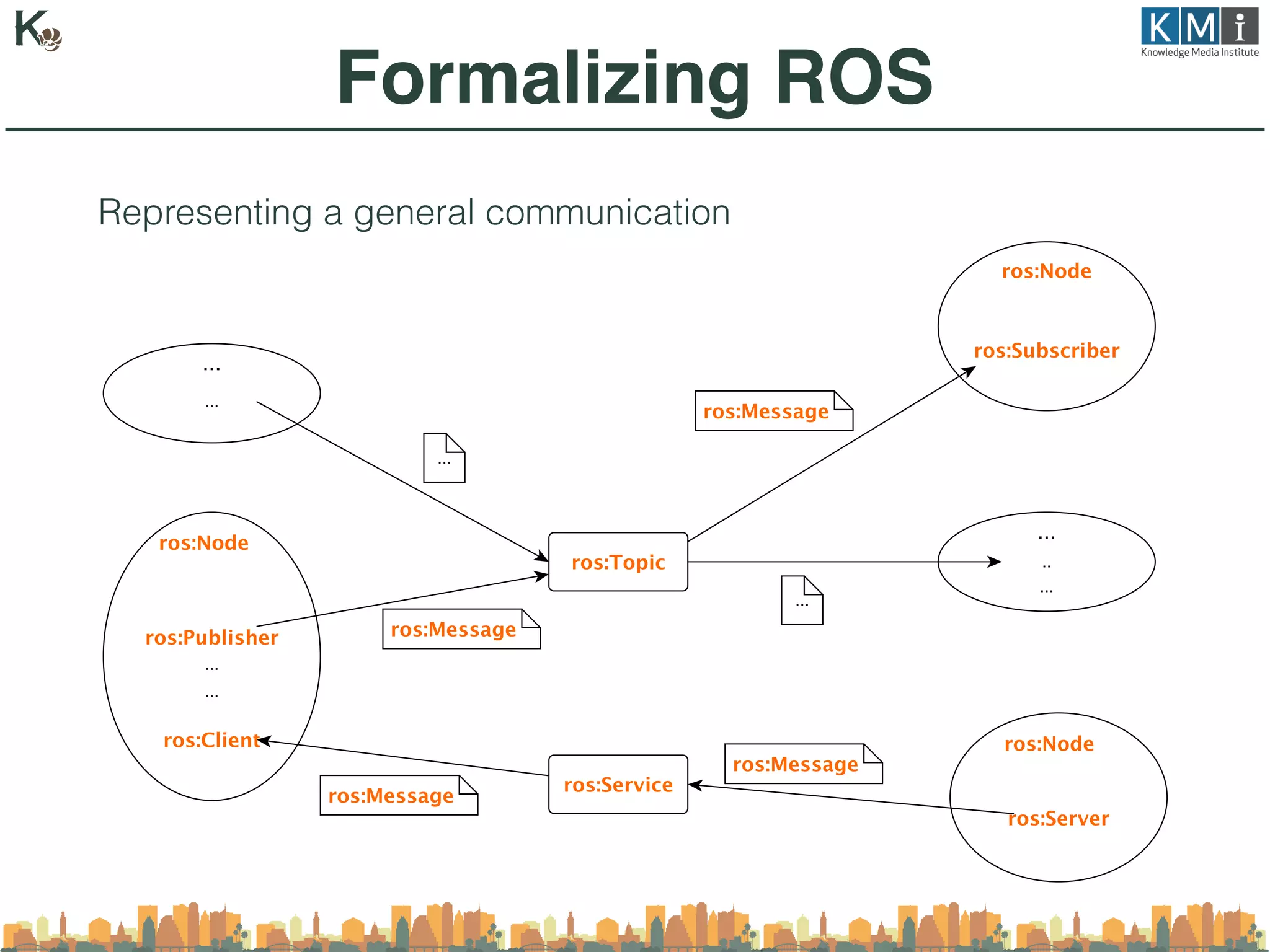

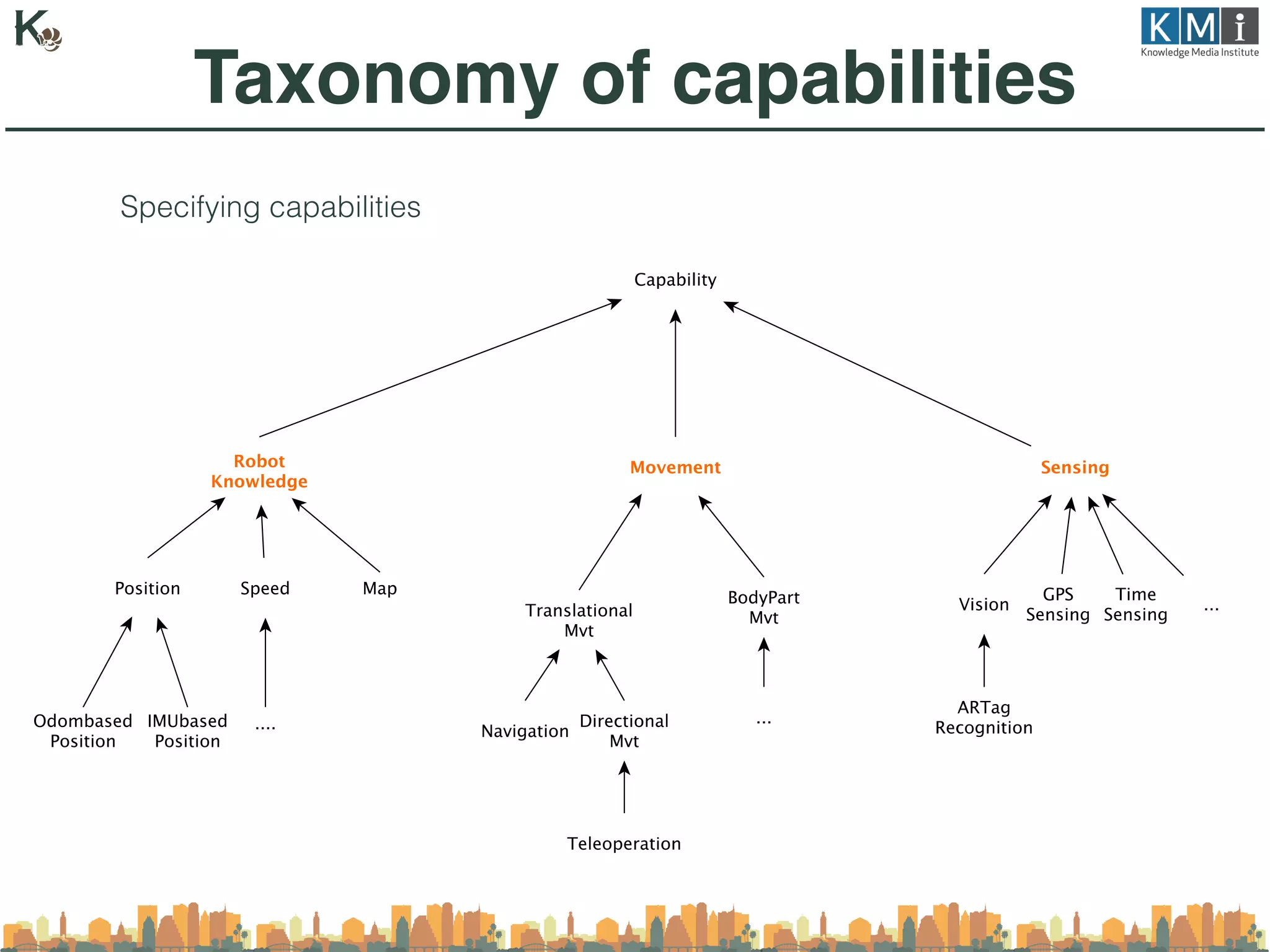

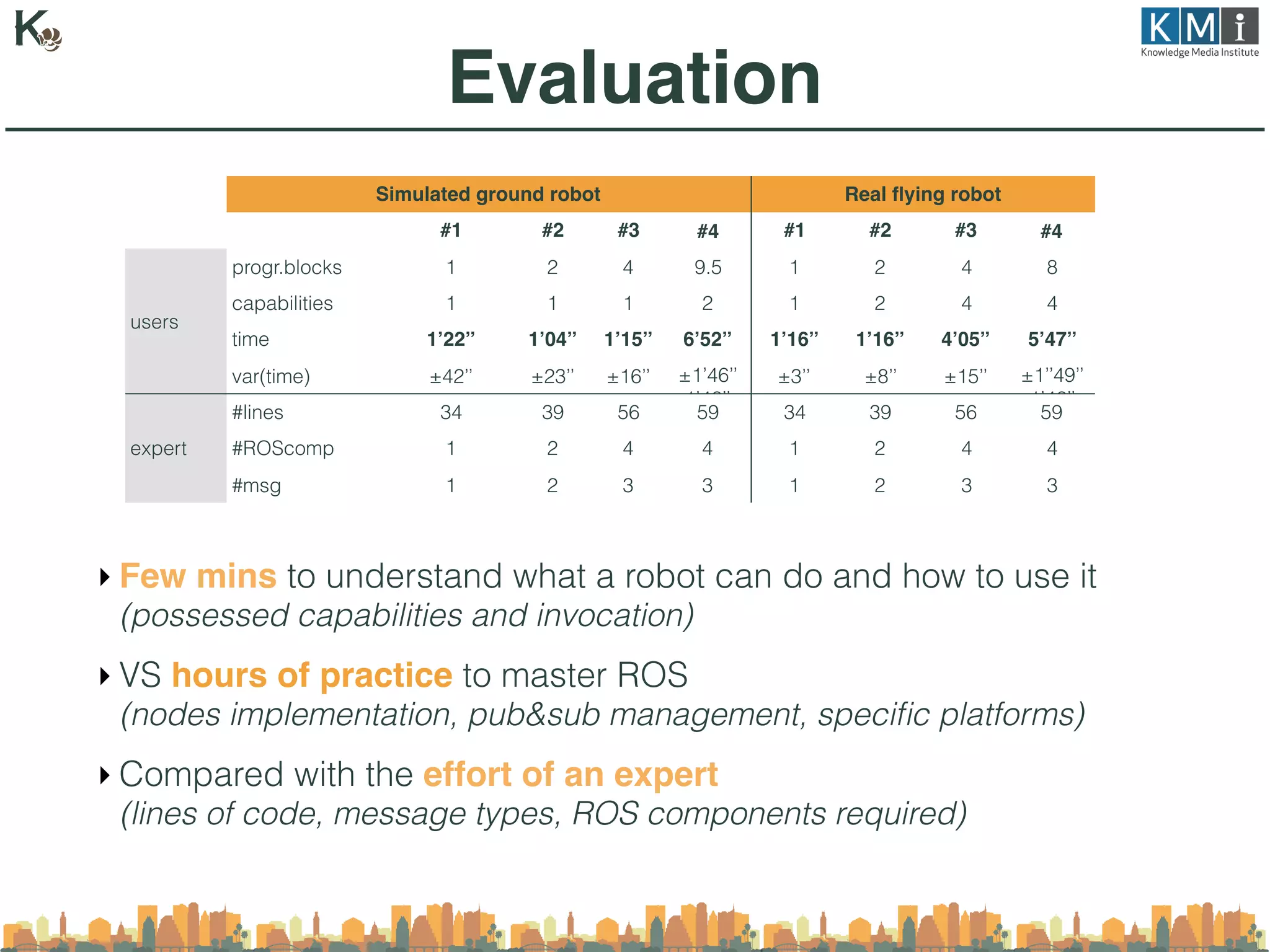

This document presents an ontology-based approach to making robotic systems built on the Robot Operating System (ROS) more accessible. It highlights the need for non-experts to understand and use a robot's capabilities without deep knowledge of the underlying technology, and proposes a system that maps high-level functionalities to low-level ROS components. Future work includes refining the capability taxonomy and extending the system to support additional robotic functions.

![Motivation slide](https://image.slidesharecdn.com/2017kcap-171205223537/75/An-ontology-based-approach-to-improve-the-accessibility-of-ROS-based-robotic-systems-4-2048.jpg)

Motivation

Providers do not fully expose a robot’s capabilities

‣ robots become end products (unless you are a robot developer)

e.g. drones for photography / Roombas for cleaning

MK:Smart++ [1]: integrating robots in cities

‣ data collectors (drones for parking monitoring)

‣ data consumers (adaptive self-driving cars)

Example: a team of robots for green space maintenance

‣ available capabilities: e.g. teleoperation, video recording

‣ expertise required to program trajectories and object recognition

‣ different platforms require different experts

[1] www.mksmart.org

![Robot Operating System slide](https://image.slidesharecdn.com/2017kcap-171205223537/75/An-ontology-based-approach-to-improve-the-accessibility-of-ROS-based-robotic-systems-6-2048.jpg)

Robot Operating System

ROS [2]: the Robot Operating System

‣ collaborative middleware

‣ management of low-level components (share, reuse)

‣ need a fine-grained understanding of the robot architecture

[2] www.ros.org
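
To make the idea of mapping high-level capabilities to low-level ROS components more concrete, here is a minimal sketch in plain Python. It is not the system described in the slides: the capability names come from the green space maintenance example, while the `RosInterface` class, the `CAPABILITIES` table, the helper `capabilities_of`, and the topic names are all hypothetical and chosen for illustration. The sketch assumes a capability can be described by the ROS topics and message types it relies on.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RosInterface:
    """One low-level ROS endpoint: a topic name and its message type."""
    topic: str
    msg_type: str


# Hypothetical capability table (illustrative only, not from the slides).
# Topic names follow common conventions but vary from robot to robot.
CAPABILITIES = {
    "teleoperation": [RosInterface("/cmd_vel", "geometry_msgs/Twist")],
    "video_recording": [RosInterface("/camera/image_raw", "sensor_msgs/Image")],
}


def capabilities_of(available_topics):
    """Return the capabilities whose required topics are all available."""
    available = set(available_topics)
    return sorted(
        name
        for name, interfaces in CAPABILITIES.items()
        if all(interface.topic in available for interface in interfaces)
    )


if __name__ == "__main__":
    # A robot exposing only velocity commands and odometry.
    print(capabilities_of(["/cmd_vel", "/odom"]))
```

With a table like this, a non-expert could ask which capabilities a robot offers without inspecting its topics directly. An ontology would replace the hard-coded dictionary with a shared, queryable vocabulary.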