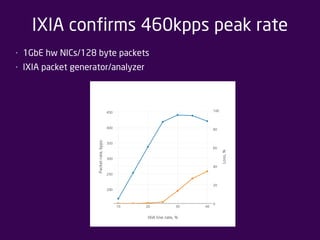

Erlang on Xen (LING) is a new Erlang platform that runs without an operating system for improved performance. The LINCX project ported the LINC-Switch to LING, demonstrating high compatibility. LINCX runs 100x faster than the original code by optimizing the fast processing path. LING can achieve throughput of up to 0.5 million packets per second and was used to build a high-performance network switch called LINCX.

![Raw network interfaces in Erlang

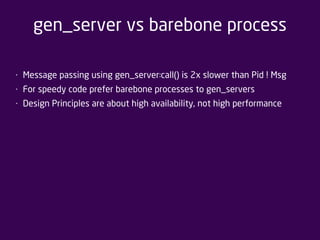

• LING adds raw network interfaces:

Port = net_vif:open(“eth1”, []),

port_command(Port, <<1,2,3>>),

receive

{Port,{data,Frame}} > ‐

...

• Raw interface receives whole Ethernet frames

• LINCX uses standard gen_tcp for the control connection and net_vif -

for data ports

• Raw interfaces support mailbox_limit option - packets get dropped if

the mailbox of the receiving process overflows:

Port = net_vif:open(“eth1”, [{mailbox_limit,16384}]),

...](https://image.slidesharecdn.com/erlanglincxfbynov222014-141203094603-conversion-gate01/85/Erlang-lincx-7-320.jpg)

![Processing delay and low-level stats

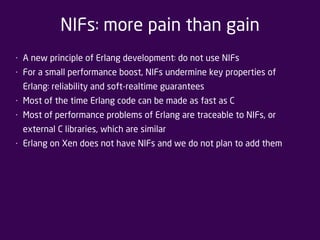

• LING can measure a processing delay for a packet:

1> ling:experimental(processing_delay, []).

Processing delay statistics:

Packets: 2000 Delay: 1.342us +‐ 0.143 (95%)

• LING can collect low-level stats for a network interface:

1> ling:experimental(llstat, 1). %% stop/display

Duration: 4868.6ms

RX: interrupts: 69170 (0 kicks 0.0%) (freq 14207.4/s period 70.4us)

RX: reqs per int: 0/0.0/0

RX: tx buf freed per int: 0/8.5/234

TX: outputs: 1479707 (112263 kicks 7.6) (freq 303928.8/s period 3.3us)

TX: tx buf freed per int: 0/0.6/113

TX: rates: 303.9kpps 3622.66Mbps avg pkt size 1489.9B

TX: drops: 12392 (freq 2545.3/s period 392.9us)

TX: drop rates: 2.5kpps 30.26Mbps avg pkt size 1486.0B](https://image.slidesharecdn.com/erlanglincxfbynov222014-141203094603-conversion-gate01/85/Erlang-lincx-10-320.jpg)

![How to tackle GC-related issues

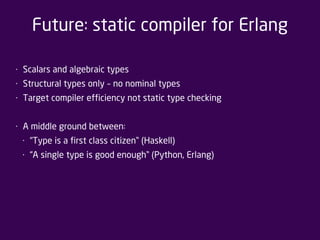

• (Priority 1) Call erlang:garbage_collect() at strategic points

• (Priority 2) For the fastest code avoid GC completely – restart the fast

process regularly:

spawn(F, [{suppress_gc,true}]), %% LING ‐only

• (Priority 3) Use fullsweep_after option](https://image.slidesharecdn.com/erlanglincxfbynov222014-141203094603-conversion-gate01/85/Erlang-lincx-16-320.jpg)