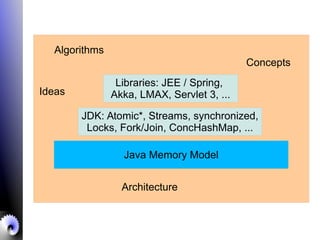

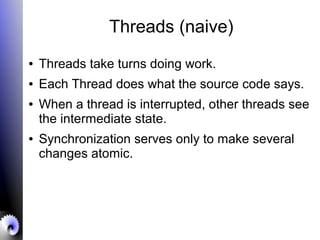

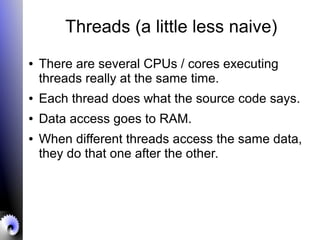

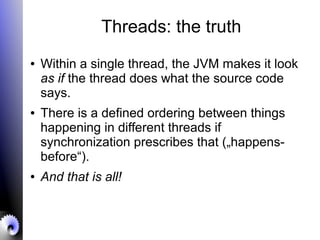

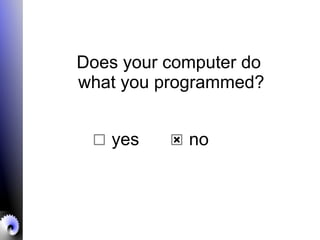

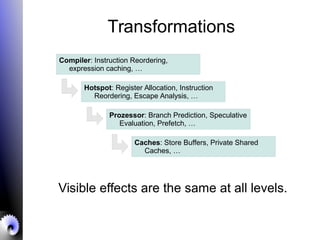

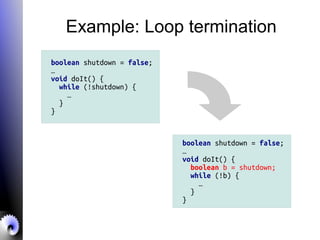

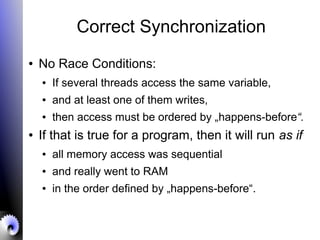

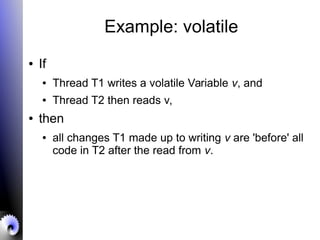

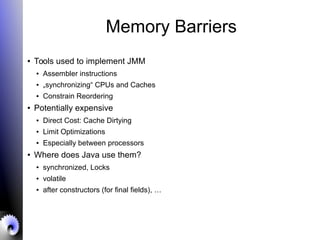

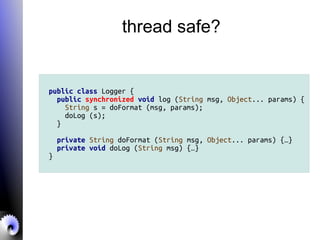

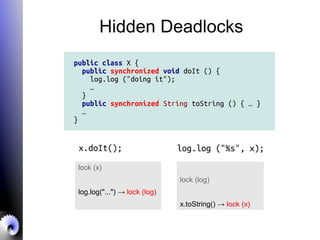

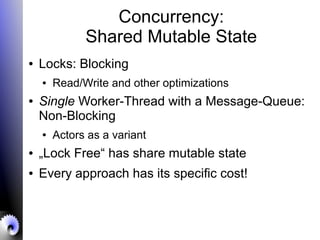

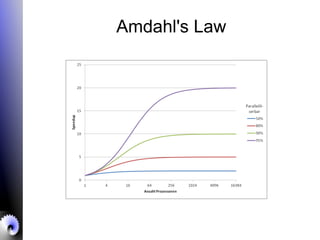

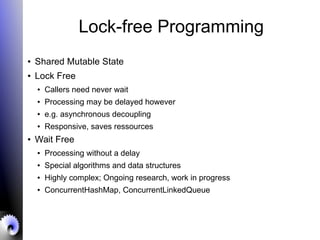

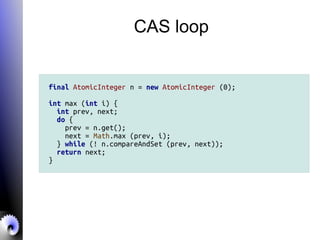

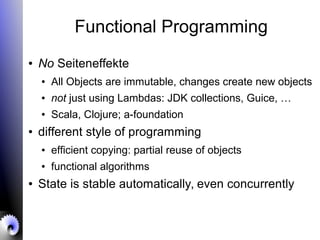

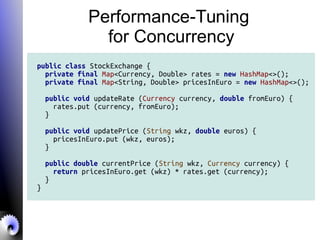

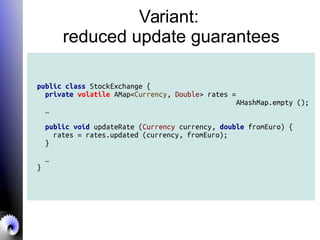

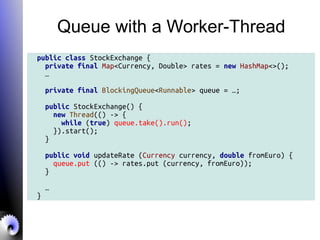

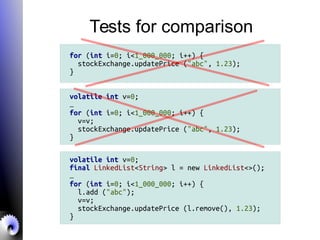

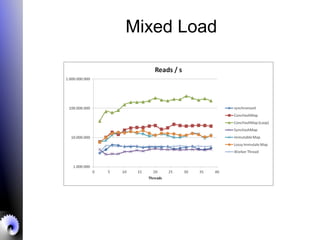

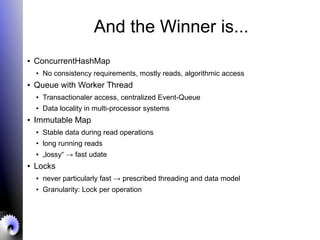

The document covers advanced Java concurrency: why thread management is hard, and why correct synchronization is required to avoid race conditions. Topics include the Java Memory Model, locking mechanisms, and performance tuning for concurrent applications, along with isolation strategies that reduce contention by limiting how much mutable state threads share. Two takeaways stand out: measure performance under realistic workloads rather than assuming where the bottleneck is, and establish correctness before optimizing for speed.
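As a rough illustration of the locking and isolation ideas summarized above, the sketch below counts increments from several threads in two ways: through a single `synchronized` lock, and through `java.util.concurrent.atomic.LongAdder`, which keeps internal per-thread-ish cells so that writers rarely touch the same memory. The class name `CounterSketch`, its methods, and the thread and iteration counts are hypothetical and chosen only for this example; they are not taken from the original material.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.LongAdder;

// Hypothetical example class: two thread-safe counters with different contention profiles.
public class CounterSketch {
    // Variant 1: one shared field guarded by one lock. Correct, but every
    // increment from every thread competes for the same monitor.
    private final Object lock = new Object();
    private long lockedCount = 0;

    // Variant 2: LongAdder spreads updates over internal cells and only
    // combines them when sum() is called, which tends to reduce contention.
    private final LongAdder stripedCount = new LongAdder();

    public void incrementLocked() {
        synchronized (lock) {
            lockedCount++;
        }
    }

    public long lockedTotal() {
        // Read under the same lock so the latest value is visible.
        synchronized (lock) {
            return lockedCount;
        }
    }

    public void incrementStriped() {
        stripedCount.increment();
    }

    public long stripedTotal() {
        return stripedCount.sum();
    }

    // Runs `task` perThread times on each of `threads` worker threads and
    // waits for all of them to finish.
    public static void runOnThreads(int threads, int perThread, Runnable task) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int t = 0; t < threads; t++) {
            pool.execute(() -> {
                for (int i = 0; i < perThread; i++) {
                    task.run();
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(1, TimeUnit.MINUTES)) {
                throw new IllegalStateException("workers did not finish in time");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while waiting", e);
        }
    }

    public static void main(String[] args) {
        CounterSketch counter = new CounterSketch();
        runOnThreads(4, 100_000, counter::incrementLocked);
        runOnThreads(4, 100_000, counter::incrementStriped);
        System.out.println("locked:  " + counter.lockedTotal());
        System.out.println("striped: " + counter.stripedTotal());
    }
}
```

Both variants report the exact number of increments, so they are equally correct. Which one is faster depends on the thread count, the hardware, and how often the total is read, so the choice is best settled by measuring under the real workload.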