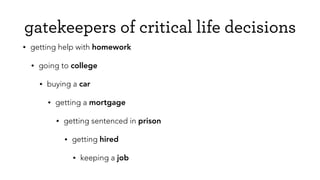

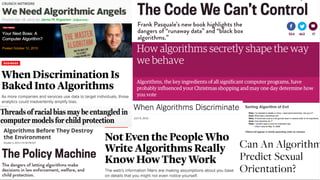

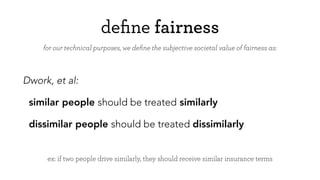

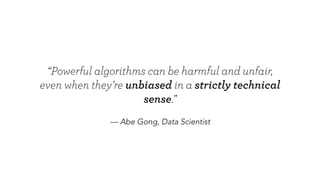

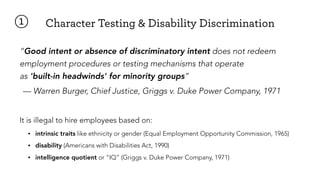

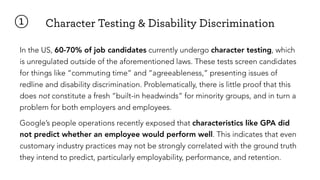

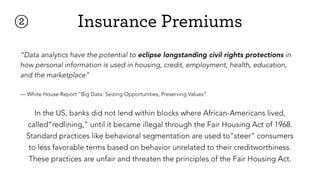

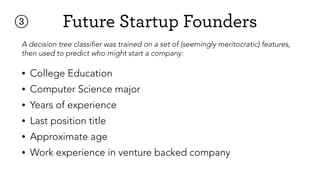

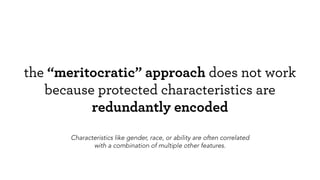

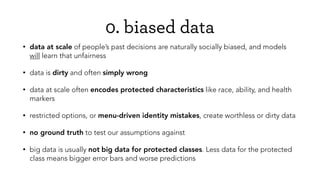

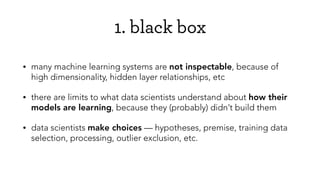

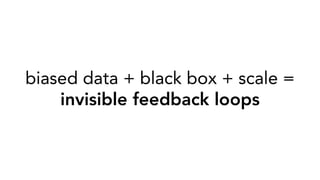

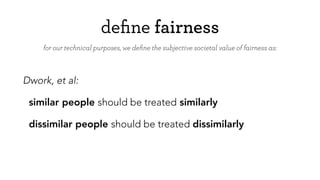

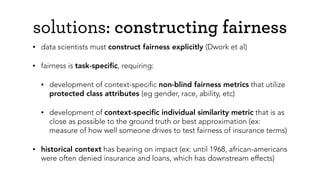

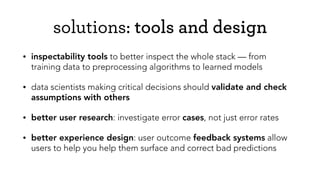

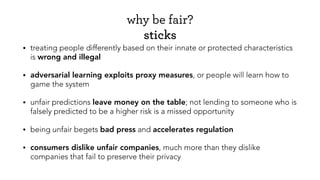

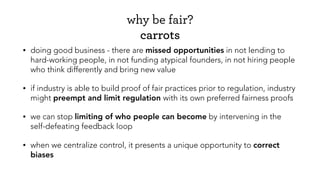

The document discusses the ethical implications of engineering and machine learning, focusing on the fairness of predictions that drive significant life decisions. It highlights bias in data, the potential for discrimination through character testing, and the need to build fairness into algorithms explicitly. It argues that data scientists are responsible for ensuring fair practices, which benefit both society and business.