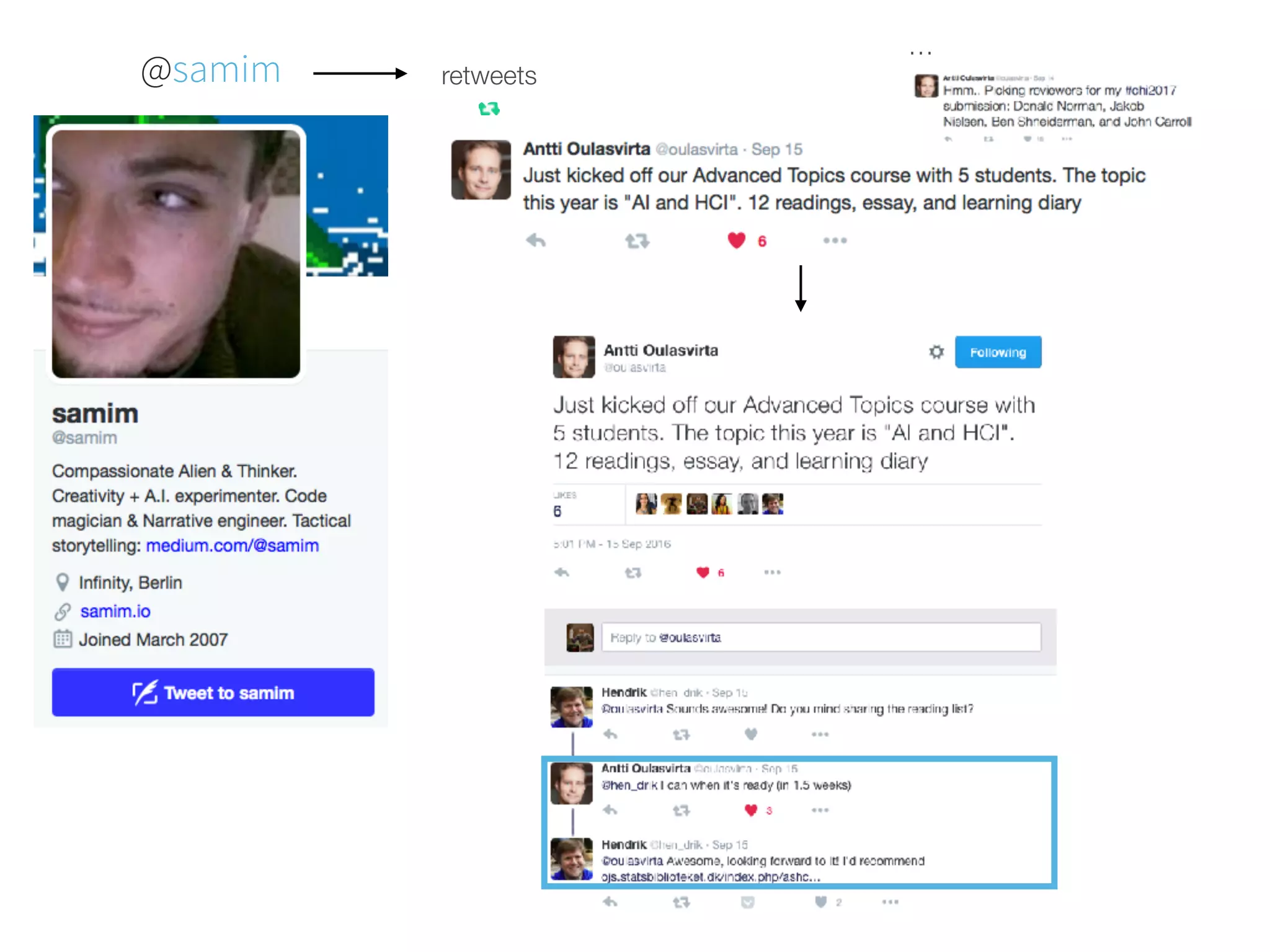

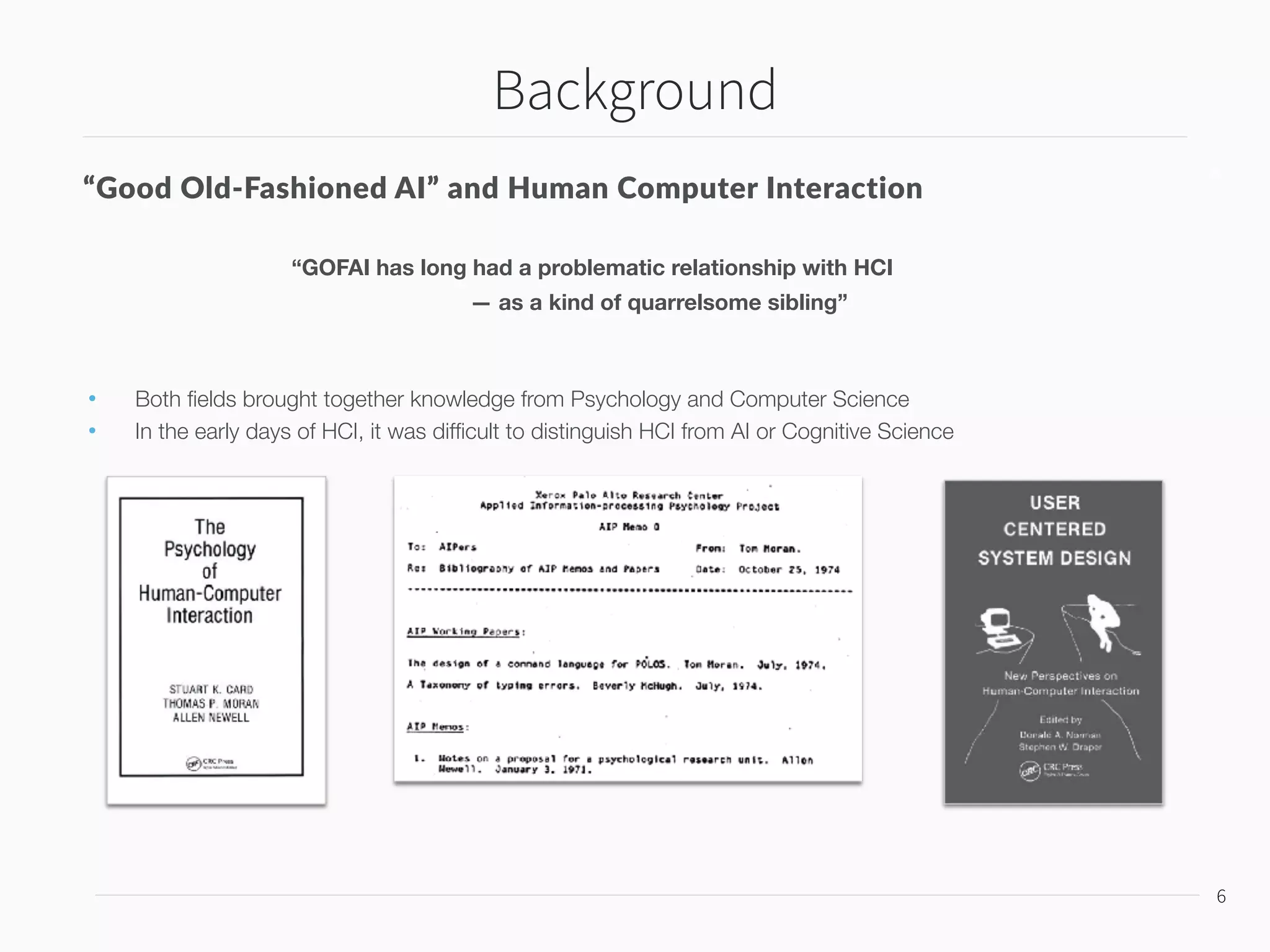

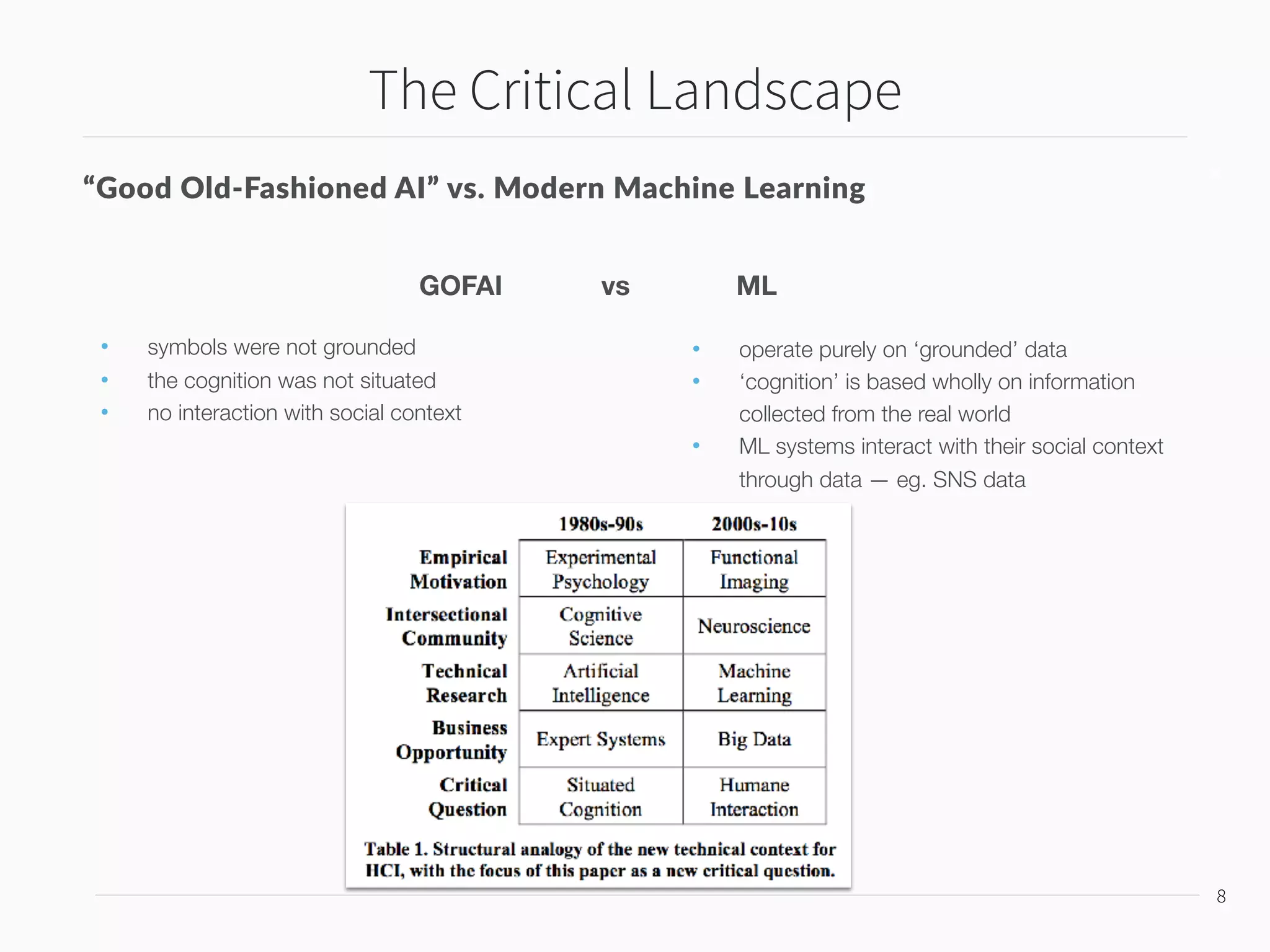

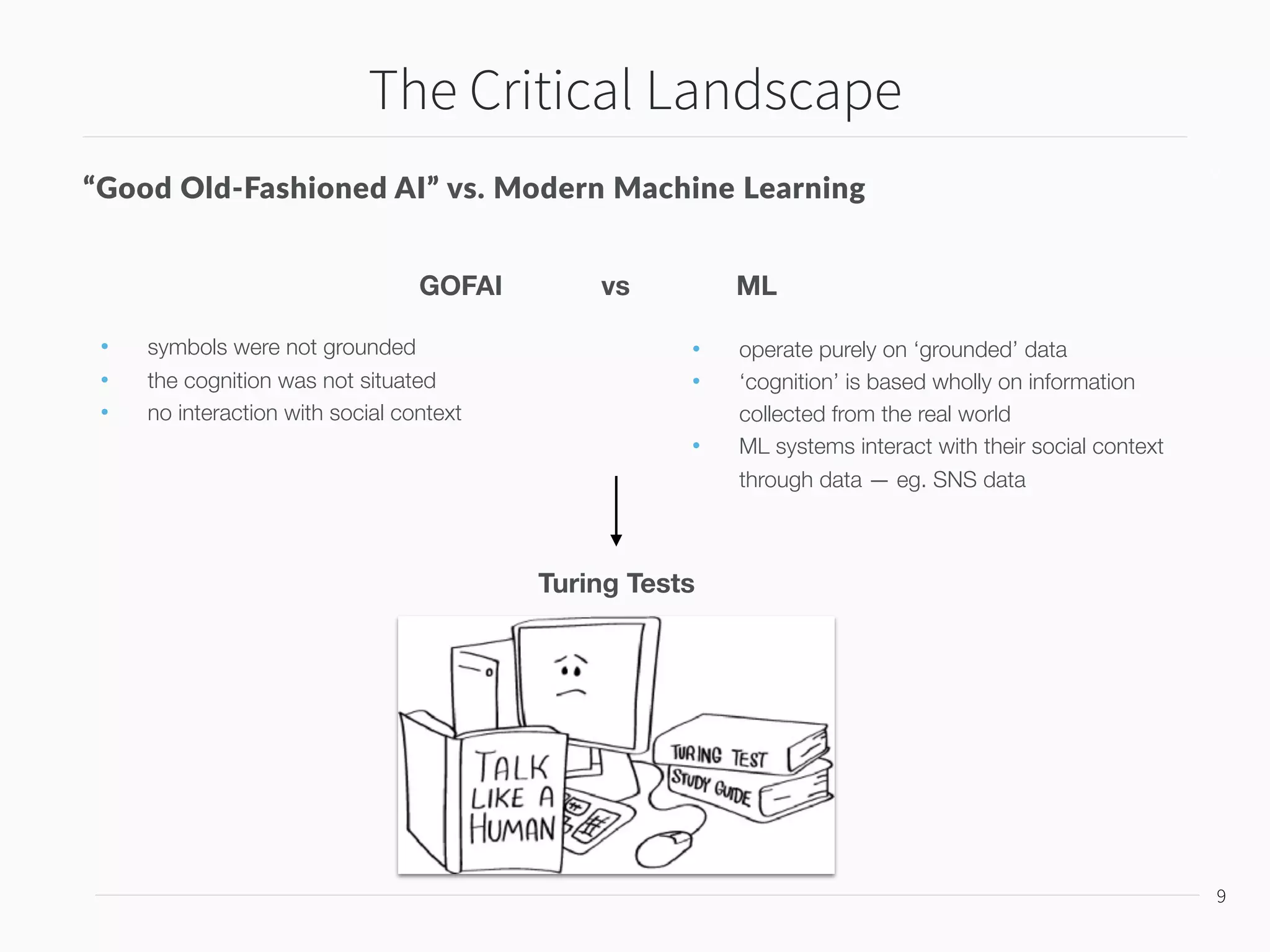

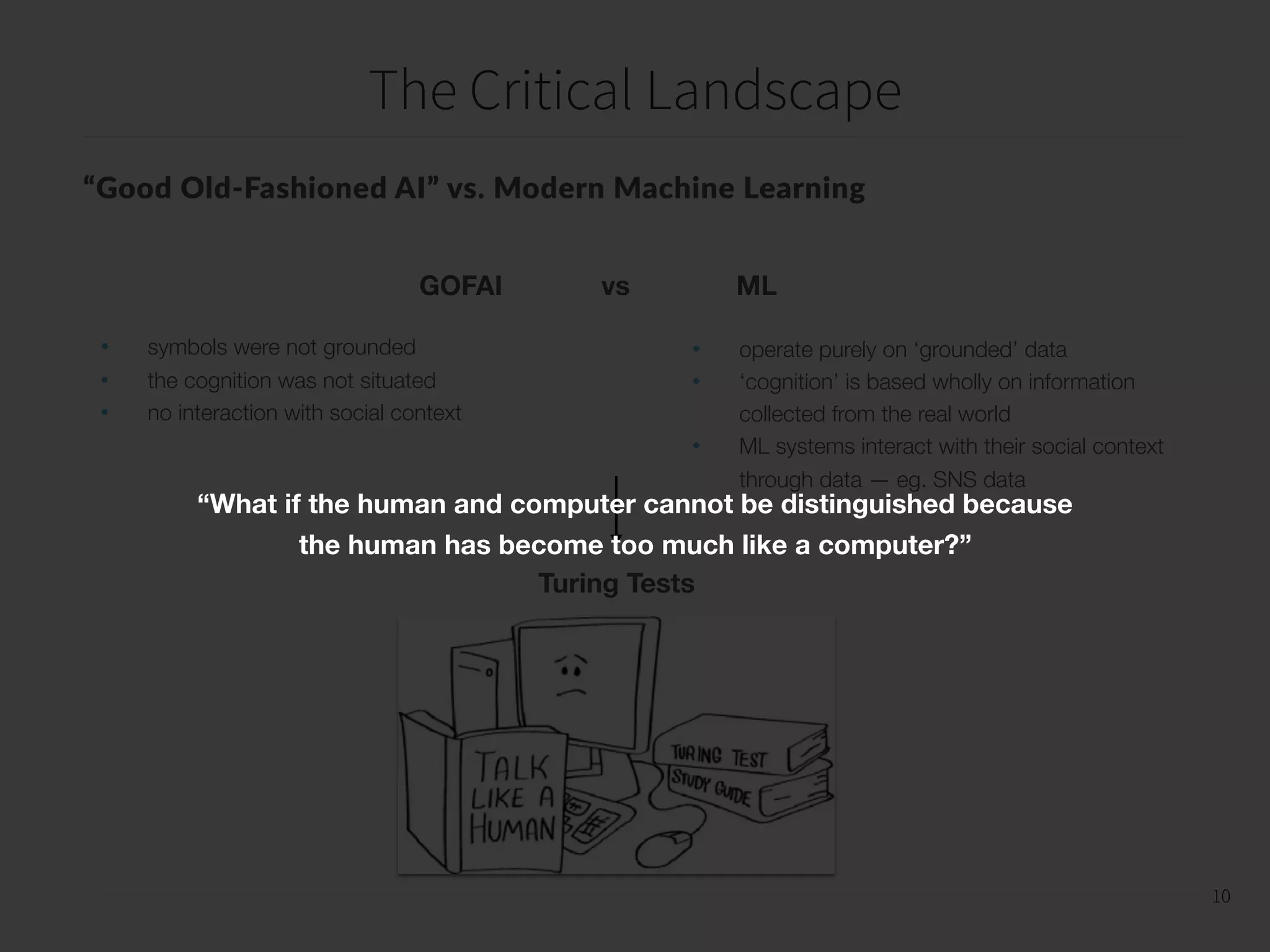

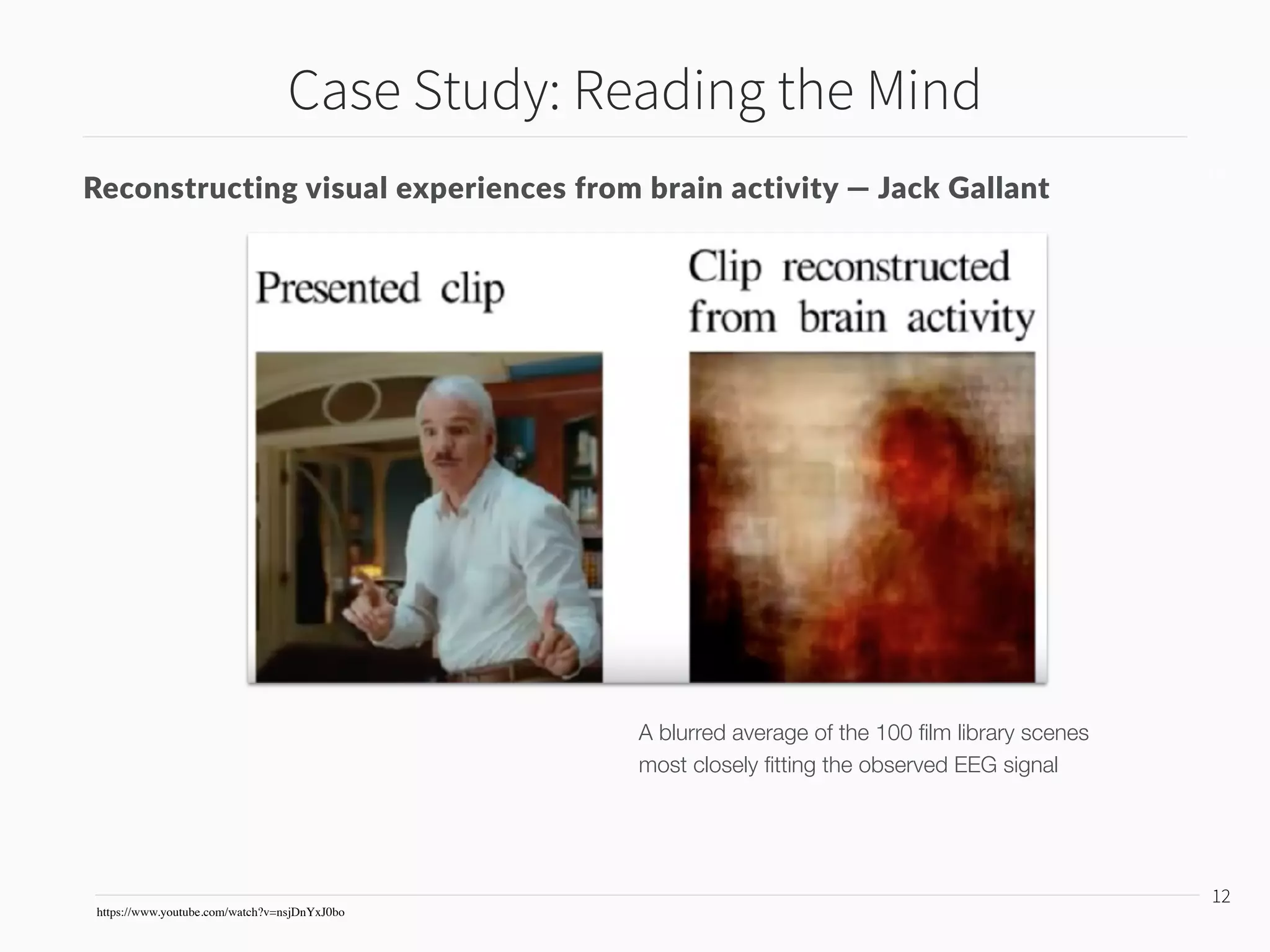

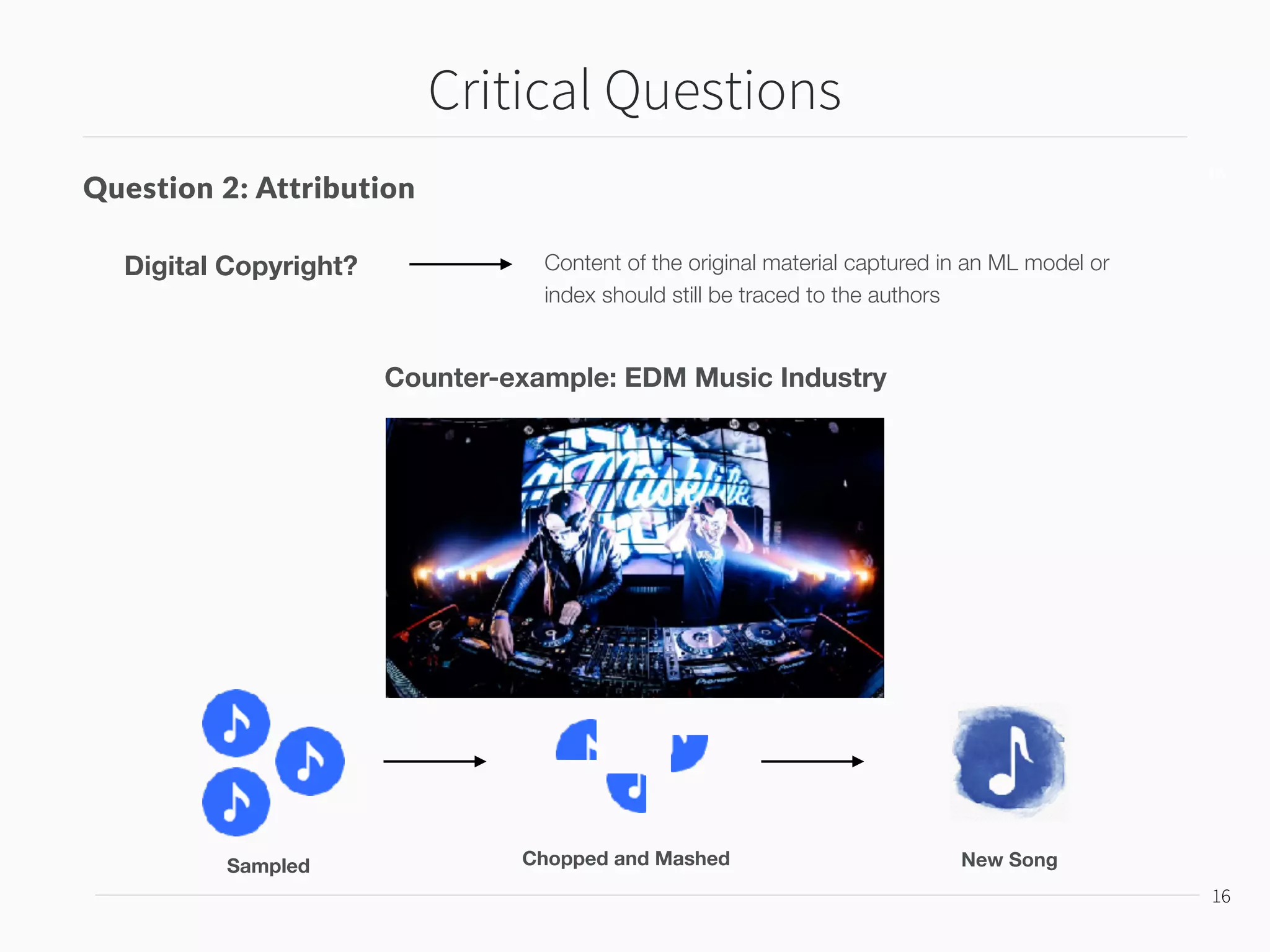

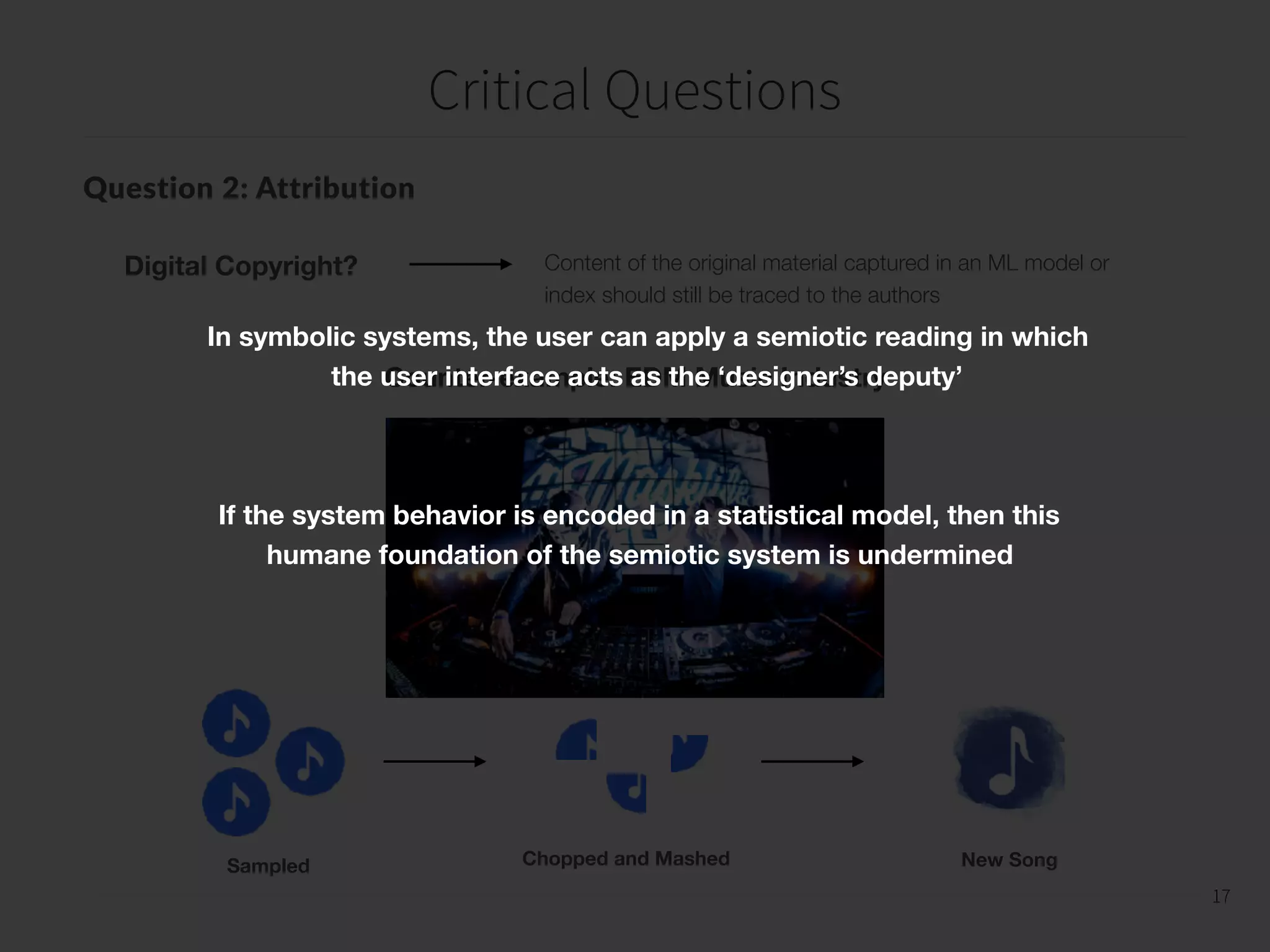

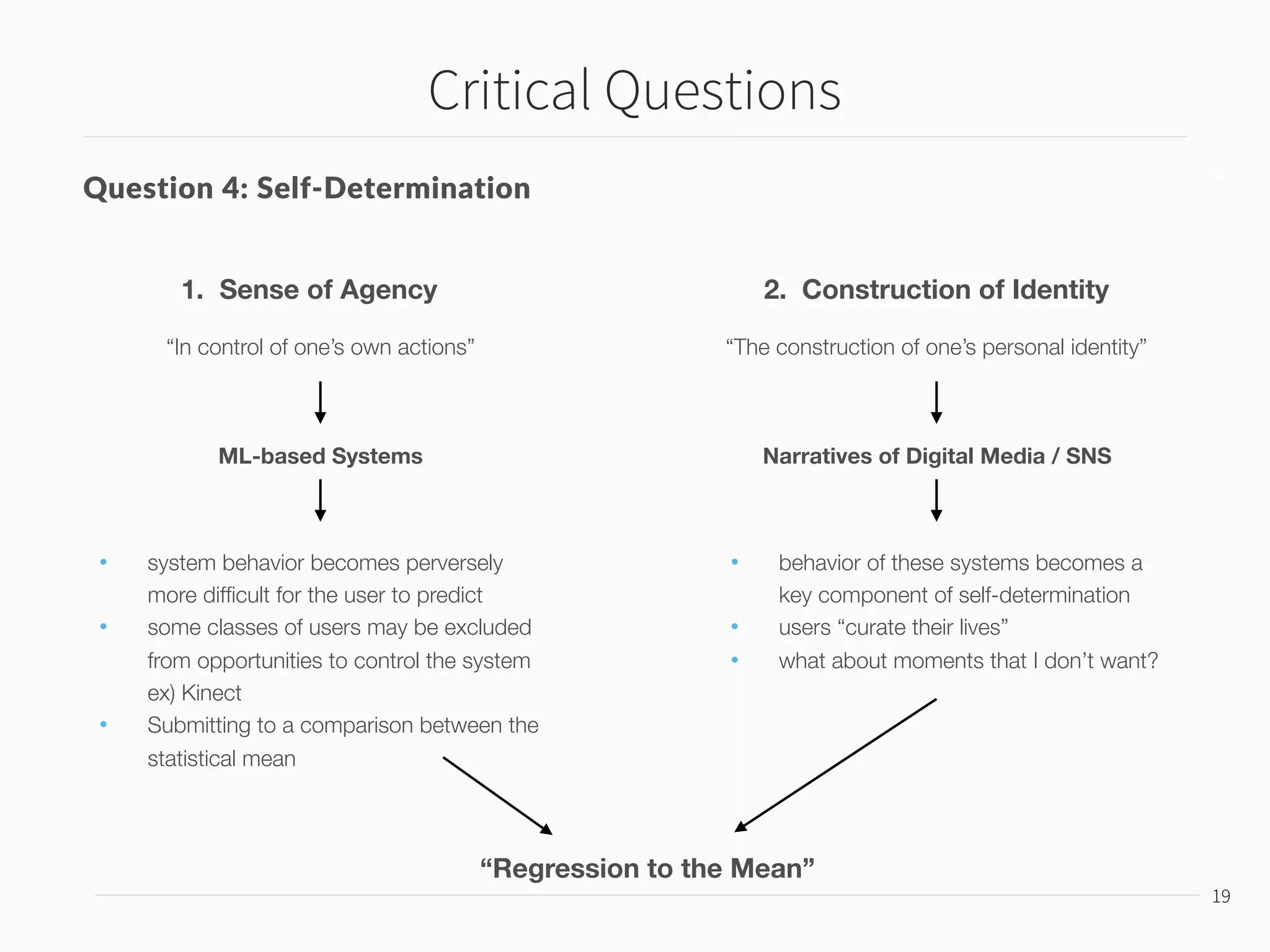

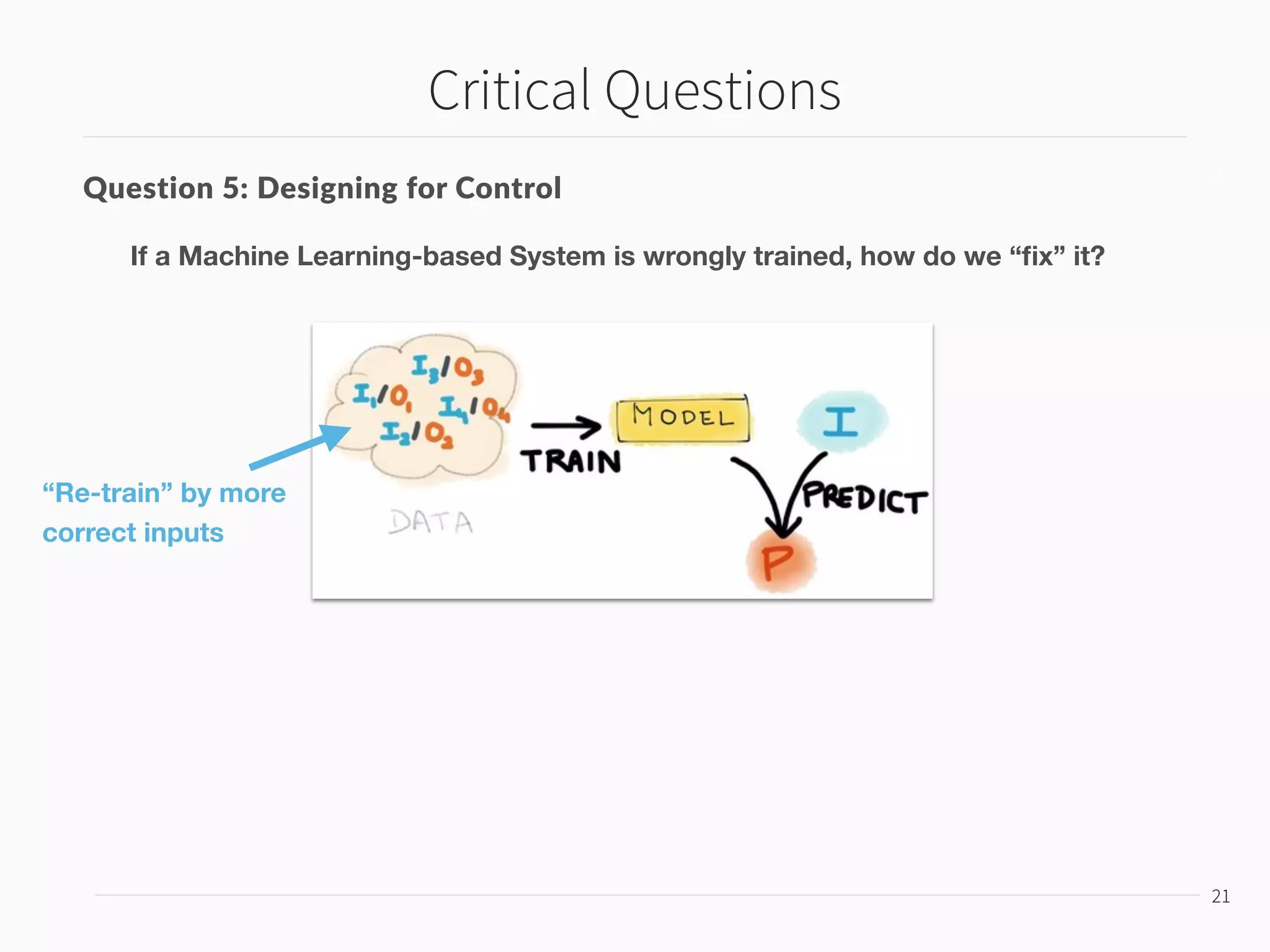

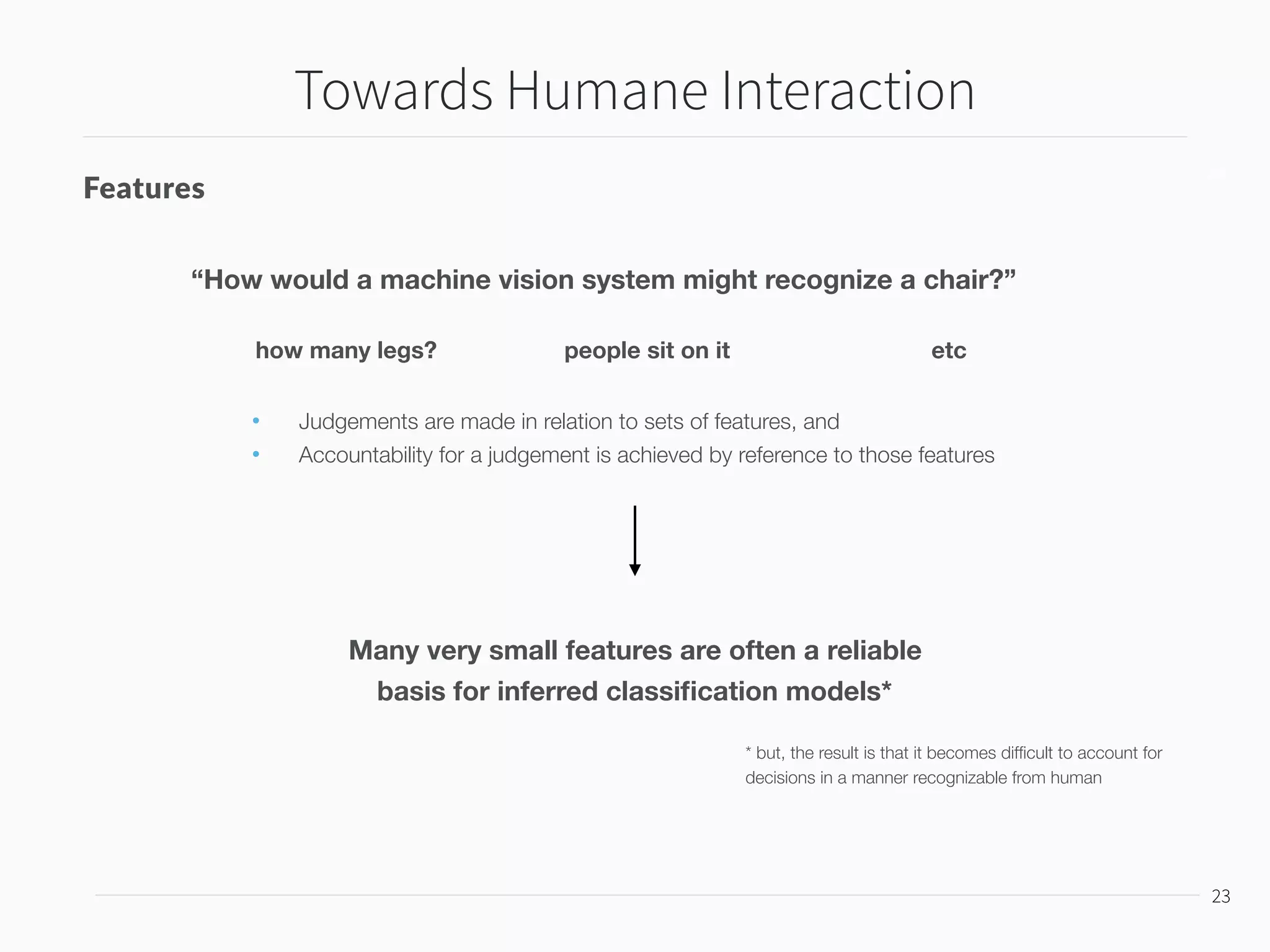

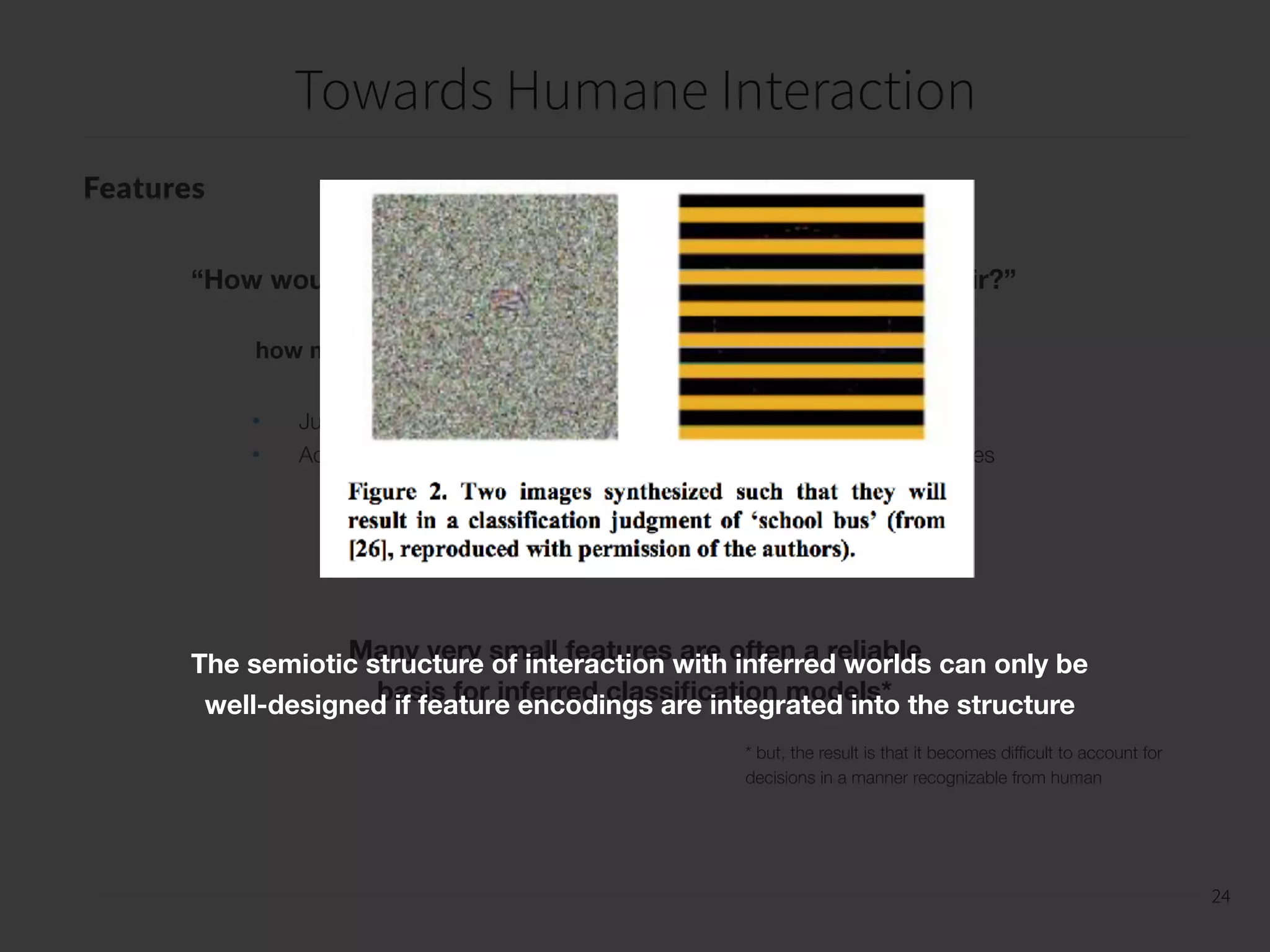

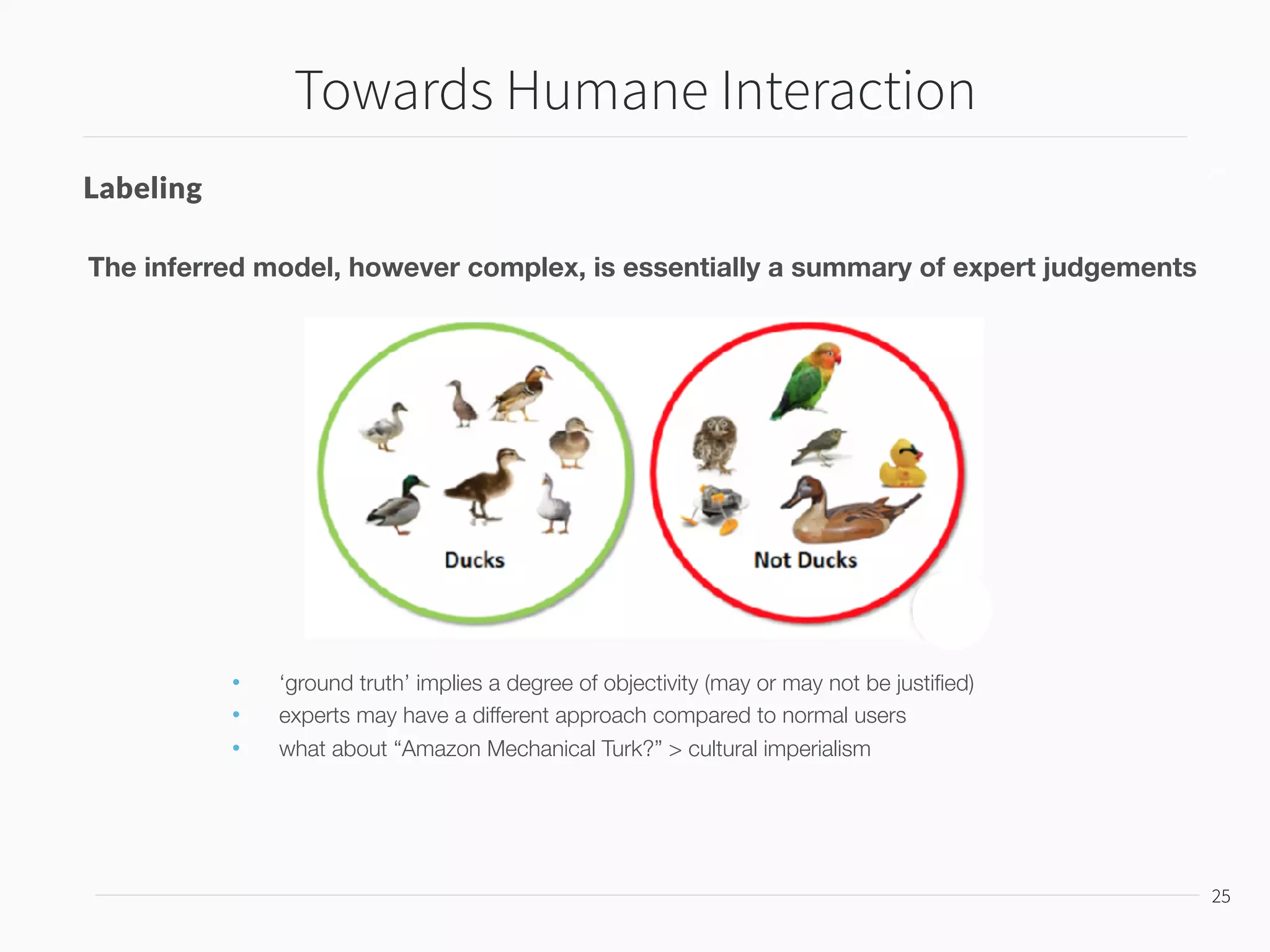

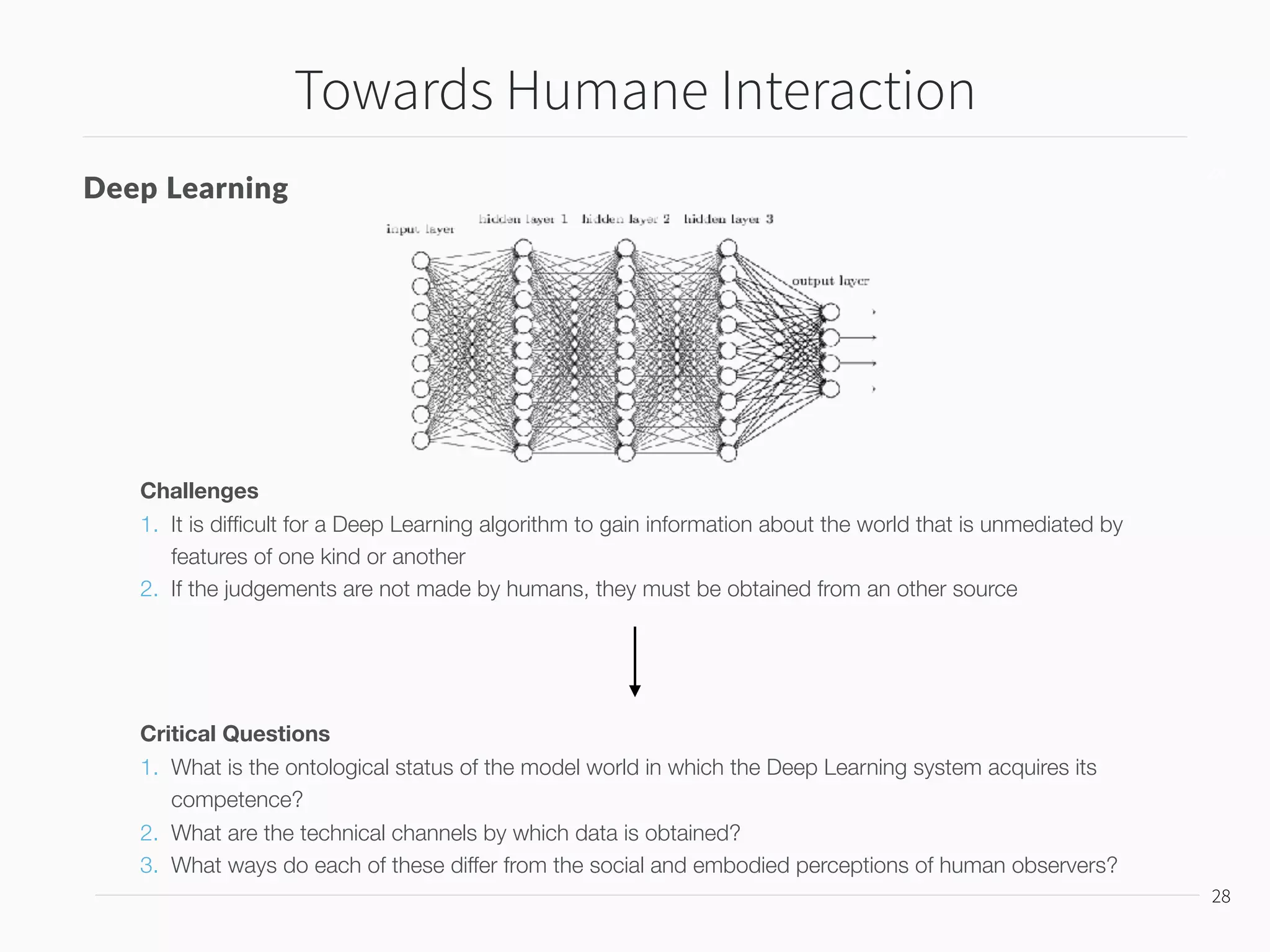

This document discusses the challenges that machine learning systems pose for human-computer interaction. It argues that classic theories of interaction were built on symbolic models, whereas modern machine learning systems rely on statistical models trained on large datasets. This shift raises new questions about how to design humane systems that give users a sense of agency, control, and self-determination when system behavior emerges from complex statistical inference rather than explicit symbolic rules. It also asks how to ensure proper attribution of content and how to address bias, privacy, and accountability in these inferred statistical worlds.