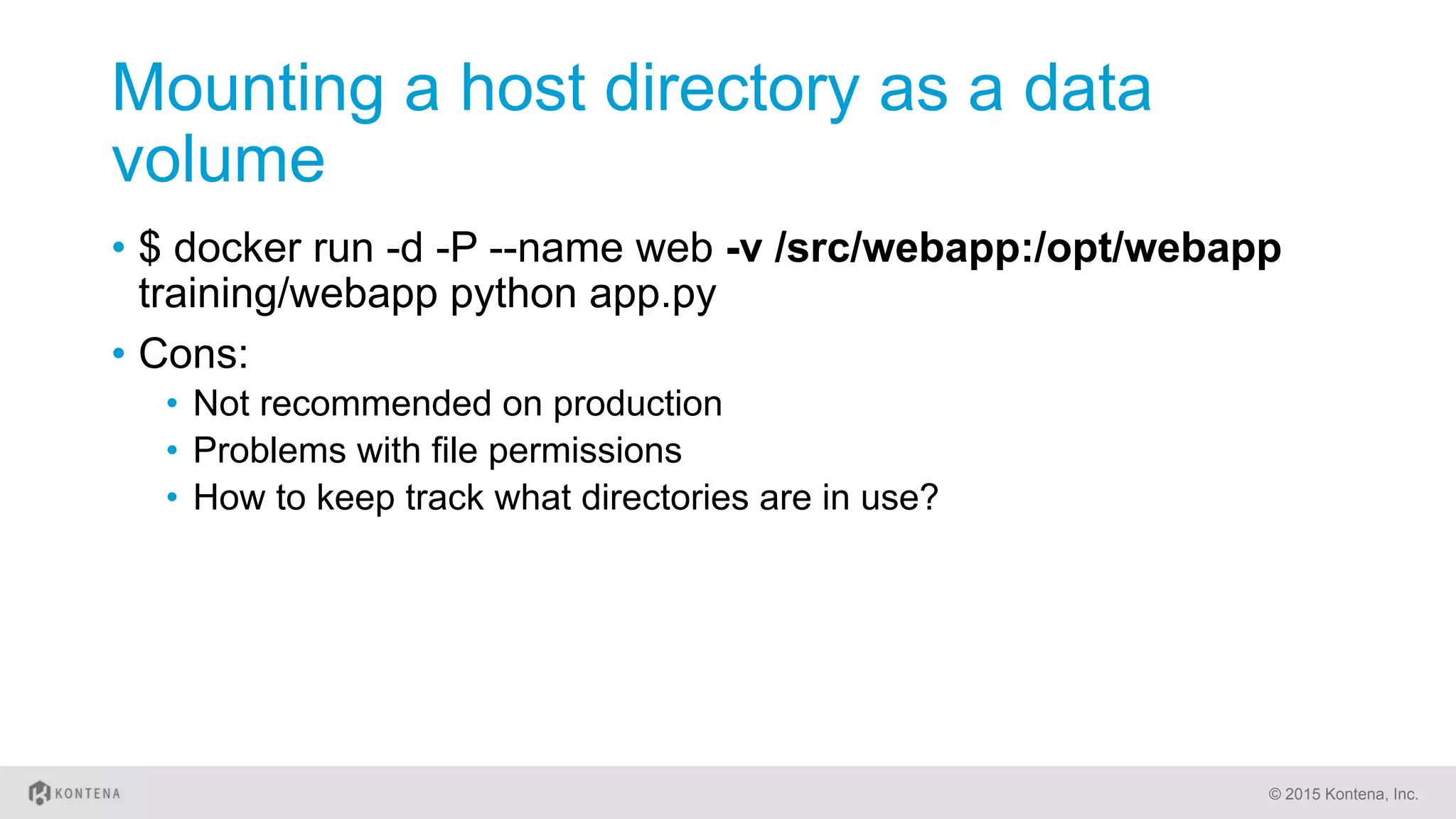

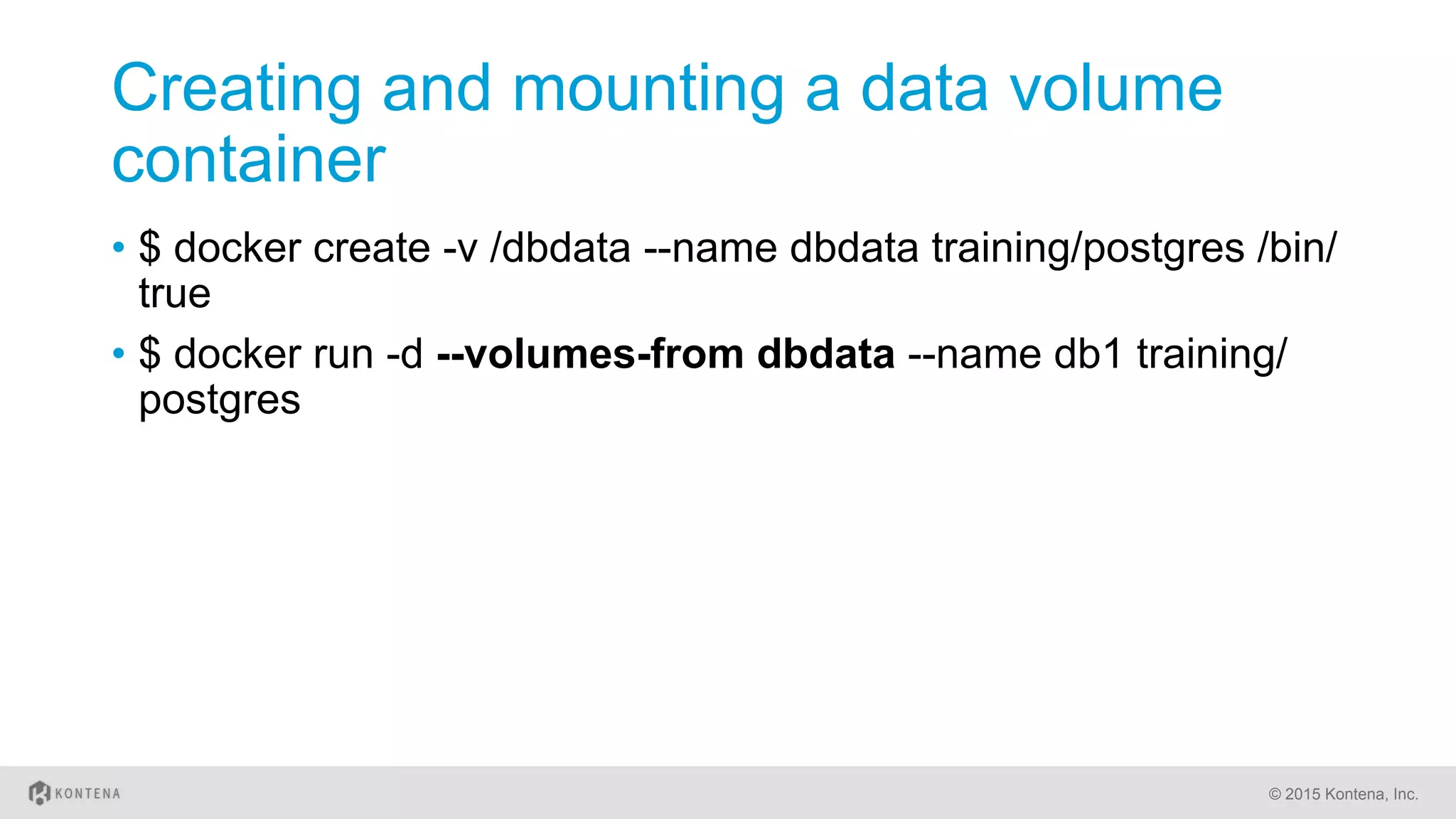

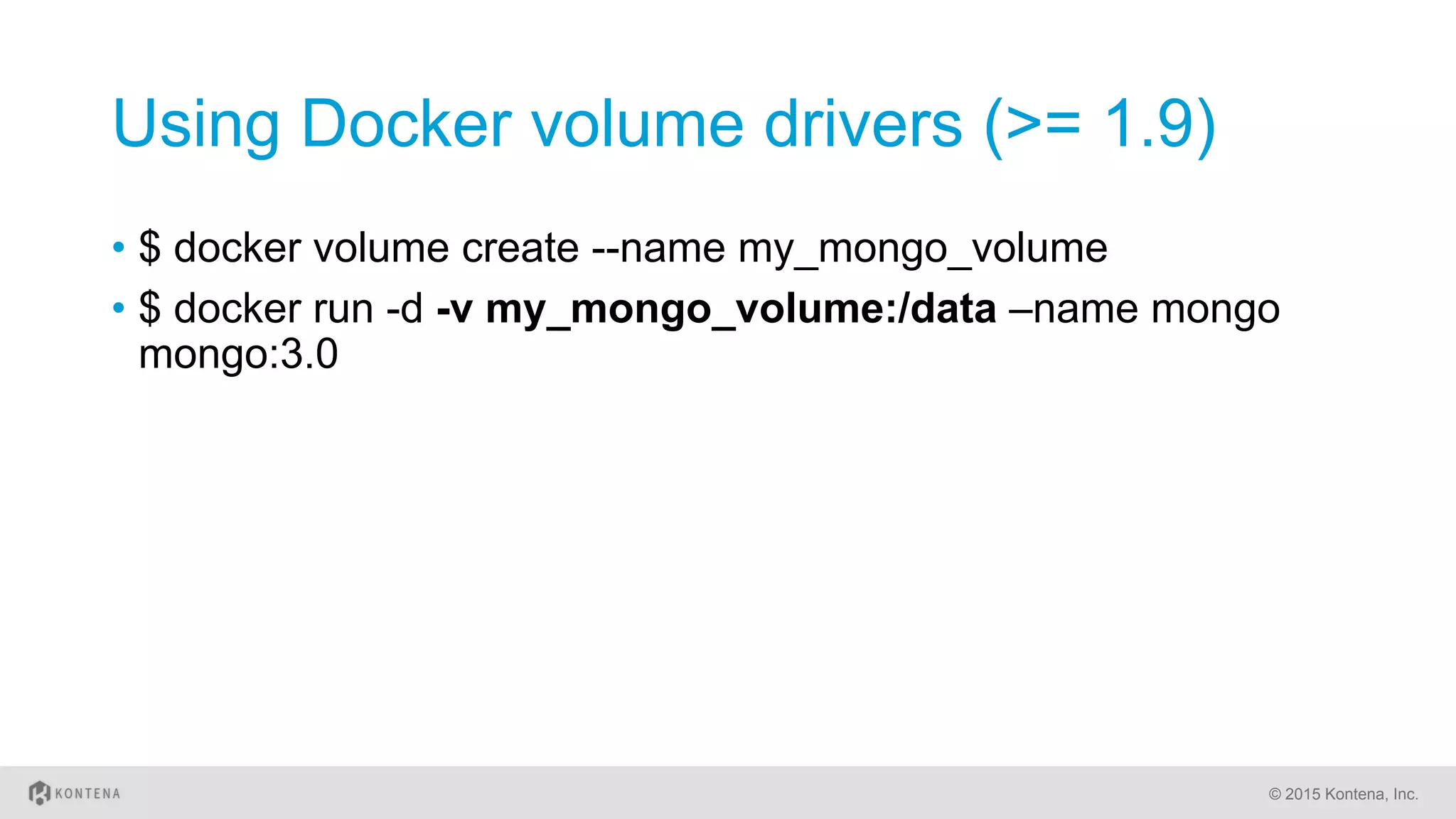

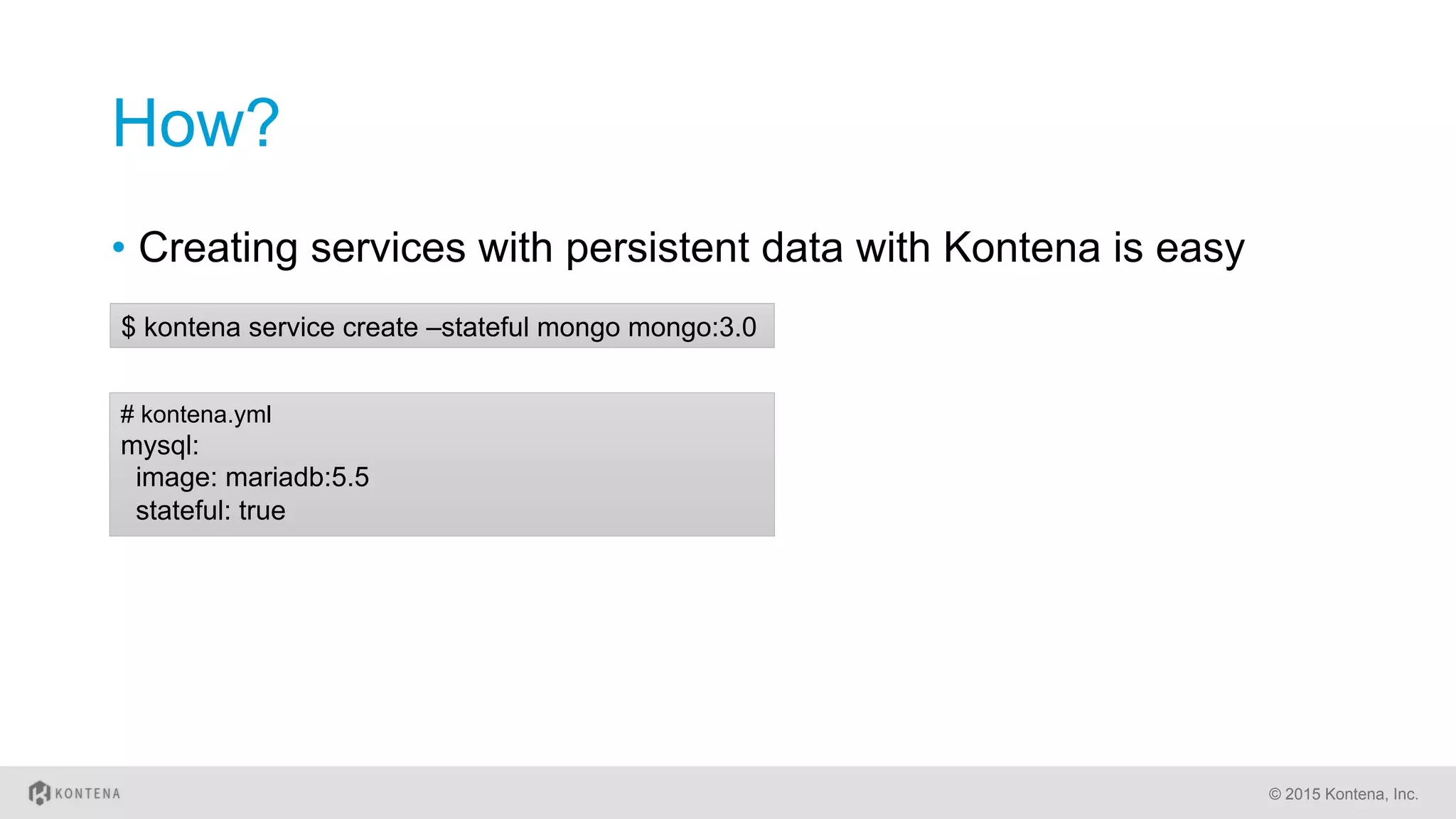

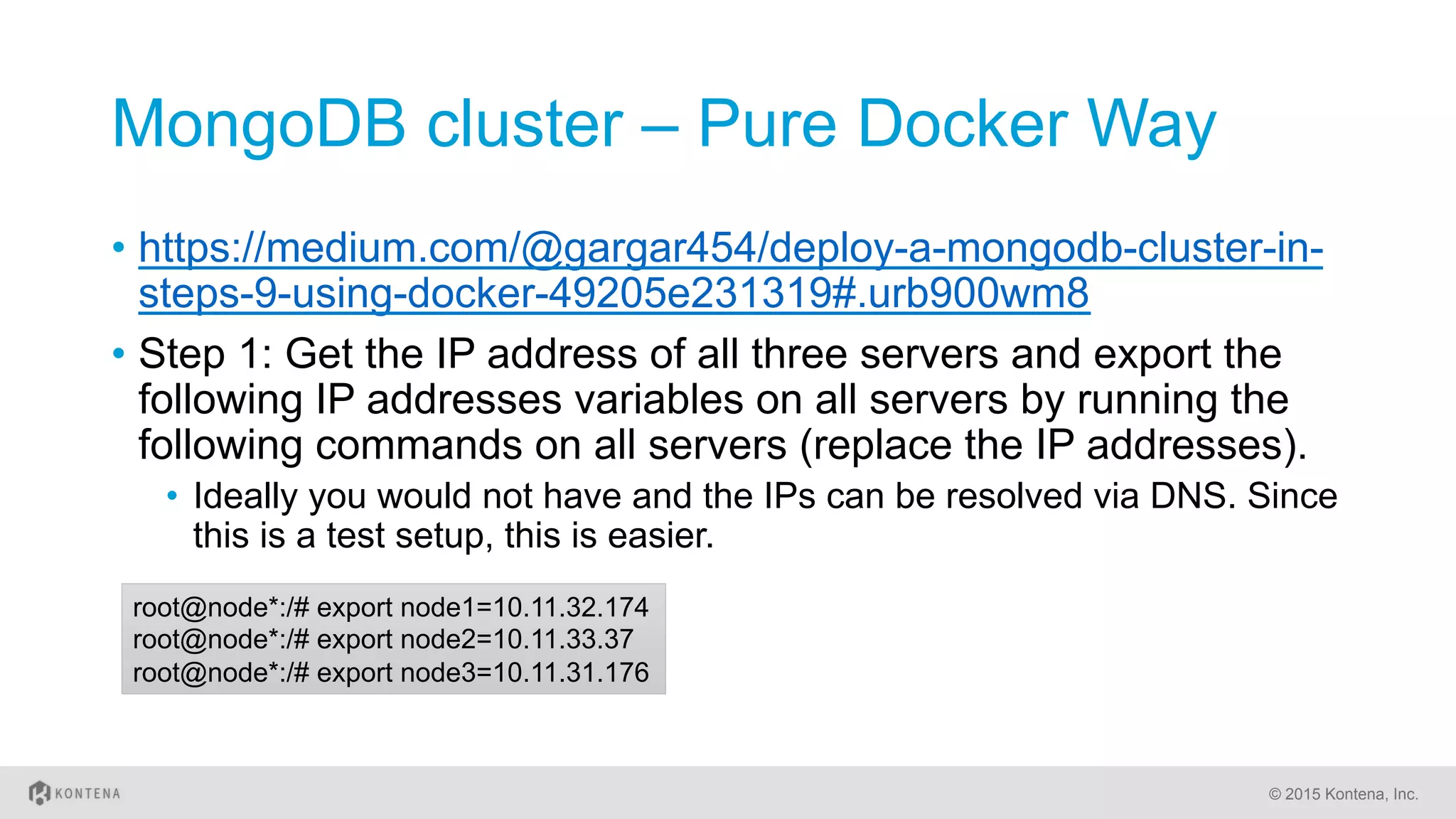

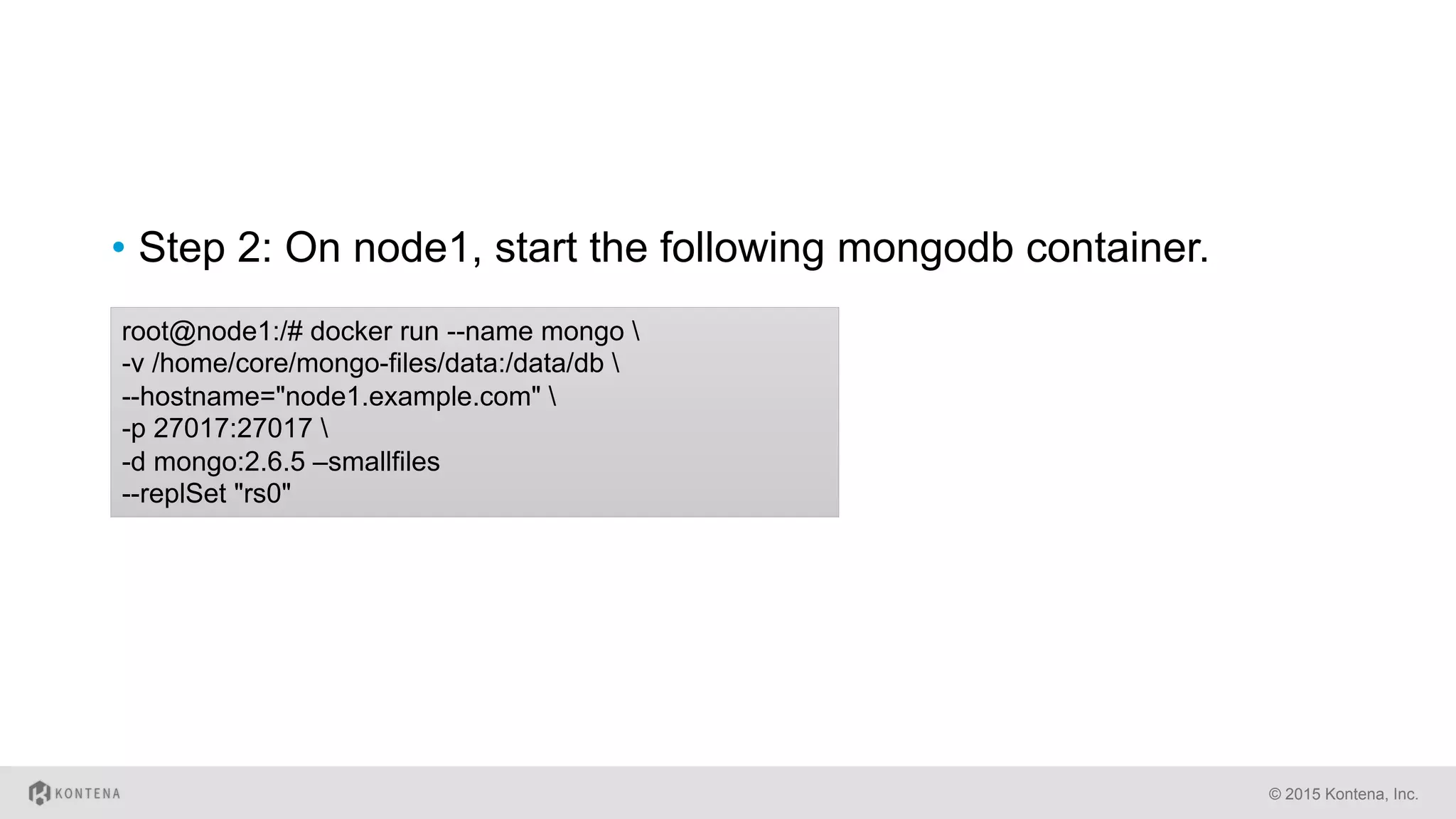

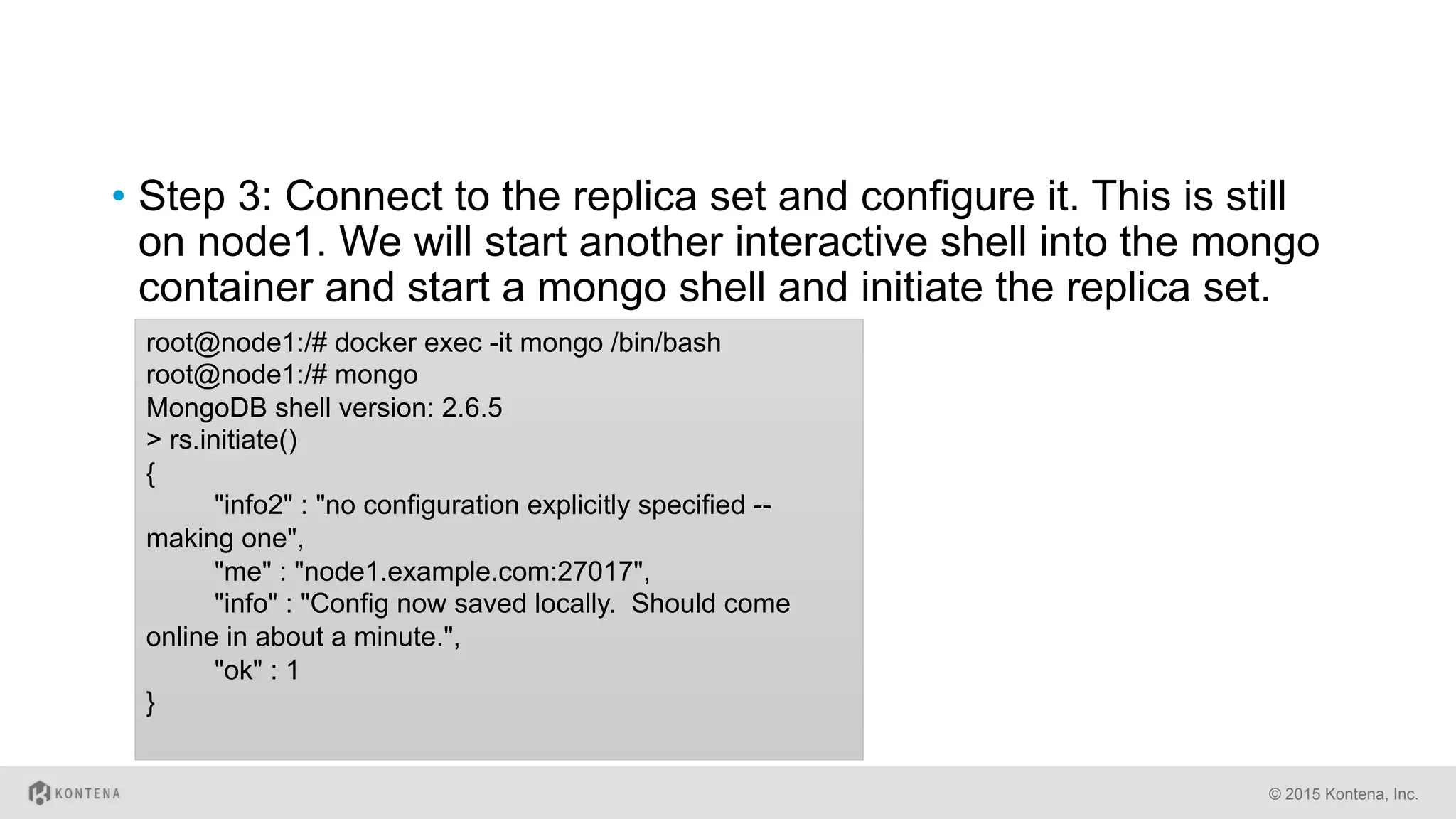

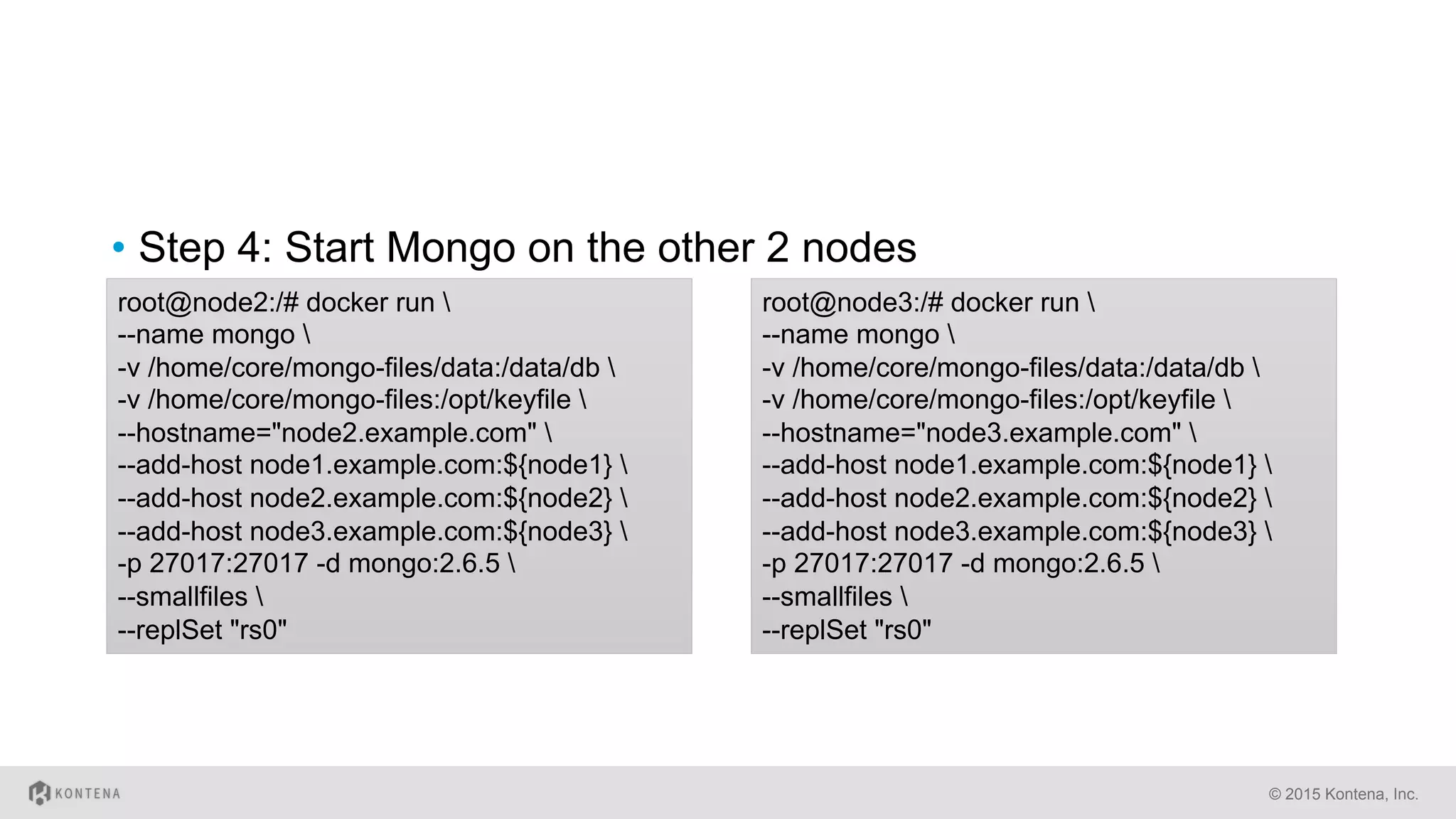

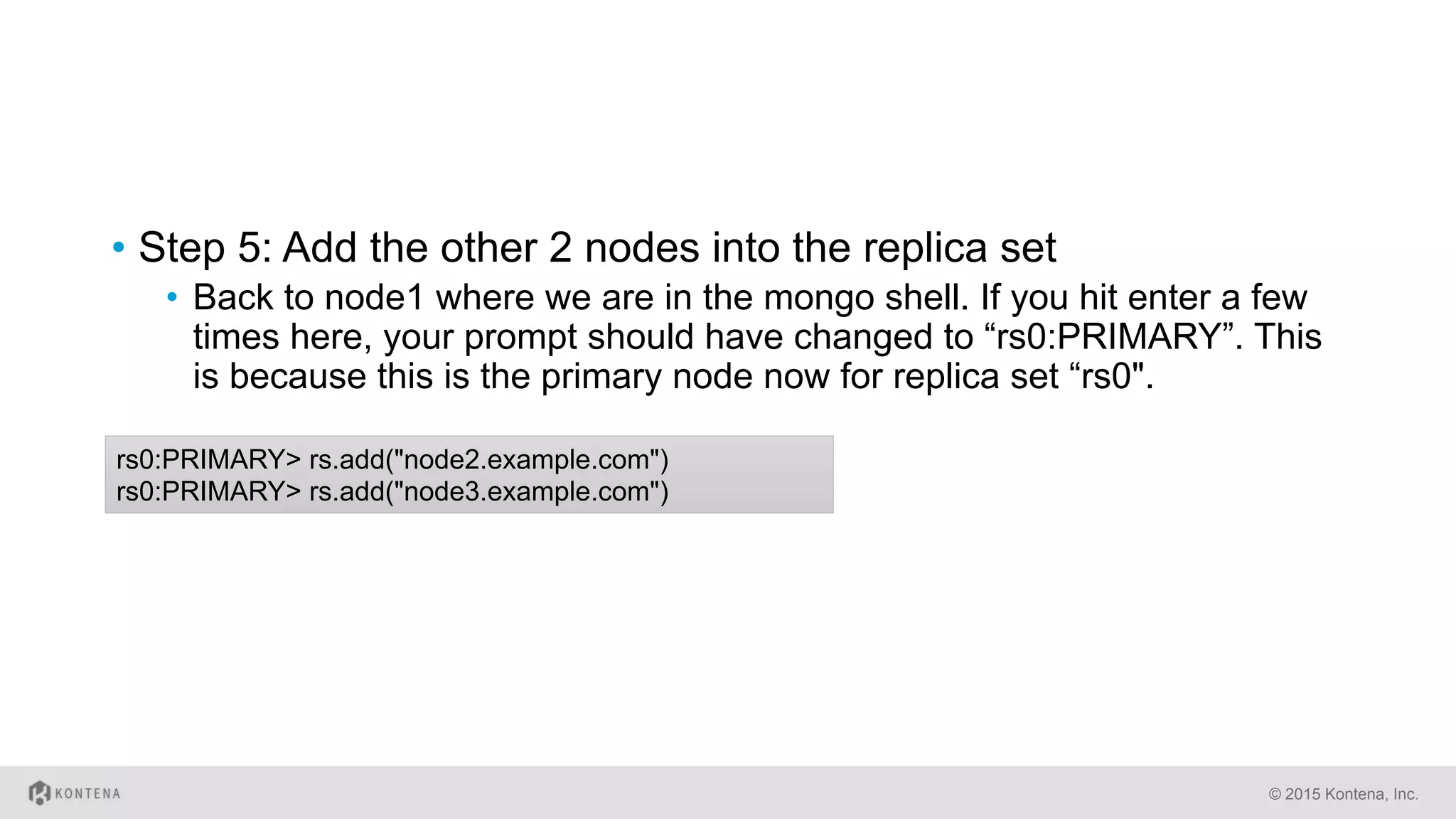

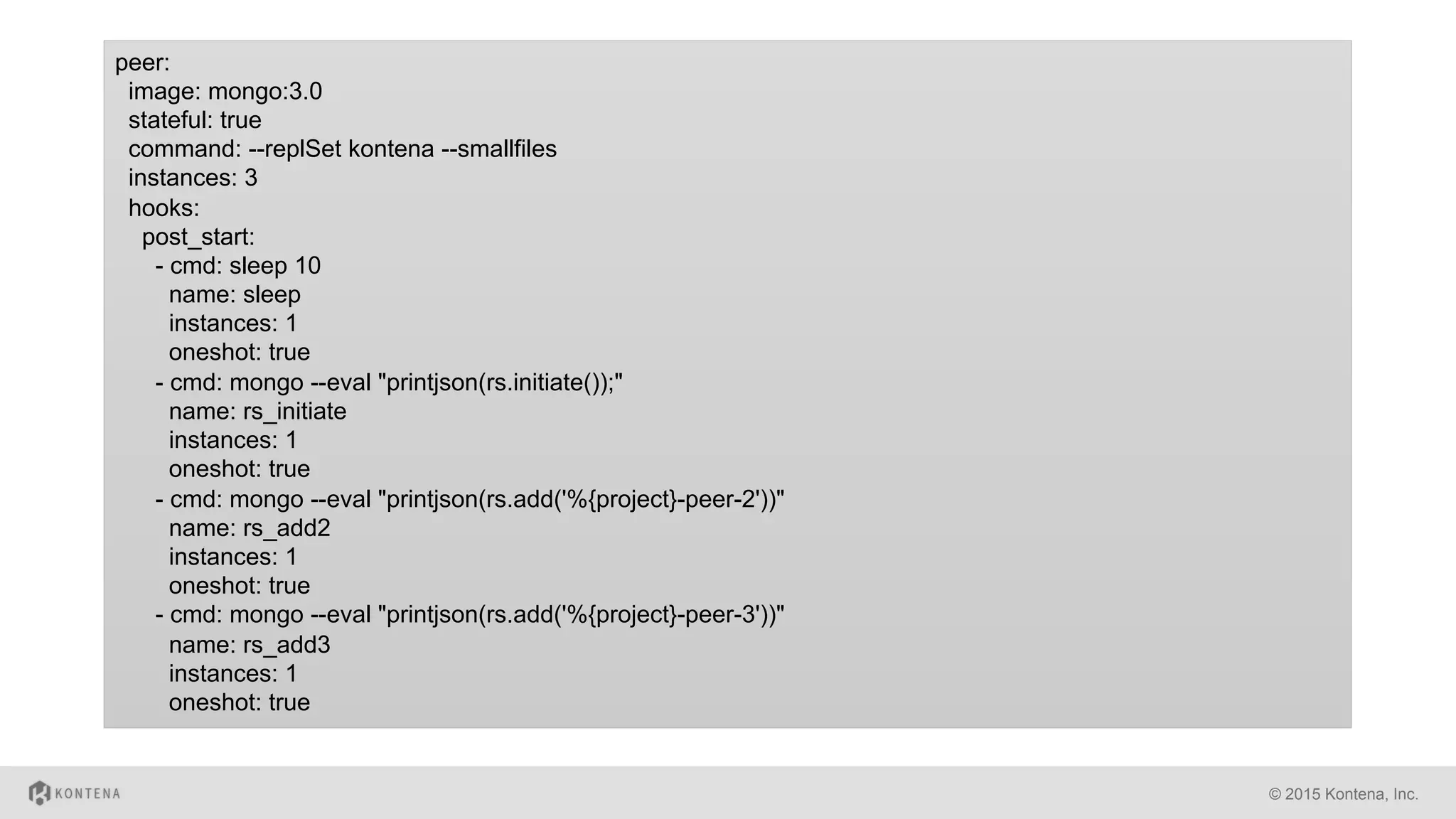

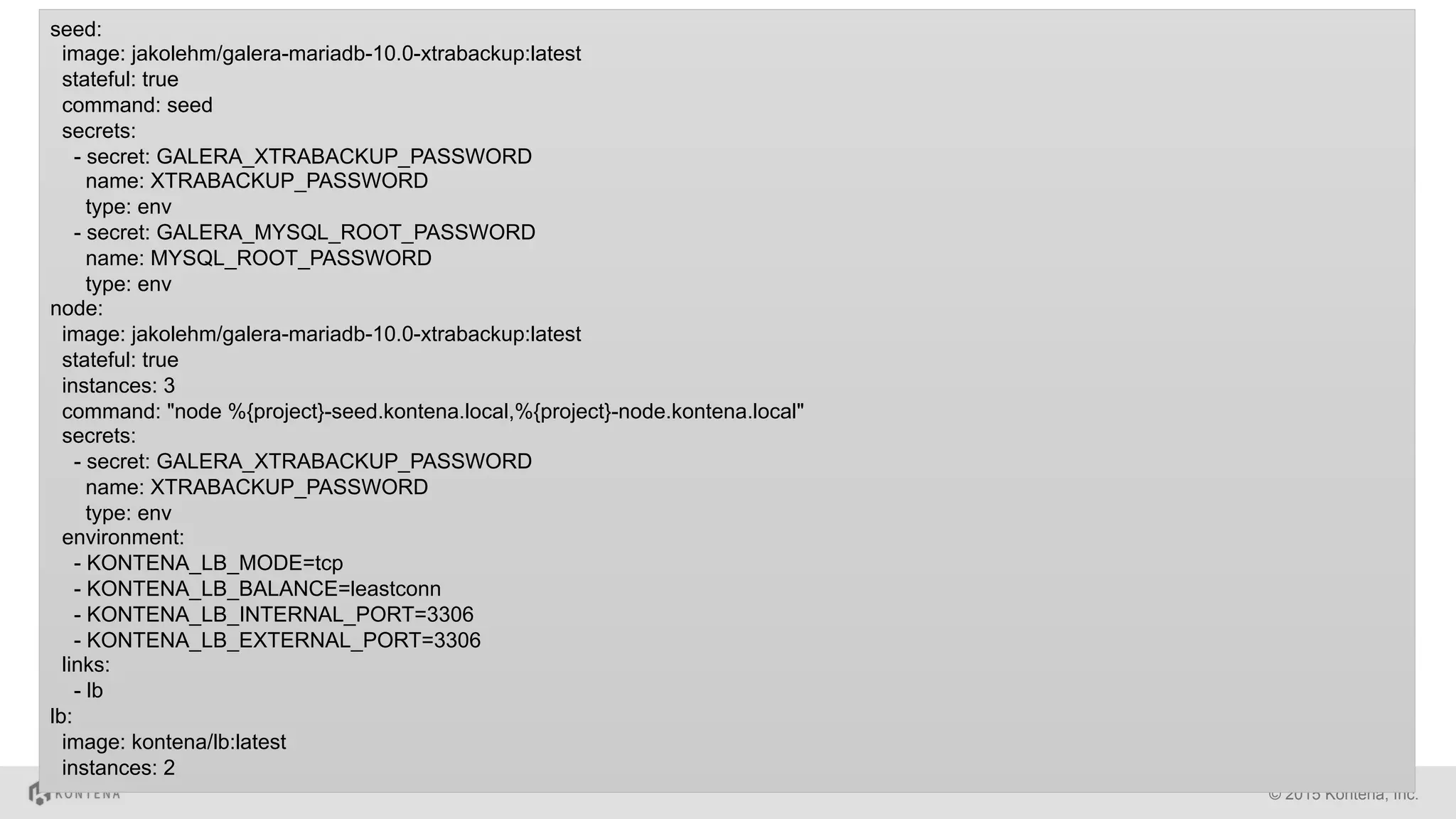

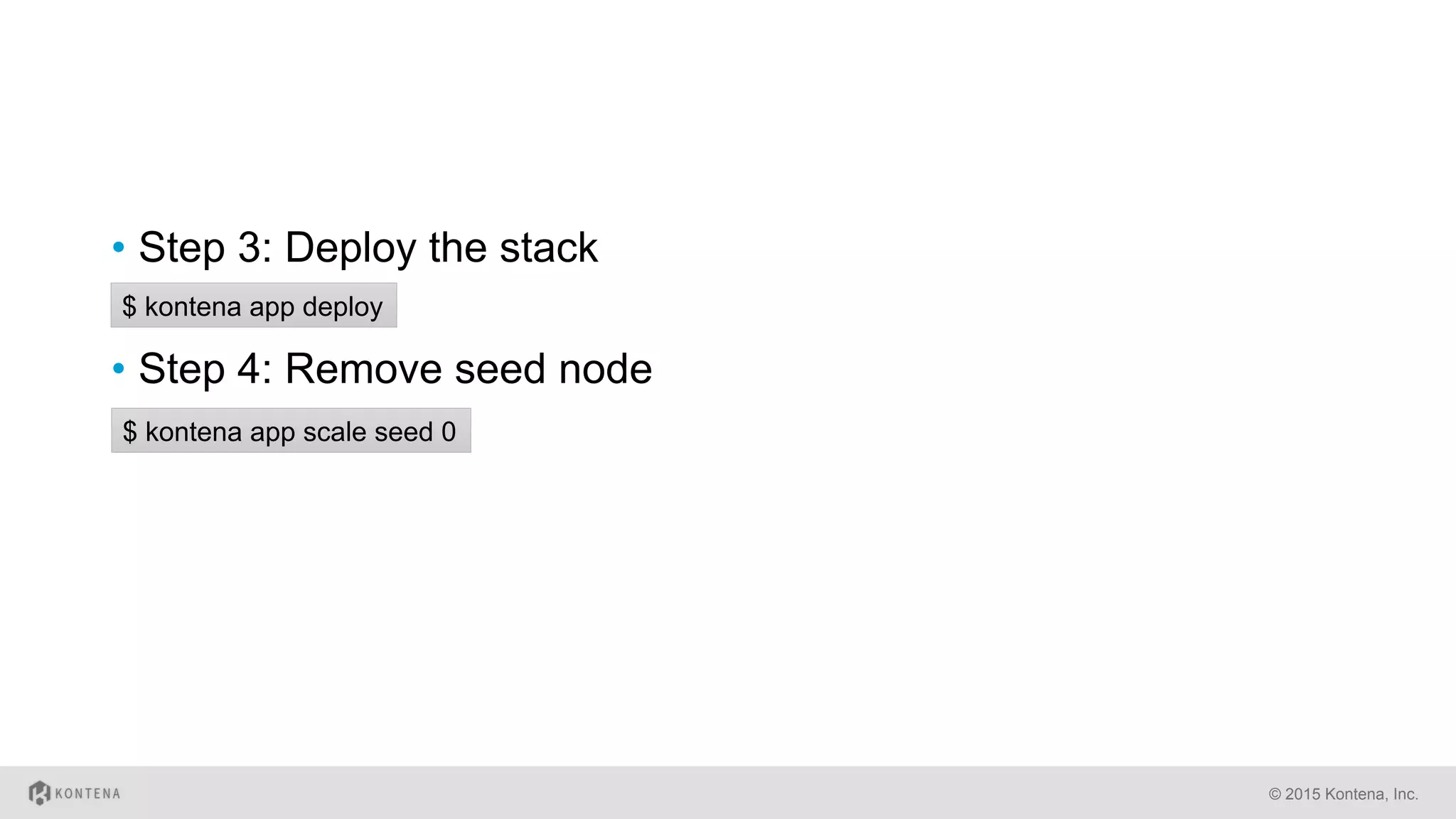

This document discusses running stateful applications in Docker containers. It describes three common approaches: mounting host directories, creating dedicated data volumes, and using Docker volume drivers. It then explains how the Kontena platform automates and simplifies managing stateful services with Docker by automatically creating data volumes and handling data persistence when services are rescheduled. Finally, it provides examples of running MongoDB and MariaDB clusters with stateful persistence using Kontena.
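As a rough illustration of the three approaches named above, the following Python sketch builds the corresponding `docker` command lines without running them. The image name `mongo`, the paths `/srv/mongo-data` and `/data/db`, the container name `mongo-data`, the volume name `mongo-vol`, and the driver name `flocker` are all assumptions chosen for illustration and do not come from the slides. The Kontena-specific automation is not shown.

```python
import shlex


def host_mount(image, host_dir, container_dir):
    """Approach 1: bind-mount a host directory into the container."""
    return ["docker", "run", "-d", "-v", f"{host_dir}:{container_dir}", image]


def data_volume_container(image, name, container_dir):
    """Approach 2: a dedicated data container whose volume is shared
    with the service container through --volumes-from."""
    create = ["docker", "create", "-v", container_dir, "--name", name,
              image, "/bin/true"]
    run = ["docker", "run", "-d", "--volumes-from", name, image]
    return [create, run]


def volume_driver(image, driver, volume, container_dir):
    """Approach 3: a named volume backed by a volume driver plugin."""
    return ["docker", "run", "-d", "--volume-driver", driver,
            "-v", f"{volume}:{container_dir}", image]


if __name__ == "__main__":
    # All names below are illustrative assumptions, not values from the slides.
    commands = [host_mount("mongo", "/srv/mongo-data", "/data/db")]
    commands += data_volume_container("mongo", "mongo-data", "/data/db")
    commands.append(volume_driver("mongo", "flocker", "mongo-vol", "/data/db"))
    for argv in commands:
        print(shlex.join(argv))
```

In the second approach the data container never needs to run: it exists only to own the volume, so the service container can be removed and recreated without losing the data.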