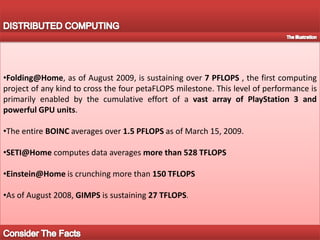

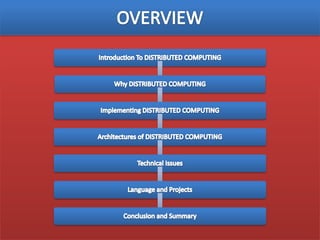

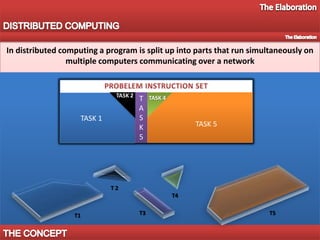

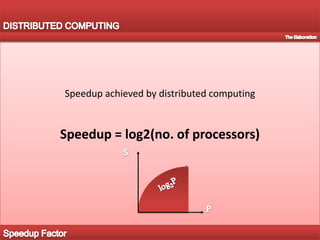

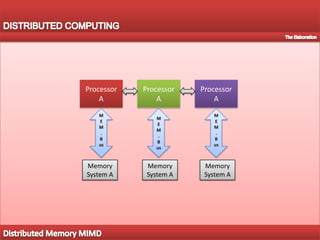

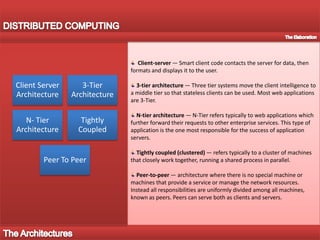

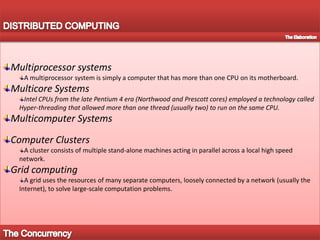

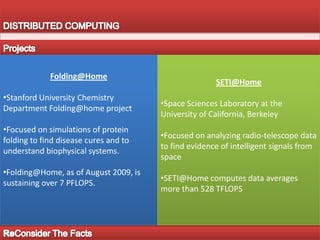

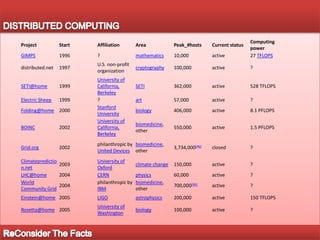

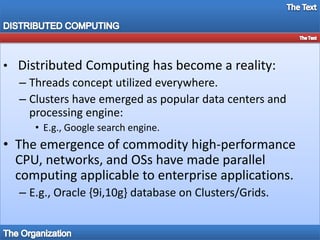

The document discusses distributed computing and highlights the advancements and performance metrics of projects such as Folding@home and SETI@home. These projects combine the processing power of many machines to reach very large aggregate computing capacity. The document defines distributed computing, explains why it matters, and outlines its main challenges, emphasizing how different architectures and models increase processing capability. Key points include the technical aspects of system design, the types of architectures in use, and the need for effective protocols and communication systems to keep a distributed system reliable and efficient.