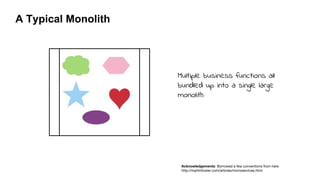

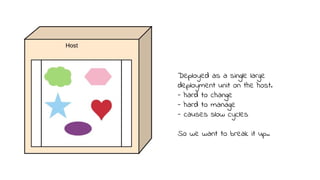

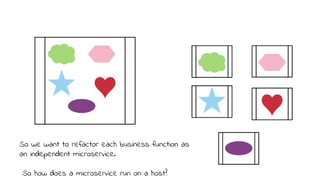

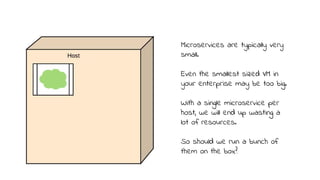

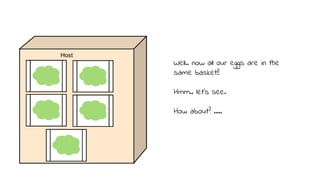

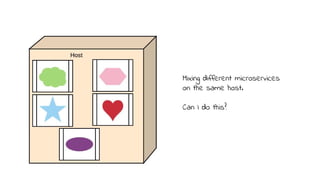

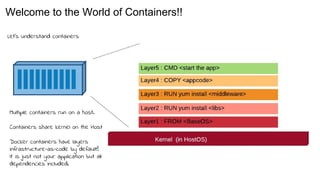

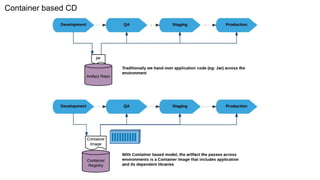

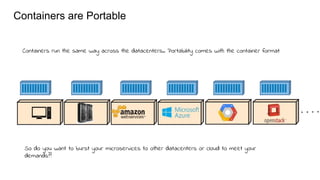

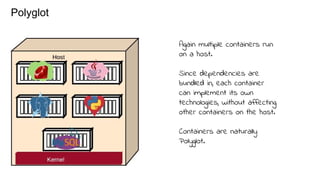

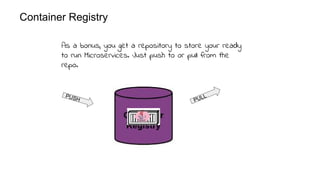

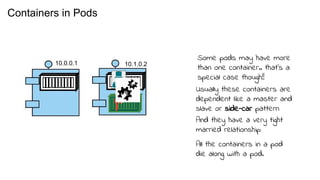

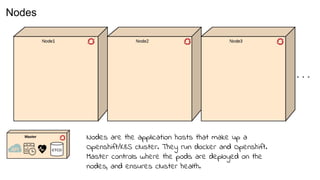

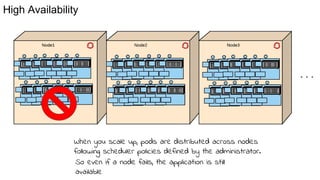

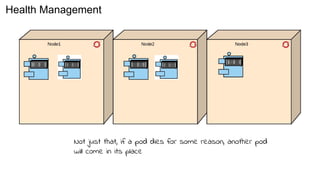

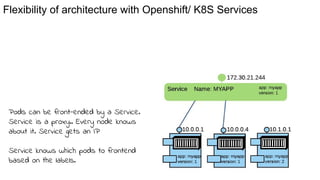

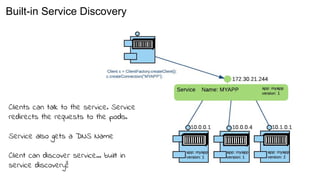

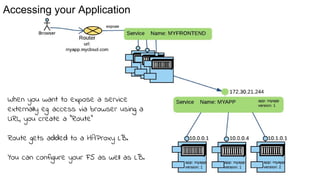

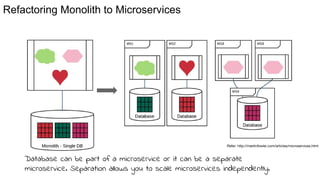

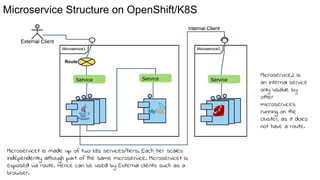

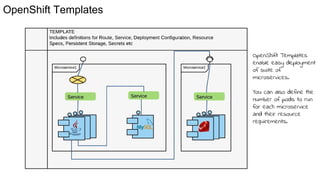

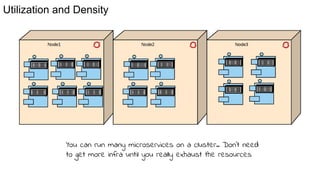

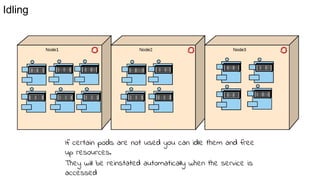

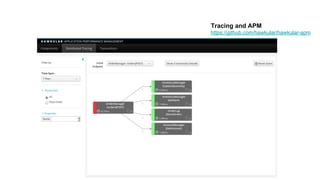

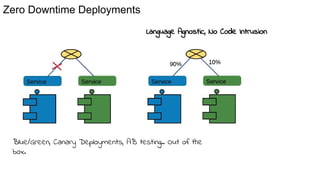

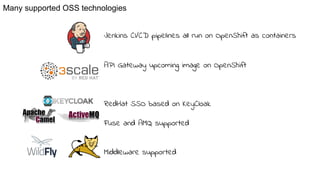

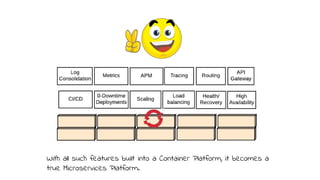

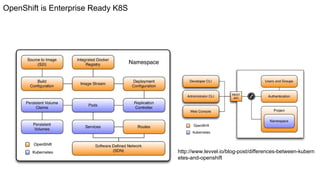

The document outlines the benefits of using containers for microservices, particularly for deployment and scalability on platforms such as OpenShift and Kubernetes. It discusses how containers enable independent, portable, and fast deployment of microservices, and covers platform features such as health management and service discovery. It also describes a structured approach to moving from monoliths to microservices, emphasizing flexibility, resource management, and built-in support for application monitoring and deployment strategies.
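
As one illustration of the health management mentioned above, the following is a minimal sketch of how a containerized microservice might expose endpoints for a platform's liveness and readiness checks. It is not taken from the slides: the paths `/healthz` and `/readyz`, the `STATE` flag, the port, and the helper `probe_response` are all assumptions chosen for illustration. Kubernetes HTTP probes treat any status from 200 to 399 as success, so the sketch returns 503 while the service is not ready.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer
from typing import Tuple

# Hypothetical readiness flag; a real service would set this once its
# dependencies (database, caches, downstream services) are reachable.
STATE = {"ready": False}


def probe_response(path: str, ready: bool) -> Tuple[int, str]:
    """Map a probe path to an HTTP status code and a short body."""
    if path == "/healthz":  # liveness: the process is up and responding
        return 200, "ok"
    if path == "/readyz":  # readiness: is it safe to send traffic here?
        return (200, "ready") if ready else (503, "not ready")
    return 404, "not found"


class ProbeHandler(BaseHTTPRequestHandler):
    """Serves the two probe endpoints as plain text."""

    def do_GET(self) -> None:
        status, body = probe_response(self.path, STATE["ready"])
        payload = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port: int = 8080) -> None:
    """Mark the service ready and block, serving probe requests."""
    STATE["ready"] = True
    HTTPServer(("0.0.0.0", port), ProbeHandler).serve_forever()
```

Calling `serve()` starts the listener. On the platform side, a liveness probe would be pointed at `/healthz` and a readiness probe at `/readyz`, so that a failing instance can be restarted or temporarily removed from service discovery.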