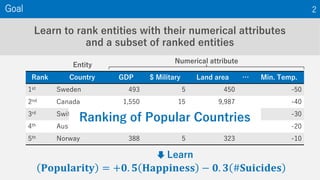

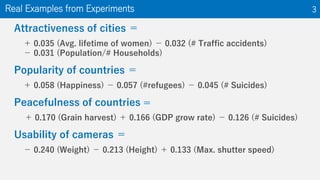

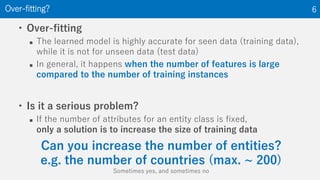

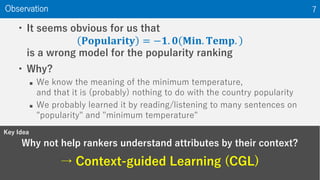

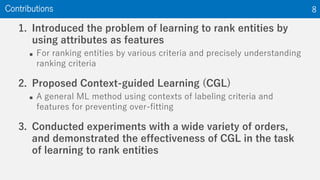

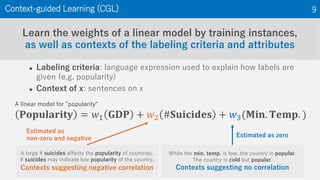

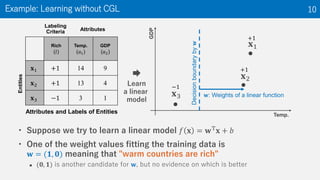

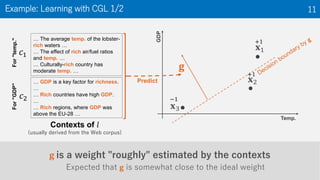

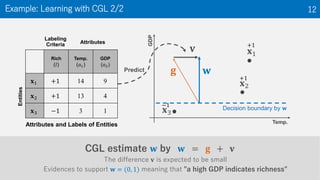

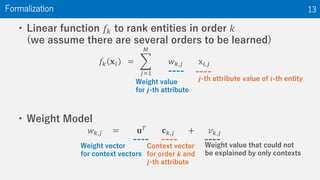

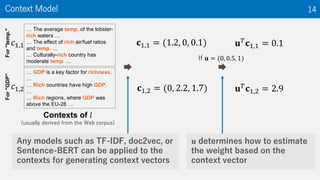

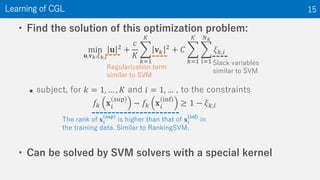

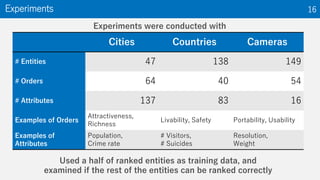

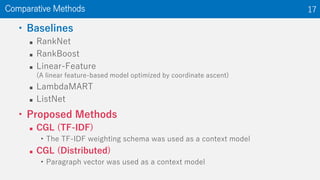

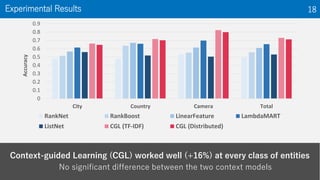

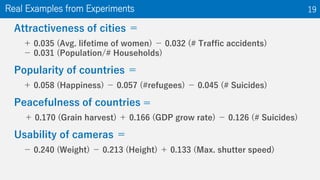

This document introduces context-guided learning (CGL), a method for ranking entities by their numerical attributes with the help of contextual information. It highlights the risk of overfitting when training data is limited and explains how CGL improves ranking accuracy by leveraging contextual factors. Experimental results show that CGL significantly improves learning in ranking tasks across a variety of entity types and ranking criteria.