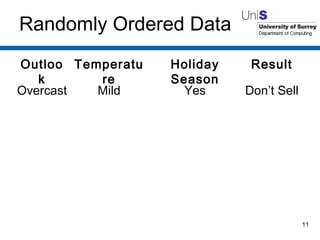

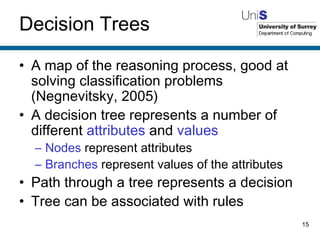

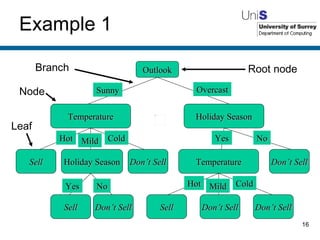

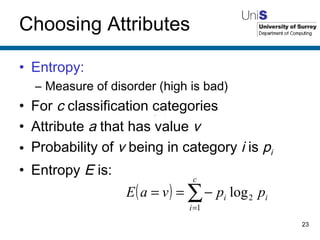

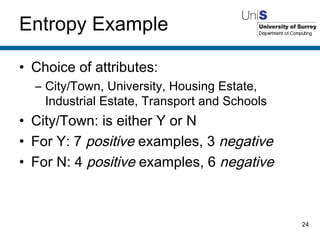

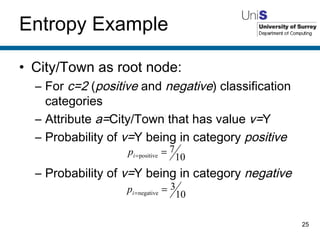

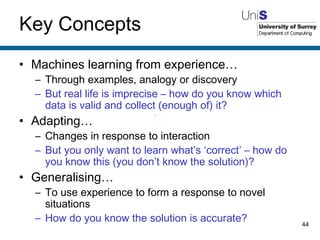

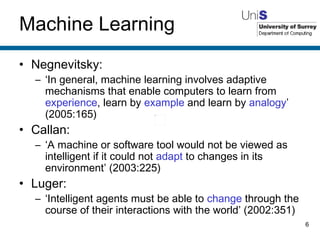

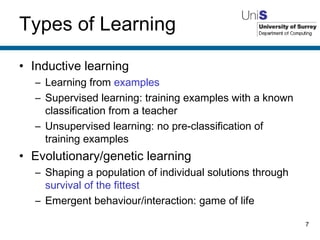

The document introduces machine learning techniques. It describes how machines can learn from examples, from experience, and through adaptation, and it evaluates methods for acquiring and representing knowledge, including decision trees, neural networks, and genetic algorithms. These techniques can learn from experience and generalize beyond the examples they were given, but they also have drawbacks: in particular, there is no way to know whether the learned knowledge is completely correct.
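
As a rough illustration of learning from examples, the sketch below fits a one-level decision tree (a "decision stump") to a handful of labelled values. It is not taken from the slides: the function names `learn_stump` and `predict`, the training data, and the "true" boundary of 5.5 are all hypothetical, chosen only to show that a rule can fit every example and still be wrong on unseen cases.

```python
from typing import List, Tuple

Example = Tuple[float, bool]  # (feature value, label); hypothetical data format


def learn_stump(examples: List[Example]) -> float:
    """Return the threshold that misclassifies the fewest training examples.

    The learned rule is: predict True when value >= threshold.
    """
    values = sorted({v for v, _ in examples})
    # Candidate thresholds: the smallest value, midpoints between
    # neighbouring values, and one point above the largest value.
    candidates = (
        [values[0]]
        + [(a + b) / 2 for a, b in zip(values, values[1:])]
        + [values[-1] + 1.0]
    )

    def errors(t: float) -> int:
        return sum((v >= t) != label for v, label in examples)

    return min(candidates, key=errors)


def predict(threshold: float, value: float) -> bool:
    return value >= threshold


# Hypothetical training set: small values are False, large values are True.
training = [(1.0, False), (2.0, False), (3.0, False),
            (6.0, True), (7.0, True), (8.0, True)]
threshold = learn_stump(training)

# The rule generalizes to values it never saw during training...
print(threshold, predict(threshold, 7.5))

# ...but nothing in the examples says where the real boundary lies.
# If the (assumed) true concept were value >= 5.5, the learned rule
# would misclassify 5.0 even though it fits every training example.
true_label = 5.0 >= 5.5
print(predict(threshold, 5.0) == true_label)
```

The stump is consistent with all six examples, yet any threshold between 3 and 6 would be equally consistent, which is the drawback the summary points to.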

![What is Learning? ‘The action of receiving instruction or acquiring knowledge’ ‘A process which leads to the modification of behaviour or the acquisition of new abilities or responses, and which is additional to natural development by growth or maturation’ Oxford English Dictionary (1989). Learning, vbl. n. 2nd Edition. http://dictionary.oed.com/cgi/entry/50131042?single=1&query_type=word&queryword=learning&first=1&max_to_show=10 . [Accessed 16-10-06].](https://image.slidesharecdn.com/week9machinelearningppt3339/85/week9_Machine_Learning-ppt-5-320.jpg)

![Game of Life. Wikipedia (2006). Image:Gospers glider gun.gif - Wikipedia, the free encyclopedia. http://en.wikipedia.org/wiki/Image:Gospers_glider_gun.gif . [Accessed 16-10-06].](https://image.slidesharecdn.com/week9machinelearningppt3339/85/week9_Machine_Learning-ppt-8-320.jpg)

![Why? Knowledge Engineering Bottleneck: ‘Cost and difficulty of building expert systems using traditional […] techniques’ (Luger 2002:351); Complexity of task / amount of data; Other techniques fail or are computationally expensive; Problems that cannot be defined; Discovery of patterns / data mining](https://image.slidesharecdn.com/week9machinelearningppt3339/85/week9_Machine_Learning-ppt-9-320.jpg)