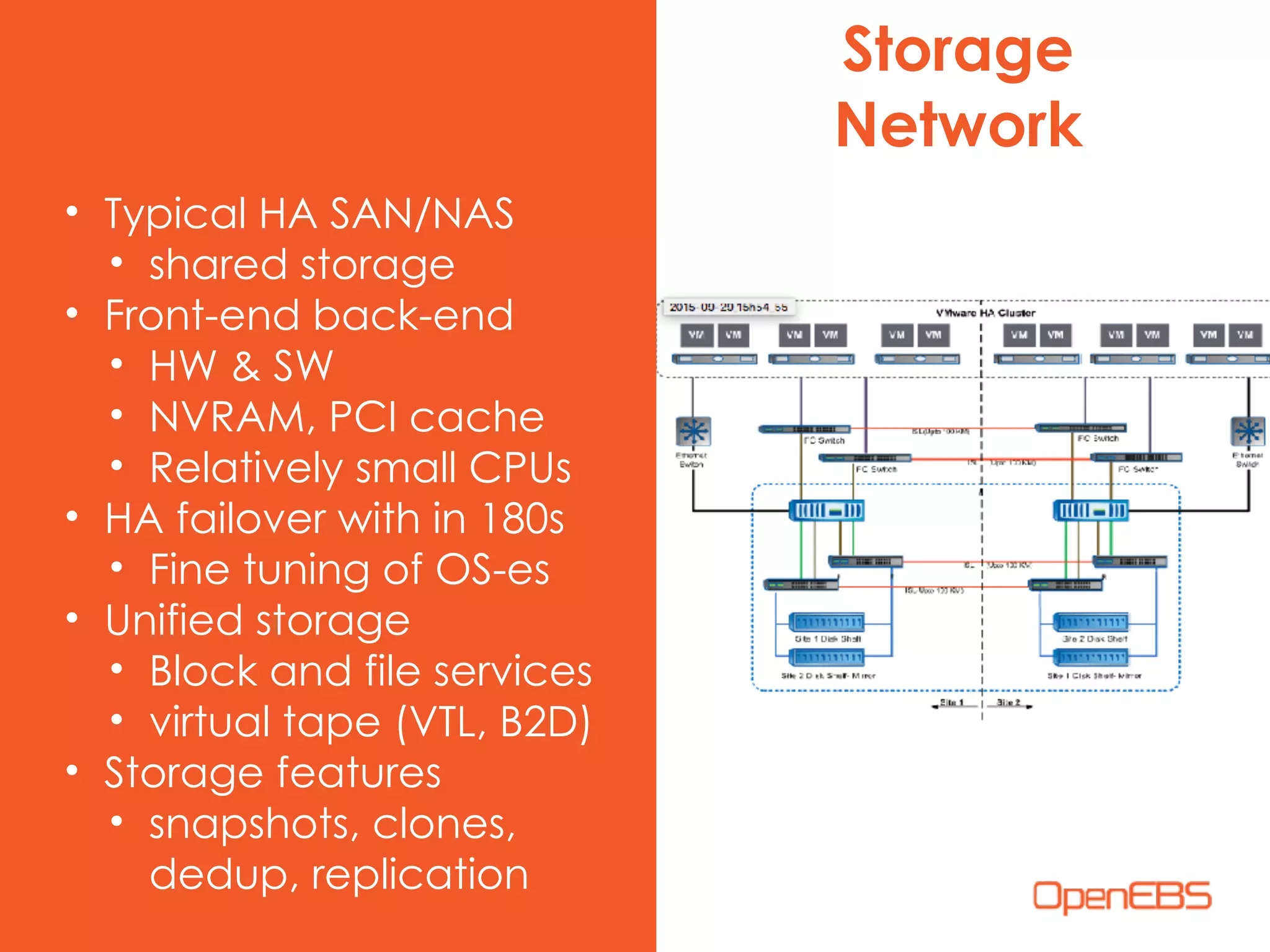

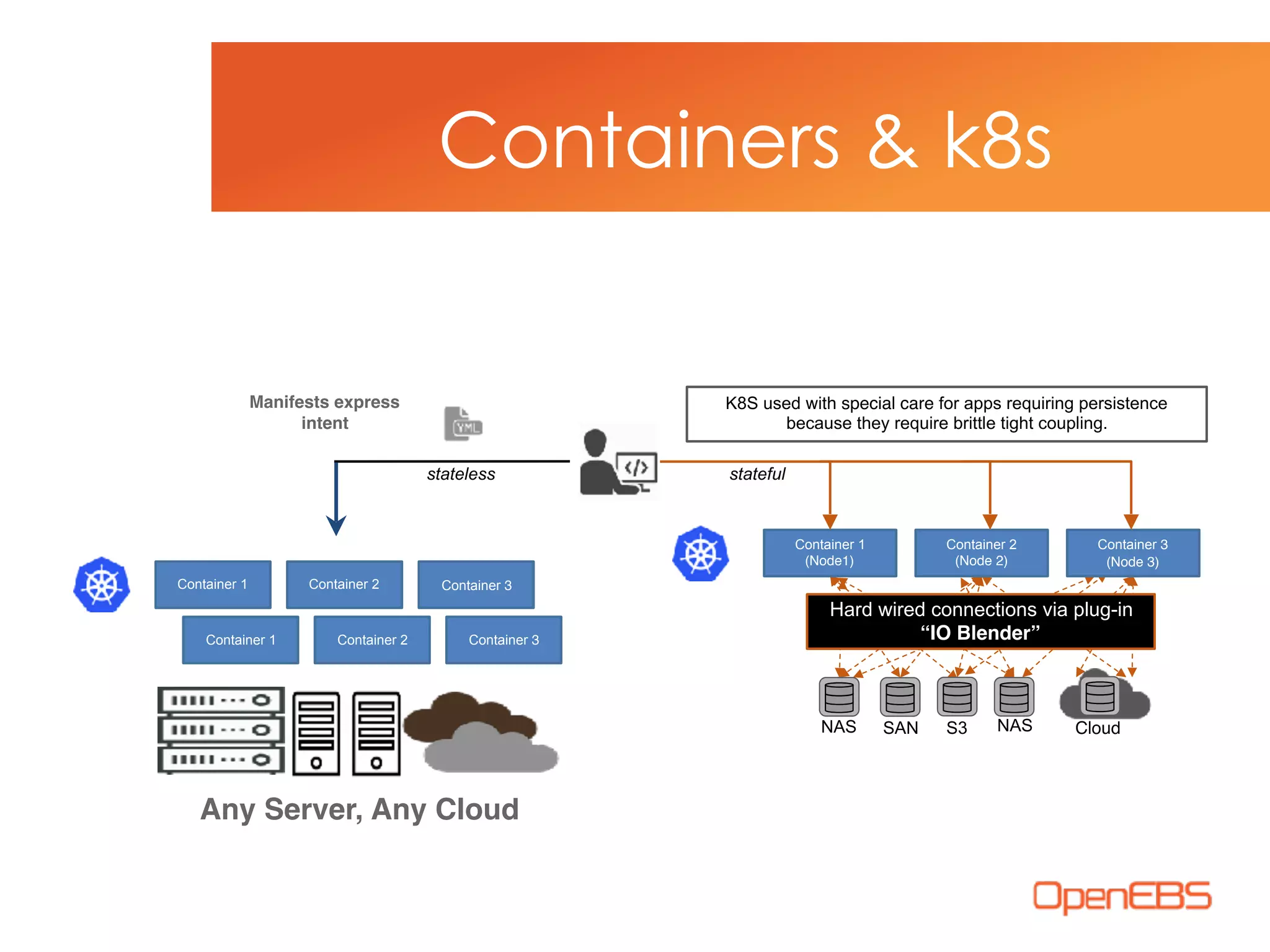

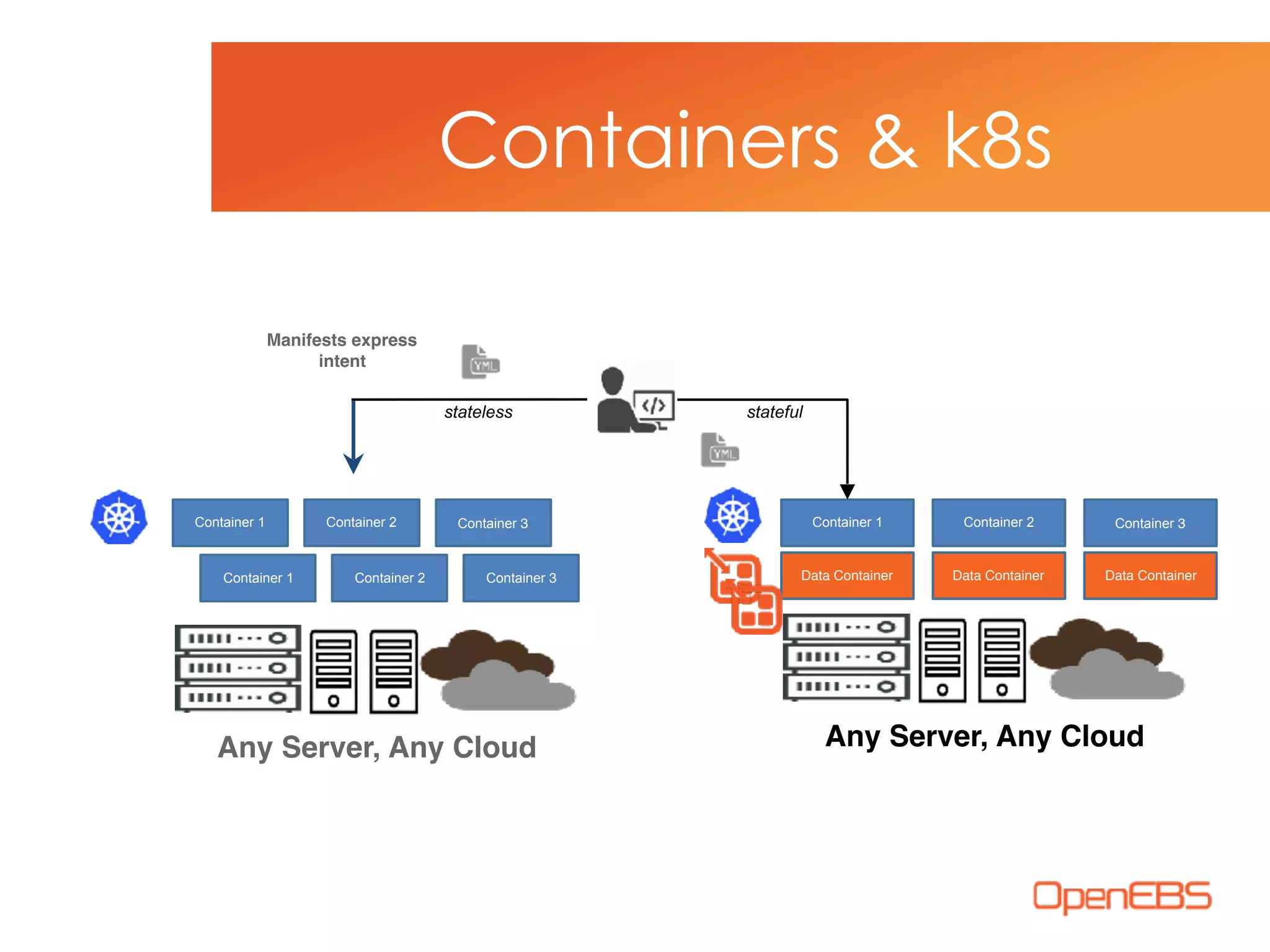

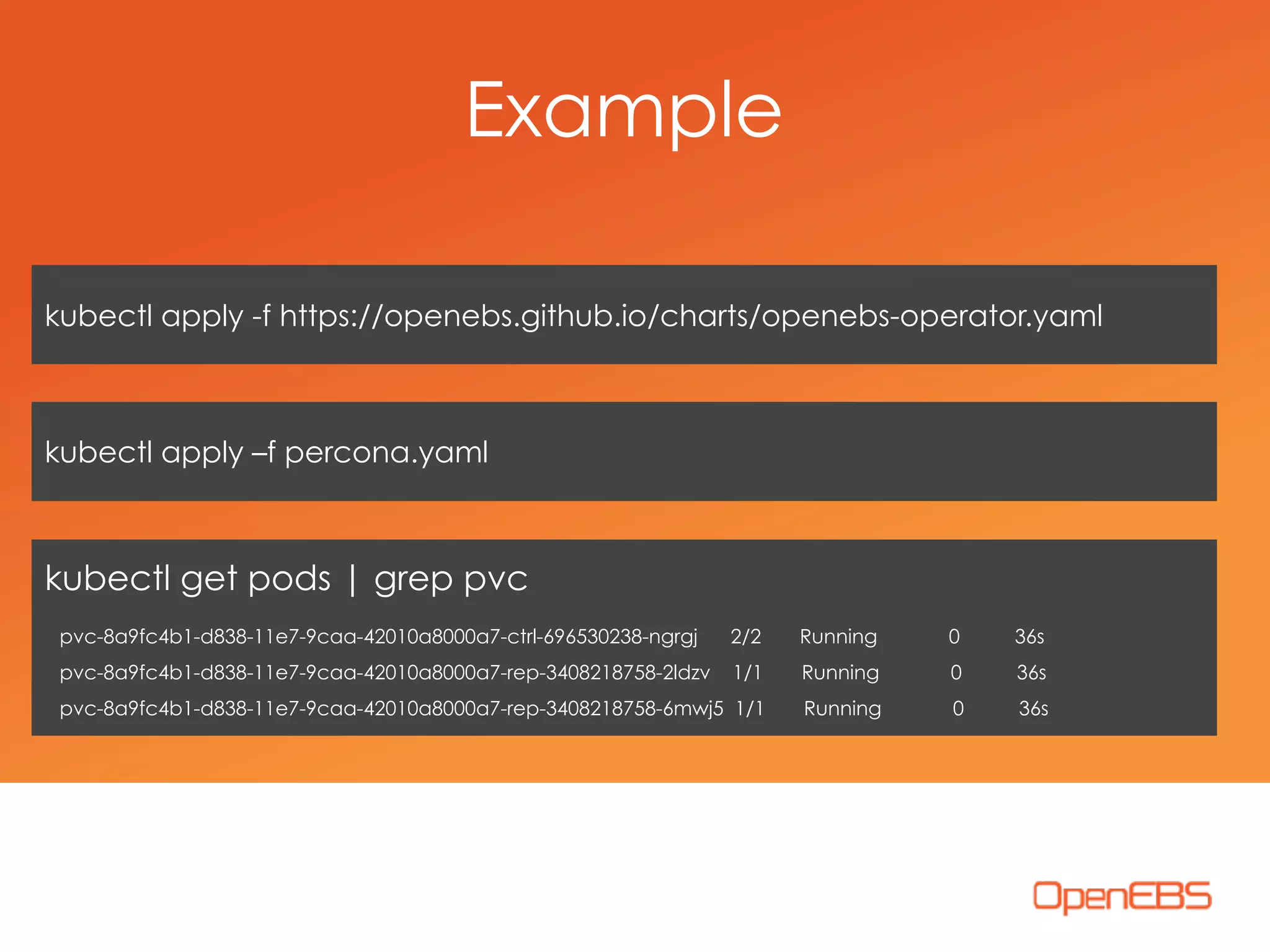

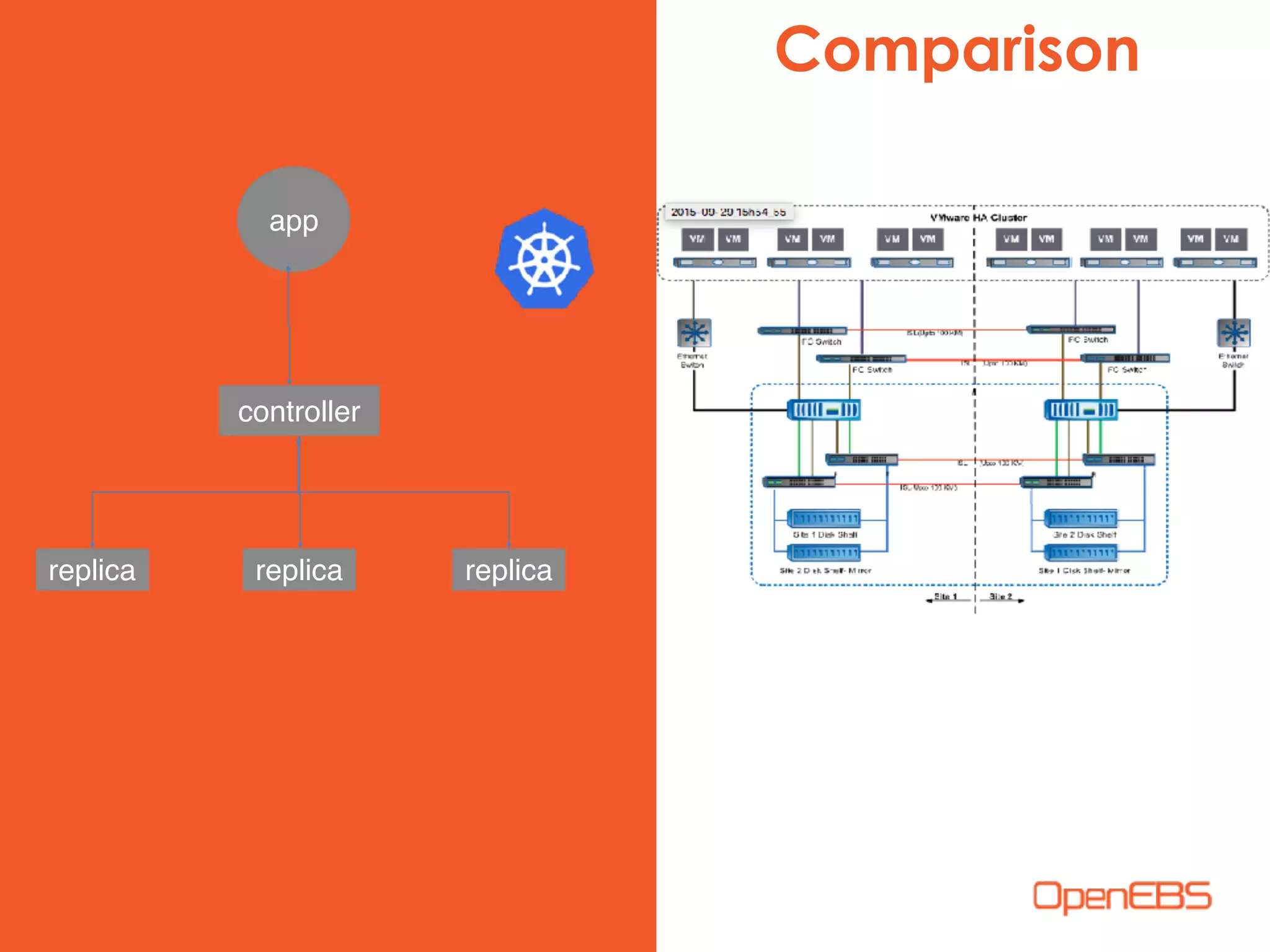

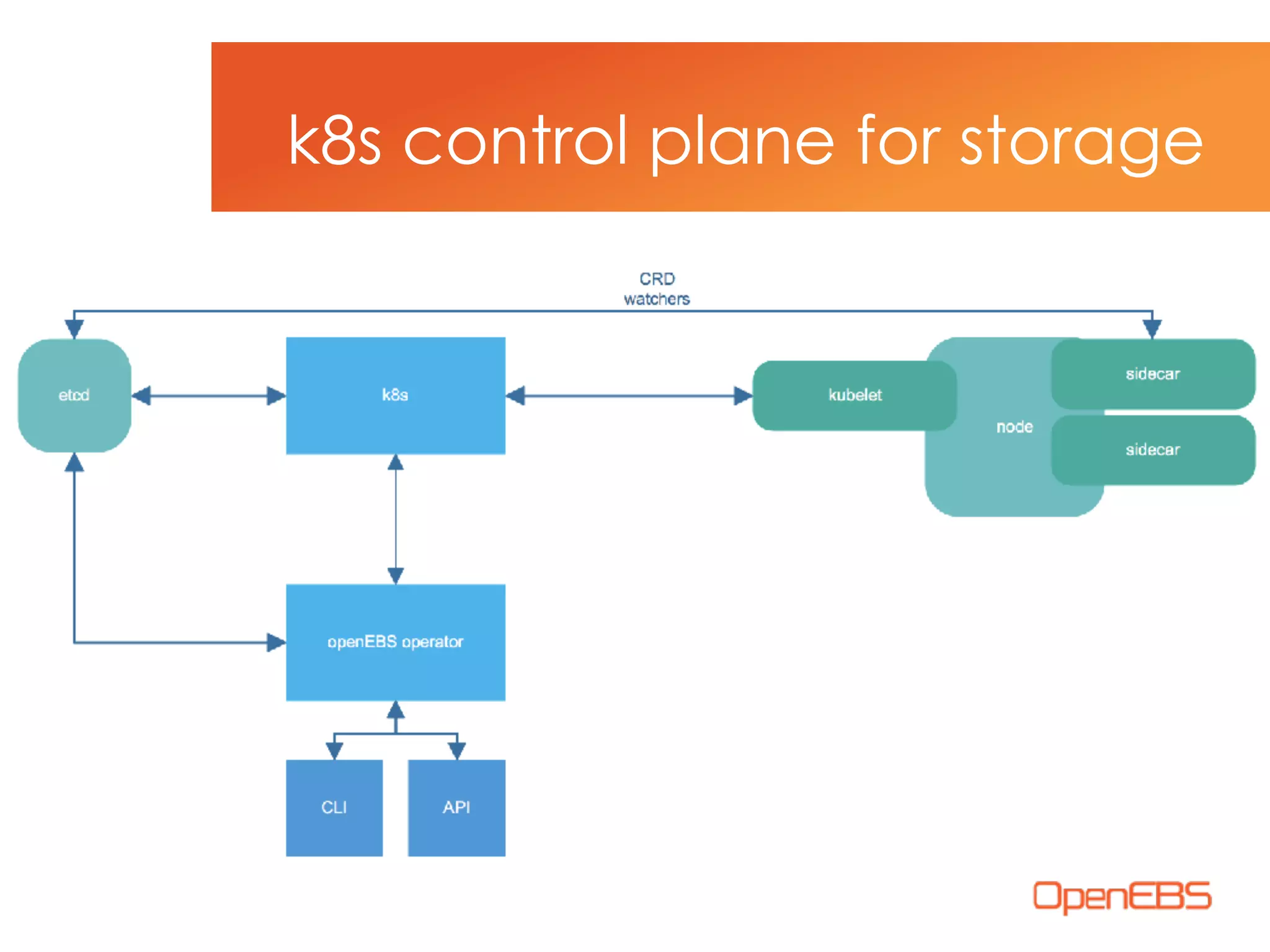

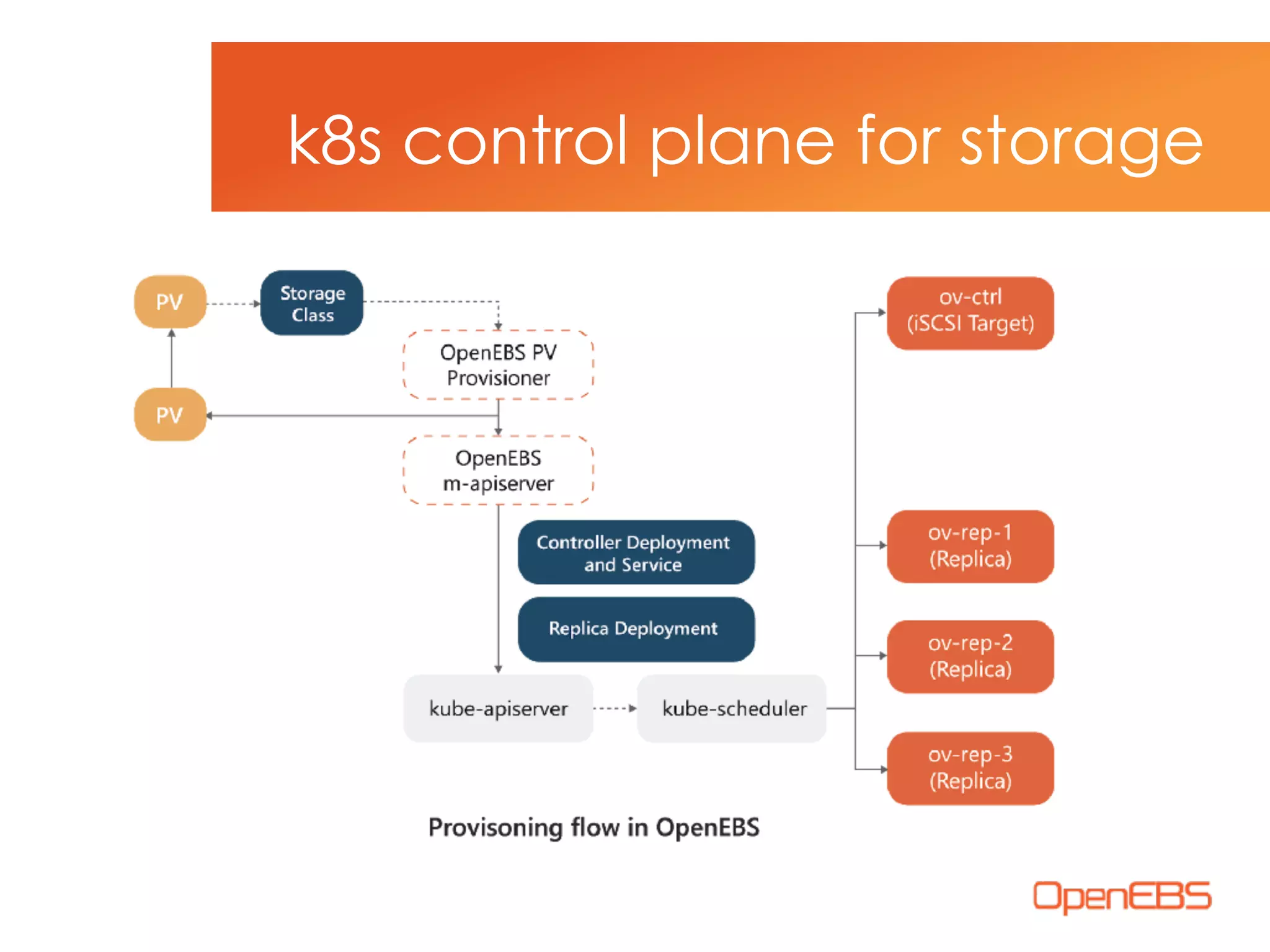

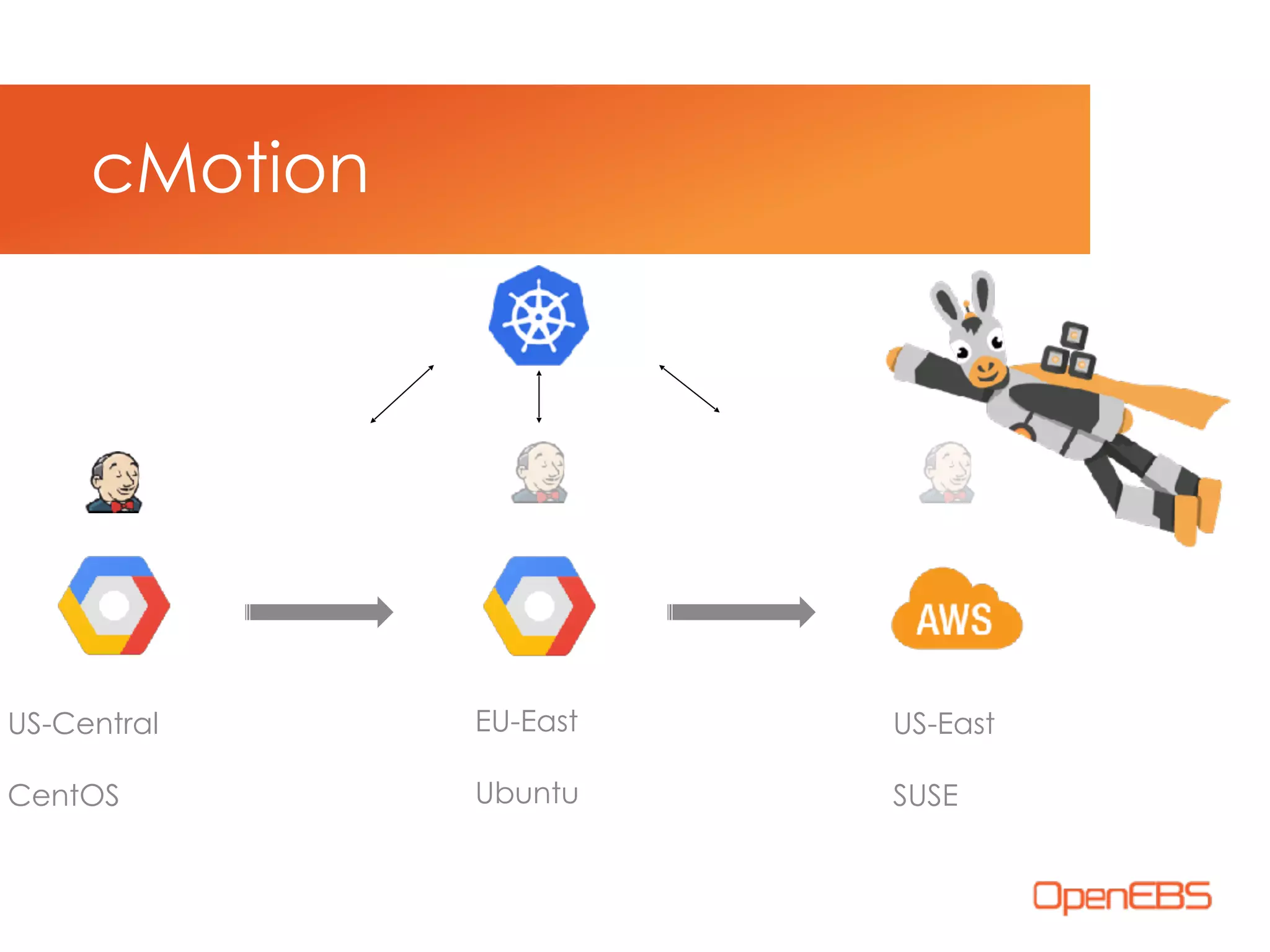

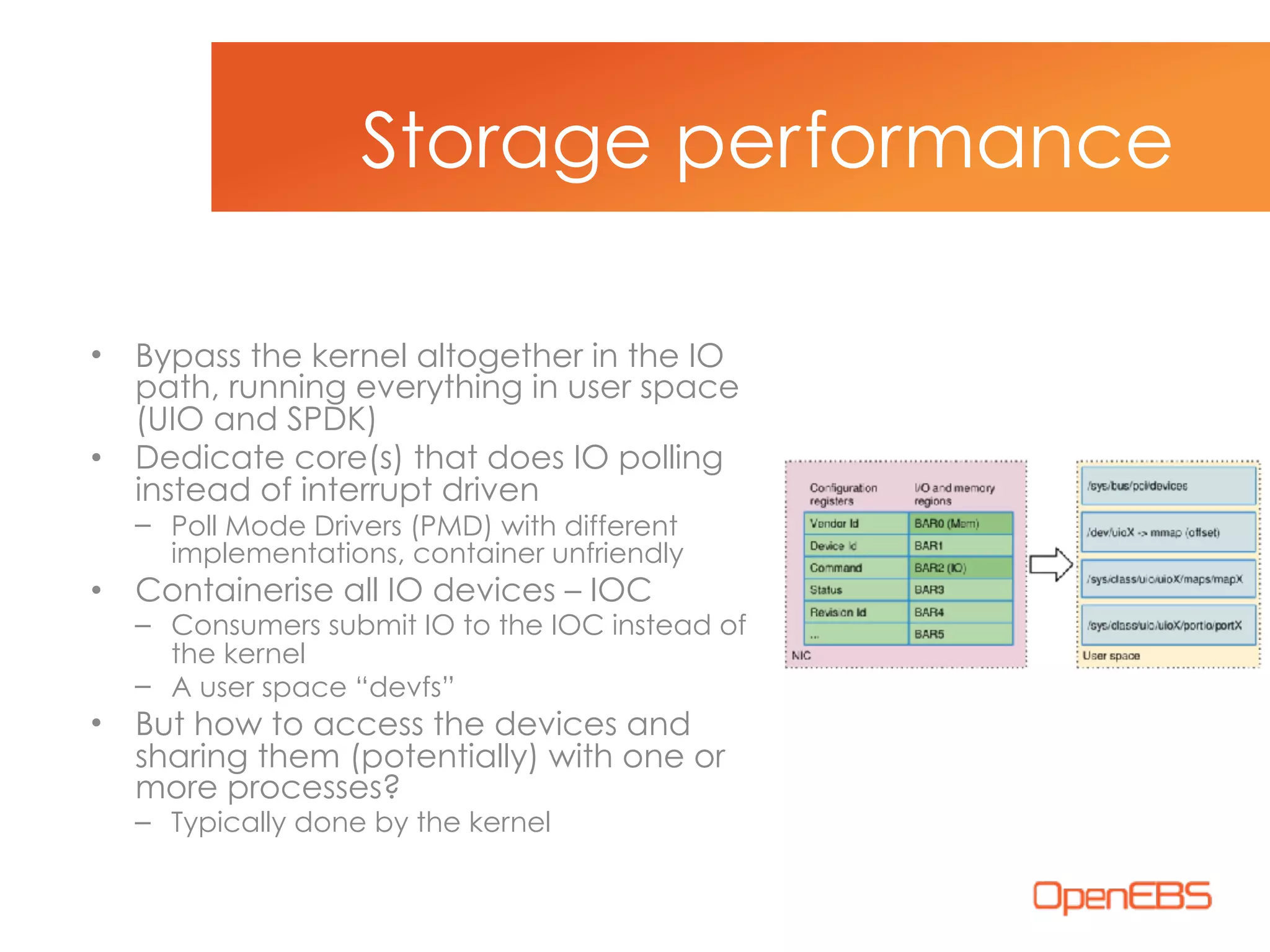

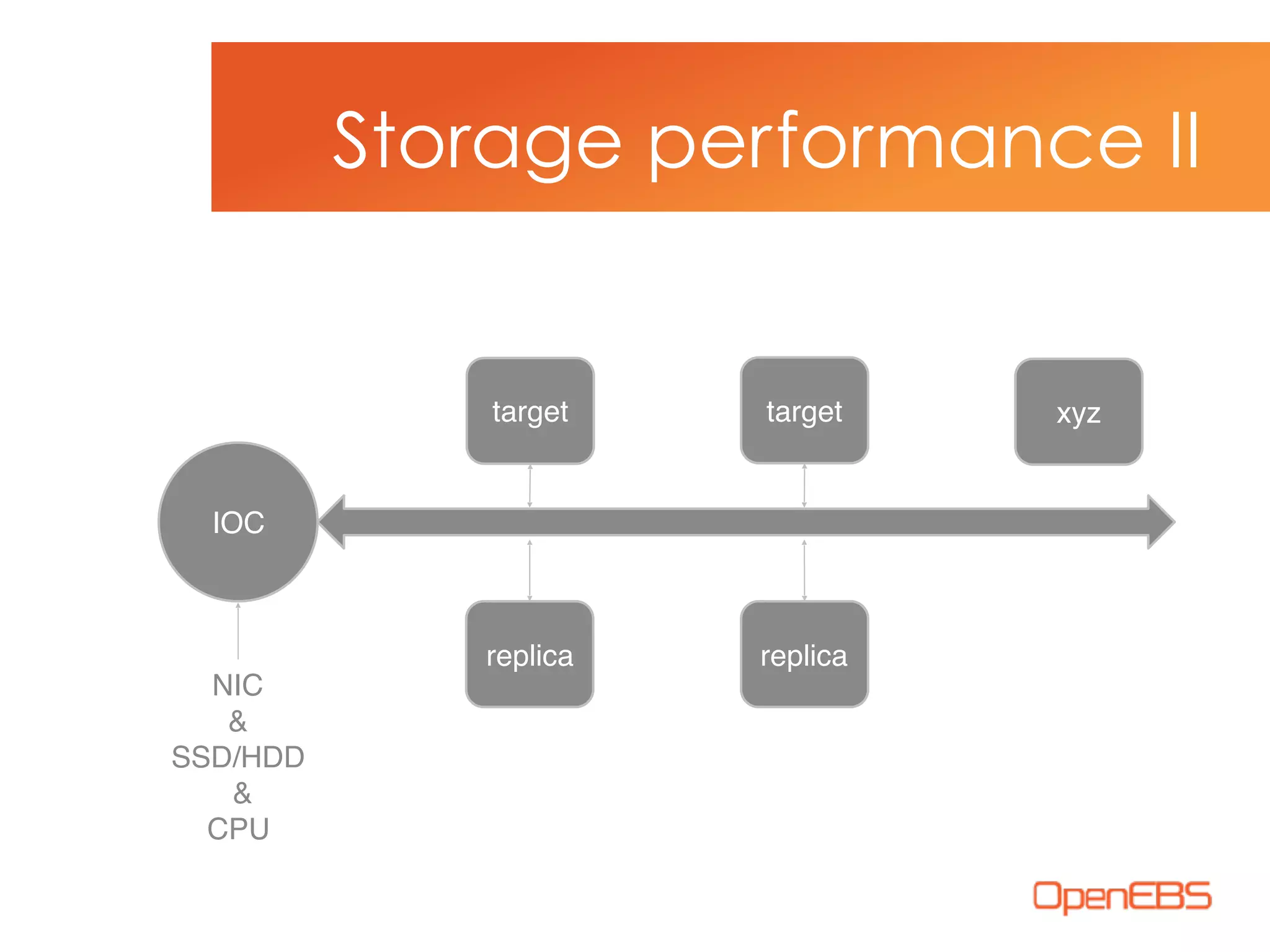

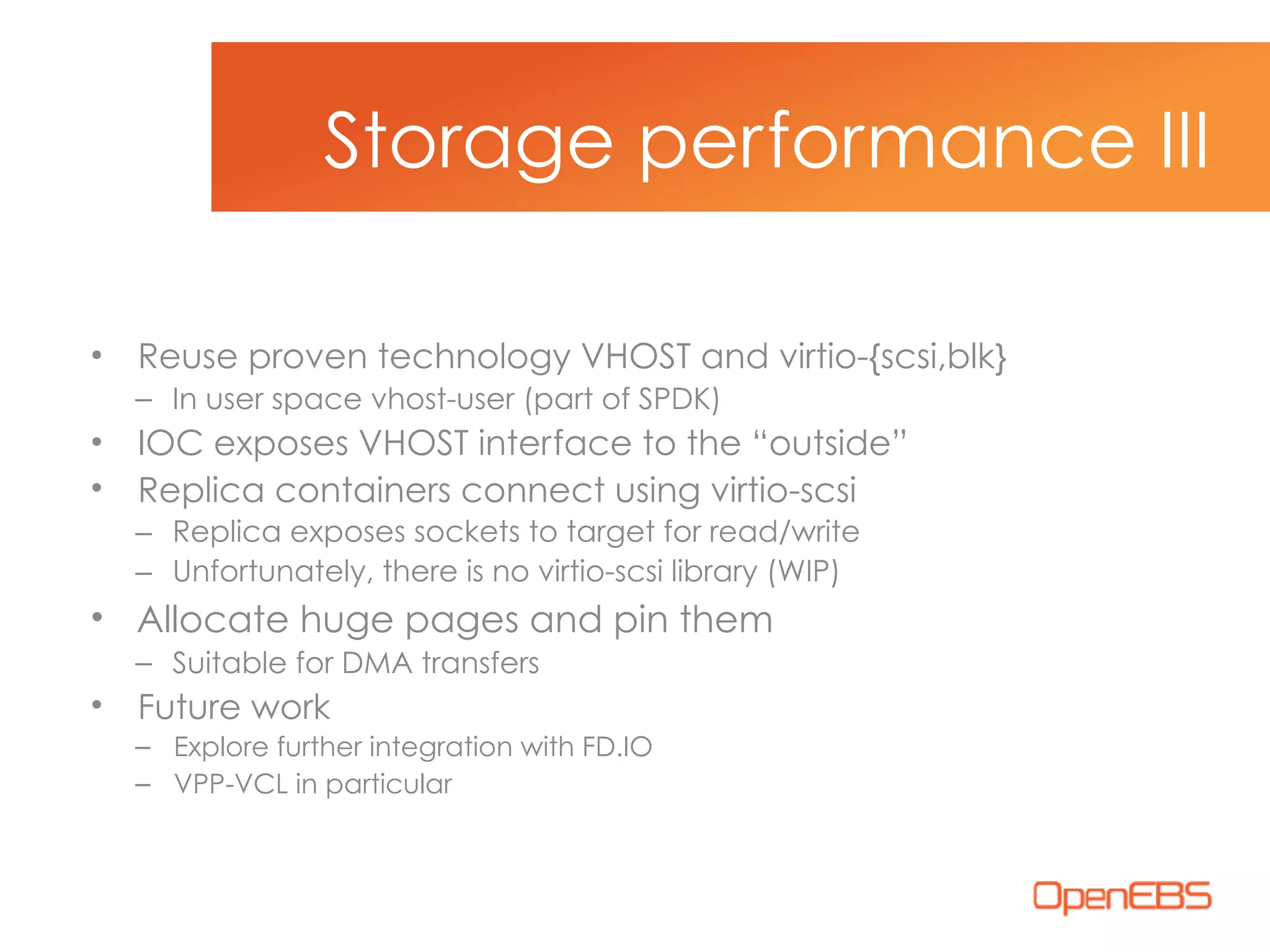

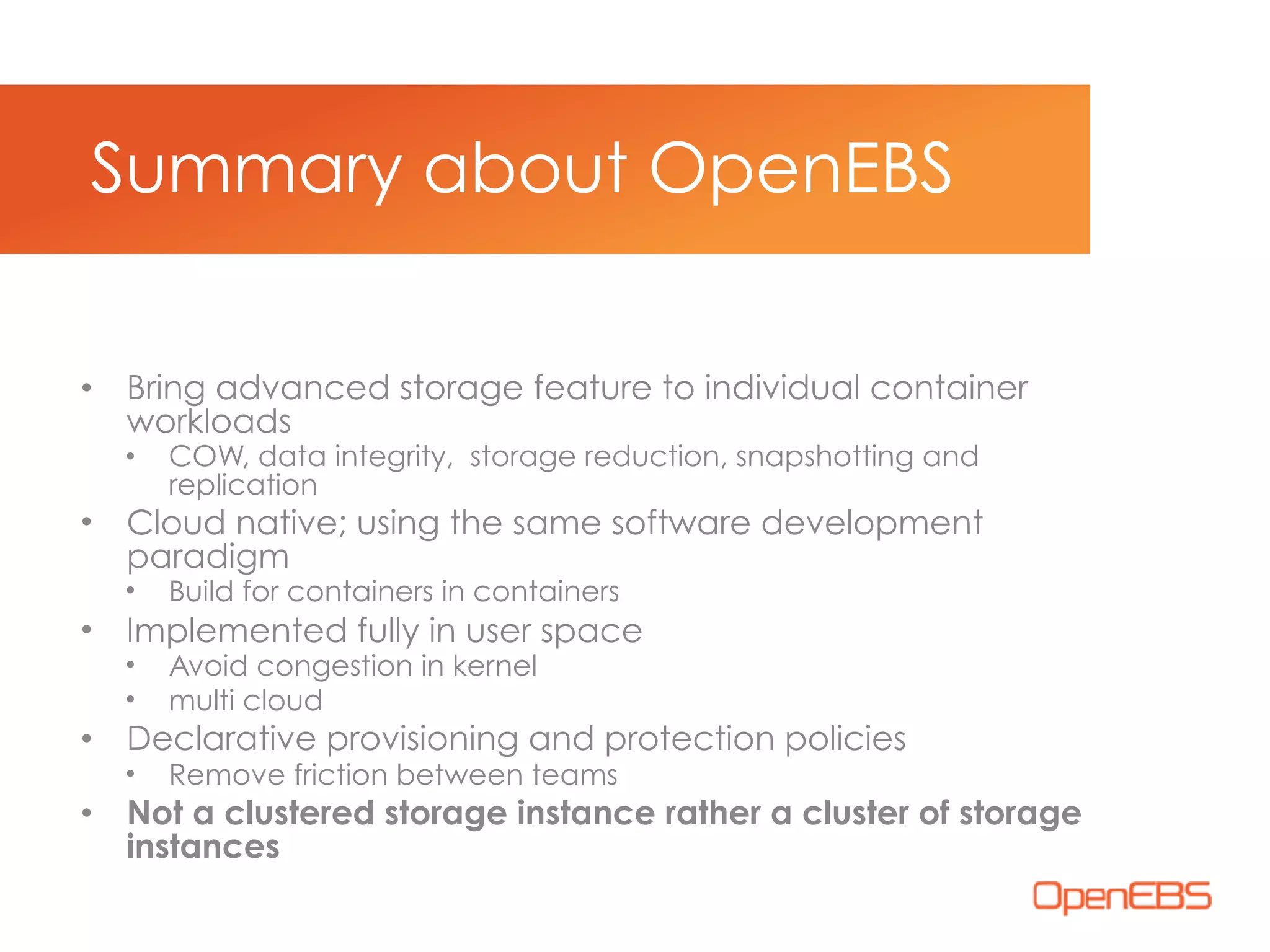

The document discusses container-native storage for Kubernetes, tracing the evolution of storage management from traditional architectures to software-defined and user-space models. It stresses that storage must scale the way modern applications do and must be manageable in a uniform way across diverse environments. It also suggests that containerized storage can improve performance and data integrity while simplifying operations for cloud-native applications.