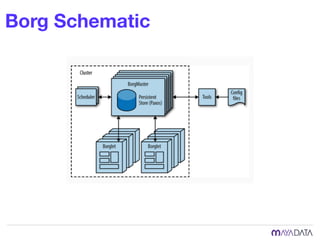

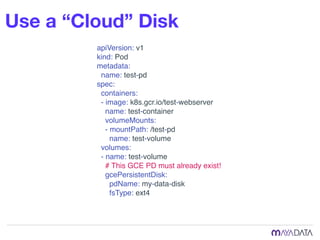

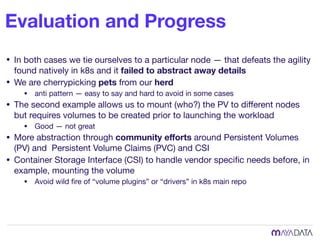

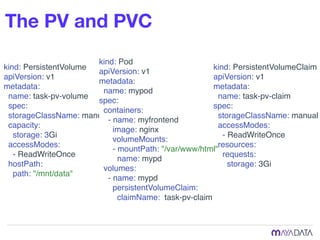

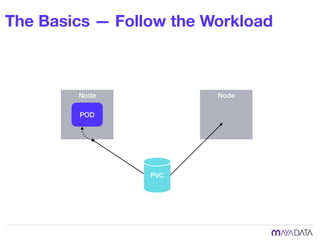

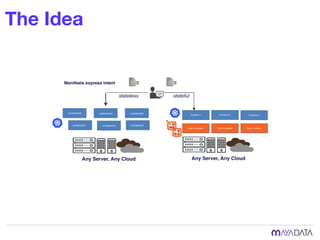

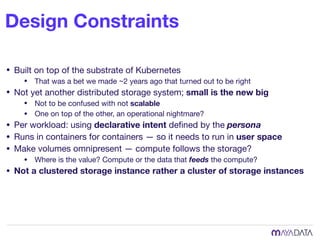

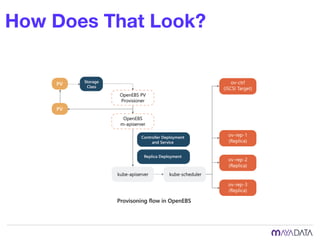

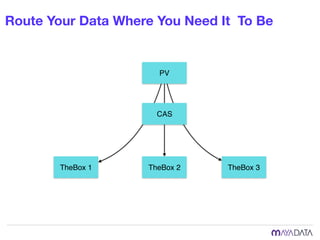

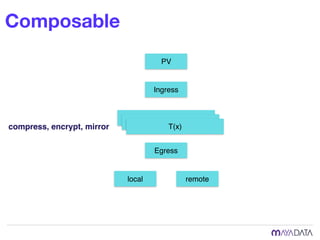

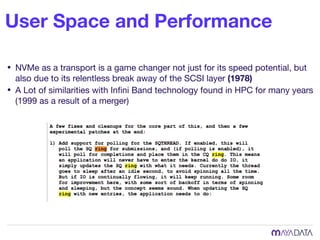

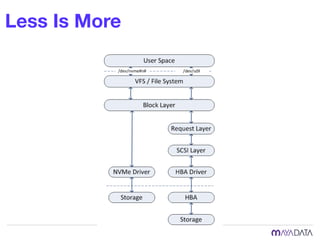

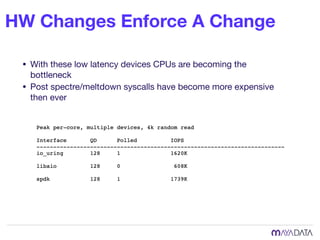

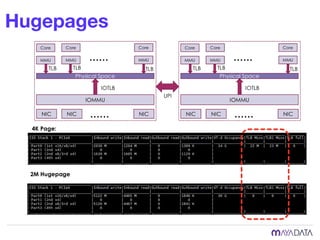

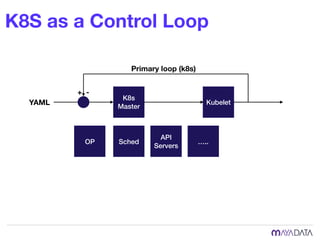

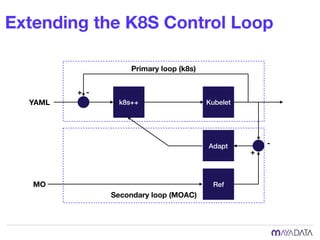

The document discusses container attached storage in Kubernetes (k8s) and the challenges of managing persistent storage for stateful applications. It argues that storage should be abstracted from the underlying provider, which reduces vendor lock-in and makes cloud-native environments more agile. It also covers strategies and frameworks that improve storage management for containerized applications, such as Persistent Volume Claims (PVCs) and the Container Storage Interface (CSI).
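The following is a minimal sketch of how PVCs and CSI divide the work. It builds two Kubernetes manifests as plain Python dicts and prints them as JSON, which `kubectl apply -f` accepts as well as YAML. A StorageClass names the CSI driver (its `provisioner`). A PVC asks only for a size, an access mode, and a class name. The names `fast`, `db-data`, and `csi.vendor-a.example` are hypothetical placeholders, not drivers or classes from the source.

```python
import json


def make_storage_class(name: str, provisioner: str) -> dict:
    """Build a StorageClass manifest that binds a class name to a CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # The provisioner is the CSI driver name; in this sketch it is the
        # only vendor-specific field.
        "provisioner": provisioner,
    }


def make_pvc(name: str, size: str, storage_class: str,
             access_mode: str = "ReadWriteOnce") -> dict:
    """Build a PersistentVolumeClaim that requests storage by class, not by vendor."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": [access_mode],
            "resources": {"requests": {"storage": size}},
            "storageClassName": storage_class,
        },
    }


if __name__ == "__main__":
    # Hypothetical names: "fast" and "csi.vendor-a.example" are placeholders.
    sc = make_storage_class("fast", "csi.vendor-a.example")
    pvc = make_pvc("db-data", "10Gi", "fast")
    # Wrap both objects in a v1 List so one file can be applied at once.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [sc, pvc]}, indent=2))
```

In this setup, moving newly provisioned volumes to a different backend means changing only the StorageClass `provisioner`. The PVC, and any pod that mounts it by claim name, stays the same. This separation is the abstraction that limits vendor lock-in. Existing volumes would still need a separate migration.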