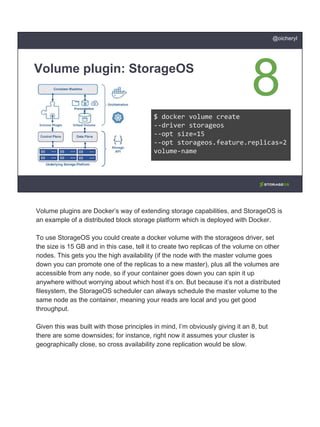

StorageOS is a distributed block storage platform that can be deployed with Docker using volume plugins. It allows the creation of highly available volumes that can be accessed from any node and follow containers during rescheduling. While built with cloud-native principles in mind, some limitations remain such as an assumption of geographic proximity between nodes. Overall, StorageOS scores well at 8 out of 8 on cloud-native storage principles due to its integration with Docker, ability to schedule data optimally, and provision of high availability and portability for application volumes.