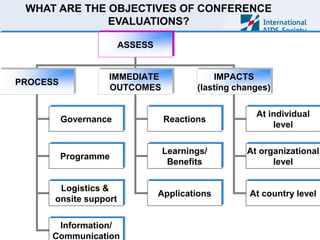

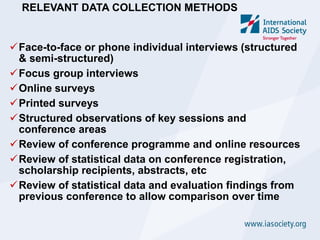

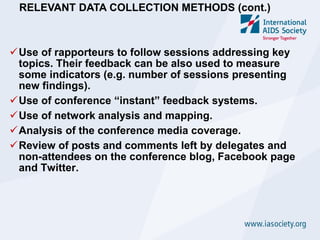

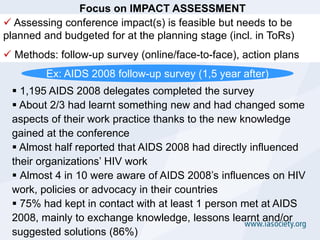

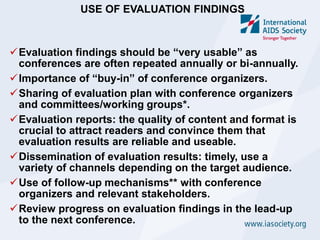

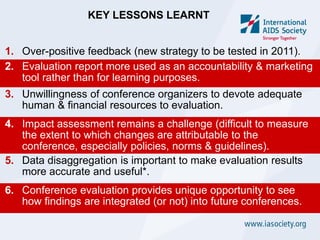

The document outlines why conferences should be evaluated and what such evaluations aim to achieve: continuous accountability and learning. It discusses data collection methods for impact assessment and emphasizes that evaluations need to be planned and budgeted for. Key lessons include the difficulty of measuring impact, the need to disaggregate data, and the importance of using evaluation findings effectively for future conferences.