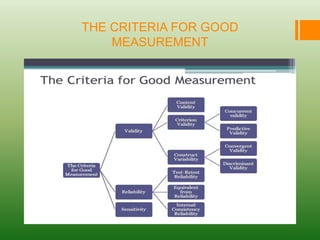

This document discusses two key criteria for good measurement in research: validity and reliability. Validity is the extent to which a measure captures what it is intended to measure, and the document covers three types: face validity, construct validity, and criterion-related validity. Reliability is the consistency of measurement, and the document covers test-retest reliability, equivalent-forms reliability, and internal consistency reliability. It also addresses sensitivity, a measure's ability to detect meaningful differences in responses.
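The summary does not say how reliability is quantified. The Python below is a minimal sketch that assumes numeric scores and uses two common conventions. Pearson's correlation between two administrations of the same test gives test-retest reliability, and the same calculation applied to two parallel forms gives equivalent-forms reliability. Cronbach's alpha is used for internal consistency. The function names and sample data are hypothetical and do not come from the original document.

```python
from math import sqrt
from statistics import mean, variance

# Hypothetical helpers for illustration (not from the source document).


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of scores.

    Applied to the same test given twice, this estimates test-retest
    reliability; applied to two parallel forms, equivalent-forms reliability.
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long lists with at least 2 scores")
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sqrt(sum((a - mx) ** 2 for a in x))
    sy = sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def cronbach_alpha(rows):
    """Cronbach's alpha as an internal-consistency estimate.

    rows: one list per respondent, one numeric score per item (k >= 2 items).
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total scores))
    """
    k = len(rows[0])
    if k < 2 or any(len(r) != k for r in rows):
        raise ValueError("every respondent needs the same number (>= 2) of items")
    # Sample variance of each item's scores across respondents.
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    # Sample variance of each respondent's total score.
    totals = [sum(r) for r in rows]
    return k / (k - 1) * (1 - sum(item_vars) / variance(totals))


if __name__ == "__main__":
    # Hypothetical scores for five respondents, tested on two occasions.
    first = [10, 12, 15, 18, 20]
    second = [11, 12, 14, 19, 21]
    print("test-retest r:", round(pearson_r(first, second), 3))

    # Hypothetical responses to a three-item scale (1-5 ratings).
    responses = [[4, 5, 4], [2, 2, 3], [3, 4, 3], [5, 5, 4], [1, 2, 2]]
    print("Cronbach's alpha:", round(cronbach_alpha(responses), 3))
```

Values closer to 1 indicate more consistent measurement under both conventions. The sketch uses sample variance throughout. Alpha's ratio of variances is unchanged if population variance is used consistently instead.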