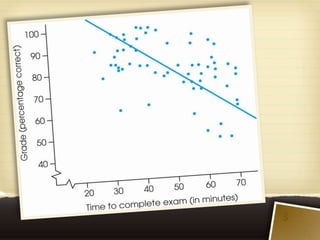

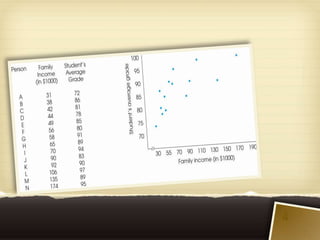

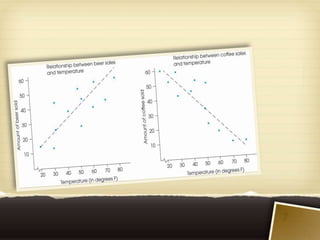

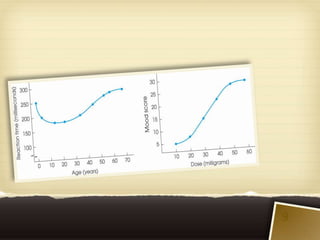

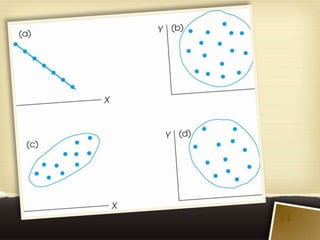

Correlation is a statistical method for measuring the relationship between two variables. A relationship exists when changes in one variable are accompanied by consistent changes in the other. A correlation describes three characteristics of that relationship: its direction (positive or negative), its form (for example, linear), and its degree (how strong or consistent it is). The Pearson correlation specifically measures the direction and strength of a linear relationship between two numerical variables. Other methods cover other kinds of data: the Spearman correlation applies to ordinal (ranked) data, and the point-biserial correlation applies when one variable is dichotomous and the other is numerical.
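To make the three coefficients concrete, here is a minimal sketch in plain Python. It assumes the usual textbook definitions: Pearson r as the sum of products of deviations divided by the square root of the product of the sums of squares, Spearman as Pearson applied to ranks (ties get the average rank), and point-biserial as Pearson with the dichotomous variable coded 0/1. The function names and the `hours`, `score`, and `passed` data are hypothetical and exist only for illustration.

```python
import math


def pearson(x, y):
    """Pearson r for two equal-length numeric sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    dx = [a - mean_x for a in x]            # deviations from the mean of x
    dy = [b - mean_y for b in y]            # deviations from the mean of y
    sp = sum(a * b for a, b in zip(dx, dy))  # sum of products of deviations
    ss_x = sum(a * a for a in dx)           # sum of squares for x
    ss_y = sum(b * b for b in dy)           # sum of squares for y
    if ss_x == 0 or ss_y == 0:
        raise ValueError("correlation is undefined when a variable is constant")
    return sp / math.sqrt(ss_x * ss_y)


def average_ranks(values):
    """Rank values from 1 to n; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover the whole run of values tied with position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x, y):
    """Spearman correlation: Pearson r computed on the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Hypothetical illustration data (not from the source).
hours = [1, 2, 3, 4, 5]        # numerical
score = [52, 55, 61, 64, 70]   # numerical
passed = [0, 0, 1, 1, 1]       # dichotomous, coded 0/1

print("Pearson r:       ", pearson(hours, score))
print("Spearman r_s:    ", spearman(hours, score))
print("Point-biserial r:", pearson(passed, score))
```

In each case the sign of the result gives the direction of the relationship and its magnitude (between 0 and 1) gives the degree. Because Spearman works on ranks, it reaches 1.0 for any consistently increasing relationship, even one that is not linear.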