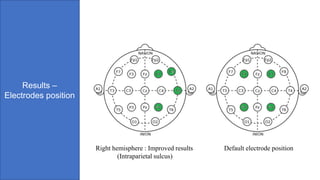

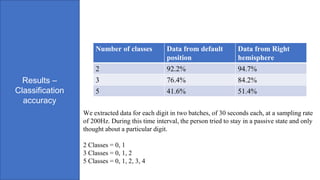

The document discusses a proposed system called "Brain Access" that would allow users to control devices through brain or muscle signals without needing specialized interfaces. It reviews existing brain-computer interface (BCI) and electromyography (EMG) works and their limitations. The proposed system would overlay numbers on existing device screens and map brain/muscle signals to numbers to enable universal control of any device or application. It describes a prototype implementation and presents results from initial experiments classifying EEG signals, showing improved accuracy when electrodes were positioned in the right hemisphere. The document concludes by discussing future work to improve the system for ubiquitous computing and control of Internet of Things devices.
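The number-overlay idea described above can be sketched roughly as follows. This is only an illustration, not the authors' implementation: the `Element` type, the function names, and the paging scheme (digits 1-9 label on-screen elements, digit 0 advances to the next group) are all assumptions. It also assumes a classifier that already outputs a digit from 0 to 9.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple

PAGE_SIZE = 9  # assumption: digits 1-9 label elements, digit 0 means "next page"


@dataclass(frozen=True)
class Element:
    """A tappable on-screen element (hypothetical structure)."""
    name: str
    x: int
    y: int


def build_overlay(elements: List[Element], page: int) -> Dict[int, Element]:
    """Map digits 1-9 to the elements shown on the given overlay page."""
    start = page * PAGE_SIZE
    visible = elements[start:start + PAGE_SIZE]
    return {digit: el for digit, el in enumerate(visible, start=1)}


def handle_digit(
    elements: List[Element], page: int, digit: int
) -> Tuple[int, Optional[Element]]:
    """Turn one classified digit into (new page, selected element or None)."""
    if digit == 0:
        # Ceiling division; at least one page even when the screen is empty.
        pages = max(1, -(-len(elements) // PAGE_SIZE))
        return (page + 1) % pages, None
    # A digit with no element on this page selects nothing.
    return page, build_overlay(elements, page).get(digit)
```

Because the overlay only needs the positions of tappable elements, a scheme like this is independent of the application underneath, which is the property the proposed system relies on for universal control.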

![Existing works

EEG

• “Electroencephalography (EEG) is an electrophysiological monitoring method to record electrical activity of the brain”. Any action, thought, or emotion generates electrical signals in the brain, which electrodes capture to produce the EEG.

• Existing works focus on enabling brain-computer access for specific applications; no universal interface has been developed.

• Brain waves can be used to classify as many as 10 actions with accuracy above 90% [1].

• “NeuroPhone”: an iPhone interface that uses flashing images that the user needs to focus on [2].

• Samsung demoed tablet control using a BCI based on flashing images [4].

• Many systems use BCI to perform actions such as controlling robot motion [3], controlling drones [5], and typing [6][7]. However, all of these systems rely on visual stimulation (such as P300), which limits their use as a basis for a generic BCI interface.

• Some studies work on using EEG directly for action classification. For example, [8] uses raw EEG data with visual stimulation to let users play a rock-paper-scissors game.

• Many projects achieved higher classification accuracy, but they required invasive surgery.](https://image.slidesharecdn.com/brainaccess-180501021936/85/Brain-access-5-320.jpg)

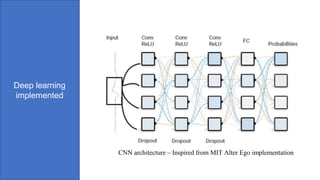

![Existing works

EMG

• Electromyography (EMG) is an electrodiagnostic medicine technique for evaluating and recording the electrical activity produced by skeletal muscles.

• EMG provides more direct signals than EEG for action classification.

• EMG detects the electric potential generated by muscles when a person tries to move or perform an action.

• “AlterEgo” achieves 92% median accuracy for a silent conversational interface [9].

• Microsoft’s muCIs (muscle-computer interfaces) is another project using EMG to enable human-computer interaction [10].

• Another study uses EMG to control a mouse cursor, achieving 70% accuracy [11].](https://image.slidesharecdn.com/brainaccess-180501021936/85/Brain-access-9-320.jpg)

![Results

• Our primary achievement: a system that provides a plug-and-play interface to control any smartphone.

• EEG signal classification model: weak, because only 4 input channels were available.

• Still achieved significant accuracies in classification.

• However, the hardware we had cannot support building an end-to-end working system.

• The classification accuracies achieved are reported on the next slide. With 16-channel hardware, we could potentially build a system that achieves high (90%+) accuracy for classification of the 10 digits (as already shown in previous research).

• We also validated an authentication system using EEG as proposed by [12], which ensures privacy and end-to-end security in the system.

• We validated that EEG data captured from the right side of the brain gives better accuracy in predicting what number a person is thinking of.](https://image.slidesharecdn.com/brainaccess-180501021936/85/Brain-access-20-320.jpg)