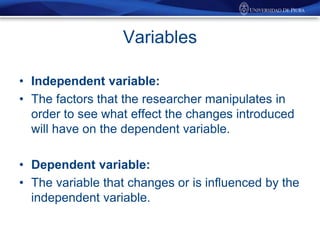

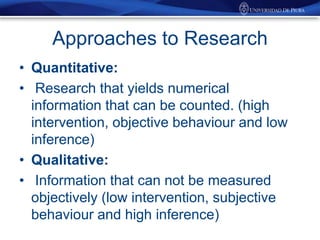

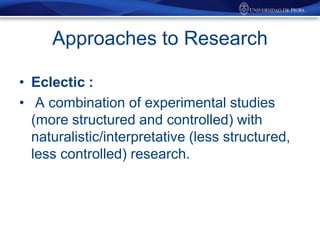

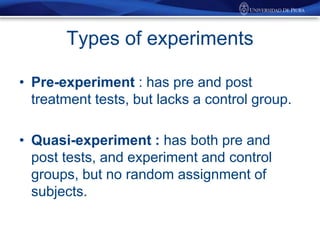

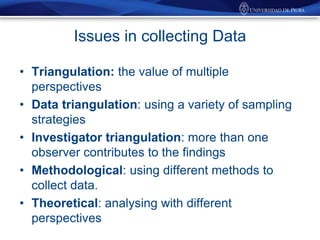

This document discusses research methods in education, covering key concepts such as independent and dependent variables, quantitative and qualitative approaches, and experimental designs. It identifies the components of classroom research as the teacher, the learner, classroom processes, and classroom products. It outlines different data collection instruments and addresses reliability, validity, and triangulation. Finally, it compares experimental research with action research and sets out steps for developing valid and reliable research instruments.