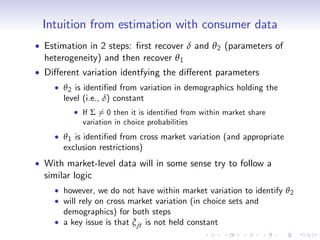

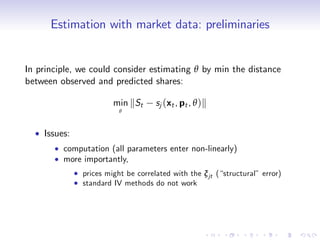

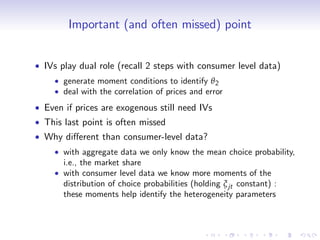

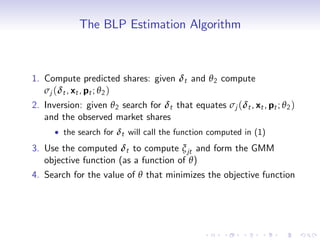

This document discusses methods for estimating static discrete choice models using market-level data rather than individual consumer data. It covers several key topics:

1) The types of market-level and consumer-level data that can be used. Market-level data is easier to obtain but poses challenges for identification and estimation.

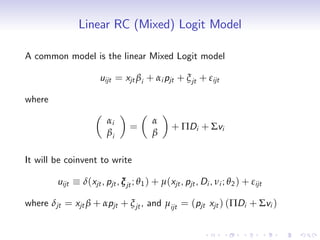

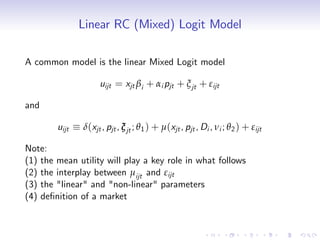

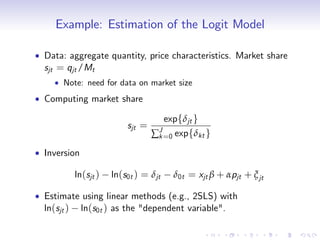

2) A common linear random coefficients logit model framework. It includes observed and unobserved product characteristics as well as observed and unobserved consumer heterogeneity.

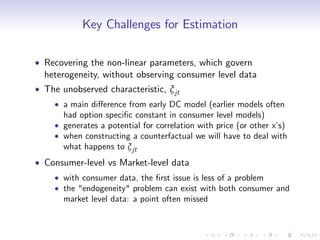

3) The key challenges of estimating heterogeneity parameters without consumer-level data. It also discusses how to deal with potential endogeneity of unobserved product characteristics.

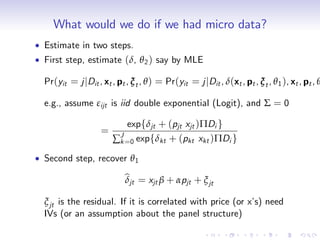

4) The two-step estimation approach when consumer-level data is available, and

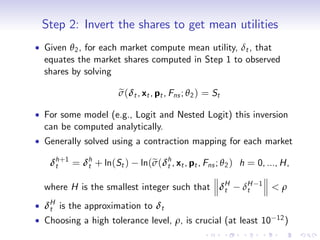

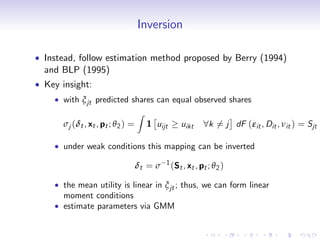

![Step 1: Compute the market shares predicted by the model

Given δt and θ 2 (and the data) compute

Z

σj (δt , xt , pt ; θ 2 ) = 1 [uijt uikt 8k 6= j ] dF (εit , Dit , νit )

For some models this can be done analytically (e.g., Logit,

Nested Logit and a few others)

Generally the integral is computed numerically

A common way to do this is via simulation

1 ns

expfδjt + (pjt xjt )(ΠDi + Σvi )g

e

σj (δt , x t , p t , F ns ; θ 2 ) =

ns ∑ 1 + ∑J expfδkt + (pkt xkt )(ΠDi + Σ

i =1 k =1

where vi and Di , i = 1, ..., ns are draws from Fv (v ) and

FD ( D ) ,

Note:

the ε’ are integrated analytically

s

other simulators (importance sampling, Halton seq)

integral can be approximated in other ways (e.g., quadrature)](https://image.slidesharecdn.com/avivl2-120815081515-phpapp01/85/Estimation-of-Static-Discrete-Choice-Models-Using-Market-Level-Data-17-320.jpg)