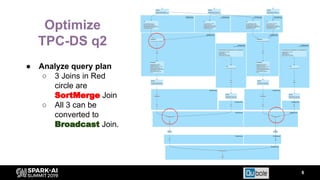

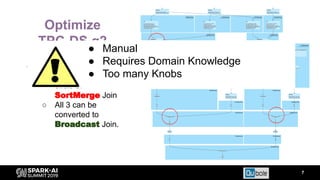

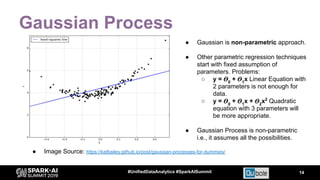

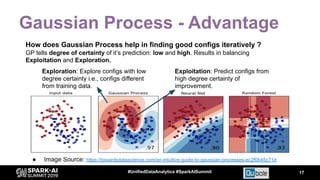

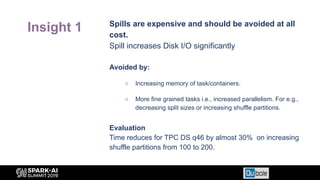

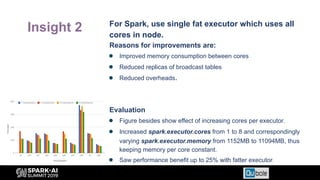

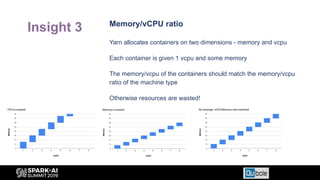

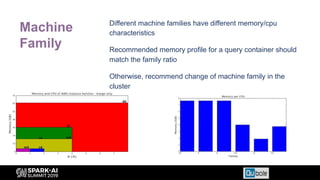

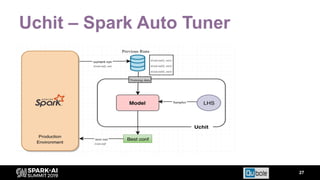

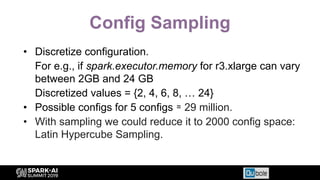

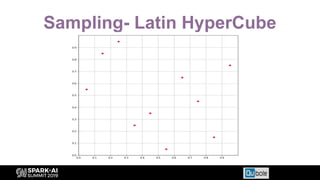

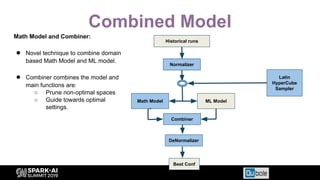

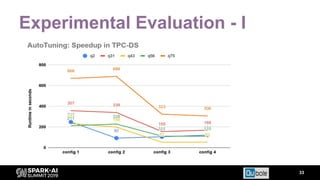

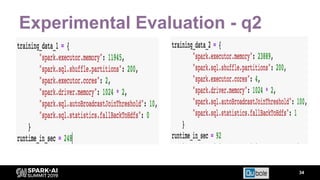

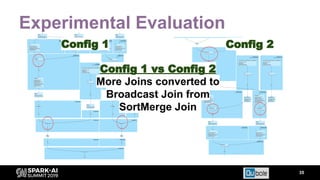

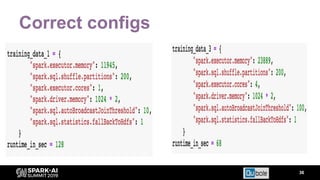

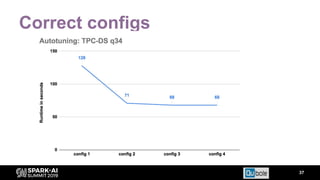

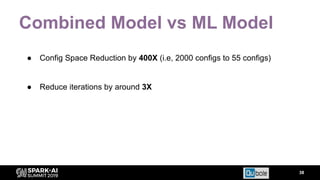

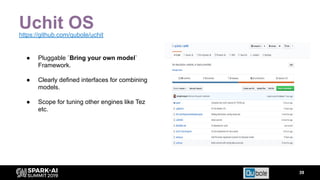

The document describes an automated tuning system for Apache Spark applications that combines machine learning with domain-based models to improve job performance and resource efficiency. It covers three topics: the motivation for auto-tuning; the approach, which uses Gaussian processes to iteratively predict promising configurations; and insights from previous research on performance tuning. It closes with a demo of the auto-tuning system, 'Uchit', which shows significant reductions in both the size of the configuration space to be searched and the time spent evaluating candidate configurations.
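The iterative loop described above can be sketched as a small Bayesian-optimization routine. This is a minimal sketch under stated assumptions, not Uchit's implementation. The two knobs (`MEMORY_GB`, `PARTITIONS`), their ranges, and `simulated_runtime` are hypothetical stand-ins for real Spark settings and real job runs. The choice of an RBF kernel and of expected improvement as the rule for picking the next configuration are also assumptions, since the summary does not say which acquisition function is used. The loop fits a Gaussian process to the runtimes observed so far, scores every untried configuration, runs the most promising one, and repeats.

```python
import math
import random

# Hypothetical Spark knobs and value ranges (assumptions for illustration only).
MEMORY_GB = [2, 4, 6, 8, 10, 12, 14, 16]
PARTITIONS = [50, 100, 200, 400, 800]

def simulated_runtime(mem_gb, partitions):
    """Hypothetical stand-in for running a Spark job; a made-up cost surface."""
    return 100.0 / mem_gb + 0.02 * mem_gb + abs(math.log2(partitions / 200.0)) * 5.0

def normalize(cfg):
    """Map a (memory, partitions) config into the unit square."""
    mem, parts = cfg
    return ((mem - 2) / 14.0, math.log2(parts / 50.0) / 4.0)

def rbf(a, b, length_scale=0.3):
    """Squared-exponential (RBF) kernel between two normalized configs."""
    d2 = sum((x - y) ** 2 for x, y in zip(a, b))
    return math.exp(-d2 / (2 * length_scale ** 2))

def cholesky(k):
    """Lower-triangular L with L @ L^T == k (k must be positive definite)."""
    n = len(k)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(L[i][m] * L[j][m] for m in range(j))
            L[i][j] = math.sqrt(k[i][i] - s) if i == j else (k[i][j] - s) / L[j][j]
    return L

def forward(L, b):
    """Solve L x = b by forward substitution."""
    x = []
    for i in range(len(b)):
        x.append((b[i] - sum(L[i][j] * x[j] for j in range(i))) / L[i][i])
    return x

def backward(L, y):
    """Solve L^T x = y by back substitution."""
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[j][i] * x[j] for j in range(i + 1, n))) / L[i][i]
    return x

def fit_gp(xs, ys, noise=1e-3):
    """Fit a zero-mean GP to standardized runtimes; return a predictor of (mean, std)."""
    mu = sum(ys) / len(ys)
    sd = math.sqrt(sum((y - mu) ** 2 for y in ys) / len(ys)) or 1.0
    z = [(y - mu) / sd for y in ys]
    n = len(xs)
    K = [[rbf(xs[i], xs[j]) + (noise if i == j else 0.0) for j in range(n)] for i in range(n)]
    L = cholesky(K)
    alpha = backward(L, forward(L, z))  # alpha = K^-1 z

    def predict(x):
        ks = [rbf(x, xi) for xi in xs]
        mean = sum(k * a for k, a in zip(ks, alpha))
        v = forward(L, ks)
        var = max(1.0 - sum(vi * vi for vi in v), 1e-12)
        return mu + sd * mean, sd * math.sqrt(var)

    return predict

def expected_improvement(mean, std, best):
    """Expected reduction below the best runtime seen so far (minimization)."""
    z = (best - mean) / std
    cdf = 0.5 * (1 + math.erf(z / math.sqrt(2)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2 * math.pi)
    return (best - mean) * cdf + std * pdf

def tune(n_init=3, n_iter=10, seed=0):
    """Evaluate a few random configs, then let the GP pick each next one."""
    rng = random.Random(seed)
    candidates = [(m, p) for m in MEMORY_GB for p in PARTITIONS]
    tried = rng.sample(candidates, n_init)
    runtimes = [simulated_runtime(*c) for c in tried]
    for _ in range(n_iter):
        remaining = [c for c in candidates if c not in tried]
        if not remaining:
            break
        predict = fit_gp([normalize(c) for c in tried], runtimes)
        best = min(runtimes)
        nxt = max(remaining, key=lambda c: expected_improvement(*predict(normalize(c)), best))
        tried.append(nxt)
        runtimes.append(simulated_runtime(*nxt))
    i = runtimes.index(min(runtimes))
    return tried[i], runtimes[i], len(tried)
```

For example, `tune()` evaluates 13 of the 40 grid points and returns the fastest configuration it found. The trade-off this illustrates is the one the demo targets: each real evaluation is expensive, so the model spends cheap predictions to decide which few configurations are worth actually running.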