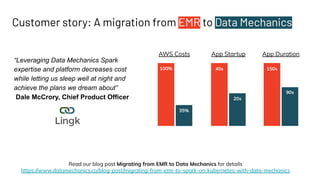

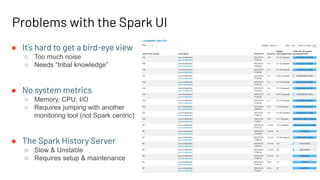

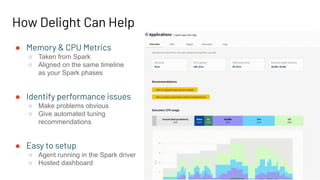

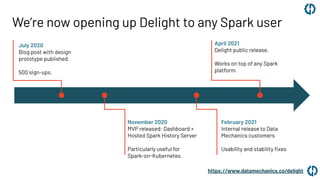

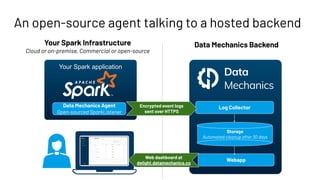

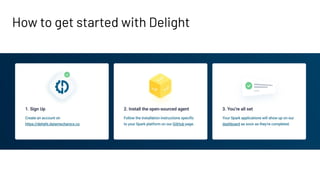

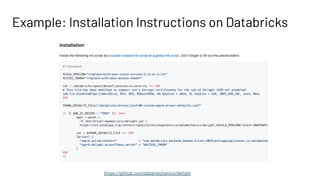

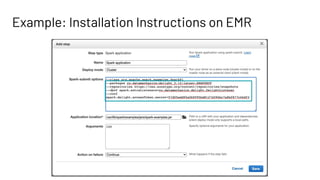

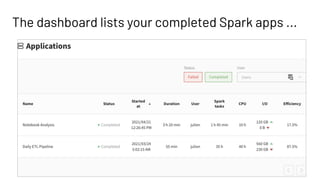

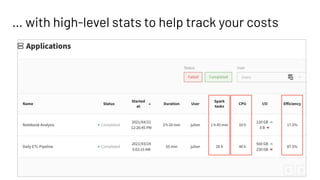

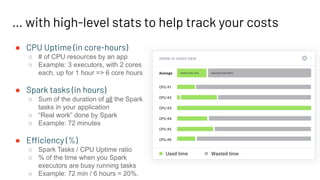

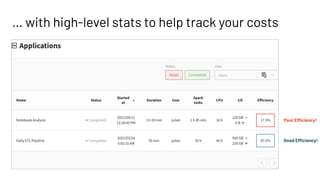

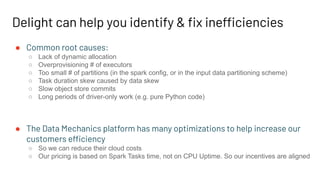

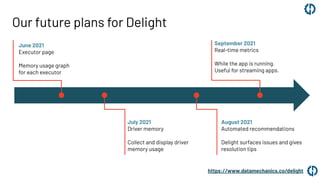

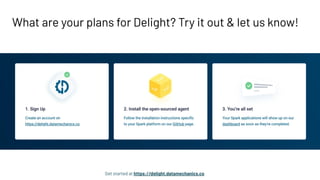

This document introduces Delight, an improved Apache Spark UI created by Data Mechanics. Delight provides high-level visualizations of Spark jobs to help users identify inefficiencies and reduce costs. It collects metrics while a job runs and after it completes, and uses them to show CPU usage, task duration, and efficiency. Delight is open source and works on any Spark platform once its agent is installed. Data Mechanics aims to extend Delight with real-time metrics, driver memory collection, and automated recommendations.