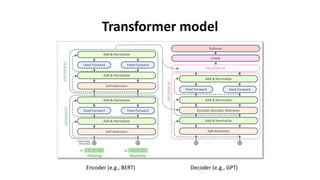

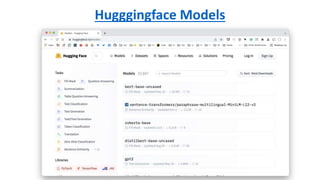

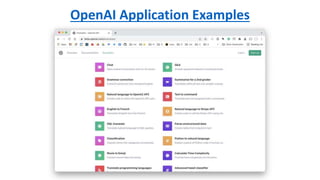

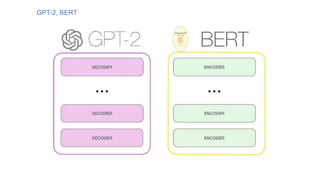

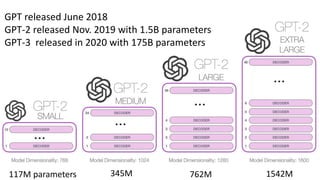

The document traces the evolution of neural network models for language processing, from RNNs and LSTMs, which read a sequence one token at a time, to transformers, whose attention mechanism lets each token draw on the rest of the sequence directly. In bidirectional models such as BERT, this means using both left and right context for better contextual understanding; left-to-right language models such as GPT-3 restrict attention to the left context. The original transformer is structured as an encoder and a decoder, and transformer-based models are applied to tasks such as language modeling and question answering. The document also covers common pretrained models such as BERT and GPT-3, which are widely used because they have already been trained on large datasets.
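To make the role of attention and of left versus right context concrete, here is a minimal sketch of scaled dot-product self-attention in NumPy. It is an illustration under stated assumptions, not the implementation used by any particular model: the function names, the `causal` flag, and the toy input are all hypothetical, and real transformers add learned projections, multiple heads, and batching.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def scaled_dot_product_attention(q, k, v, causal=False):
    """Single-head attention over one sequence.

    q, k, v: arrays of shape (seq_len, d).
    causal:  hypothetical flag for this sketch. If True, position i may
             only attend to positions <= i (left context only).
    Returns (output, attention_weights).
    """
    d_k = q.shape[-1]
    scores = q @ k.T / np.sqrt(d_k)  # (seq_len, seq_len) similarity scores
    if causal:
        seq_len = scores.shape[0]
        # True strictly above the diagonal, i.e. at "future" positions.
        future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ v, weights


# Toy input: 4 tokens with 8-dimensional embeddings (random, for illustration).
rng = np.random.default_rng(0)
x = rng.normal(size=(4, 8))

# Bidirectional: every token attends to tokens on both sides.
out_bi, w_bi = scaled_dot_product_attention(x, x, x)

# Causal: every token attends only to itself and tokens to its left.
out_causal, w_causal = scaled_dot_product_attention(x, x, x, causal=True)
```

In the bidirectional case every entry of `w_bi` is nonzero, so each token mixes information from the whole sequence. In the causal case the weights above the diagonal are exactly zero, which is the restriction a left-to-right language model needs so that it cannot look at the tokens it is asked to predict.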