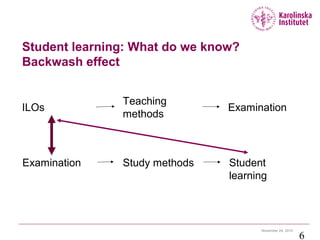

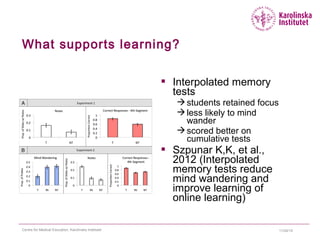

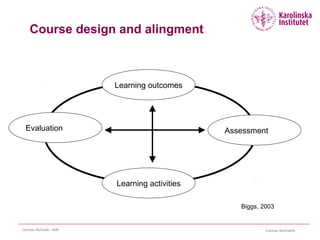

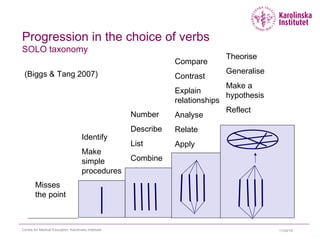

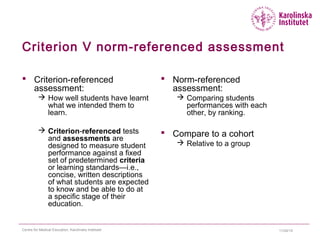

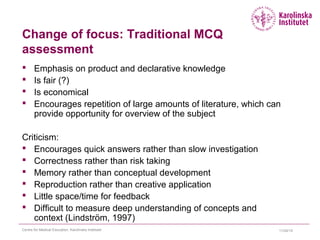

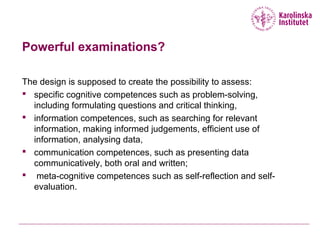

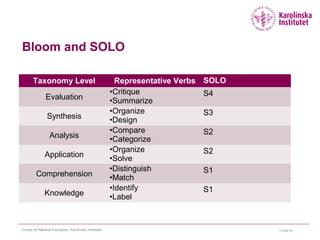

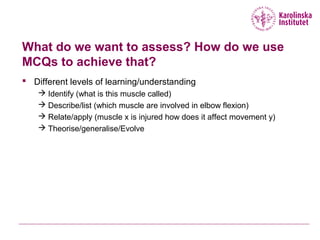

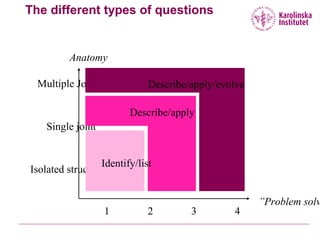

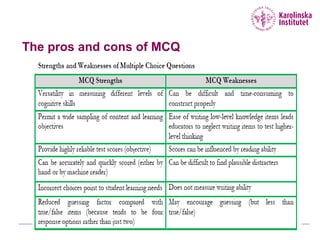

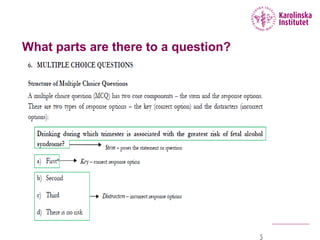

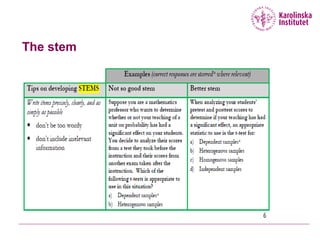

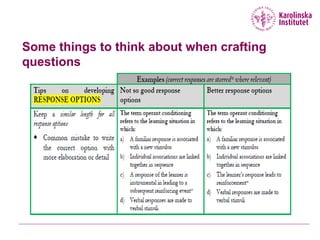

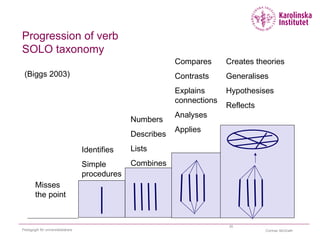

This document summarizes a presentation on assessment and multiple choice questions (MCQs). It discusses how assessment guides student learning through the backwash effect. Formative assessment supports learning by allowing recurrent testing. Course leaders can influence what students learn by aligning teaching with examinations. The presentation then focuses on constructing high-quality MCQs. It covers the cognitive levels a question can target, the different question types, and how to write effective stems, options, and keys. Finally, it proposes workshops to build an MCQ database, test the questions, conduct talk-aloud protocols with students, and eventually digitize the assessment process.