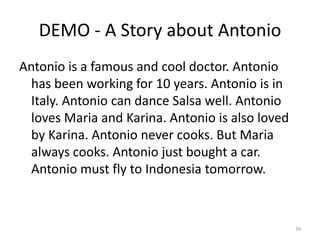

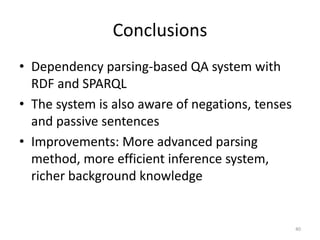

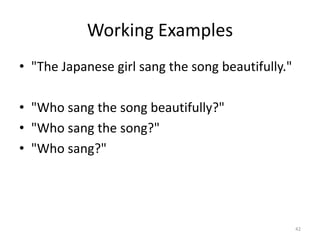

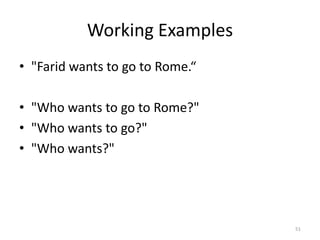

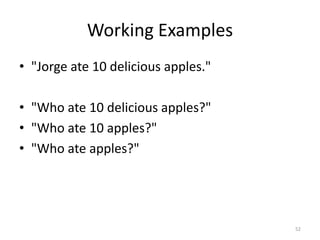

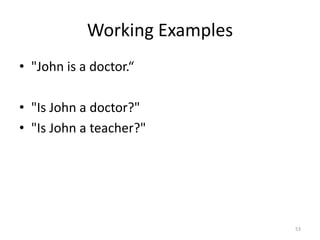

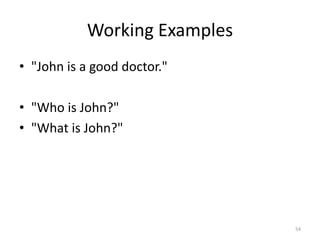

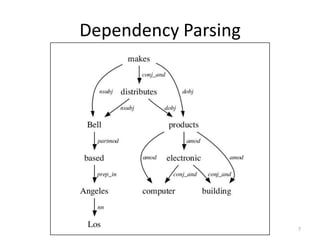

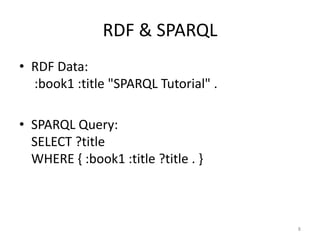

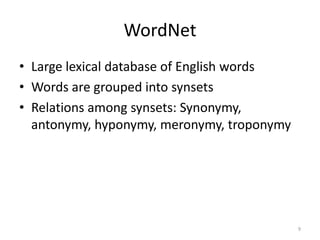

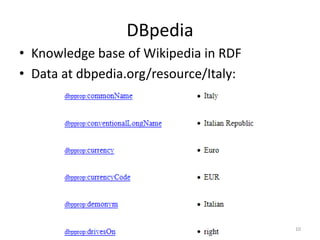

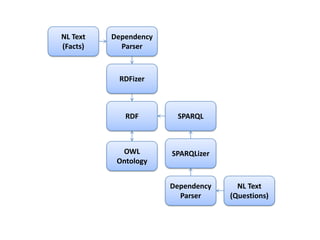

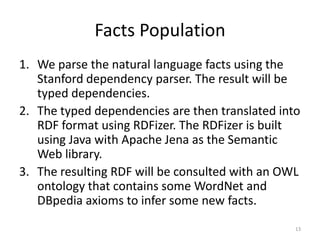

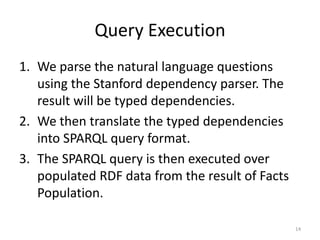

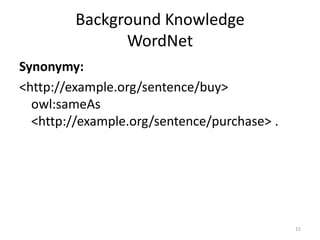

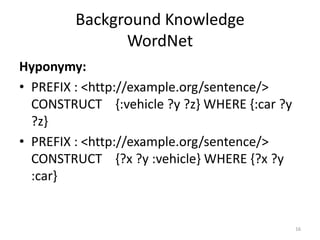

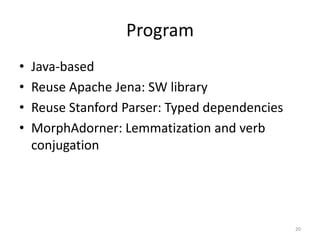

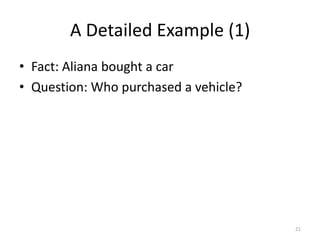

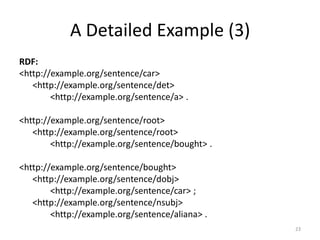

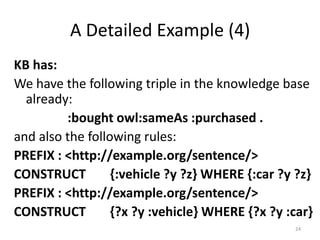

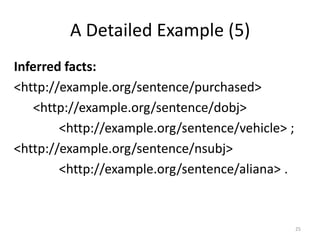

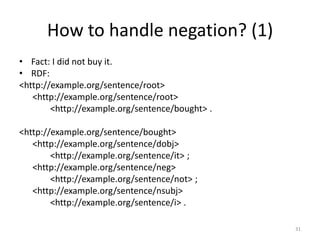

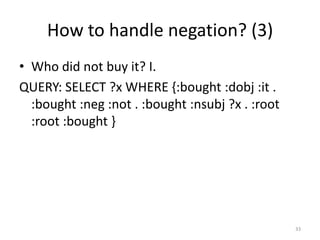

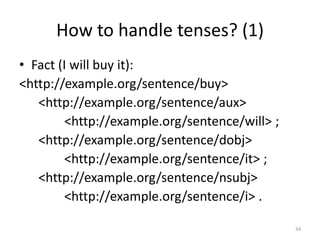

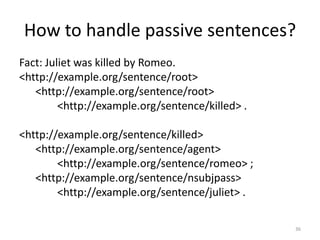

This document describes a dependency-parsing-based question answering system built on RDF and SPARQL. The system parses natural-language facts and questions into typed dependencies, translates them into RDF triples and SPARQL queries, answers questions by querying the populated RDF data, and draws on background knowledge from WordNet and DBpedia. It handles negation, tense, and passive voice, and the slides give examples of its question answering.

![A Detailed Example (2)](https://image.slidesharecdn.com/architecture-130130105619-phpapp02/85/Dependency-Parsing-based-QA-System-for-RDF-and-SPARQL-22-320.jpg)

A Detailed Example (2)

• Typed Dependencies

[nsubj(bought-2, Aliana-1), root(ROOT-0, bought-2), det(car-4, a-3), dobj(bought-2, car-4)]
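The following minimal Python sketch turns a typed-dependency list like the one above into RDF-style triples. It assumes that each `rel(governor, dependent)` becomes the triple `(governor, rel, dependent)`, with words lower-cased and word indices dropped. This mapping is inferred from the shape of the slide queries (for example `:root :root :bought`), not taken from the system's code, and the names `parse_dependencies` and `to_triples` are hypothetical.

```python
import re

# One relation such as "nsubj(bought-2, Aliana-1)":
# group 1 = relation, groups 2/3 = governor word/index,
# groups 4/5 = dependent word/index.
DEP_RE = re.compile(r"(\w+)\((\S+?)-(\d+), (\S+?)-(\d+)\)")


def parse_dependencies(text):
    """Return (relation, governor, gov_index, dependent, dep_index) tuples."""
    return [
        (rel, gov, int(gi), dep, int(di))
        for rel, gov, gi, dep, di in DEP_RE.findall(text)
    ]


def to_triples(deps):
    """Map each rel(gov, dep) to (gov, rel, dep), lower-cased, indices dropped.

    Assumption: this mirrors the ':bought :nsubj ?x' shape of the slide queries.
    """
    return [(gov.lower(), rel, dep.lower()) for rel, gov, _, dep, _ in deps]


FACT = ("[nsubj(bought-2, Aliana-1), root(ROOT-0, bought-2), "
        "det(car-4, a-3), dobj(bought-2, car-4)]")

print(to_triples(parse_dependencies(FACT)))
# → [('bought', 'nsubj', 'aliana'), ('root', 'root', 'bought'), ('car', 'det', 'a'), ('bought', 'dobj', 'car')]
```

Dropping the indices keeps the triples aligned with the slide queries. However, it also merges repeated words within one sentence into a single node, so a fuller implementation would probably need index-aware node names.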

![A Detailed Example (6)](https://image.slidesharecdn.com/architecture-130130105619-phpapp02/85/Dependency-Parsing-based-QA-System-for-RDF-and-SPARQL-26-320.jpg)

A Detailed Example (6)

Typed dependencies of the question:

[nsubj(purchased-2, Who-1), root(ROOT-0, purchased-2), det(vehicle-4, a-3), dobj(purchased-2, vehicle-4)]
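The question uses "purchased" and "vehicle", while the fact in A Detailed Example (2) uses "bought" and "car". The two therefore only meet after some lexical normalization. The sketch below stands in for the WordNet lookup with a small hard-coded table (`LEXICON`, hypothetical). It replaces the wh-word with the variable `?x` and matches the resulting patterns against the fact triples. This illustrates the idea under those assumptions and is not the system's actual matching procedure.

```python
# Hypothetical stand-in for WordNet: maps question words onto the words used
# in the stored fact. A real system would look up synonyms/hypernyms instead.
LEXICON = {"purchased": "bought", "vehicle": "car"}
WH_WORDS = {"who", "whom", "what", "which"}


def to_pattern(word):
    """Wh-words become the variable ?x; other words are normalized."""
    return "?x" if word in WH_WORDS else LEXICON.get(word, word)


def unify(term, value, binding):
    """Bind ?variables consistently; constants must match exactly."""
    if term.startswith("?"):
        return binding.setdefault(term, value) == value
    return term == value


def match(patterns, graph, binding=None):
    """Yield every binding that satisfies all triple patterns (a basic graph pattern)."""
    binding = dict(binding or {})
    if not patterns:
        yield binding
        return
    head, rest = patterns[0], patterns[1:]
    for triple in graph:
        new = dict(binding)
        if all(unify(t, v, new) for t, v in zip(head, triple)):
            yield from match(rest, graph, new)


# Fact triples for "Aliana bought a car" (governor, relation, dependent).
FACT = [("bought", "nsubj", "aliana"), ("root", "root", "bought"),
        ("car", "det", "a"), ("bought", "dobj", "car")]

# Question triples for "Who purchased a vehicle?".
QUESTION = [("purchased", "nsubj", "who"), ("root", "root", "purchased"),
            ("vehicle", "det", "a"), ("purchased", "dobj", "vehicle")]

patterns = [(to_pattern(s), p, to_pattern(o)) for s, p, o in QUESTION]
answers = [b["?x"] for b in match(patterns, FACT)]
print(answers)  # → ['aliana']
```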

![How to handle negation? (2)](https://image.slidesharecdn.com/architecture-130130105619-phpapp02/85/Dependency-Parsing-based-QA-System-for-RDF-and-SPARQL-32-320.jpg)

How to handle negation? (2)

• Question: Who bought it?

• SPARQL: SELECT ?x WHERE {:bought :nsubj ?x . :bought :dobj :it . :root :root :bought . FILTER NOT EXISTS { [] :neg ?z . } }
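In SPARQL 1.1, `FILTER NOT EXISTS { [] :neg ?z . }` rejects a solution whenever the inner pattern matches. Blank nodes in query patterns act as variables, and `?z` is not bound outside the filter, so the inner pattern is not tied to `:bought`: it matches any `:neg` triple in the queried graph. The Python sketch below emulates that behavior on hand-written triples, which are assumed parser output for "Aliana bought it" and "Romeo has not bought it". It contrasts querying one graph per sentence with querying a single merged graph. The slides do not say which storage layout the system uses, and all names here are hypothetical.

```python
def unify(term, value, binding):
    """Bind ?variables consistently; constants must match exactly."""
    if term.startswith("?"):
        return binding.setdefault(term, value) == value
    return term == value


def match(patterns, graph, binding=None):
    """Yield every binding that satisfies all triple patterns."""
    binding = dict(binding or {})
    if not patterns:
        yield binding
        return
    head, rest = patterns[0], patterns[1:]
    for triple in graph:
        new = dict(binding)
        if all(unify(t, v, new) for t, v in zip(head, triple)):
            yield from match(rest, graph, new)


def select_x(patterns, not_exists, graph):
    """Emulate SELECT ?x WHERE { patterns FILTER NOT EXISTS { ... } }.

    A solution survives only if no not_exists pattern matches under its bindings.
    """
    return [b["?x"] for b in match(patterns, graph)
            if not any(any(match([f], graph, b)) for f in not_exists)]


# "Who bought it?" plus the slide's filter; [] is modeled as a fresh variable.
QUERY = [("bought", "nsubj", "?x"), ("bought", "dobj", "it"), ("root", "root", "bought")]
NOT_EXISTS = [("?blank", "neg", "?z")]

# Assumed parser output for two facts (hand-written, not from the slides).
ALIANA = {("root", "root", "bought"), ("bought", "nsubj", "aliana"), ("bought", "dobj", "it")}
ROMEO = {("root", "root", "bought"), ("bought", "aux", "has"), ("bought", "neg", "not"),
         ("bought", "nsubj", "romeo"), ("bought", "dobj", "it")}

per_sentence = [x for g in (ALIANA, ROMEO) for x in select_x(QUERY, NOT_EXISTS, g)]
merged = select_x(QUERY, NOT_EXISTS, ALIANA | ROMEO)
print(per_sentence)  # → ['aliana']
print(merged)        # → []
```

With one sentence per graph, the filter drops only Romeo's negated fact. In a merged graph it drops every answer, because the single `:neg` triple satisfies the uncorrelated inner pattern.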

![How to handle tenses? (2)](https://image.slidesharecdn.com/architecture-130130105619-phpapp02/85/Dependency-Parsing-based-QA-System-for-RDF-and-SPARQL-35-320.jpg)

How to handle tenses? (2)

• Who buys it?

• SELECT ?x WHERE {:root :root :buys . :buys :nsubj ?x . :buys :dobj :it . FILTER NOT EXISTS { [] :aux :will . } }
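The tense query has the same shape as the negation query. The only difference is the excluded pattern (`[] :aux :will` instead of `[] :neg ?z`). The sketch below assembles such query strings from question triples plus a list of excluded (predicate, object) pairs. `build_query` and its arguments are hypothetical, and choosing which exclusions to attach for a given tense is left to the caller, because the slides do not show that rule. The slide queries use the `:` prefix without a `PREFIX` declaration, probably omitted for brevity, while a standalone SPARQL 1.1 query needs one. The sketch therefore optionally prepends a `PREFIX` line; the IRI in the usage example is a placeholder.

```python
WH_WORDS = {"who", "whom", "what", "which"}


def term(word):
    """Render a word as a SPARQL term: wh-words become ?x, others :word."""
    word = word.lower()
    return "?x" if word in WH_WORDS else ":" + word


def build_query(triples, excluded=(), prefix_iri=None):
    """Build a SELECT query from (governor, relation, dependent) triples.

    Each (predicate, object) pair in `excluded` becomes
    FILTER NOT EXISTS { [] :predicate object . }; the object is used verbatim,
    so it can be a term such as ':will' or a variable such as '?z'.
    """
    body = " ".join(f"{term(s)} :{p} {term(o)} ." for s, p, o in triples)
    filters = "".join(f" FILTER NOT EXISTS {{ [] :{p} {o} . }}" for p, o in excluded)
    query = f"SELECT ?x WHERE {{{body}{filters} }}"
    if prefix_iri:
        query = f"PREFIX : <{prefix_iri}>\n" + query
    return query


# "Who buys it?" with the future-tense exclusion from the slide.
tense_q = build_query(
    [("ROOT", "root", "buys"), ("buys", "nsubj", "Who"), ("buys", "dobj", "it")],
    excluded=[("aux", ":will")],
)
print(tense_q)
# → SELECT ?x WHERE {:root :root :buys . :buys :nsubj ?x . :buys :dobj :it . FILTER NOT EXISTS { [] :aux :will . } }
```

Called with the "Who bought it?" triples and `excluded=[("neg", "?z")]`, the same function reproduces the query on the negation slide. Passing `prefix_iri="http://example.org/"` (placeholder) adds the declaration a stock SPARQL endpoint would expect.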

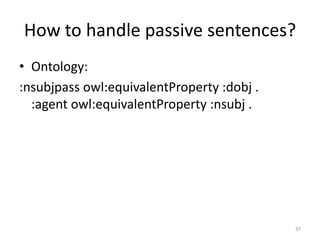

![How to handle passive sentences?](https://image.slidesharecdn.com/architecture-130130105619-phpapp02/85/Dependency-Parsing-based-QA-System-for-RDF-and-SPARQL-38-320.jpg)

How to handle passive sentences?

• Who killed Juliet?

SELECT ?x WHERE {:killed :nsubj ?x . :killed :dobj :juliet . :root :root :killed . FILTER NOT EXISTS { [] :neg ?z . }}
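The question is phrased actively (`:killed :nsubj ?x`, `:killed :dobj :juliet`). A passive fact such as "Juliet was killed by Romeo", however, is parsed with passive relations, which in the Stanford collapsed scheme are `nsubjpass`, `auxpass`, and `agent`. The slide shows only the query, so the sketch below assumes one plausible approach: rename `nsubjpass` to `dobj` and `agent` to `nsubj` before storing the fact, after which the active-voice query matches. The rewrite table, the fact triples, and all function names are assumptions, not the system's documented behavior.

```python
# Assumed rewrite (not from the slides): map Stanford passive relations
# onto the active relations used by the question query.
PASSIVE_TO_ACTIVE = {"nsubjpass": "dobj", "agent": "nsubj"}


def normalize_passive(triples):
    """Return a new set of triples with passive relations renamed."""
    return {(s, PASSIVE_TO_ACTIVE.get(p, p), o) for s, p, o in triples}


def unify(term, value, binding):
    """Bind ?variables consistently; constants must match exactly."""
    if term.startswith("?"):
        return binding.setdefault(term, value) == value
    return term == value


def match(patterns, graph, binding=None):
    """Yield every binding that satisfies all triple patterns."""
    binding = dict(binding or {})
    if not patterns:
        yield binding
        return
    head, rest = patterns[0], patterns[1:]
    for triple in graph:
        new = dict(binding)
        if all(unify(t, v, new) for t, v in zip(head, triple)):
            yield from match(rest, graph, new)


# Assumed collapsed typed dependencies for "Juliet was killed by Romeo".
PASSIVE_FACT = {
    ("killed", "nsubjpass", "juliet"),
    ("killed", "auxpass", "was"),
    ("root", "root", "killed"),
    ("killed", "agent", "romeo"),
}

# Triple patterns of "Who killed Juliet?" plus the slide's negation filter.
QUERY = [("killed", "nsubj", "?x"), ("killed", "dobj", "juliet"), ("root", "root", "killed")]
NEG_FILTER = ("?blank", "neg", "?z")


def who(graph):
    """Answers for ?x that survive FILTER NOT EXISTS { [] :neg ?z . }."""
    return [b["?x"] for b in match(QUERY, graph)
            if not any(match([NEG_FILTER], graph, b))]


print(who(PASSIVE_FACT))                     # → []
print(who(normalize_passive(PASSIVE_FACT)))  # → ['romeo']
```

Normalizing at storage time lets active and passive facts share one query shape. The alternative would be to generate a second, passive-form query for each question; the slides do not indicate which approach the system takes.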