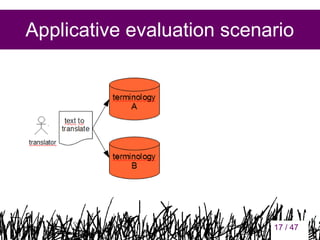

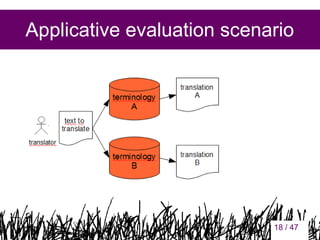

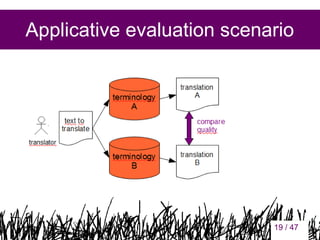

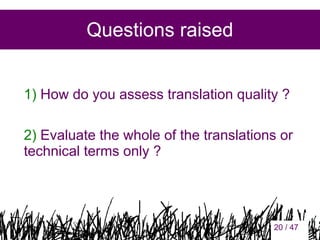

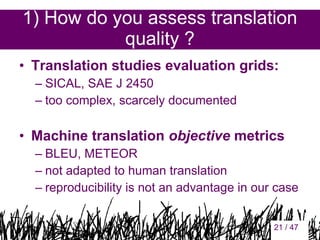

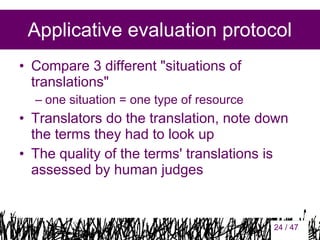

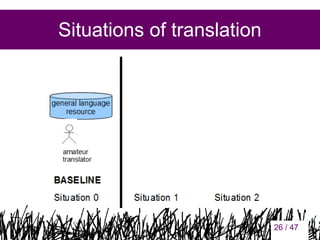

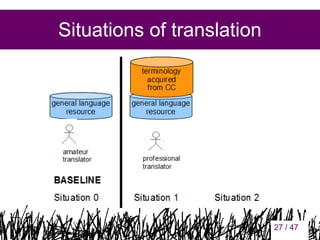

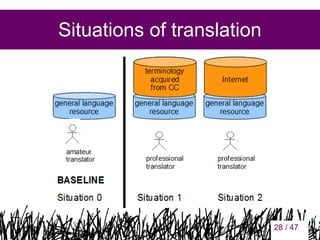

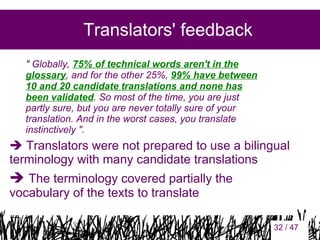

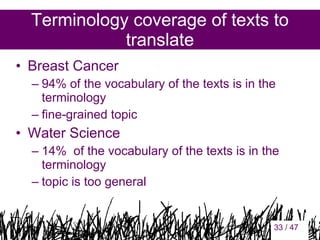

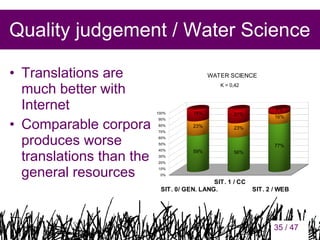

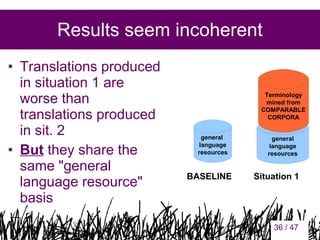

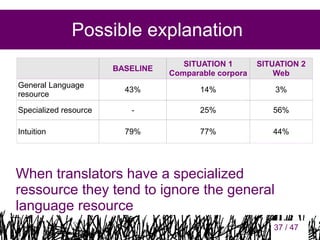

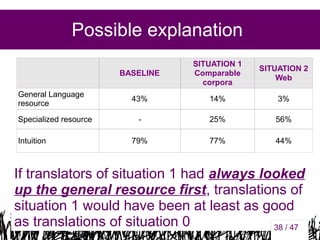

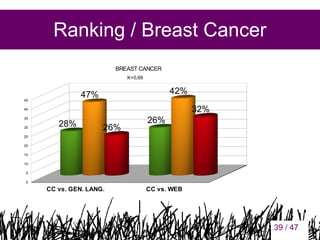

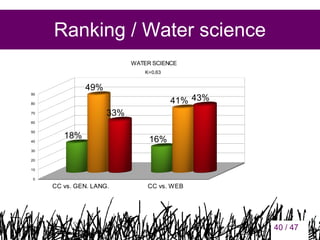

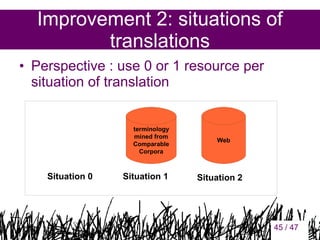

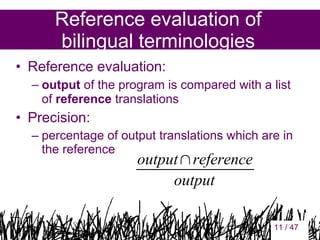

The document discusses the evaluation of bilingual terminologies and their application in technical translation, focusing on the use of comparable corpora in the breast cancer and water science domains. It outlines a protocol for assessing translation quality and emphasizes the importance of terminology coverage for improving translation outcomes. It also suggests future improvements, including refining the evaluation method and training translators to work with validated terminologies.

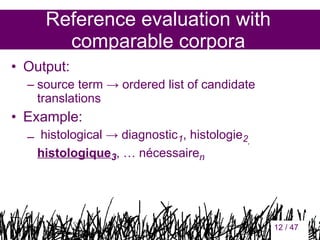

![Reference evaluation with

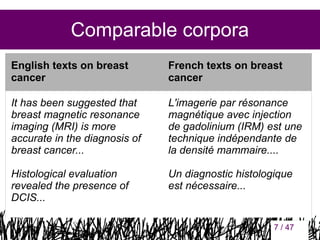

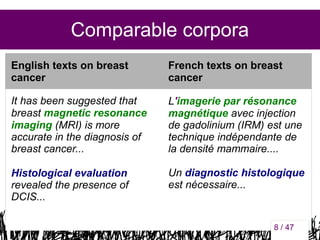

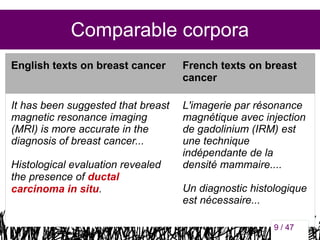

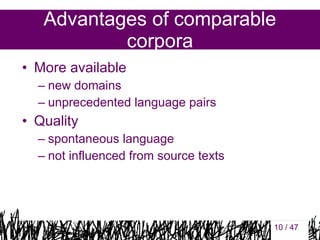

comparable corpora

• Precision:

– percentage of output translations which are in

the reference when you take into account

the Top 20 or Top 10 candidate

translations

• State-of-the-art:

– between 42% and 80% on Top 20

depending on corpus size, corpus type,

nature of translated elements [Morin and

Daille, 2009]

13

13 / 47](https://image.slidesharecdn.com/evaluation-bilingual-terminologies-131201192710-phpapp01/85/Applicative-evaluation-of-bilingual-terminologies-13-320.jpg)
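The Top-N precision measure above can be sketched in Python. This is a minimal sketch assuming one common reading of the measure: a source term counts as correctly translated if at least one of its reference translations appears among its first N ranked candidates, and the score is the percentage of evaluated source terms for which this holds. The function name `precision_at_n` and the toy French/English entries are hypothetical and do not come from the slides.

```python
from typing import Dict, List, Set


def precision_at_n(
    candidates: Dict[str, List[str]],
    reference: Dict[str, Set[str]],
    n: int,
) -> float:
    """Return Top-n precision as a percentage.

    Assumed reading: a source term scores a hit if at least one of its
    reference translations occurs among its first n ranked candidates.
    Source terms absent from the reference are not evaluated.
    """
    evaluated = [term for term in candidates if term in reference]
    if not evaluated:
        return 0.0  # nothing to score
    hits = sum(
        1
        for term in evaluated
        if any(cand in reference[term] for cand in candidates[term][:n])
    )
    return 100.0 * hits / len(evaluated)


# Hypothetical toy data: French source terms with ranked English candidates.
SAMPLE_CANDIDATES = {
    "sein": ["breast", "chest", "bosom"],
    "tumeur": ["growth", "mass", "tumour"],
    "eau": ["river", "lake"],
}
SAMPLE_REFERENCE = {
    "sein": {"breast"},
    "tumeur": {"tumor", "tumour"},
    "eau": {"water"},
}

if __name__ == "__main__":
    for n in (1, 3):
        score = precision_at_n(SAMPLE_CANDIDATES, SAMPLE_REFERENCE, n)
        print(f"Top {n}: {score:.1f}%")
```

Because the toy candidate lists are short, the example uses N = 1 and N = 3 instead of the Top 10 and Top 20 cut-offs cited on the slide. It prints `Top 1: 33.3%` and `Top 3: 66.7%`: widening the cut-off lets "tumour" count as a hit, while "eau" never matches.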