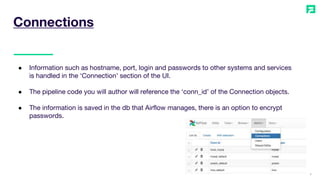

This document gives an overview of Apache Airflow, an open-source workflow management platform for data pipelines. It describes how Airflow lets users programmatically author and schedule workflows (data pipelines) and monitor them via a GUI. It also outlines key Airflow concepts: DAGs (directed acyclic graphs), tasks, operators, sensors, XComs (cross-communication), connections, variables, and executors, which allow tasks to run in parallel.
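
To make the DAG, task, and XCom ideas above more concrete, here is a minimal plain-Python sketch. It deliberately does not use Airflow's API. It only illustrates the underlying idea: tasks form a directed acyclic graph, each task runs after the tasks it depends on, and a task can read values produced upstream. The task names (`extract`, `transform`, `load`), the `run_pipeline` helper, and the `upstream` dictionary are all hypothetical and chosen purely for illustration.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical tasks. Each receives a dict of upstream results
# (a rough stand-in for XCom-style value passing) and returns its own result.
def extract(upstream):
    return [1, 2, 3]

def transform(upstream):
    return [x * 10 for x in upstream["extract"]]

def load(upstream):
    return sum(upstream["transform"])

tasks = {"extract": extract, "transform": transform, "load": load}

# Map each task to the set of tasks it depends on (its upstream tasks).
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

def run_pipeline(tasks, dependencies):
    """Run tasks in dependency order; return (execution order, results by task)."""
    results = {}
    order = []
    # static_order() yields each task only after all of its dependencies,
    # and raises graphlib.CycleError if the graph is not acyclic.
    for name in TopologicalSorter(dependencies).static_order():
        upstream = {dep: results[dep] for dep in dependencies[name]}
        results[name] = tasks[name](upstream)
        order.append(name)
    return order, results

order, results = run_pipeline(tasks, dependencies)
print(order)            # ['extract', 'transform', 'load']
print(results["load"])  # 60
```

This sketch runs tasks one at a time in a single process. In Airflow, scheduling and parallel execution are handled by the scheduler and the configured executor, and tasks are typically defined through operators rather than bare functions.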