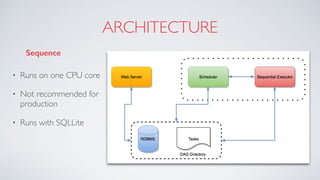

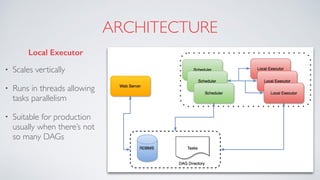

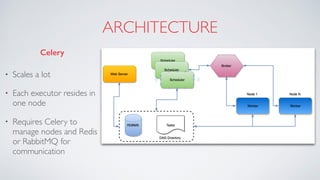

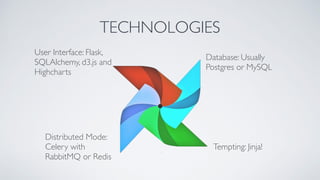

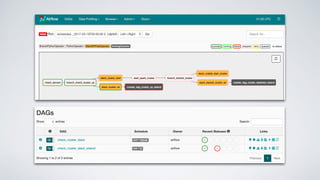

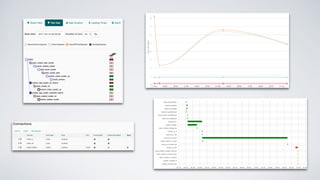

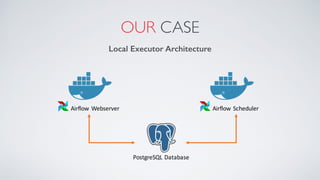

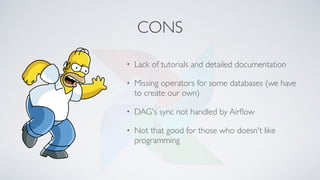

Airflow is an open-source platform for authoring and monitoring data pipelines. Pipelines are configured as code, which also allows them to be generated dynamically. It offers flexibility, efficient parallel task execution, and a rich user interface, and it runs on a single node or across multiple nodes, building primarily on technologies such as Flask and PostgreSQL. Its drawbacks include limited documentation, missing operators for certain databases, and issues synchronizing Directed Acyclic Graphs (DAGs).
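To make "configuration as code" and "dynamic generation" concrete, here is a minimal sketch that deliberately does not use Airflow's API. It relies only on Python's standard-library `graphlib` to build a small DAG in a loop and to group tasks into batches that could run in parallel. The table names and the helpers `build_pipeline` and `parallel_batches` are hypothetical and exist only for illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical table names; in practice these might come from a config file.
TABLES = ["users", "orders", "payments"]


def build_pipeline(tables):
    """Return a mapping of task name -> set of upstream task names."""
    deps = {"start": set()}
    for table in tables:
        extract = f"extract_{table}"
        load = f"load_{table}"
        deps[extract] = {"start"}   # every extract waits for "start"
        deps[load] = {extract}      # each load waits for its own extract
    # The final report waits for every load task.
    deps["report"] = {f"load_{t}" for t in tables}
    return deps


def parallel_batches(deps):
    """Group tasks into batches whose dependencies are already satisfied."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    batches = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks runnable right now
        batches.append(ready)
        sorter.done(*ready)                 # mark the whole batch finished
    return batches


pipeline = build_pipeline(TABLES)
batches = parallel_batches(pipeline)
for index, batch in enumerate(batches):
    print(index, batch)
```

Adding a table to `TABLES` adds an extract/load pair to the graph without any other change, which is the kind of dynamic generation the summary refers to. Tasks within one batch have no dependencies on each other, so a scheduler would be free to run them concurrently.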