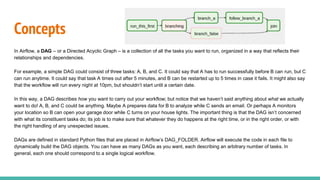

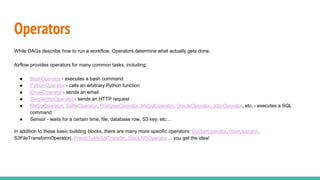

Apache Airflow is a platform to author, schedule, and monitor workflows as directed acyclic graphs (DAGs) of tasks. Workflows are defined as code, which makes them more maintainable, versionable, and collaborative. The rich user interface makes it easy to visualize pipelines and monitor their progress. Key concepts include DAGs, operators, hooks, pools, and XComs. Alternatives include Azkaban from LinkedIn and Apache Oozie for Hadoop workflows.
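
To make the "workflow as a DAG of tasks" idea concrete, here is a minimal sketch that uses only the Python standard library. It is not Airflow's API: the task functions, the `dependencies` mapping, and `run_workflow` are hypothetical names chosen for illustration. It only shows the underlying idea that tasks run in dependency order and that an upstream result can be handed to a downstream task, which is loosely the role XComs play in Airflow.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical tasks; in Airflow each would be wrapped by an operator.
def extract():
    return [1, 2, 3]

def transform(rows):
    return [r * 10 for r in rows]

def load(rows):
    return f"loaded {len(rows)} rows"

TASKS = {"extract": extract, "transform": transform, "load": load}

# Each task name maps to the set of upstream tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}

def run_workflow(dependencies):
    """Run every task after its upstream tasks, passing results downstream."""
    results = {}
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        upstream_results = [results[dep] for dep in sorted(dependencies[name])]
        results[name] = TASKS[name](*upstream_results)
    return order, results

order, results = run_workflow(dependencies)
print(order)
print(results["load"])
```

Because the workflow is ordinary code, the dependency mapping can be reviewed, versioned, and tested like any other module. A real scheduler such as Airflow adds scheduling, retries, and monitoring on top of this basic ordering.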