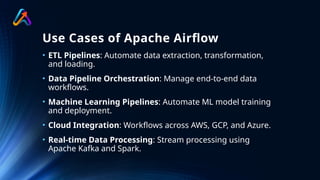

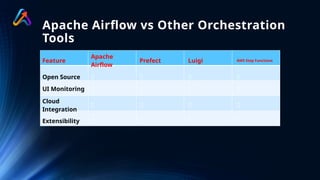

This PPT covers the fundamentals of Apache Airflow and workflow orchestration, including Airflow's architecture, key components, and real-world use cases. It highlights how Airflow schedules, automates, and monitors data pipelines. The presentation also introduces the Accentfuture Apache Airflow course for hands-on learning and career growth. 🚀