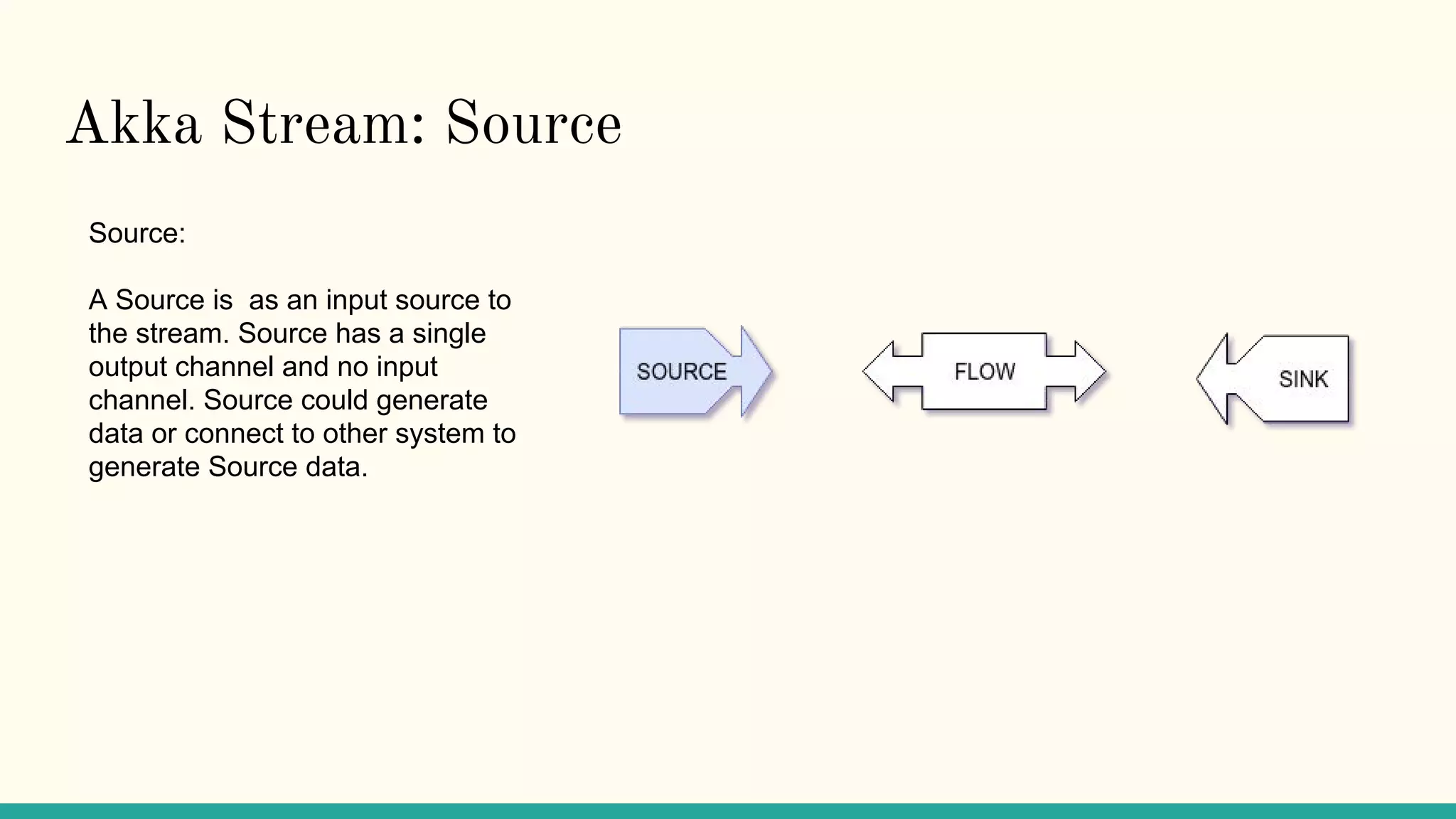

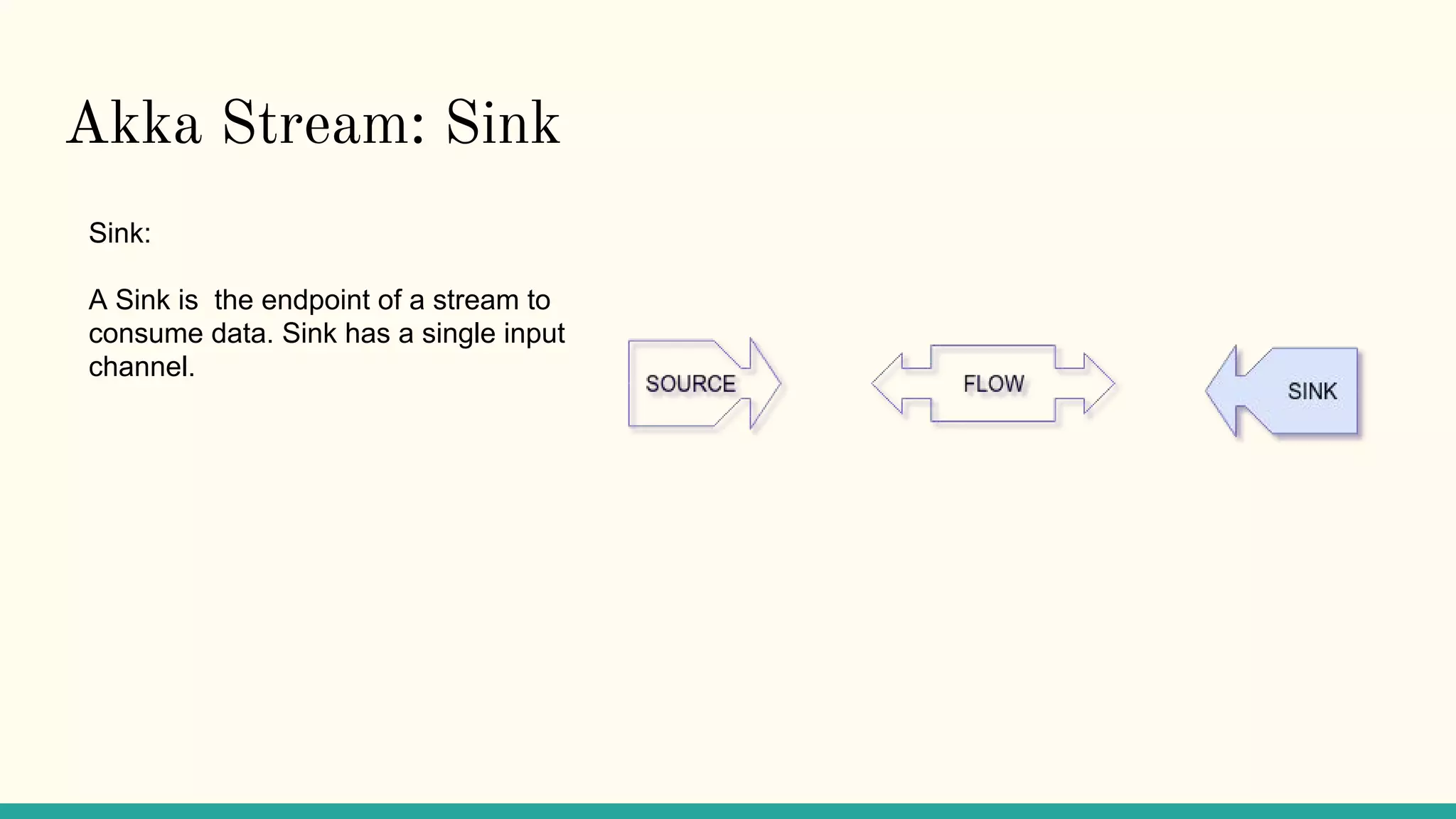

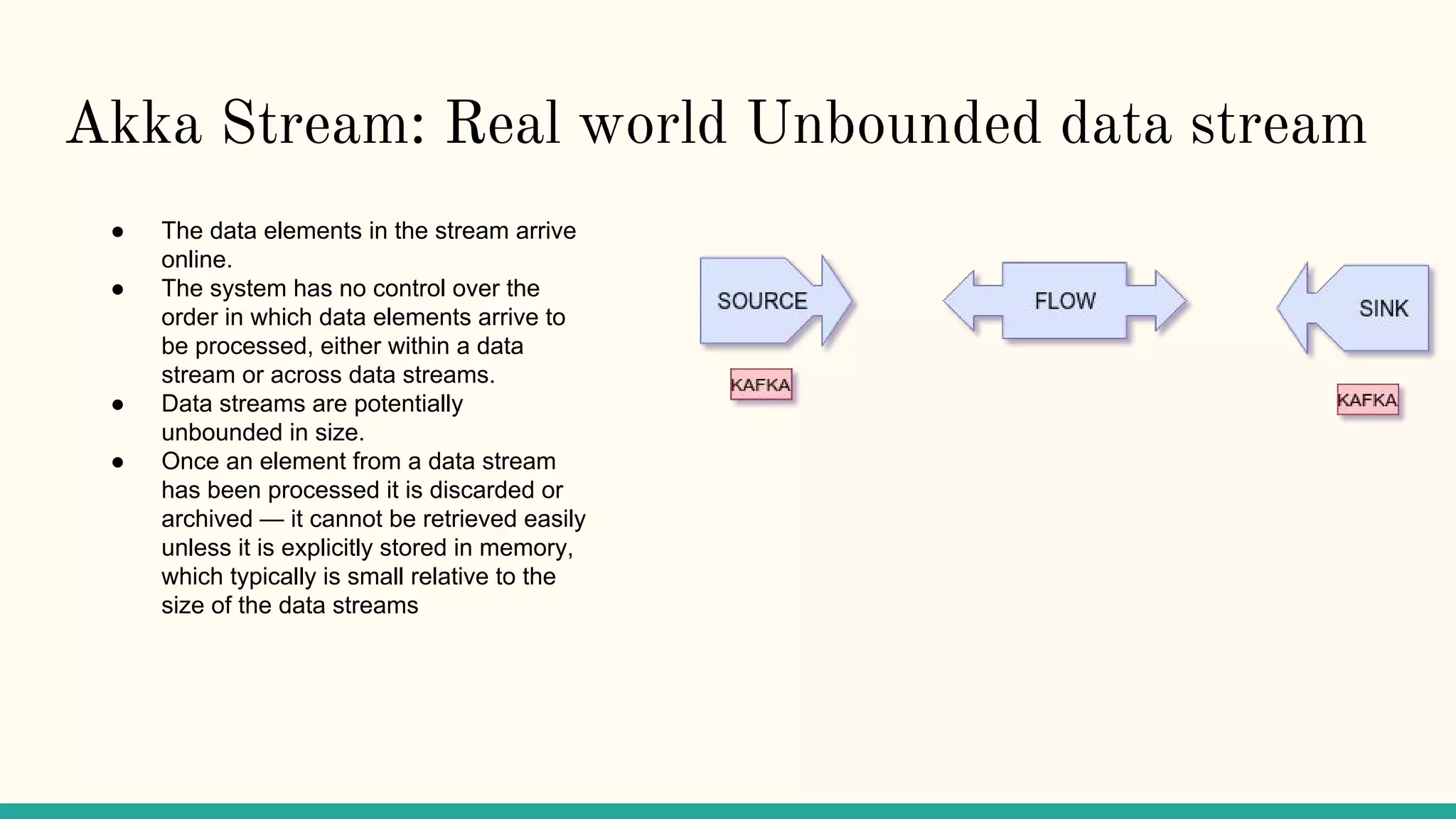

This document discusses Akka Streams and CQRS with Akka Persistence. It gives an overview of the core Akka Streams concepts (sources, flows, sinks, and materializers), then covers building complex graphs with GraphDSL and handling backpressure. For CQRS and event sourcing, it explains how Akka Persistence stores immutable events and replays them to reconstruct state, and how separating command and query responsibilities improves scalability compared with a shared database. Journal and snapshot storage in databases such as Cassandra is also summarized.
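The replay idea can be illustrated without any library at all. The following is a minimal sketch in plain Scala, not Akka Persistence code; the `Account`, `Deposited`, and `Withdrawn` names are hypothetical and chosen only for illustration.

```scala
object EventReplaySketch {
  // Hypothetical domain: events are immutable facts appended to a journal.
  sealed trait Event
  final case class Deposited(amount: Long) extends Event
  final case class Withdrawn(amount: Long) extends Event

  final case class Account(balance: Long) {
    // Applying an event returns a new state; nothing is mutated in place.
    def applyEvent(event: Event): Account = event match {
      case Deposited(a) => copy(balance = balance + a)
      case Withdrawn(a) => copy(balance = balance - a)
    }
  }

  // Replaying the journal from an initial state (or from a snapshot)
  // rebuilds the current state by folding the events in order.
  def replay(journal: Seq[Event], from: Account = Account(0)): Account =
    journal.foldLeft(from)((state, event) => state.applyEvent(event))
}
```

Passing a previously saved `Account` as `from` plays the role of a snapshot: only the events recorded after it need to be replayed.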

)

val flow = source to sink

flow.run()

}](https://image.slidesharecdn.com/akkastreamakkacqrs-170817013257/75/Akka-stream-and-Akka-CQRS-8-2048.jpg)
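The slide fragment above connects a source to a sink and runs the result. A complete version might look like the sketch below. It assumes Akka 2.6, where the materializer is derived from the implicit `ActorSystem` (older versions need an explicit `ActorMaterializer`); the object name and the doubling flow are illustrative, not taken from the slides.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.scaladsl.{Flow, Keep, RunnableGraph, Sink, Source}
import scala.concurrent.{Await, Future}
import scala.concurrent.duration._

object SourceToSinkSketch {
  def run(): Int = {
    // Assumption: Akka 2.6+, so no explicit materializer is created here.
    implicit val system: ActorSystem = ActorSystem("source-to-sink")
    try {
      val source: Source[Int, NotUsed] = Source(1 to 5)
      val double: Flow[Int, Int, NotUsed] = Flow[Int].map(_ * 2)
      val sink: Sink[Int, Future[Int]] = Sink.fold[Int, Int](0)(_ + _)

      // `source to sink` would keep the source's materialized value (NotUsed);
      // toMat with Keep.right keeps the sink's Future instead.
      val graph: RunnableGraph[Future[Int]] =
        source.via(double).toMat(sink)(Keep.right)

      // Nothing flows until run() materializes the blueprint.
      Await.result(graph.run(), 5.seconds)
    } finally system.terminate()
  }
}
```

The graph is only a description until `run()` is called, which is why the same `RunnableGraph` value can be materialized more than once.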

![Complex Graph GraphDSL

Akka Streams junctions :

Fan-out

● Broadcast[T] – (1 input, N outputs) given an input element emits to each output

● Balance[T] – (1 input, N outputs) given an input element emits to one of its output ports

● UnzipWith[In,A,B,...] – (1 input, N outputs) takes a function of 1 input that, given a value for each input, emits N output elements (where N <= 20)

● Unzip[A,B] – (1 input, 2 outputs) splits a stream of (A,B) tuples into two streams, one of type A and one of type B

Fan-in

● Merge[In] – (N inputs , 1 output) picks randomly from inputs pushing them one by one to its output

● MergePreferred[In] – like Merge, but if elements are available on the preferred port it picks from it, otherwise randomly from the others

● ZipWith[A,B,...,Out] – (N inputs, 1 output) takes a function of N inputs that, given a value for each input, emits 1 output element

● Zip[A,B] – (2 inputs, 1 output) is a ZipWith specialised to zipping input streams of A and B into an (A,B) tuple stream

● Concat[A] – (2 inputs, 1 output) concatenates two streams (first consume one, then the second one)](https://image.slidesharecdn.com/akkastreamakkacqrs-170817013257/75/Akka-stream-and-Akka-CQRS-13-2048.jpg)
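To show how the junctions listed above are wired together, here is a small sketch that fans out with `Broadcast` and fans back in with `Merge`. It assumes Akka 2.6 (materializer derived from the implicit `ActorSystem`); the object name and the two mapping flows are made up for the example.

```scala
import akka.actor.ActorSystem
import akka.stream.ClosedShape
import akka.stream.scaladsl.{Broadcast, Flow, GraphDSL, Merge, RunnableGraph, Sink, Source}
import scala.concurrent.Await
import scala.concurrent.duration._

object FanOutFanInSketch {
  def run(): Seq[Int] = {
    // Assumption: Akka 2.6+, so no explicit materializer is created here.
    implicit val system: ActorSystem = ActorSystem("graph-sketch")
    try {
      val collect = Sink.seq[Int]

      // Passing the sink to create() exposes its materialized Future.
      val graph = RunnableGraph.fromGraph(GraphDSL.create(collect) { implicit builder => out =>
        import GraphDSL.Implicits._
        val bcast = builder.add(Broadcast[Int](2)) // 1 input, 2 outputs
        val merge = builder.add(Merge[Int](2))     // 2 inputs, 1 output

        Source(1 to 3) ~> bcast
        bcast ~> Flow[Int].map(_ * 10)  ~> merge
        bcast ~> Flow[Int].map(_ * 100) ~> merge
        merge ~> out
        ClosedShape
      })

      Await.result(graph.run(), 5.seconds)
    } finally system.terminate()
  }
}
```

Because `Merge` makes no ordering promise between its inputs, the collected elements should be sorted (or compared as a set) before checking them.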

![Akka Stream Backpressure

// 1000 jobs are dequeued

// Getting a stream of jobs from an imaginary external system as a Source

val jobs: Source[Job, NotUsed] = inboundJobsConnector()

jobs.buffer(1000, OverflowStrategy.backpressure)

jobs.buffer(1000, OverflowStrategy.dropTail)

jobs.buffer(1000, OverflowStrategy.dropNew)

jobs.buffer(1000, OverflowStrategy.dropHead)

jobs.buffer(1000, OverflowStrategy.dropBuffer)

jobs.buffer(1000, OverflowStrategy.fail)](https://image.slidesharecdn.com/akkastreamakkacqrs-170817013257/75/Akka-stream-and-Akka-CQRS-16-2048.jpg)
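Note that `buffer` returns a new `Source`; the calls on the slide discard that result, so the returned stage is what has to be run. The sketch below uses the `backpressure` strategy with a deliberately slow consumer. It assumes Akka 2.6, substitutes plain `Int` values for the slide's `Job` type, and uses a small buffer and a throttle rate picked only for the demonstration.

```scala
import akka.NotUsed
import akka.actor.ActorSystem
import akka.stream.OverflowStrategy
import akka.stream.scaladsl.{Sink, Source}
import scala.concurrent.Await
import scala.concurrent.duration._

object BufferSketch {
  def run(): Seq[Int] = {
    // Assumption: Akka 2.6+, so no explicit materializer is created here.
    implicit val system: ActorSystem = ActorSystem("buffer-sketch")
    try {
      // Stand-in for the inbound jobs source from the slide.
      val jobs: Source[Int, NotUsed] = Source(1 to 20)

      // buffer() does not modify `jobs`; keep and run the returned Source.
      val buffered = jobs.buffer(5, OverflowStrategy.backpressure)

      // A slow consumer: one element every 10 ms. With `backpressure`
      // the upstream is slowed down once the buffer is full, so nothing is lost.
      val result = buffered.throttle(1, 10.millis).runWith(Sink.seq[Int])
      Await.result(result, 5.seconds)
    } finally system.terminate()
  }
}
```

The other strategies trade completeness for throughput when the buffer is full: `dropHead` discards the oldest buffered element, `dropTail` the youngest buffered element, `dropNew` the incoming element, `dropBuffer` the whole buffer, and `fail` completes the stream with a failure.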