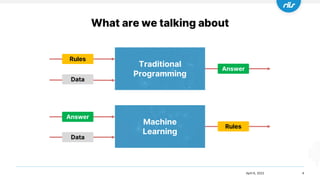

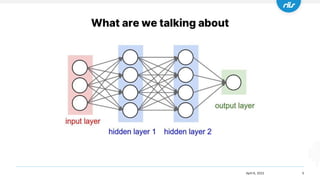

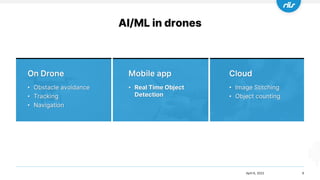

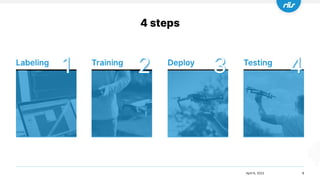

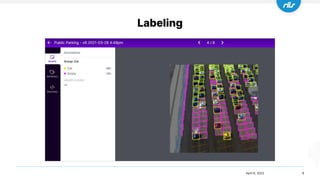

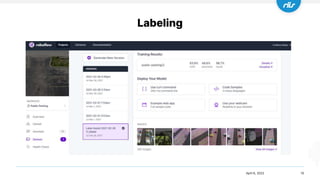

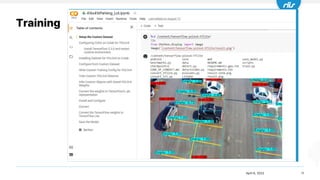

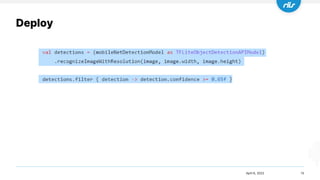

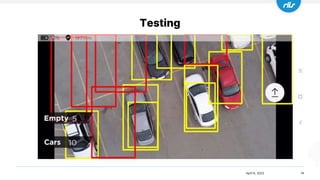

The document discusses the application of artificial intelligence (AI) and machine learning (ML) in drone technology, highlighting areas such as obstacle avoidance, navigation, and real-time object detection. It outlines a four-step process for implementing AI/ML in drones: labeling data, training models, deploying them, and testing the outcomes. The author also shares lessons learned, emphasizing the need for sufficient input data and for addressing ethical considerations.