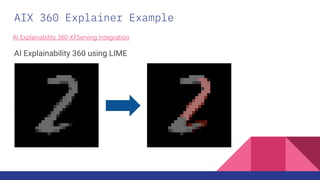

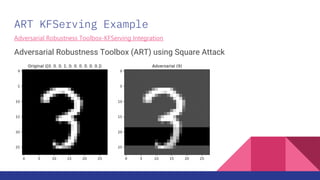

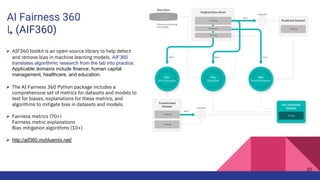

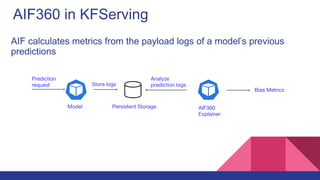

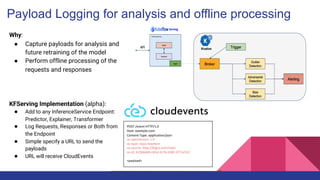

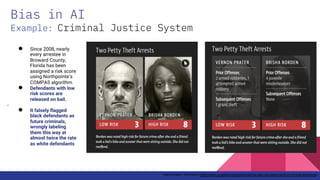

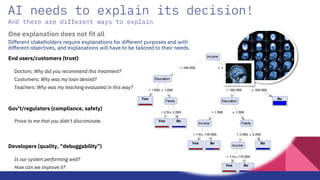

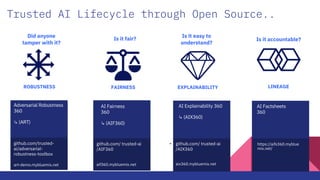

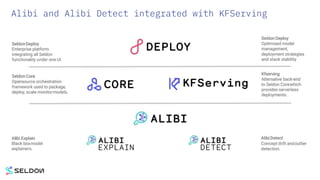

This document discusses approaches for adding trust, transparency, and accountability to AI models deployed with KFServing. It proposes integrating open-source tools for explainability (AIX360), fairness (AIF360), and adversarial robustness (ART) to analyze model payloads and provide explanations. The tools would calculate metrics from logged predictions to detect bias or anomalies. It presents designs for capturing KFServing events in brokers such as Kafka for offline processing, which would allow models to be audited over time to confirm that they continue to perform in a trustworthy way.
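As a rough illustration of calculating a fairness metric from logged predictions, the sketch below computes a disparate impact ratio (the favorable-outcome rate of an unprivileged group divided by that of a privileged group) in plain Python. The record layout (`sex`, `prediction`), the sample values, and the function name are assumptions made for this example, not the KFServing payload format or the AIF360 API.

```python
from collections import defaultdict


def disparate_impact(records, group_key, unprivileged, privileged, favorable=1):
    """Return the ratio of favorable-outcome rates: unprivileged / privileged.

    `records` is an iterable of dicts, each holding a protected attribute
    under `group_key` and a model output under "prediction" (hypothetical
    field names chosen for this sketch).
    """
    totals = defaultdict(int)
    favorable_counts = defaultdict(int)
    for rec in records:
        group = rec[group_key]
        totals[group] += 1
        if rec["prediction"] == favorable:
            favorable_counts[group] += 1

    if totals[unprivileged] == 0 or totals[privileged] == 0:
        raise ValueError("both groups need at least one logged prediction")

    privileged_rate = favorable_counts[privileged] / totals[privileged]
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes")

    unprivileged_rate = favorable_counts[unprivileged] / totals[unprivileged]
    return unprivileged_rate / privileged_rate


# Hypothetical logged predictions, e.g. after consuming events from a broker.
logged = [
    {"sex": "F", "prediction": 1},
    {"sex": "F", "prediction": 0},
    {"sex": "F", "prediction": 0},
    {"sex": "F", "prediction": 0},
    {"sex": "M", "prediction": 1},
    {"sex": "M", "prediction": 1},
    {"sex": "M", "prediction": 0},
    {"sex": "M", "prediction": 0},
]

ratio = disparate_impact(logged, "sex", unprivileged="F", privileged="M")
print(f"disparate impact: {ratio:.2f}")
```

A ratio well below 1 suggests the unprivileged group receives favorable predictions less often; a common rule of thumb flags values under 0.8. In an offline pipeline, a check like this could run periodically over the logged payloads, with a library such as AIF360 taking the place of the hand-rolled function.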

![Slide: AI Explainability and Adversarial Robustness in KFServing. Architecture diagram showing InferenceService YAML, Kubernetes API, ETCD, InferenceService Controller, Webhook Configmap (initial create), and the Explainer (Alibi, AIX360, ART), Transformer, and Predictor components, with Model Server, Model Agent, and Queue proxy.](https://image.slidesharecdn.com/kfserving-payload-logging-v1-210501194319/85/KFServing-Payload-Logging-for-Trusted-AI-14-320.jpg)