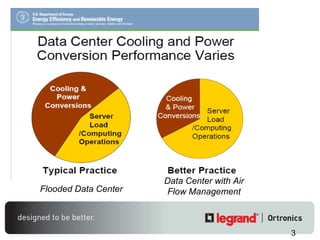

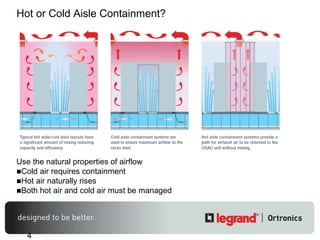

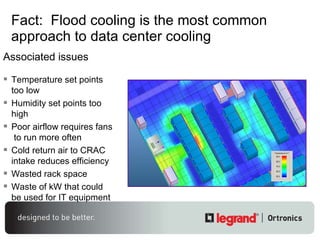

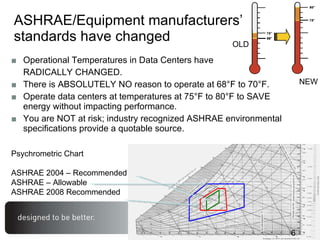

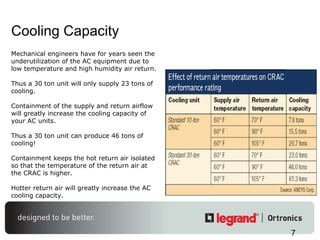

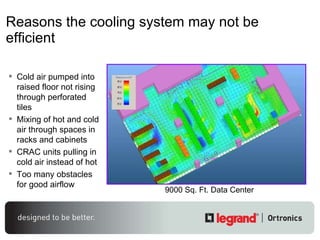

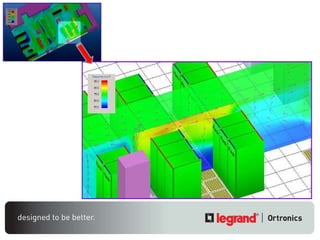

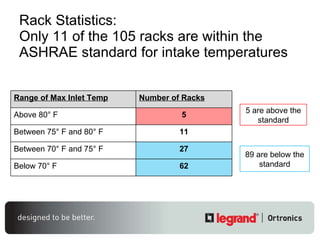

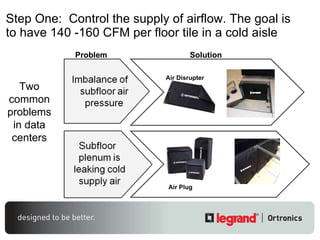

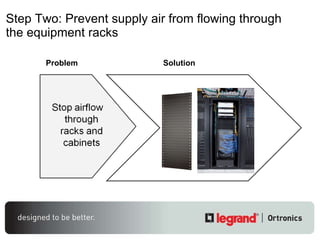

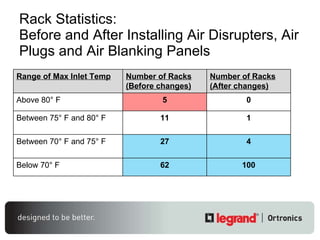

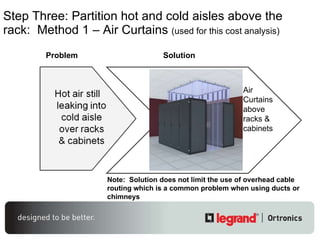

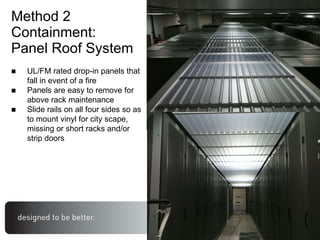

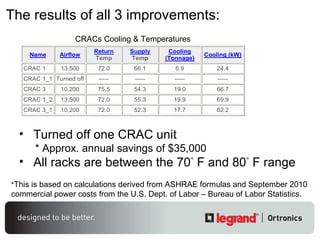

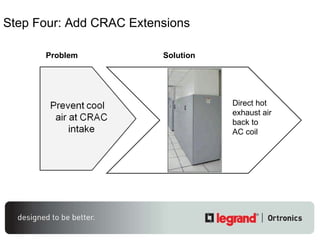

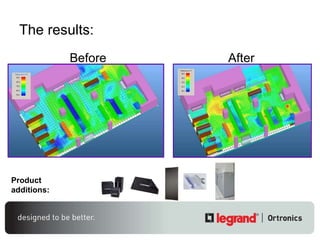

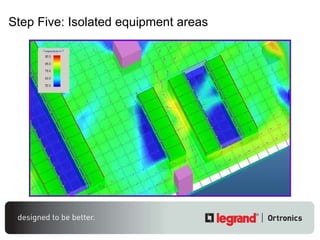

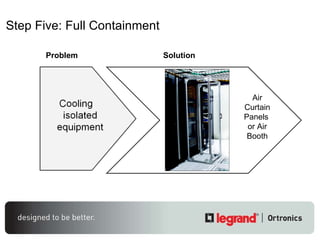

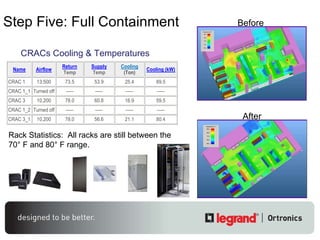

The document discusses how better airflow management in data centers improves cooling efficiency and reduces costs. It recommends containing hot and cold aisles, raising temperature and humidity set points, and installing products such as air curtains and blanking panels to keep hot and cold airflows separate. Implementing these strategies increased the number of server racks operating within the optimal temperature range, allowed one computer room air conditioning (CRAC) unit to be turned off for an estimated $35,000 in annual savings, and maximized the cooling capacity of the CRAC units.
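The two measurable outcomes above can be estimated with simple arithmetic. The sketch below is illustrative only. It assumes annual savings equal the switched-off unit's average power draw times operating hours times the electricity price. It also assumes the "optimal temperature range" is the ASHRAE recommended server inlet range of 18 to 27 °C. The function names and all input values (a hypothetical 20 kW unit, $0.12/kWh, and the sample rack temperatures) are invented for illustration. They are not the figures behind the document's $35,000 estimate.

```python
# Illustrative sketch: all inputs are hypothetical, not from the document.
HOURS_PER_YEAR = 24 * 365  # assumes continuous operation: 8,760 h

def annual_savings(unit_power_kw: float, price_per_kwh: float,
                   hours: float = HOURS_PER_YEAR) -> float:
    """Energy cost avoided per year by switching off one CRAC unit.

    Assumes a constant average power draw (kW) and a flat price per kWh.
    """
    return unit_power_kw * hours * price_per_kwh

# Assumption: "optimal range" taken as the ASHRAE recommended inlet range.
RECOMMENDED_LOW_C = 18.0
RECOMMENDED_HIGH_C = 27.0

def racks_in_range(inlet_temps_c, low=RECOMMENDED_LOW_C,
                   high=RECOMMENDED_HIGH_C) -> int:
    """Count racks whose measured inlet temperature lies within [low, high]."""
    return sum(1 for t in inlet_temps_c if low <= t <= high)

if __name__ == "__main__":
    # Hypothetical 20 kW unit at $0.12/kWh: 20 * 8,760 * 0.12
    print(f"${annual_savings(20, 0.12):,.0f} per year")  # prints $21,024 per year

    # Hypothetical rack inlet temperatures (deg C) before and after containment
    before = [16.5, 19.0, 28.3, 30.1, 22.4, 17.2]
    after = [19.5, 21.0, 24.8, 26.0, 22.9, 20.1]
    print(racks_in_range(before), "->", racks_in_range(after))  # prints 2 -> 6
```

In practice, the power figure would come from metering the specific CRAC unit, and the temperature band from the facility's own set points.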