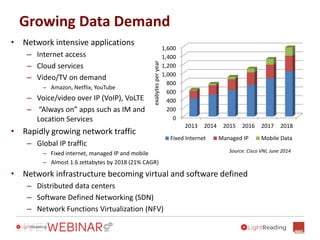

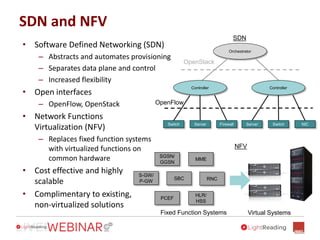

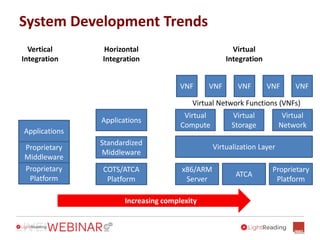

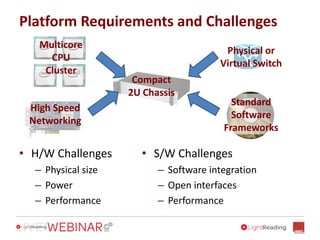

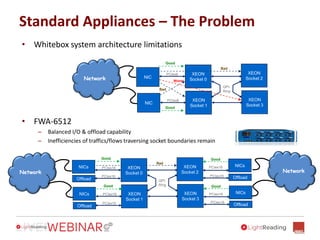

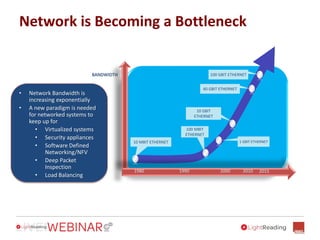

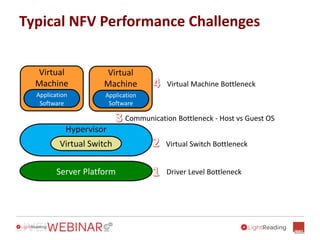

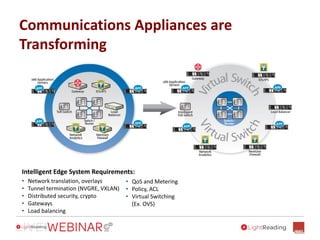

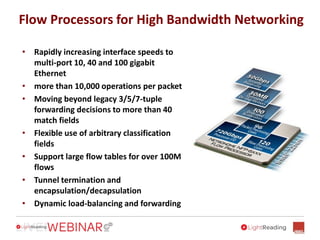

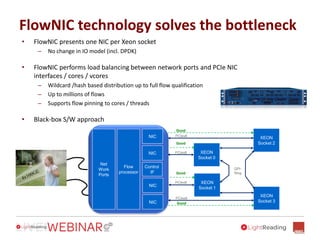

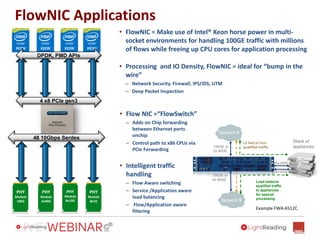

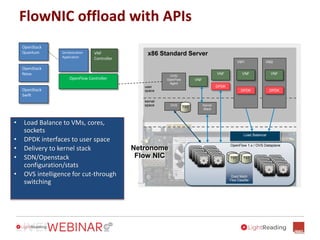

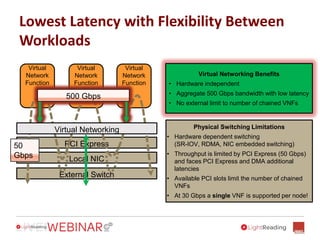

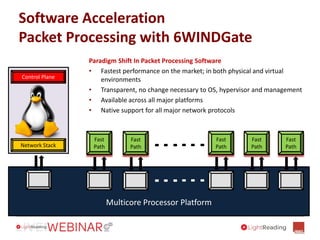

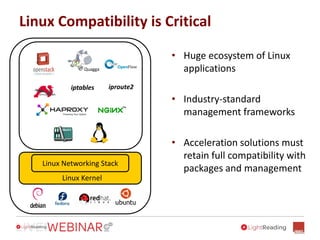

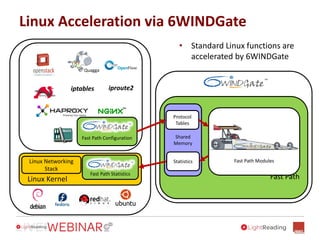

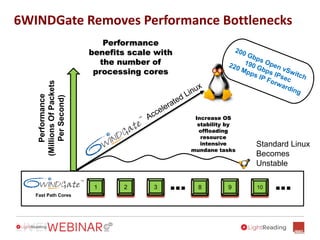

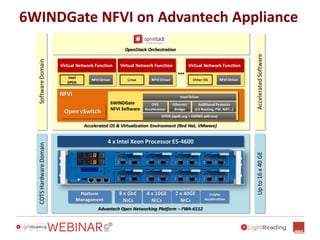

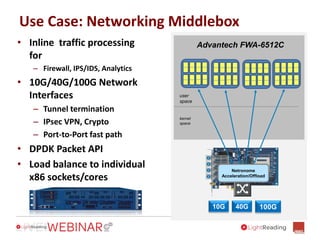

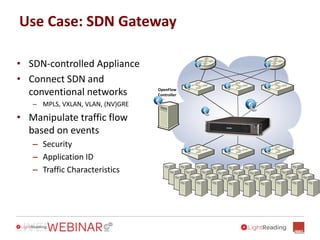

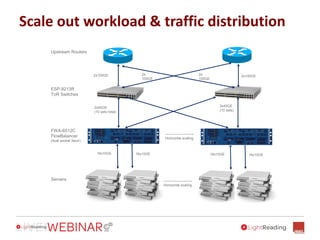

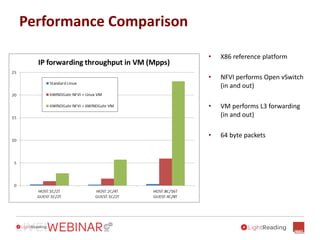

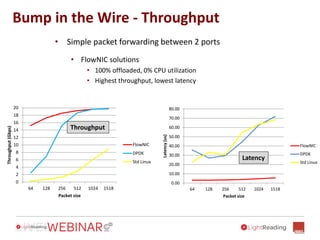

The document discusses the growing demand for networked applications and the challenge of virtualizing network infrastructure without sacrificing performance. It presents software acceleration techniques for networking, with example use cases that improve throughput and make more efficient use of resources. Key concepts include software-defined networking (SDN), network functions virtualization (NFV), and the need to remove bottlenecks in data flow to sustain network performance.