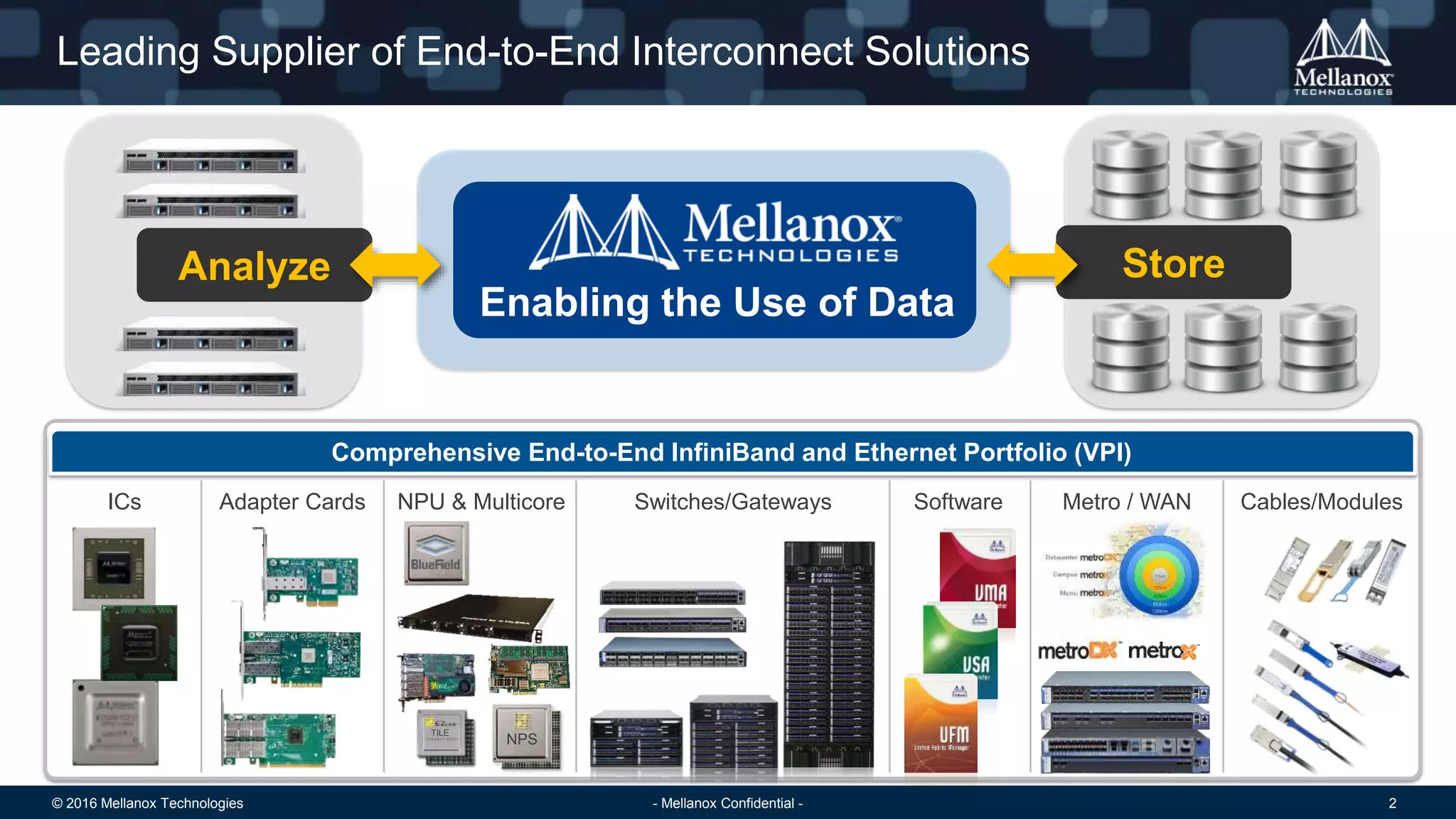

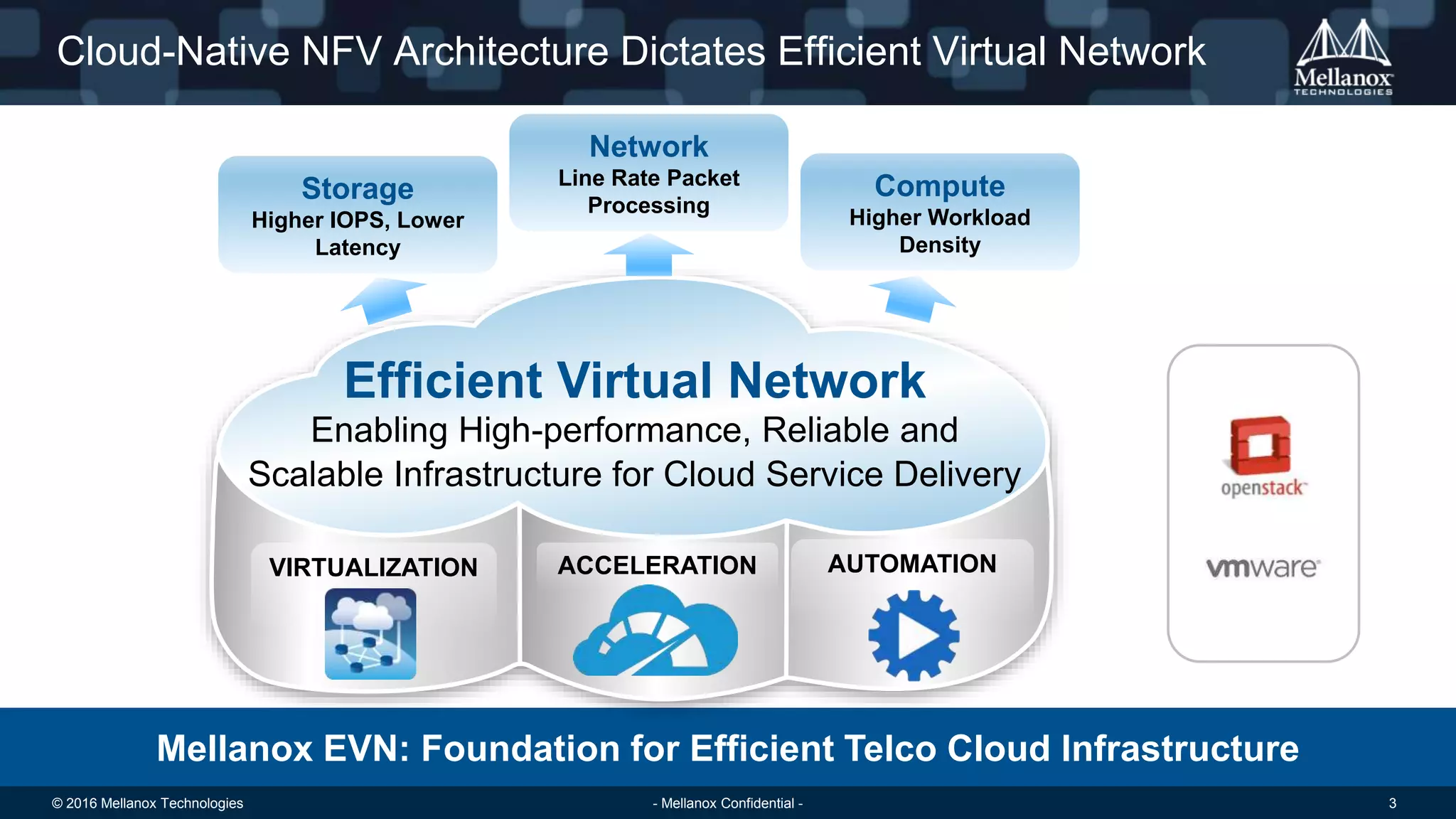

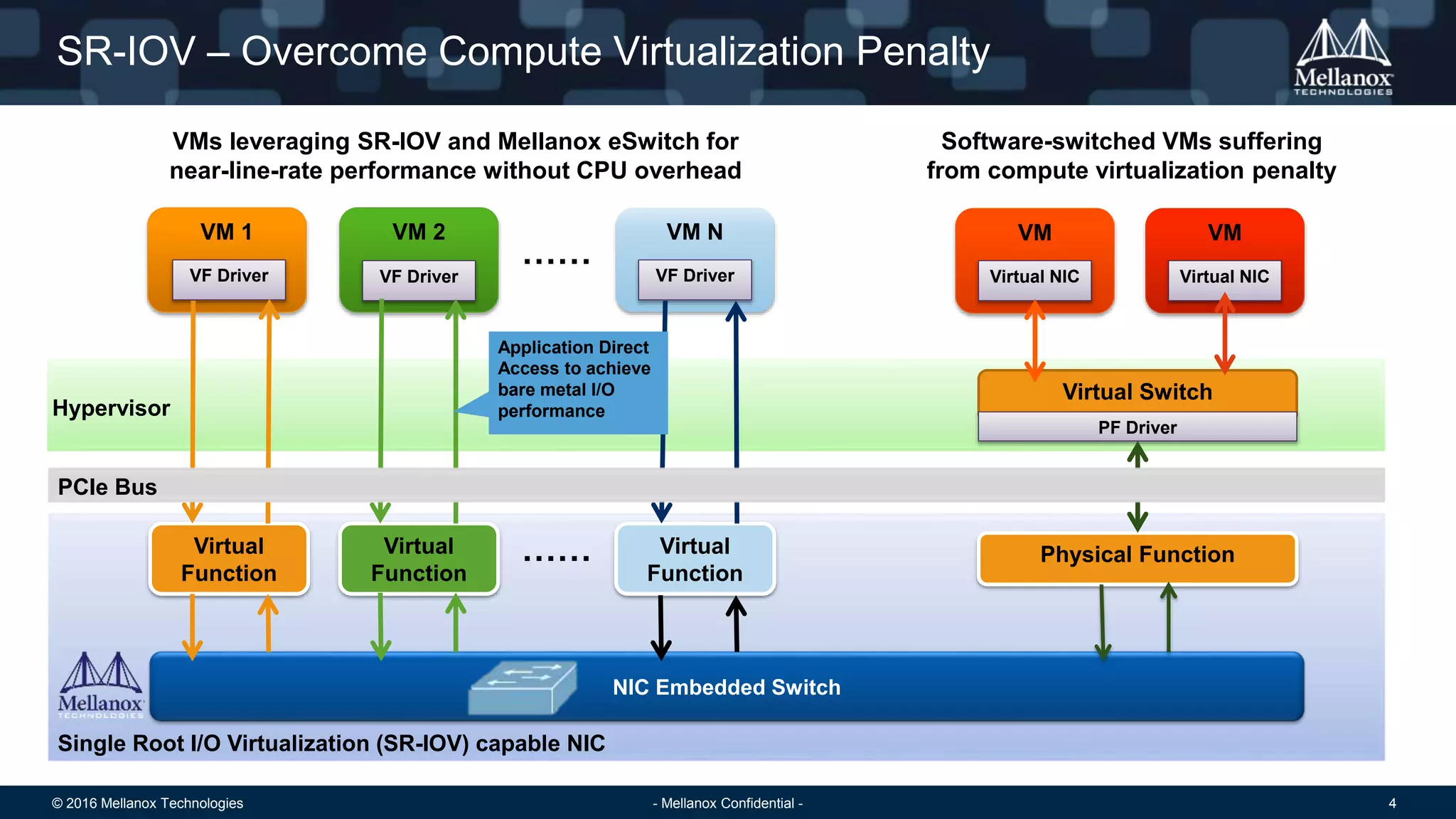

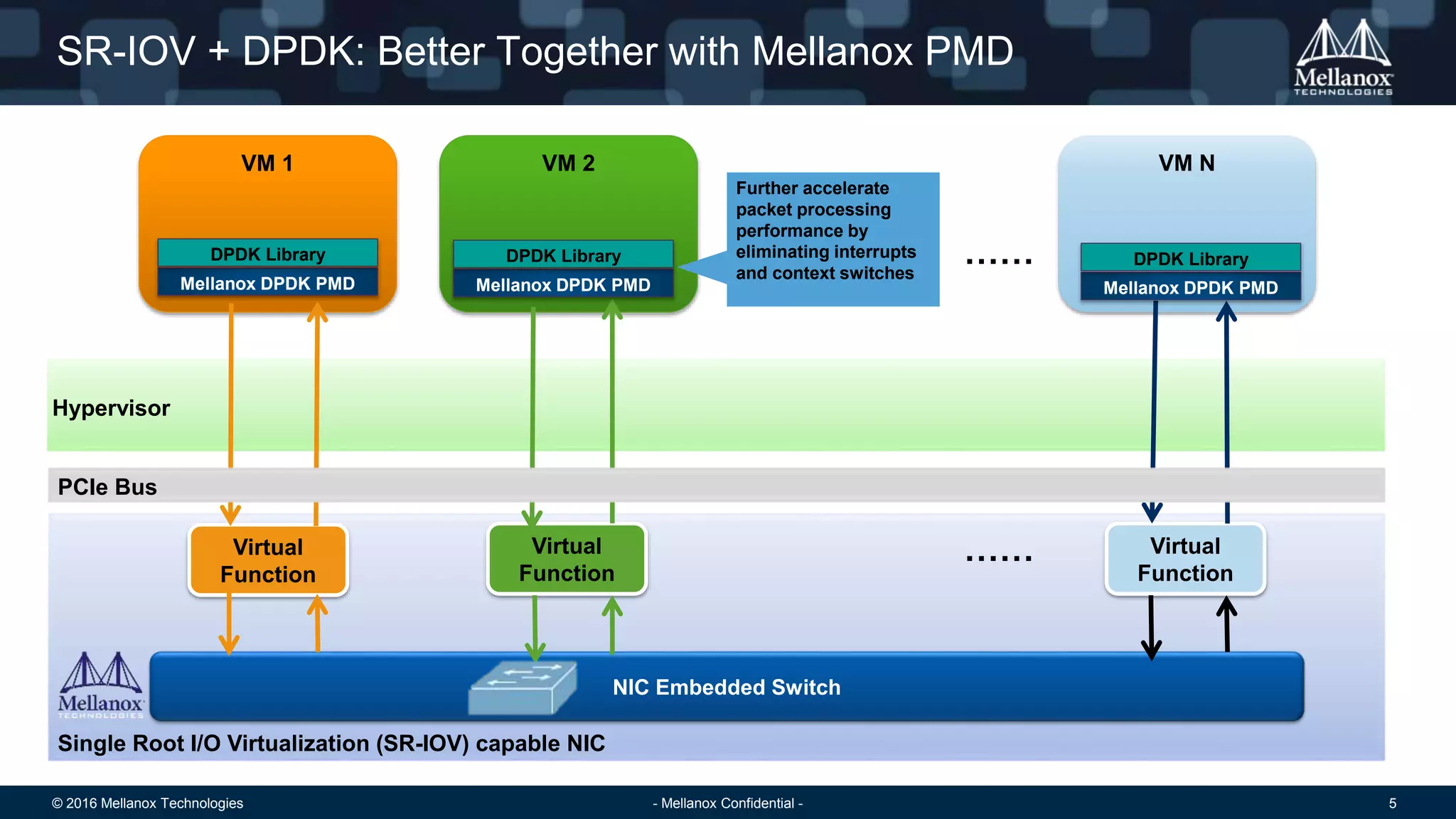

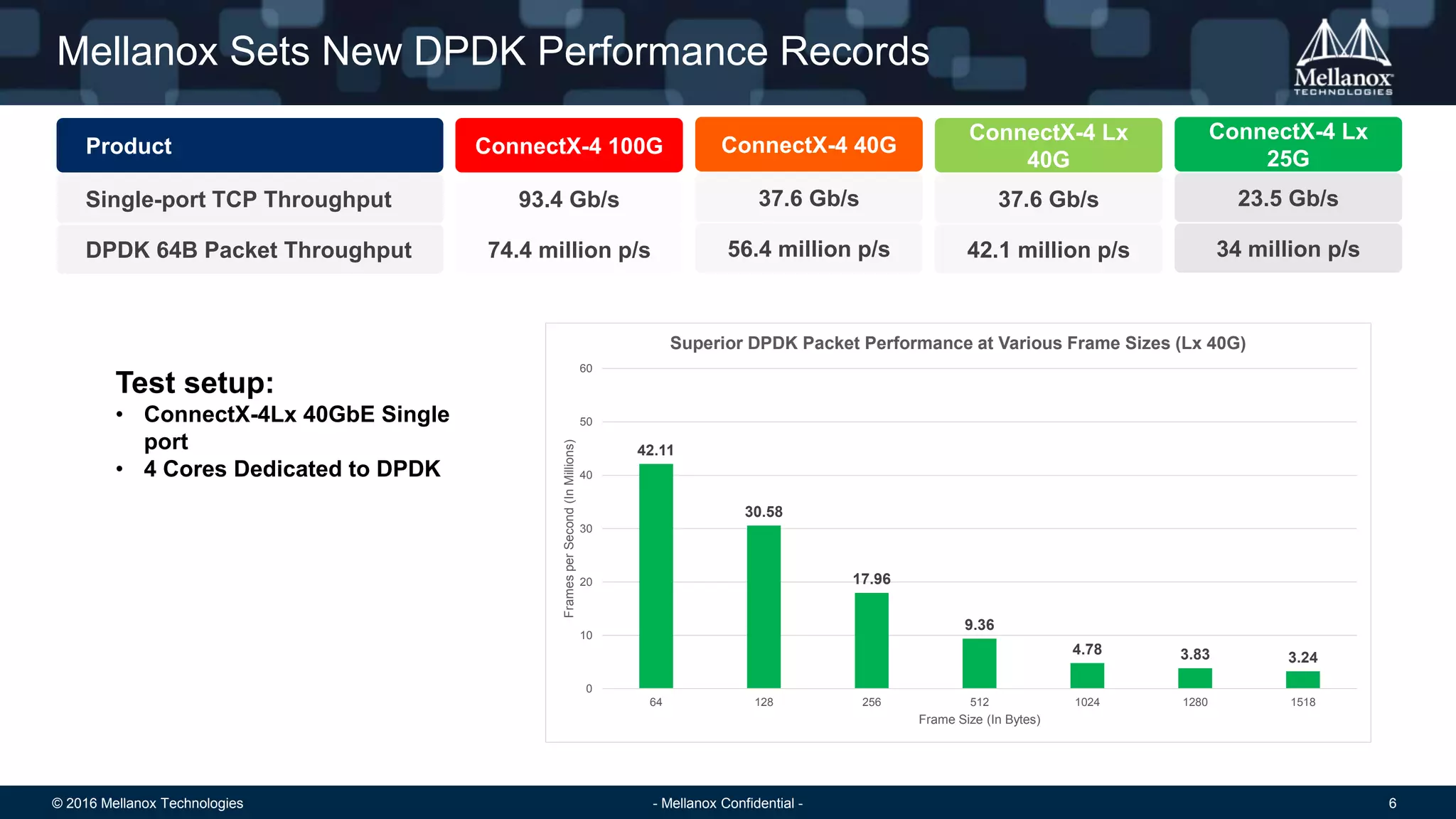

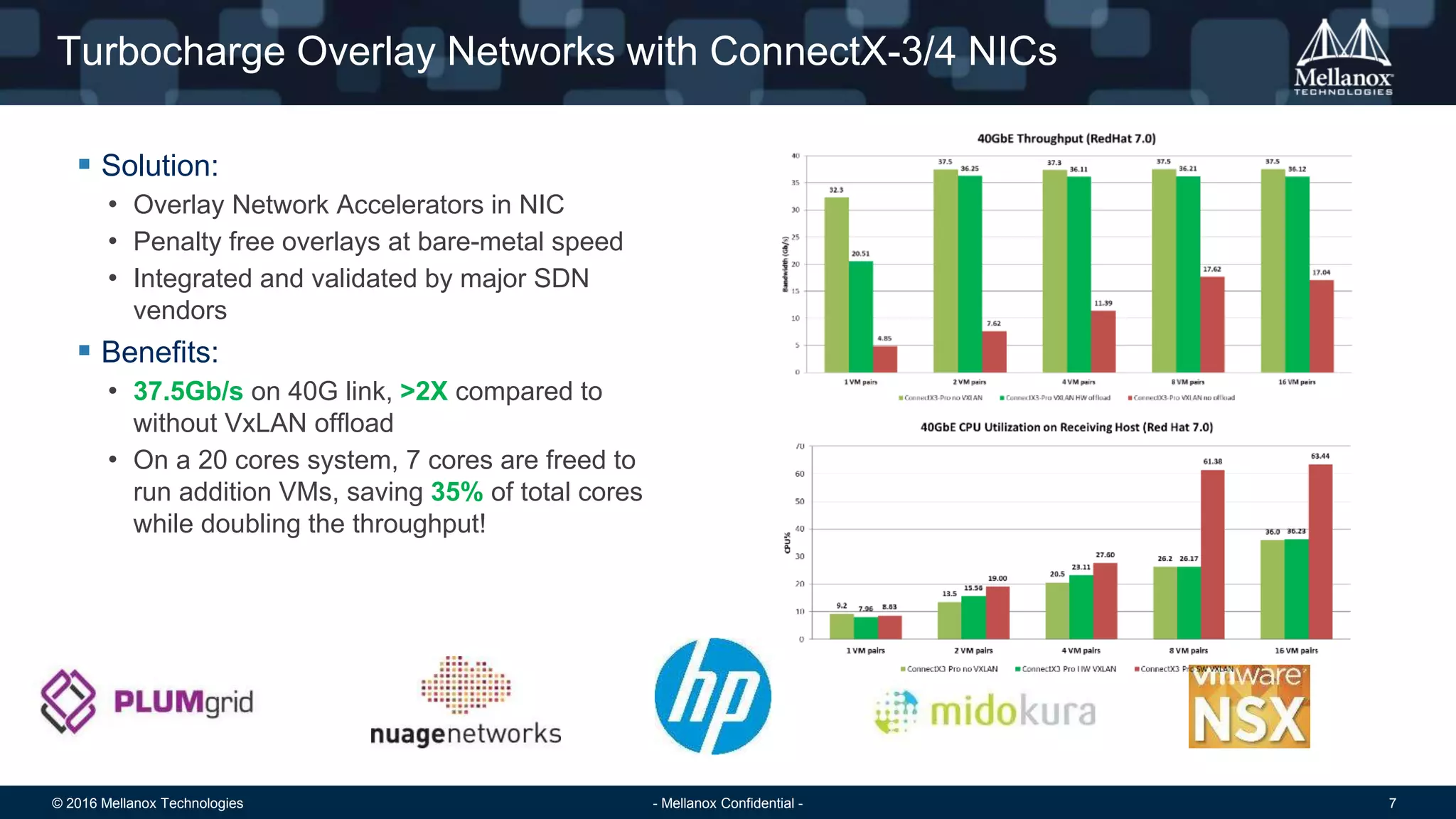

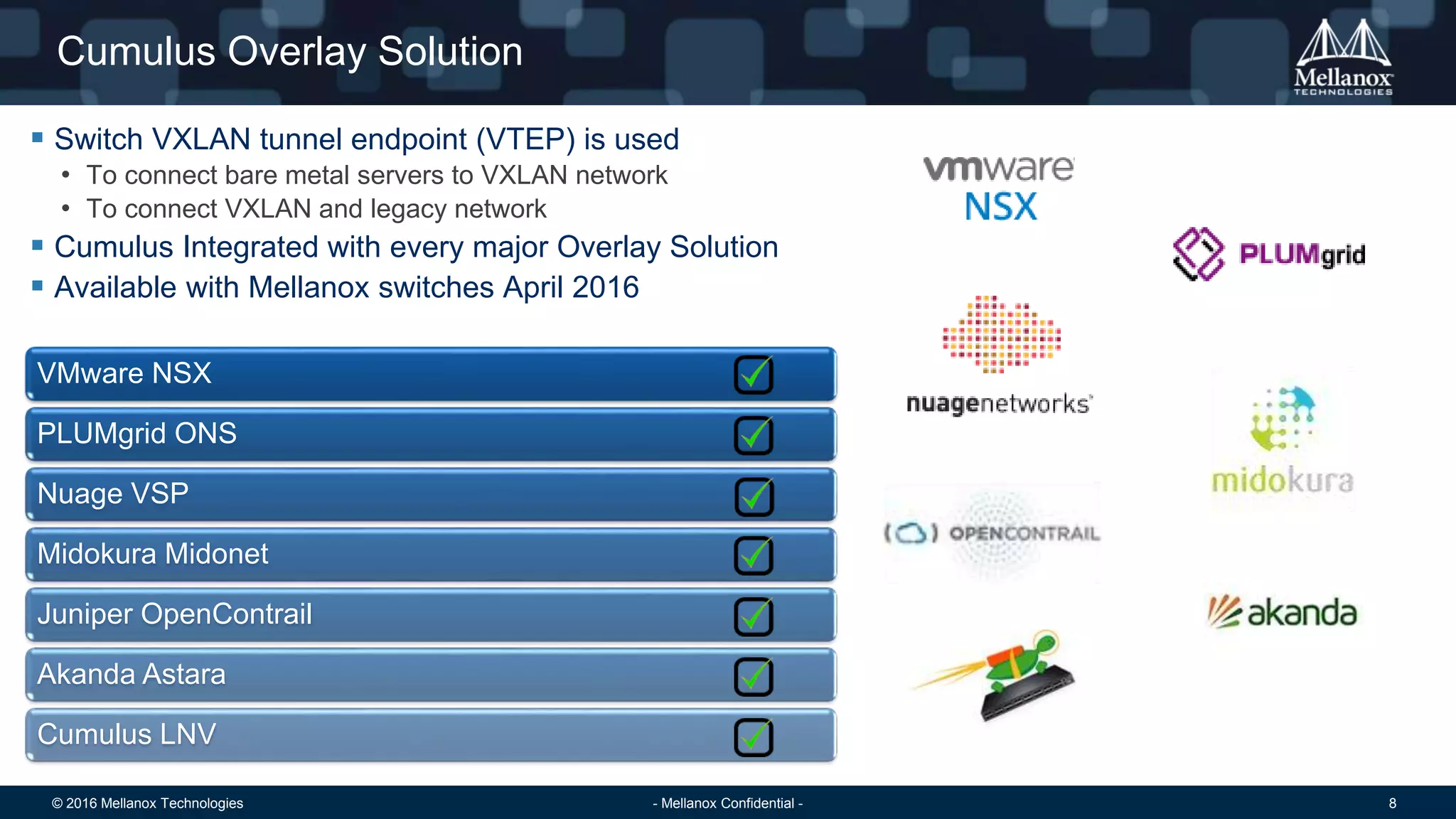

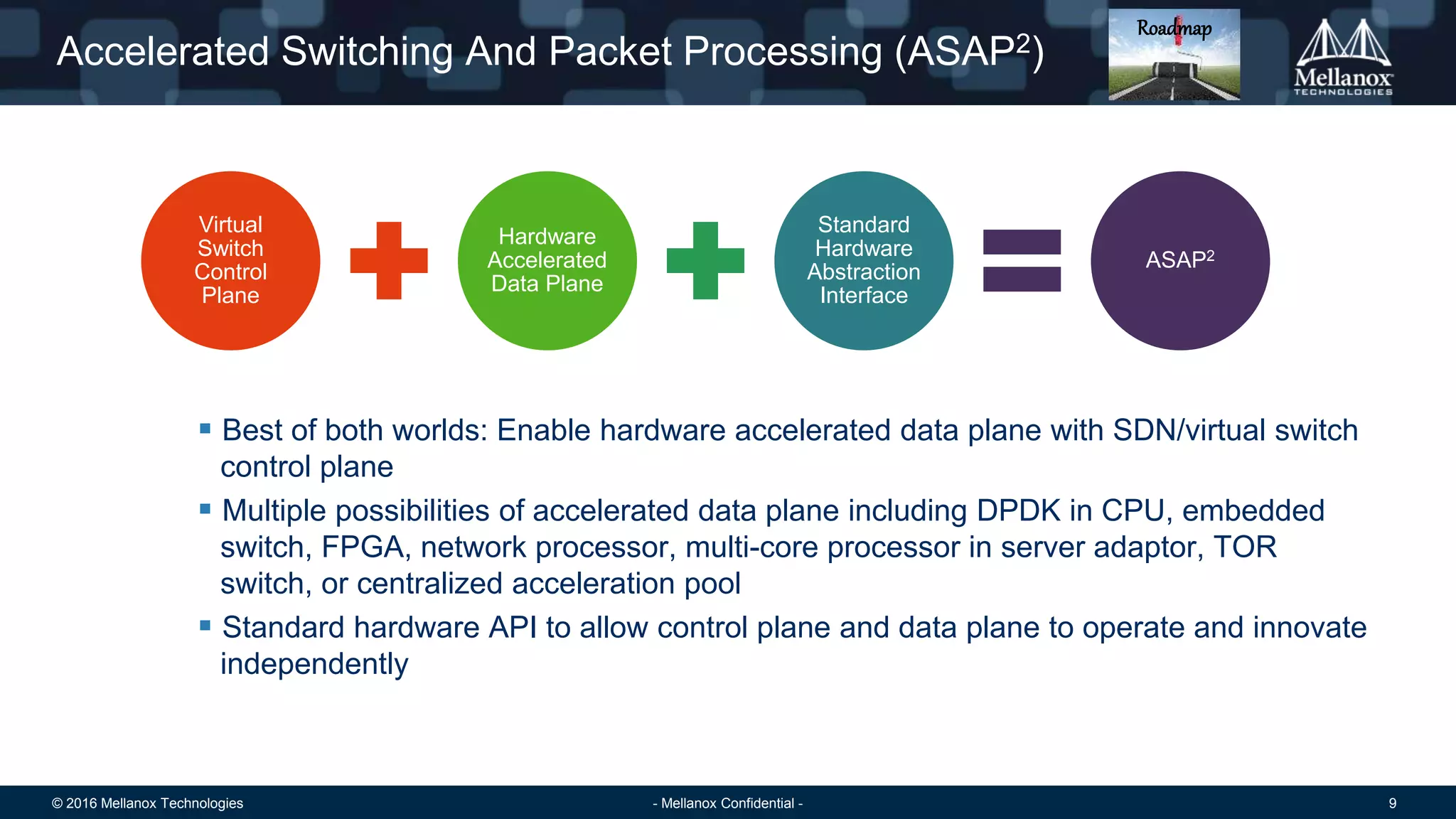

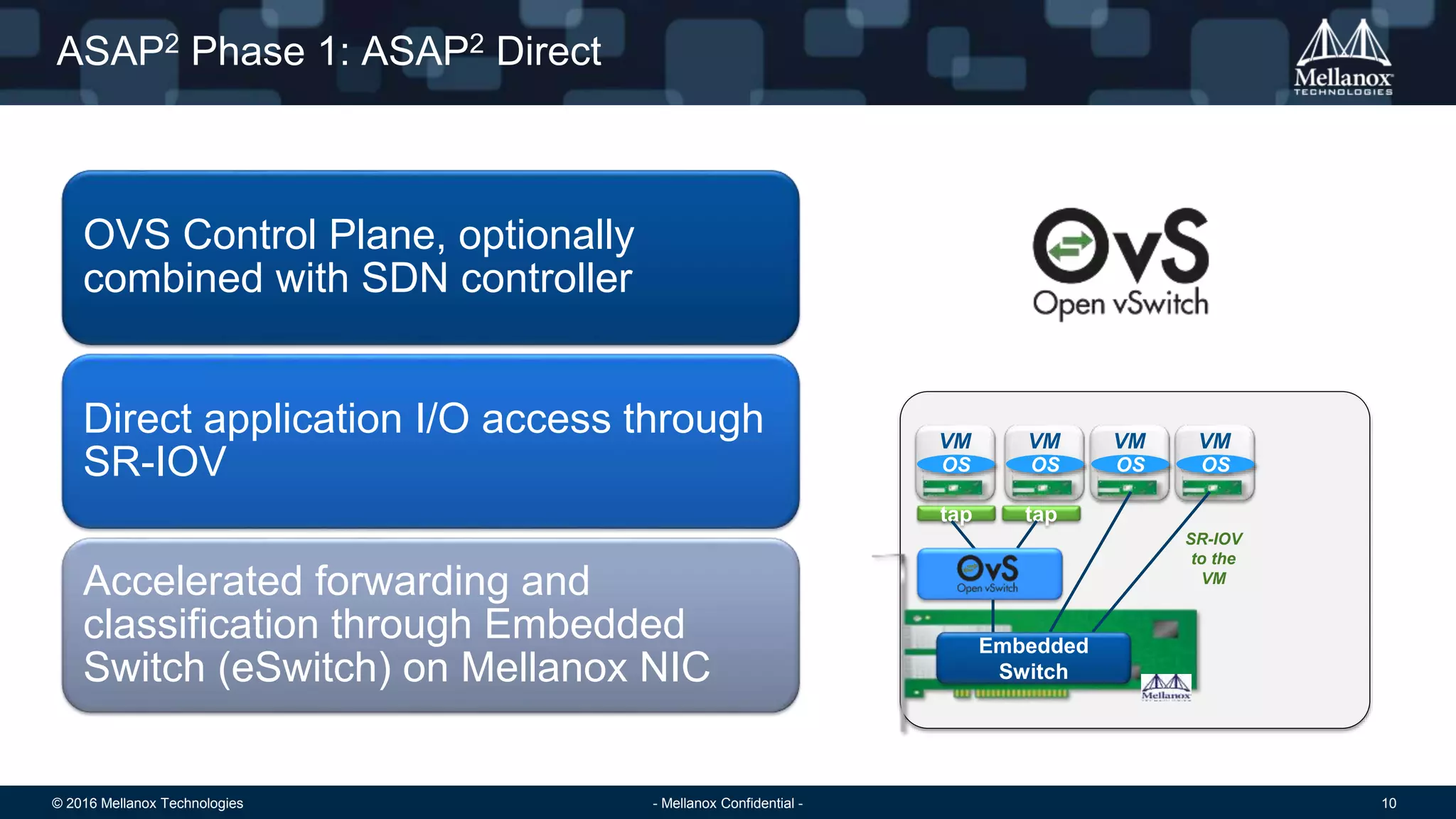

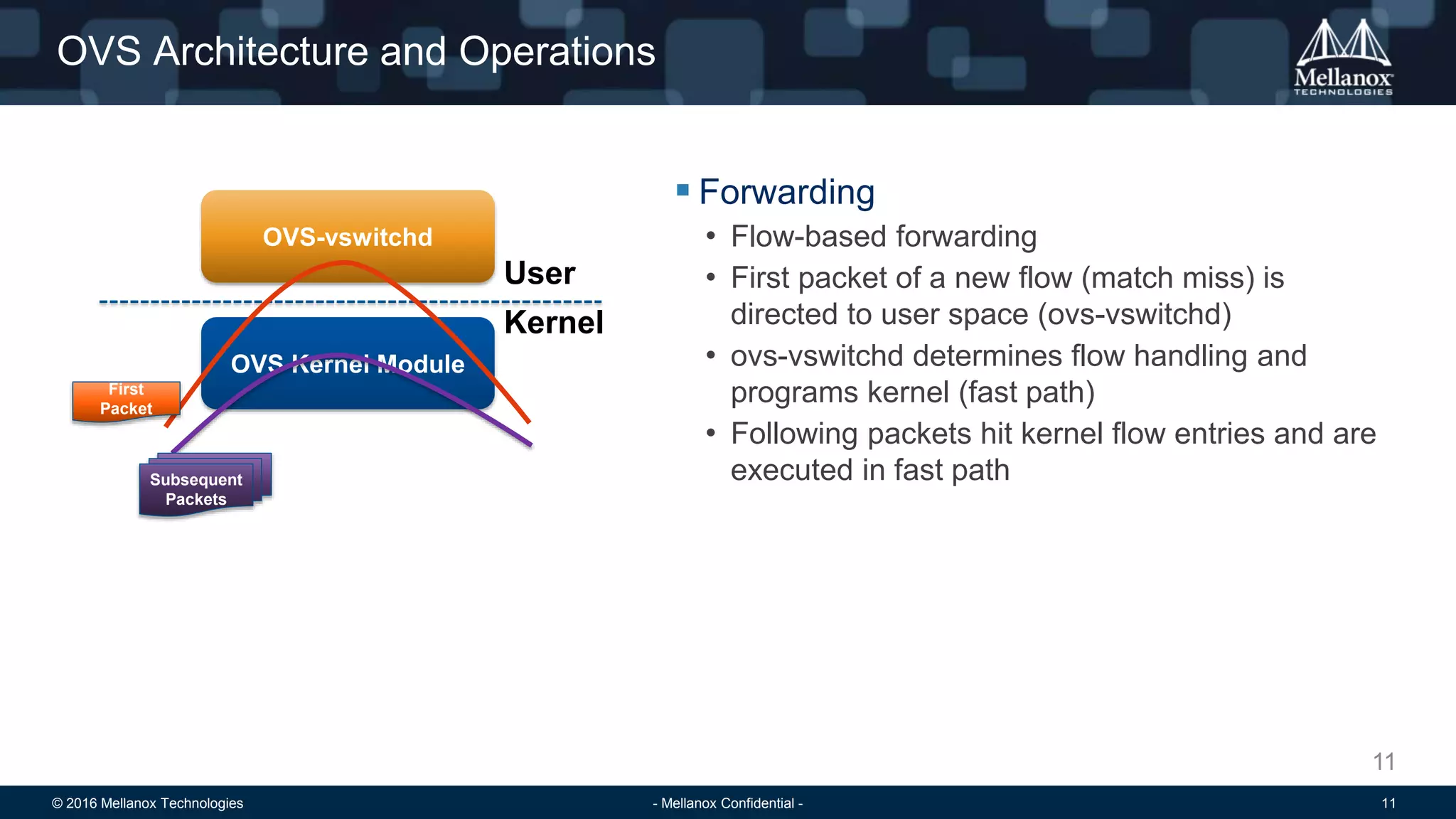

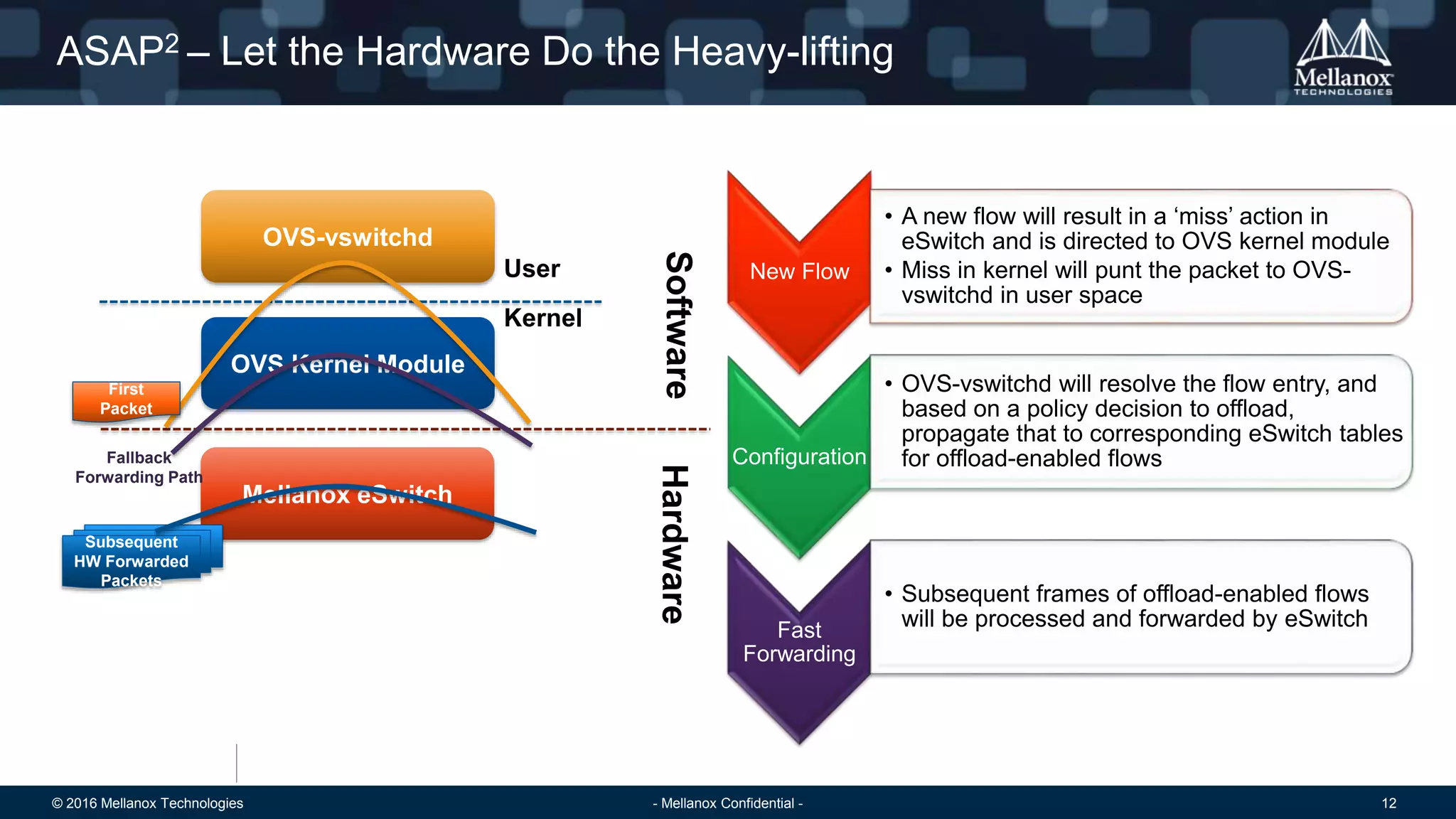

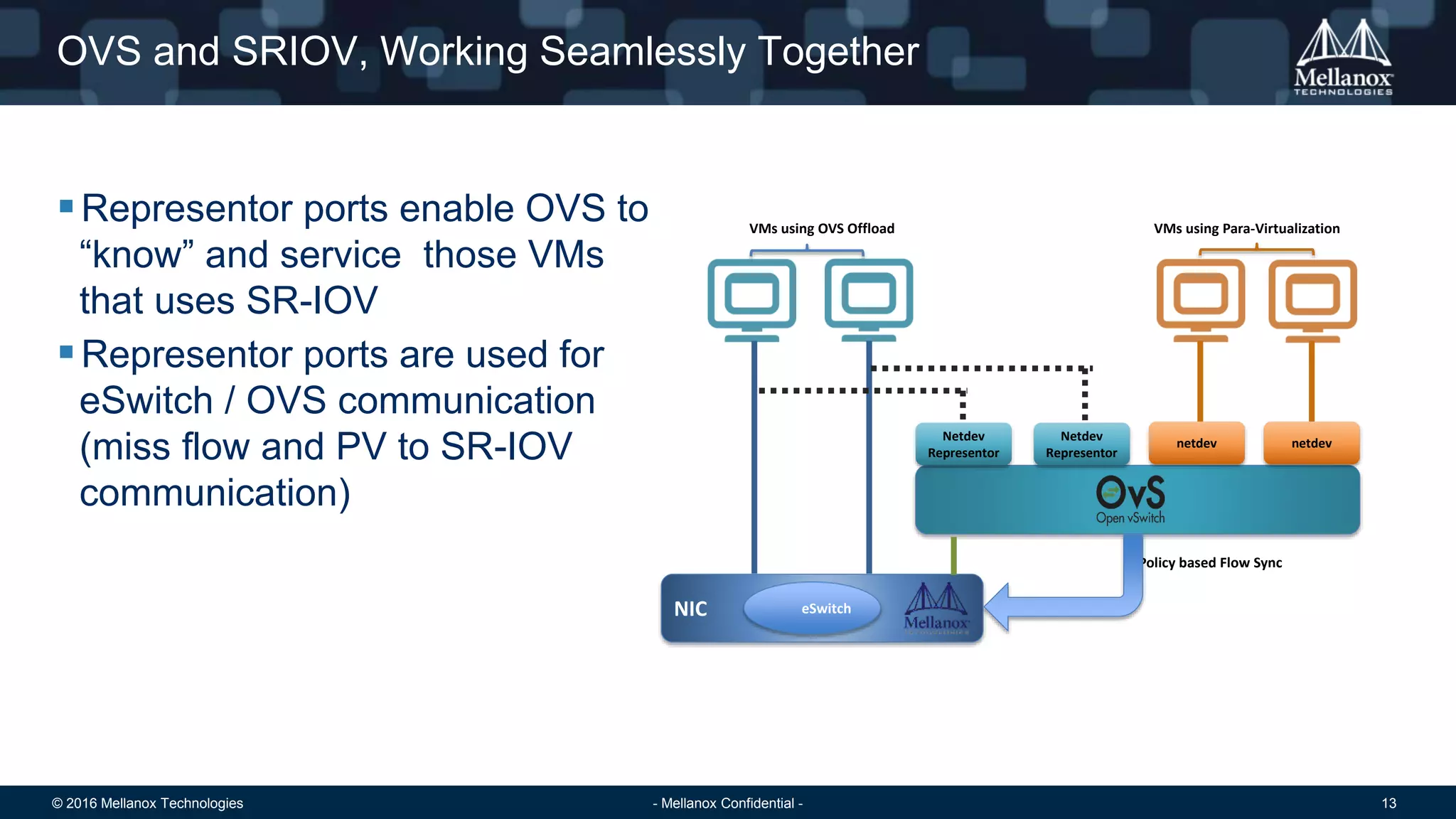

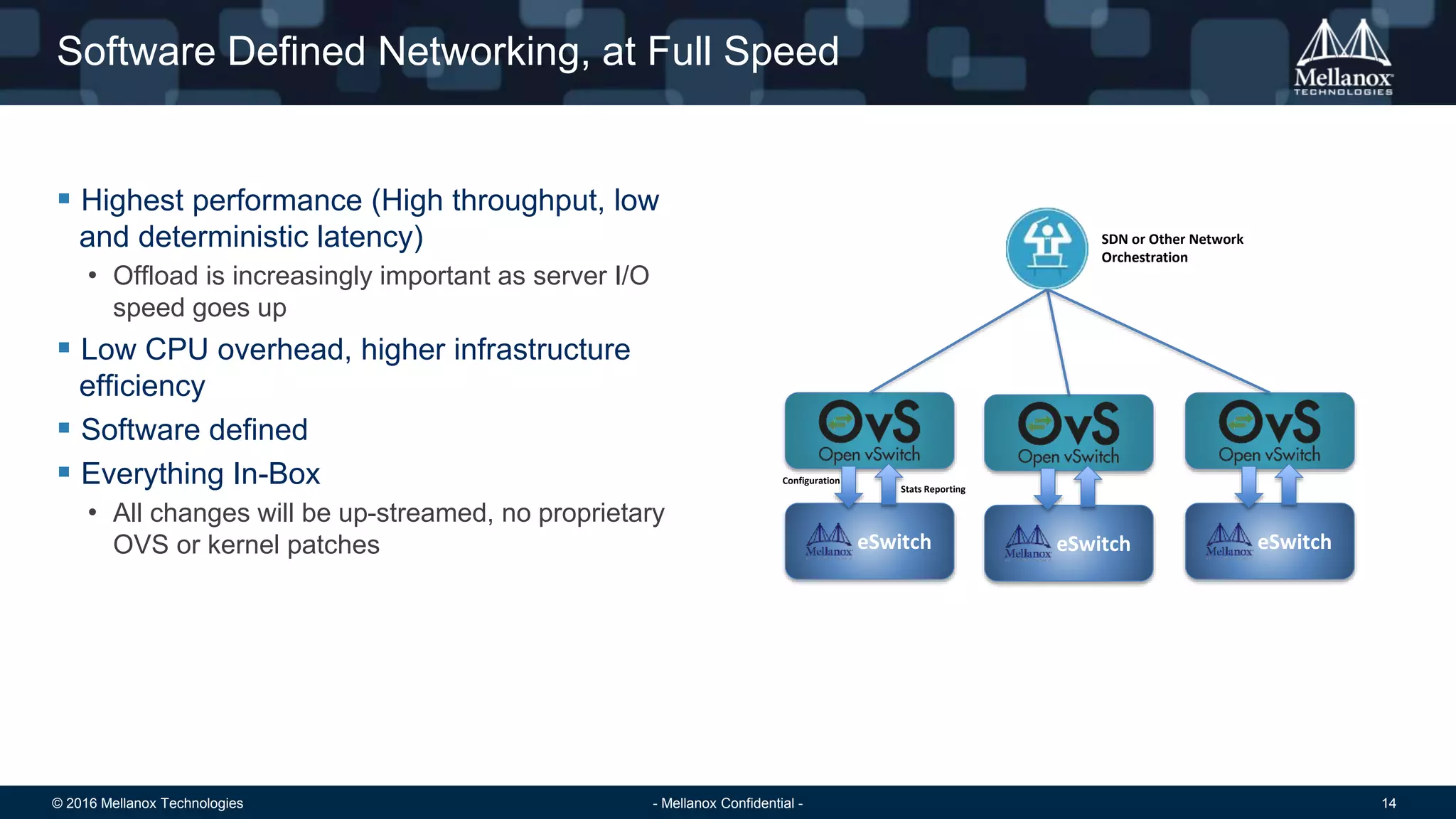

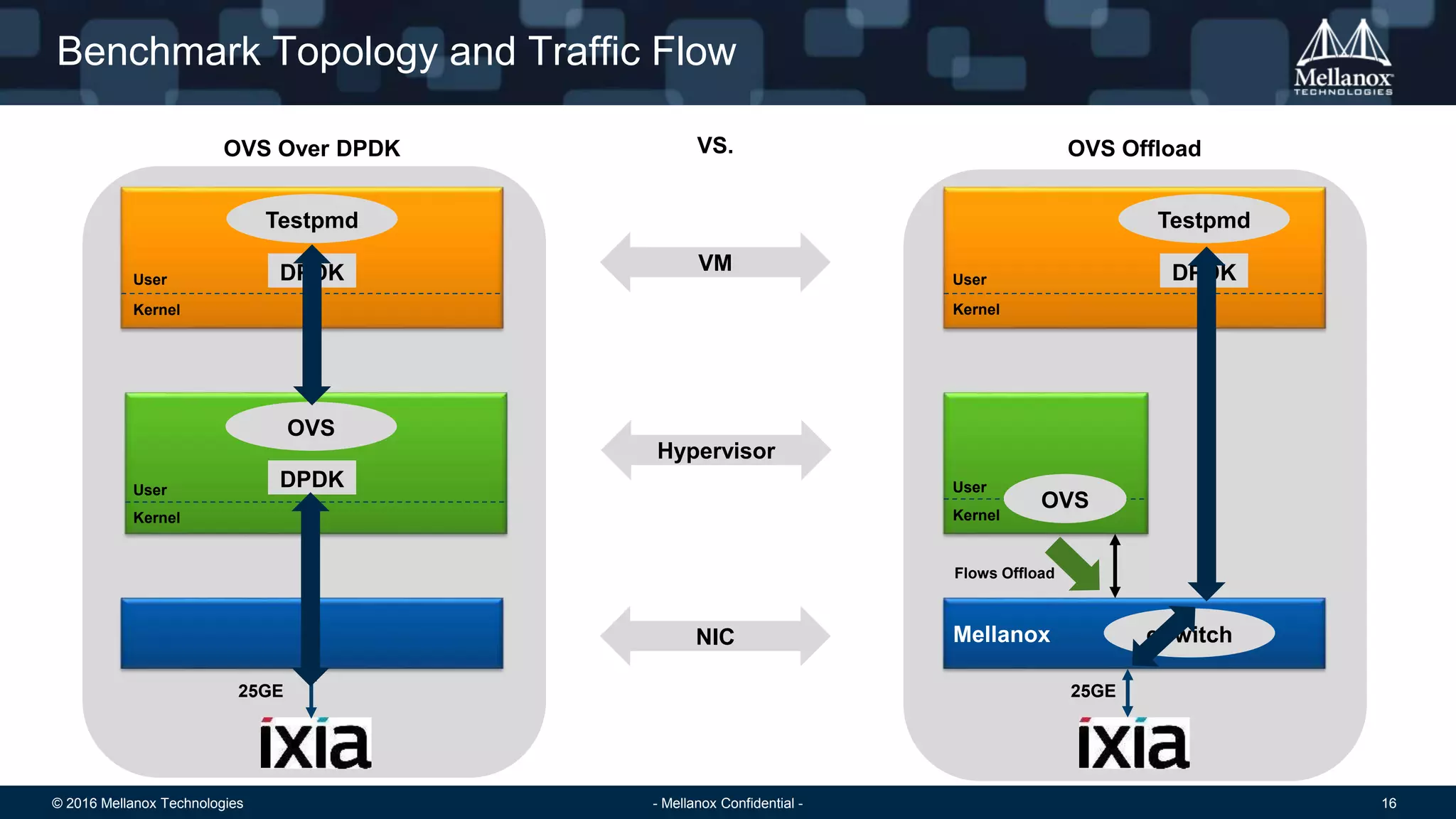

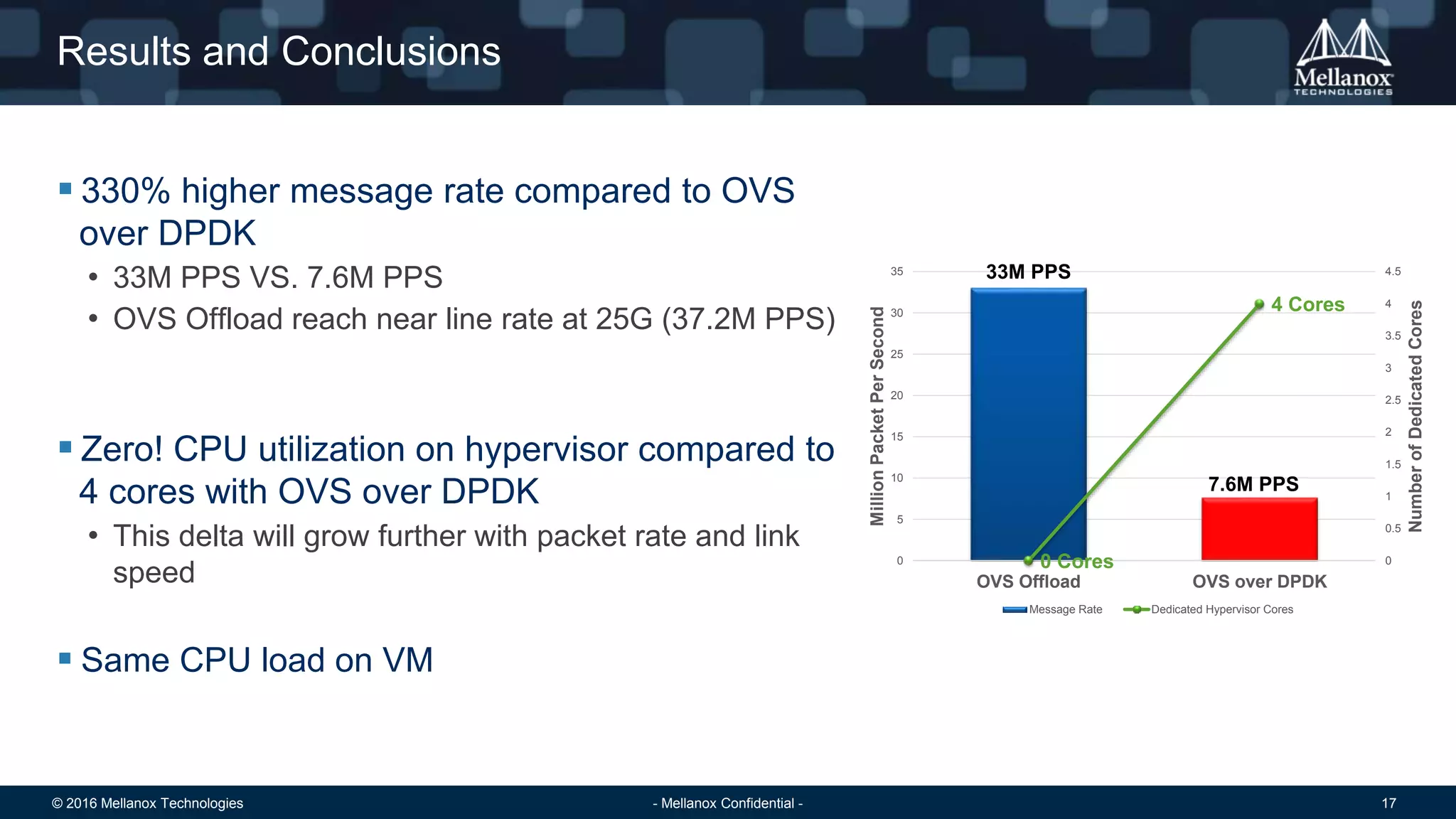

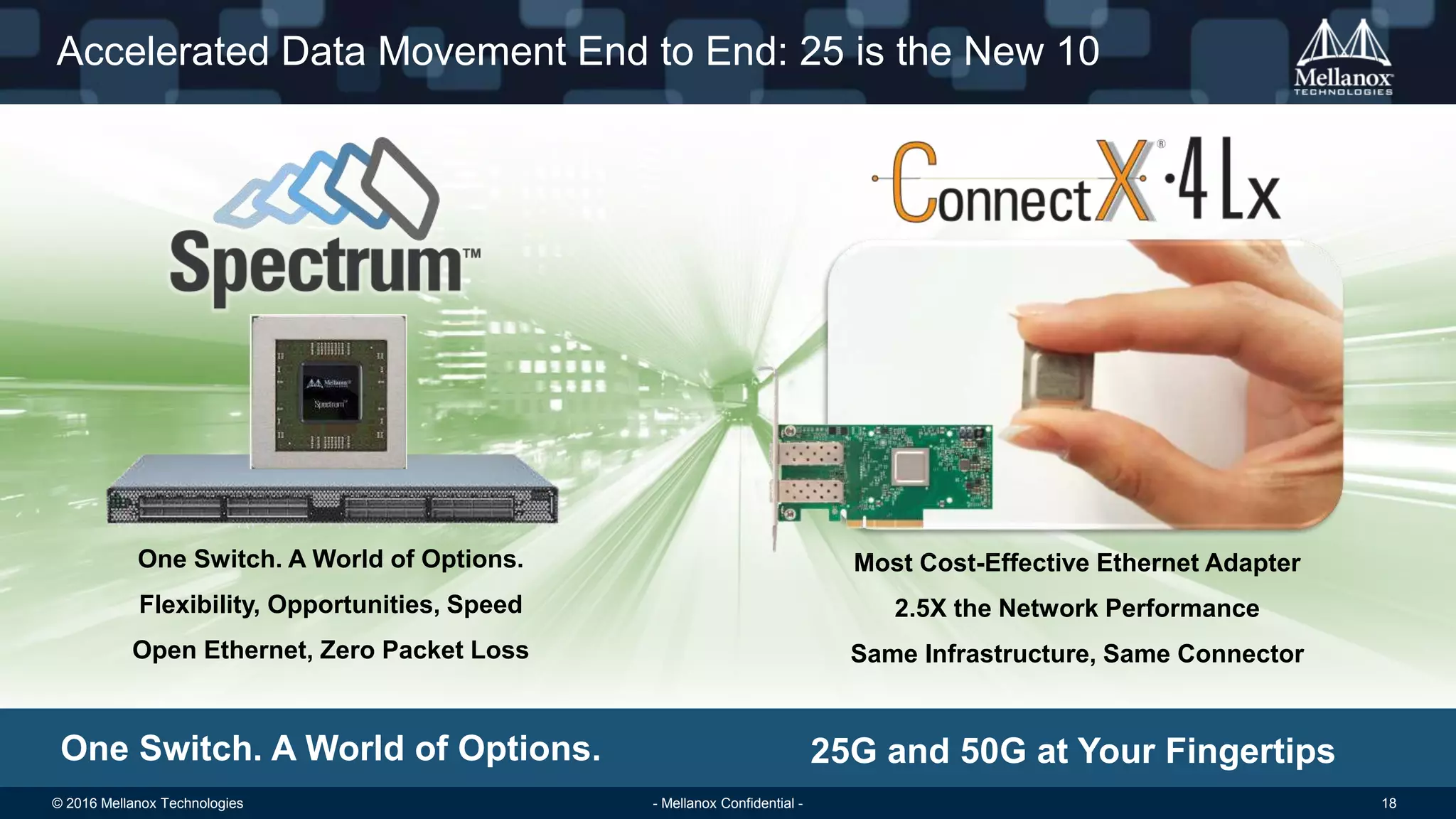

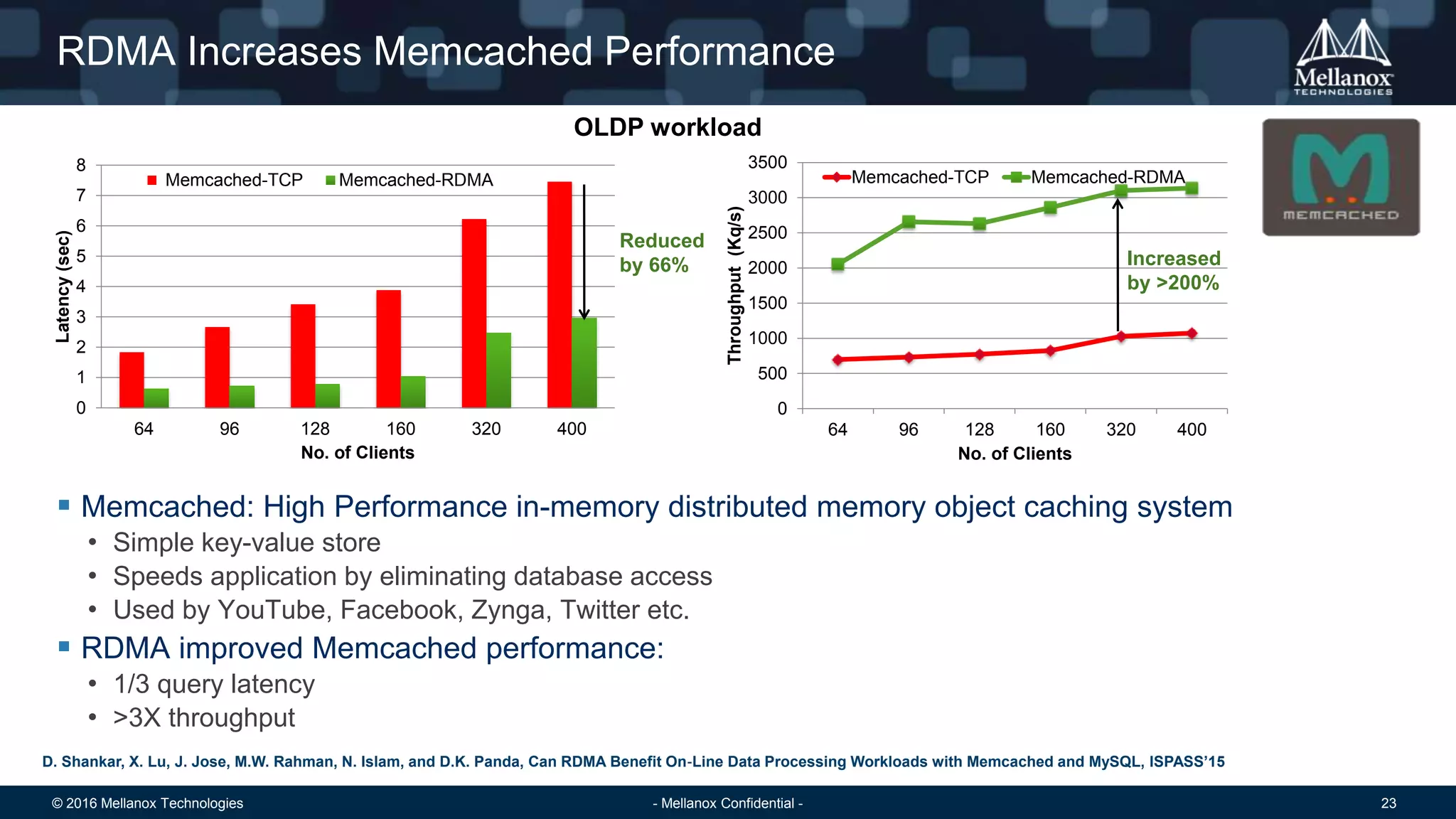

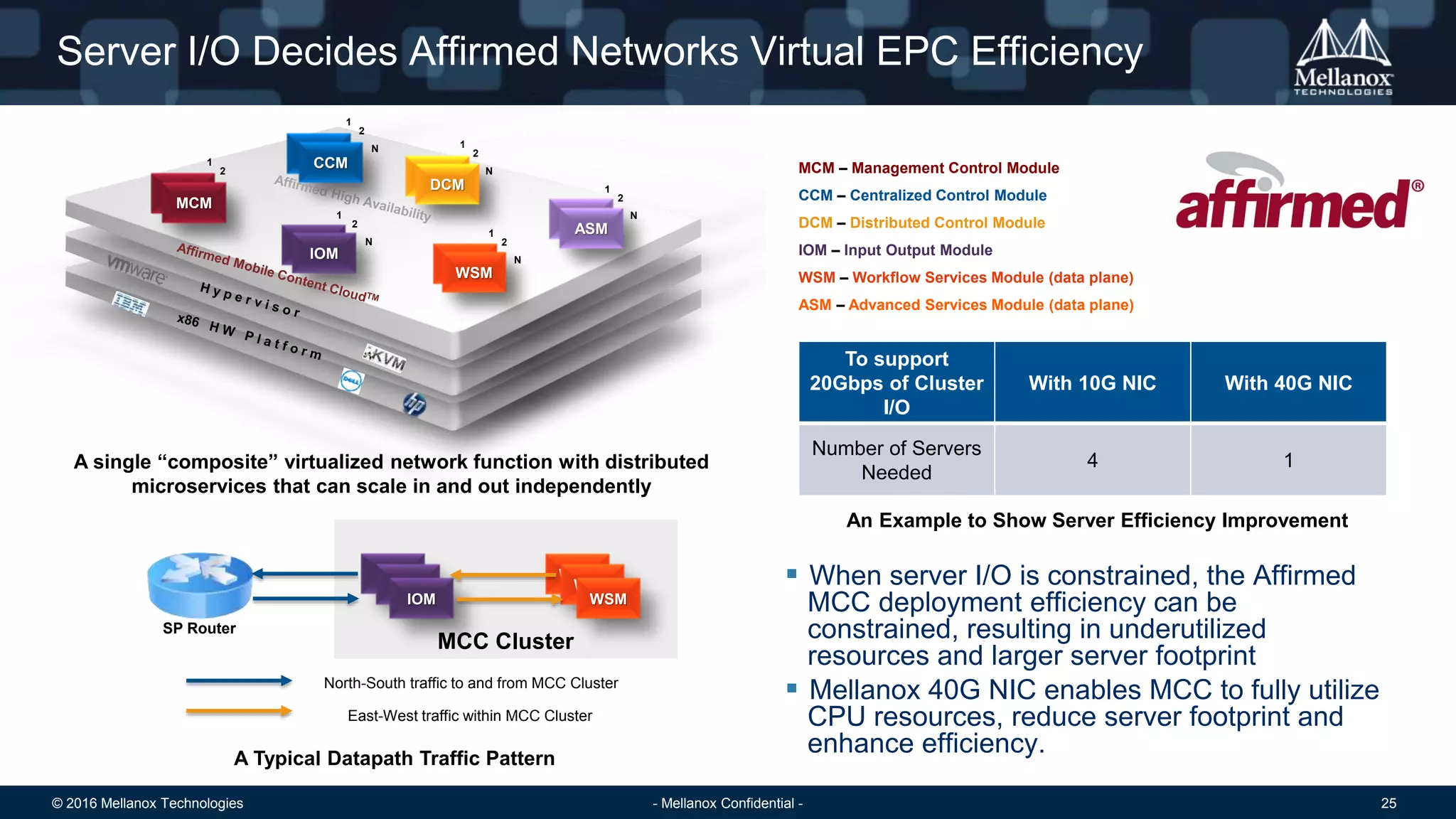

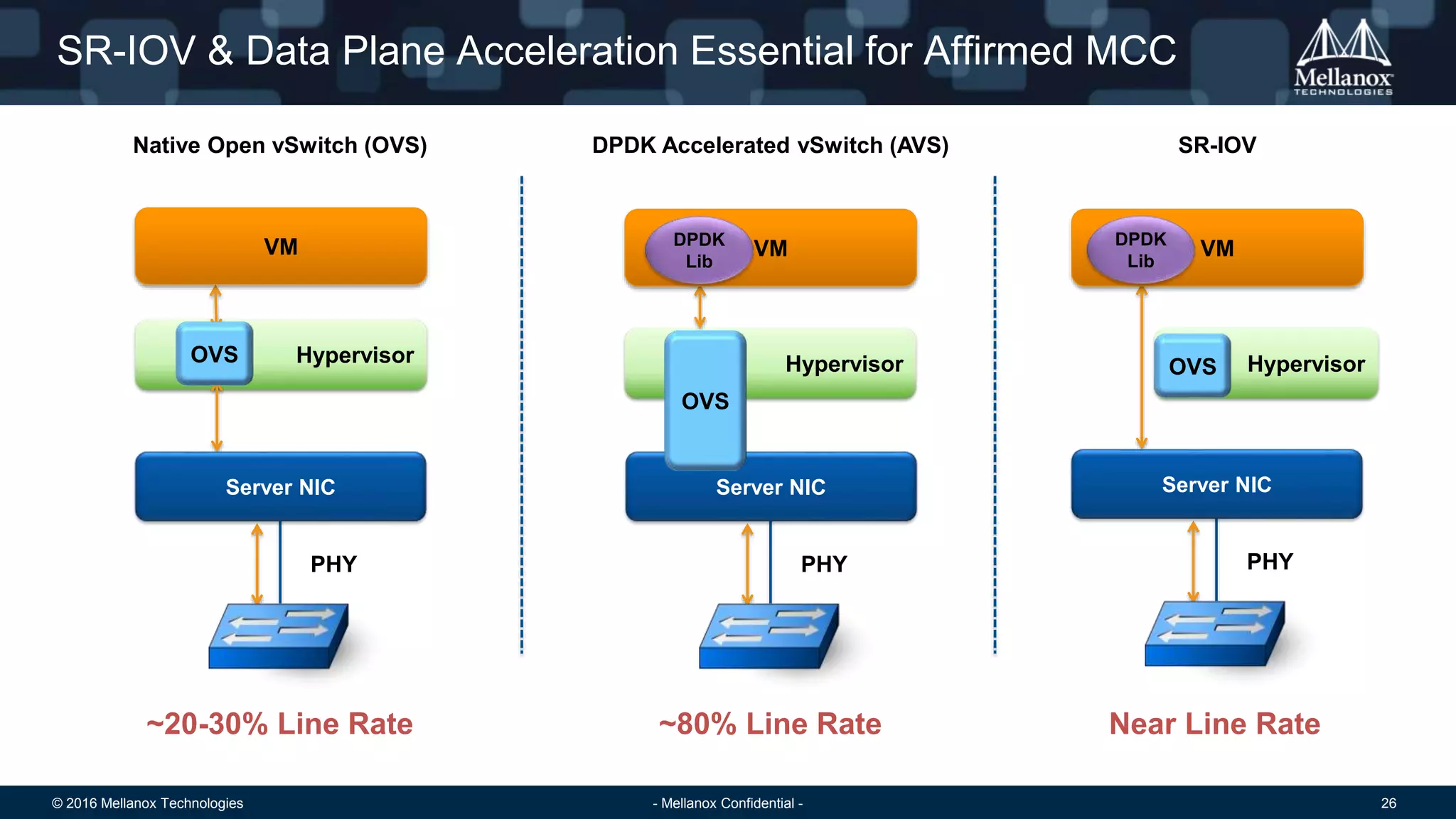

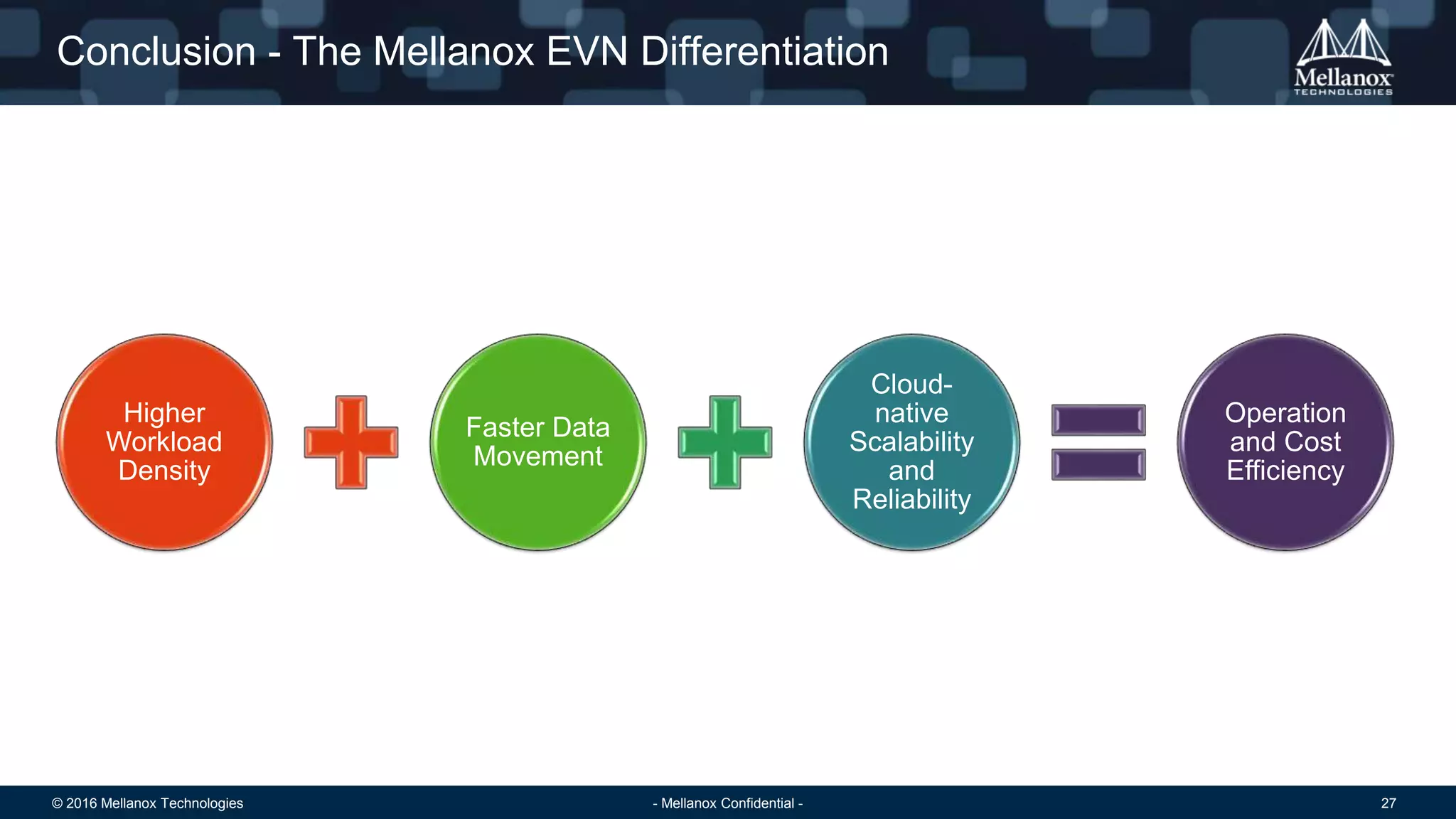

This document presents Mellanox's Efficient Virtual Network (EVN) solution for service providers. It opens with an overview of Mellanox's end-to-end interconnect solutions and product portfolio, explains why a cloud-native NFV architecture requires an efficient virtual network, and introduces EVN as the foundation for an efficient telco cloud infrastructure. It then details how SR-IOV and DPDK can be used together on Mellanox NICs to reach near line-rate performance without CPU overhead, and how overlay network traffic can be accelerated by the overlay acceleration engines built into the NIC. Benchmark results show EVN delivering higher performance at lower CPU utilization than the alternative solutions it was compared against.
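To put "near line-rate" in perspective, the sketch below computes the maximum packet rate an Ethernet link can carry for a given frame size, using standard Ethernet wire overhead (preamble, start-of-frame delimiter, inter-frame gap). The link speeds and frame sizes are illustrative assumptions, not the configurations used in the benchmarks, and the helper names `line_rate_pps` and `ns_per_packet` are hypothetical. The point it illustrates: at 100 GbE with minimum-size 64-byte frames, a new packet arrives roughly every 6.7 ns, about 20 cycles on a hypothetical 3 GHz core, which is why keeping packet processing off the host CPU matters.

```python
# Per-frame Ethernet overhead on the wire, in bytes:
# 7-byte preamble + 1-byte start-of-frame delimiter + 12-byte inter-frame gap.
WIRE_OVERHEAD_BYTES = 7 + 1 + 12


def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Maximum frames per second a link can carry at the given frame size.

    frame_bytes is the Ethernet frame size including the 4-byte FCS
    (64 bytes is the minimum legal frame). Illustrative helper only.
    """
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


def ns_per_packet(link_gbps: float, frame_bytes: int) -> float:
    """Time budget per packet, in nanoseconds, when the link runs at line rate."""
    return 1e9 / line_rate_pps(link_gbps, frame_bytes)


if __name__ == "__main__":
    # Example link speeds (assumed for illustration, not taken from the benchmarks).
    for gbps in (10, 25, 40, 100):
        for size in (64, 1518):
            pps = line_rate_pps(gbps, size)
            print(
                f"{gbps:>3} GbE, {size:>4}-byte frames: "
                f"{pps / 1e6:8.2f} Mpps, {ns_per_packet(gbps, size):8.2f} ns/packet"
            )
```

For example, `line_rate_pps(10, 64)` gives about 14.88 million packets per second, and `line_rate_pps(100, 64)` about 148.81 million. Small frames are the hard case because the per-packet work stays roughly constant while the time budget per packet shrinks.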