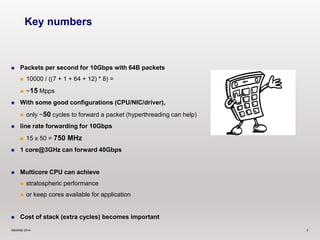

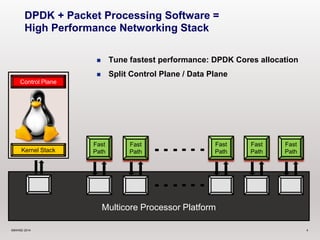

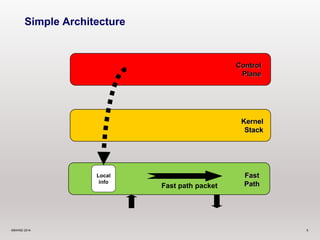

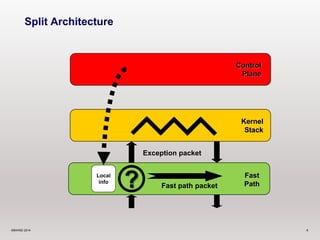

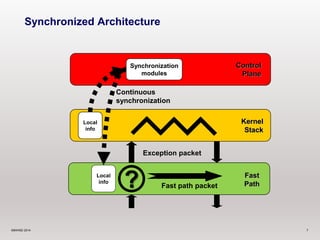

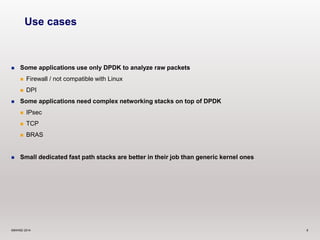

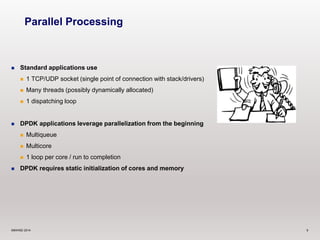

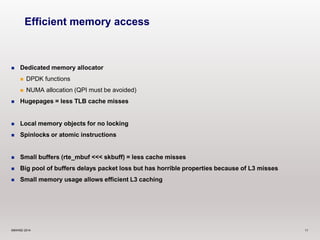

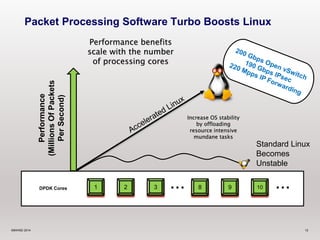

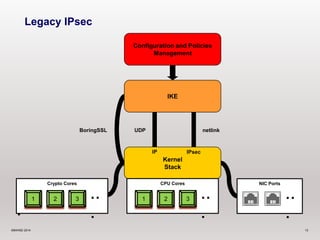

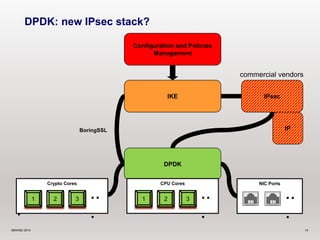

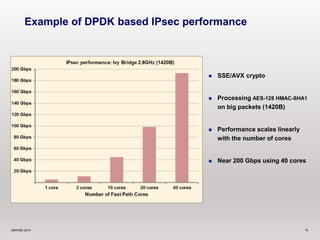

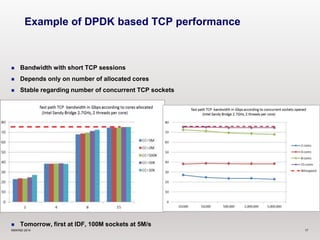

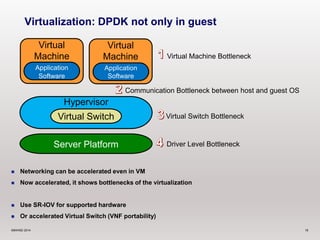

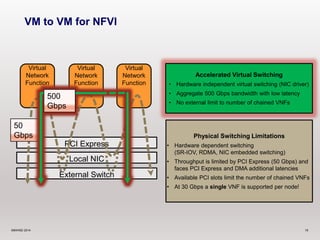

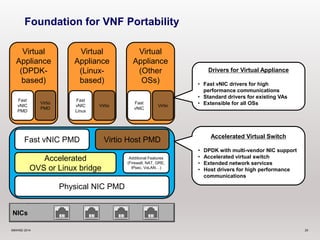

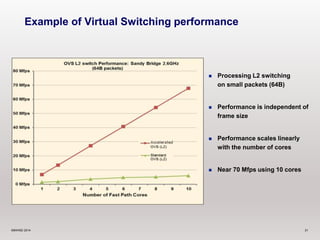

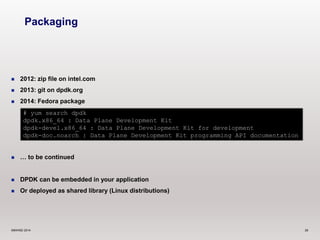

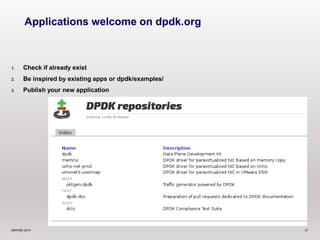

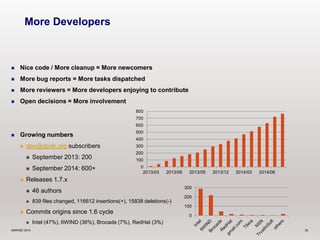

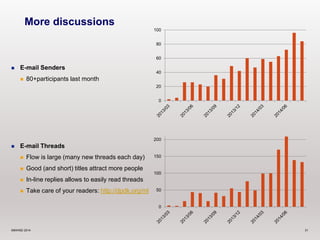

The document outlines the Data Plane Development Kit (DPDK) and its role in high-performance networking through efficient packet processing. It covers architectural designs, use cases, and benefits of DPDK in applications such as IPsec and TCP termination, with emphasis on parallel processing and memory efficiency. It also addresses the growth of the DPDK ecosystem, the challenges it faces, and community engagement in its further development.