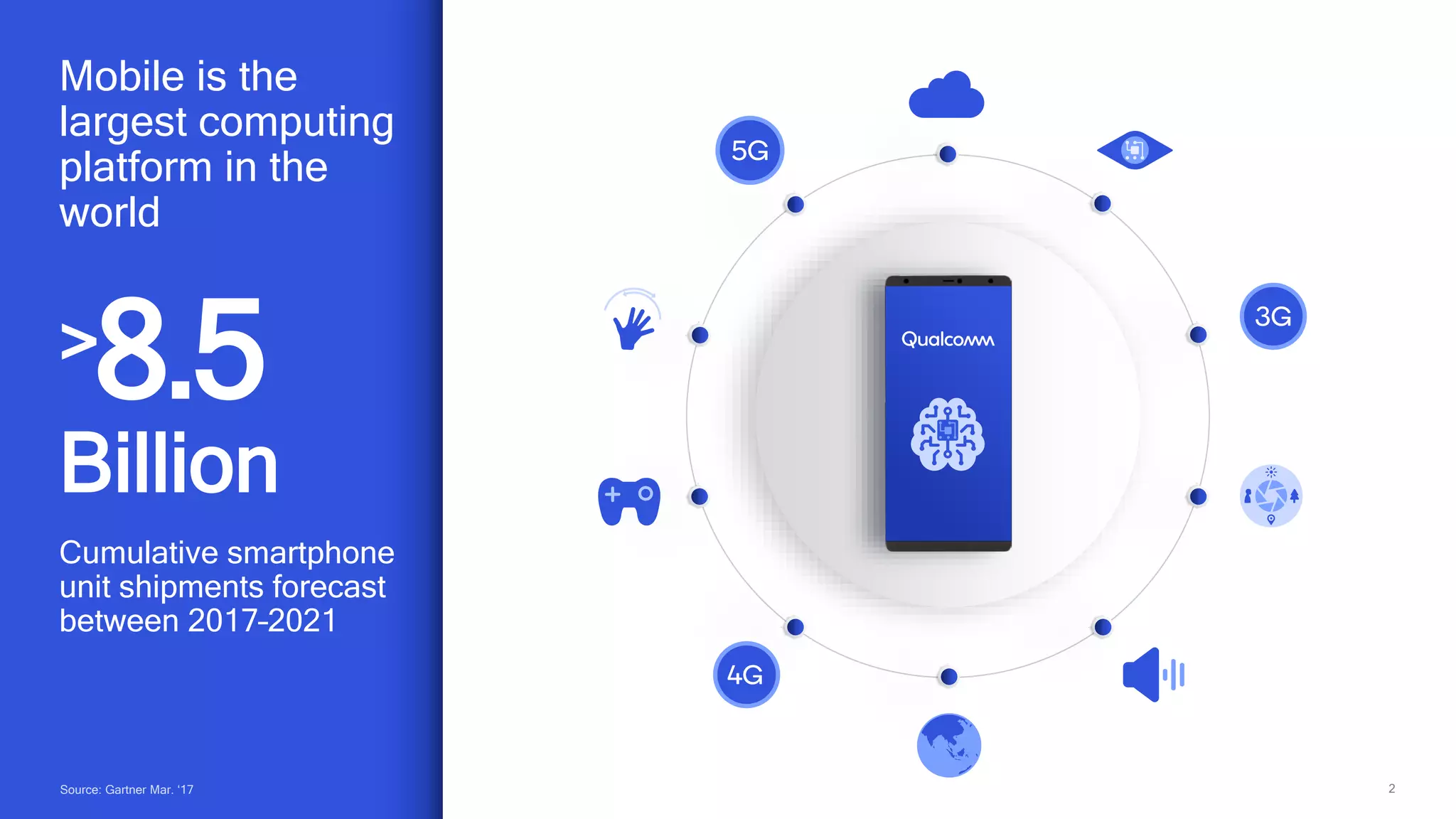

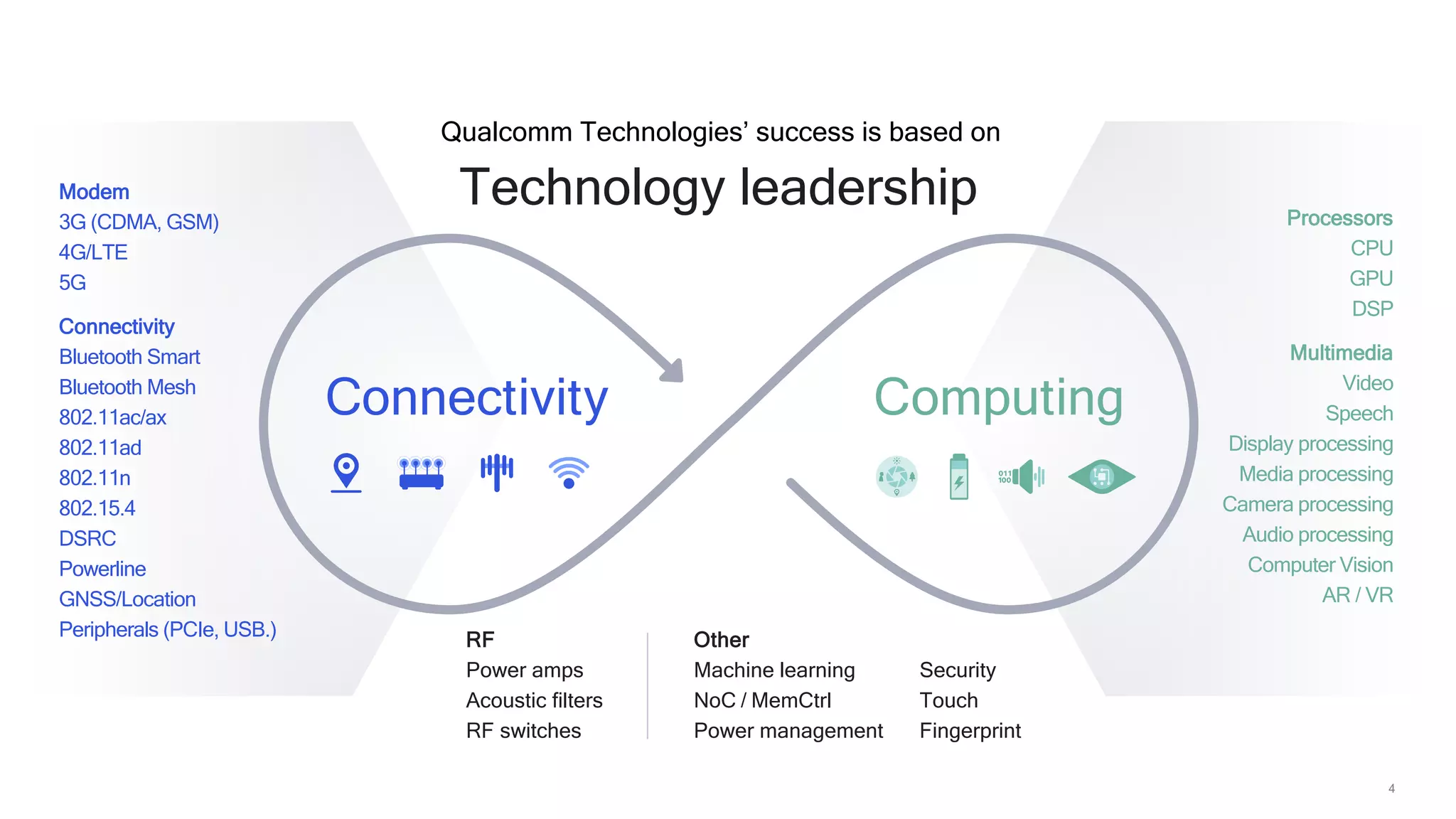

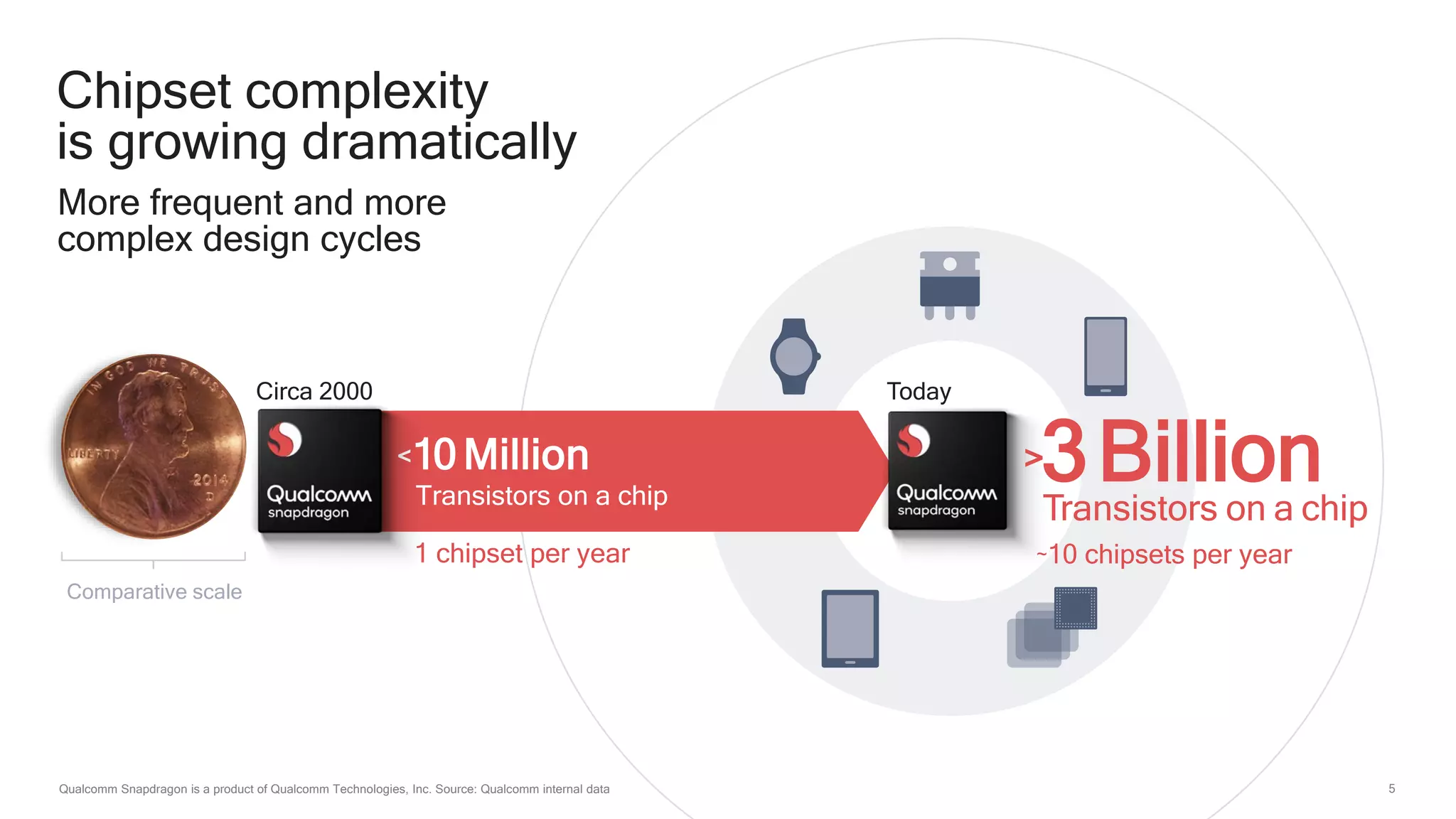

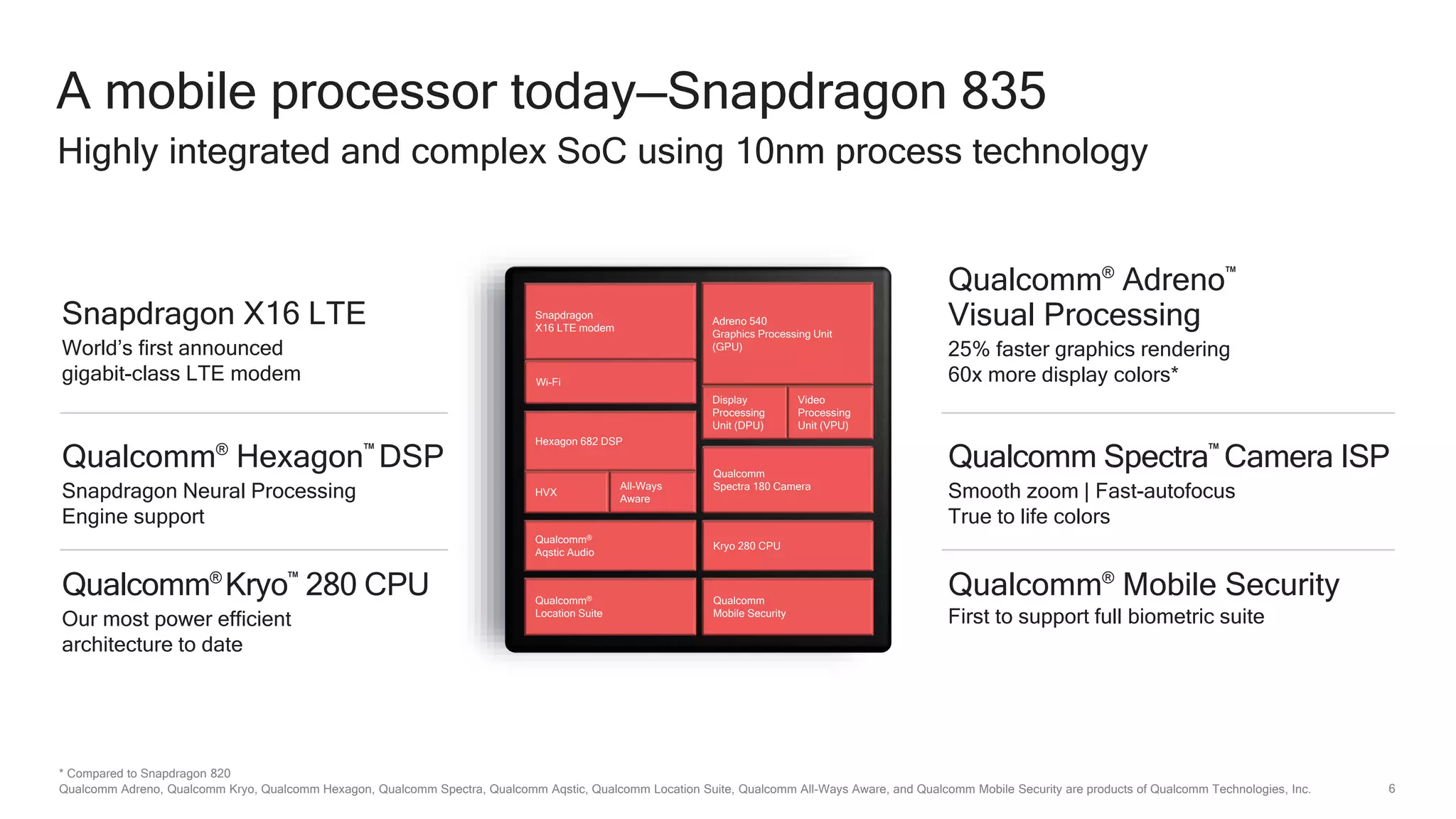

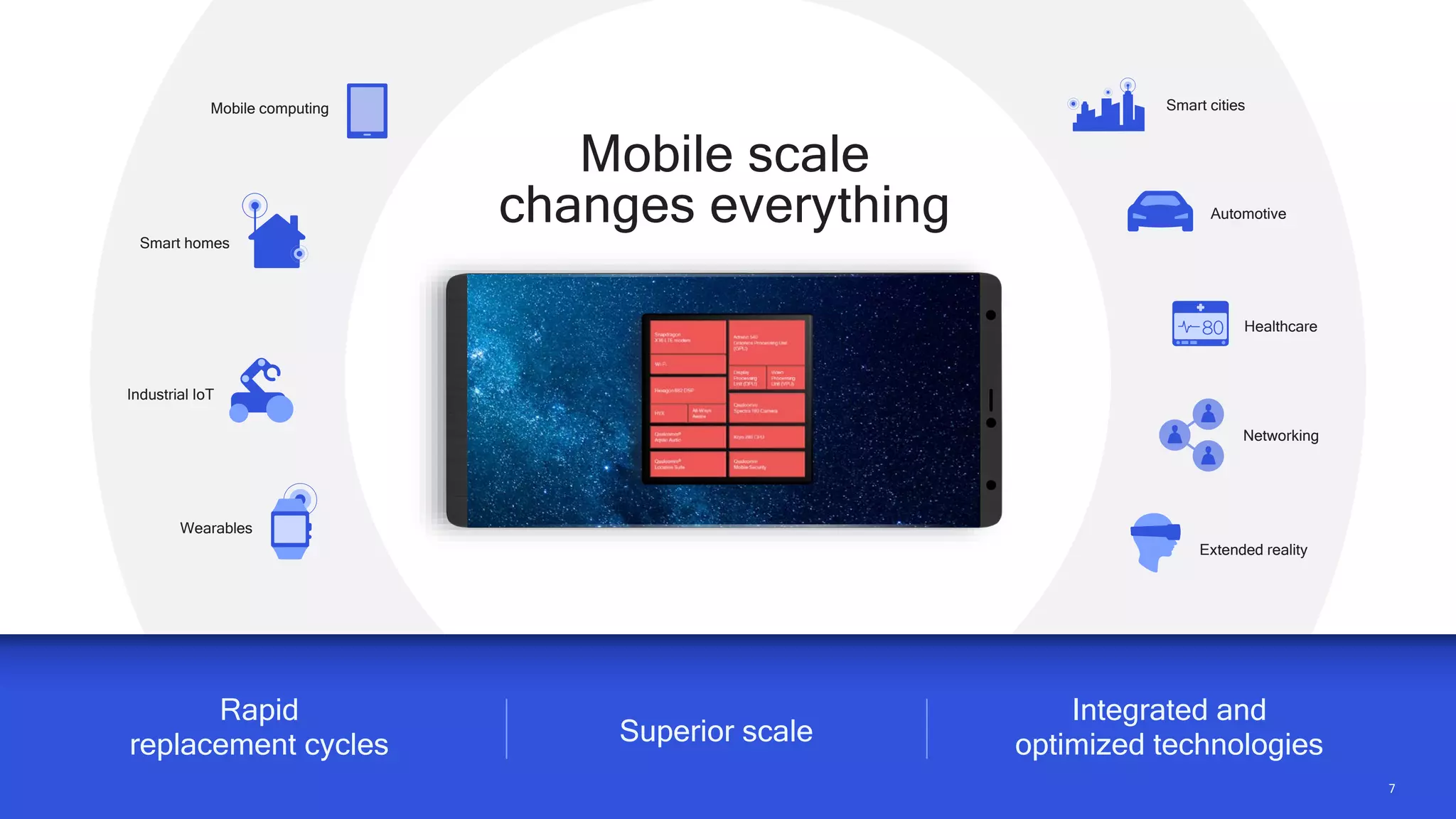

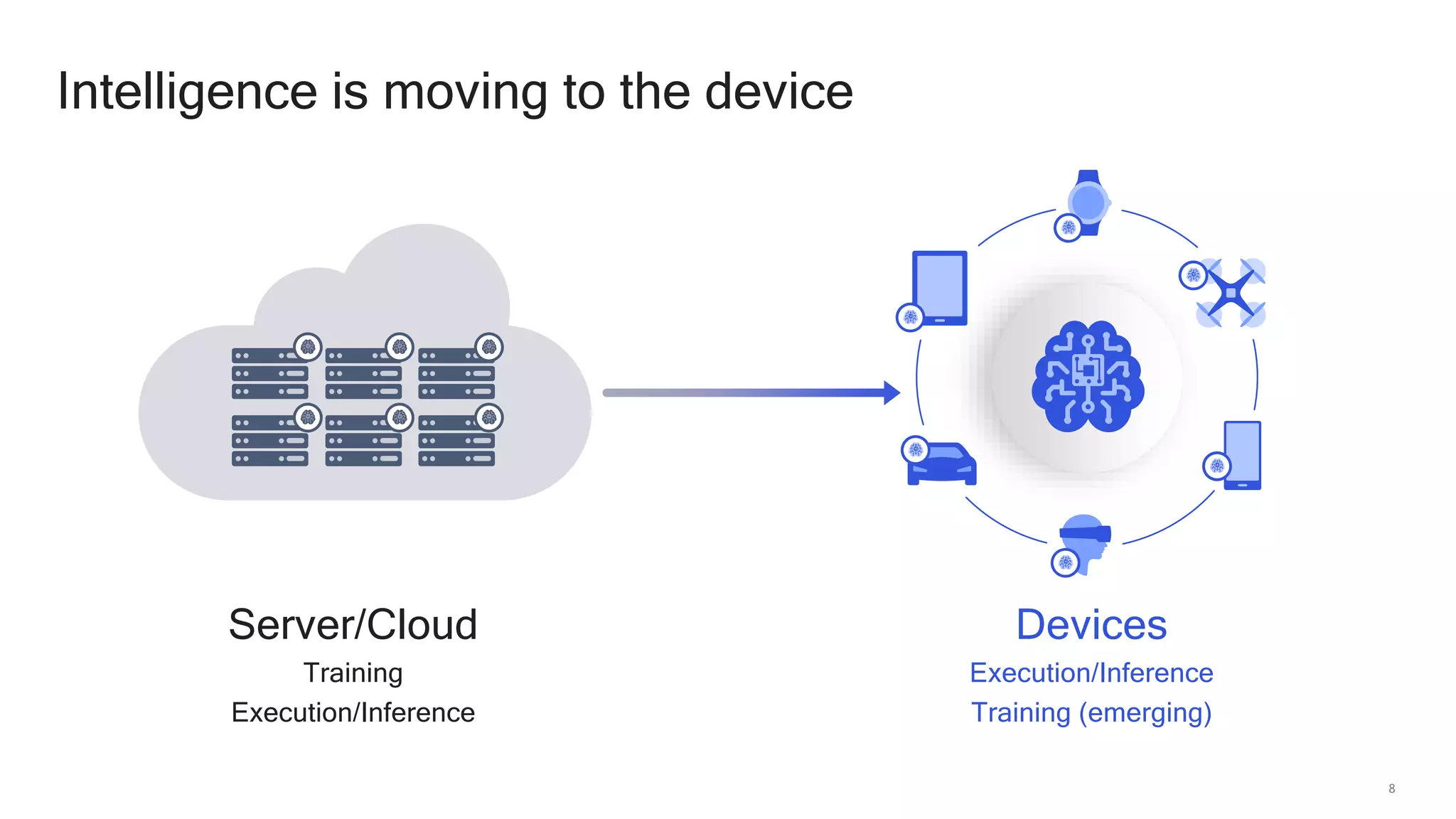

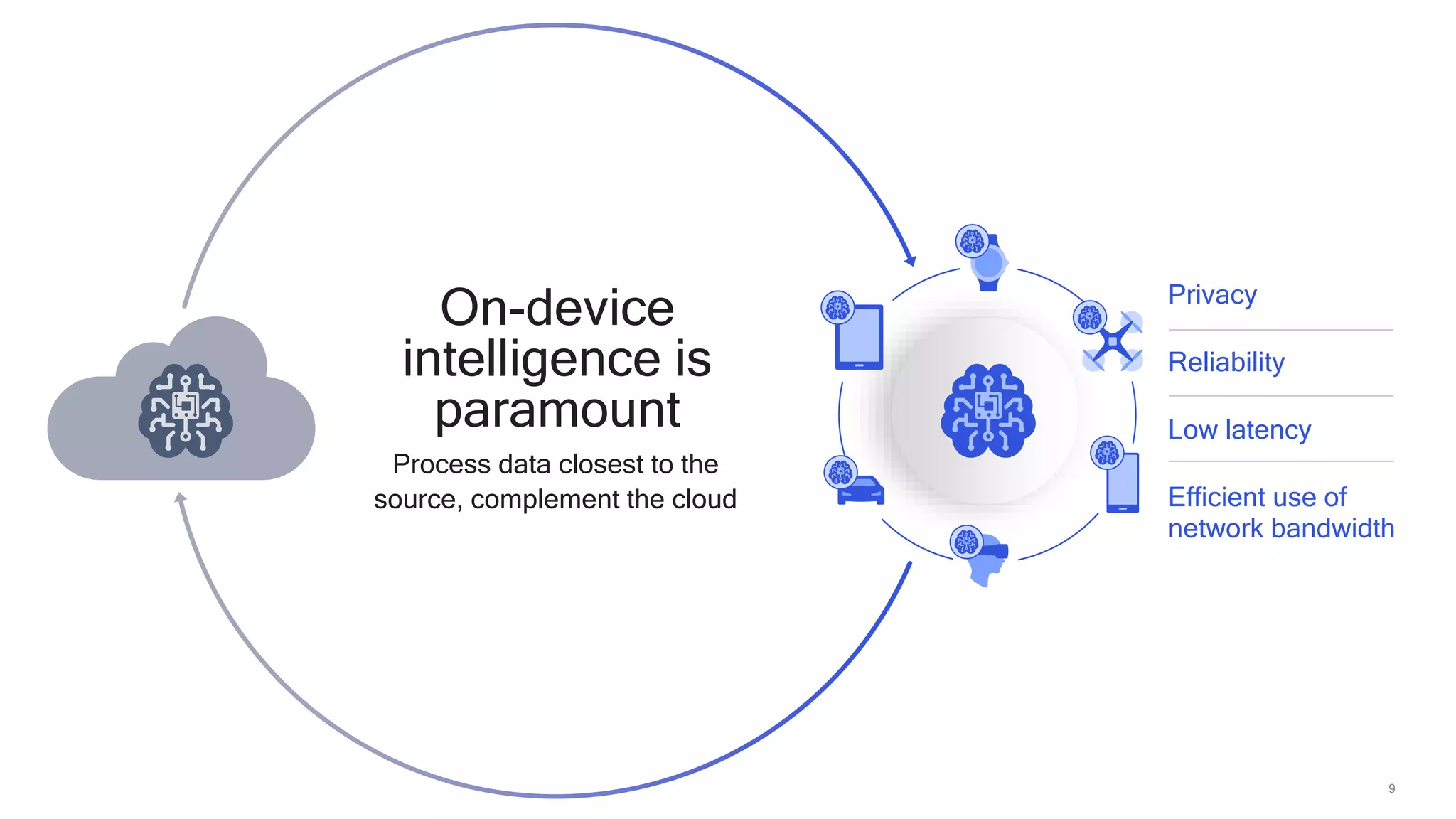

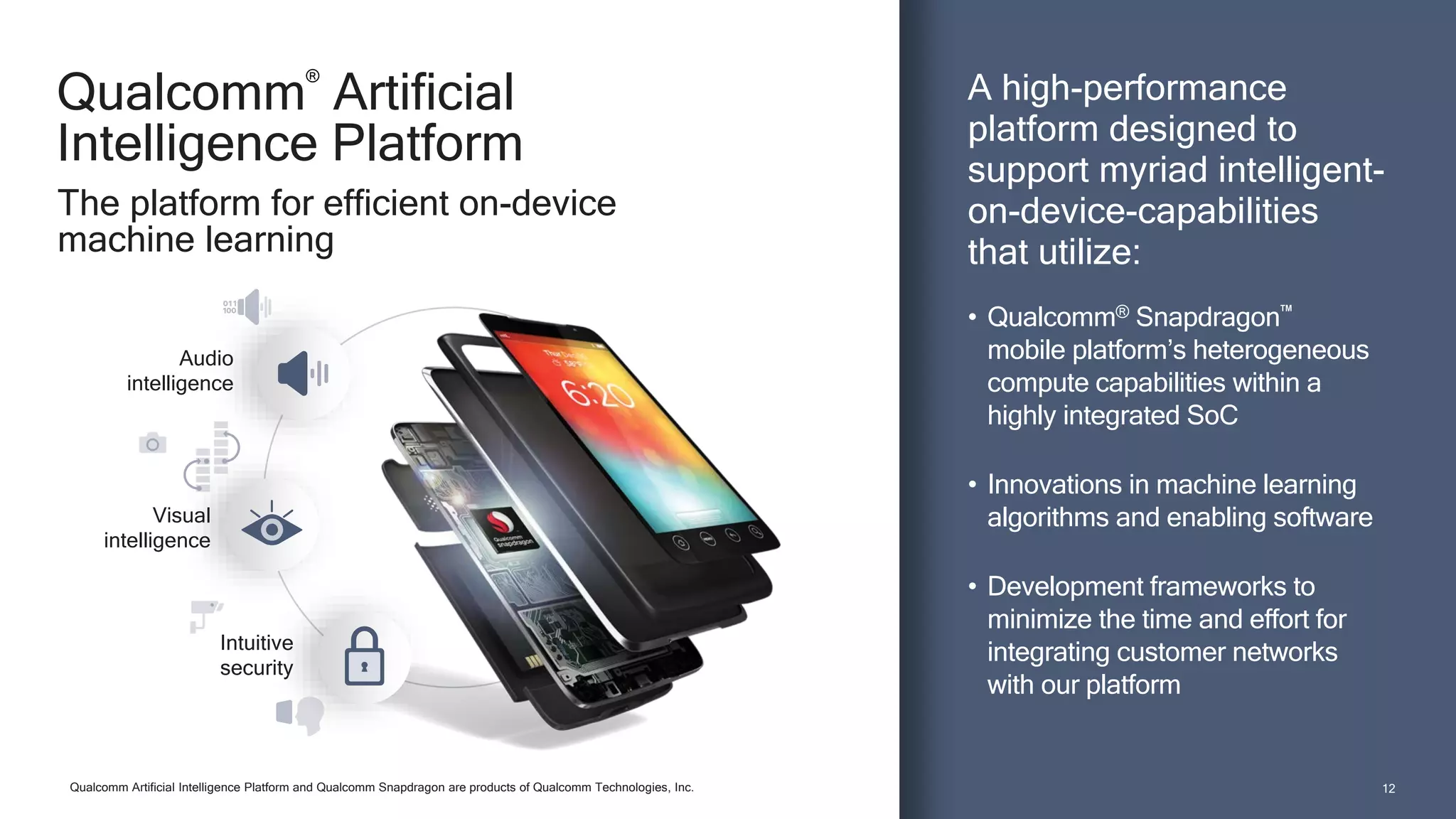

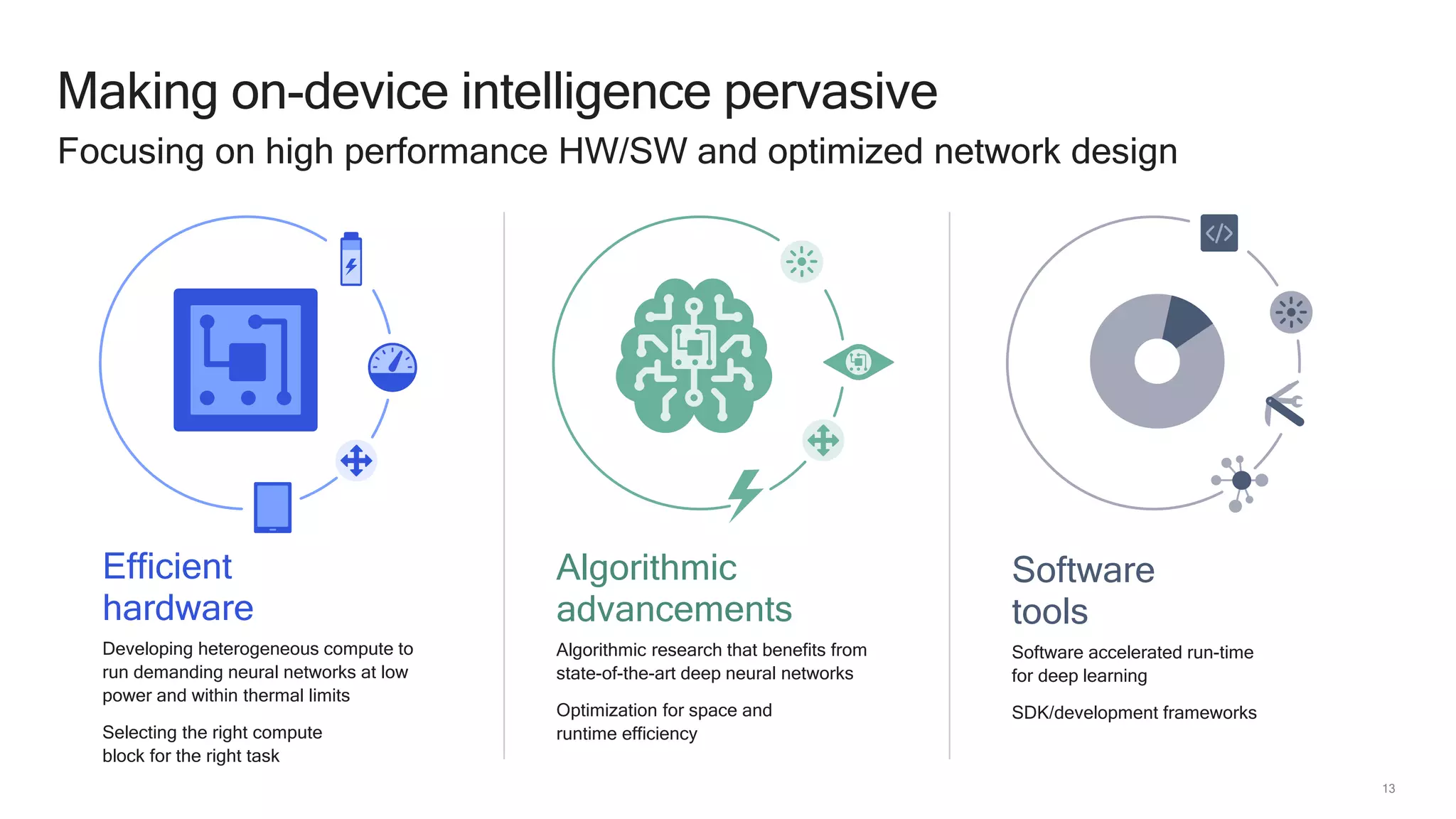

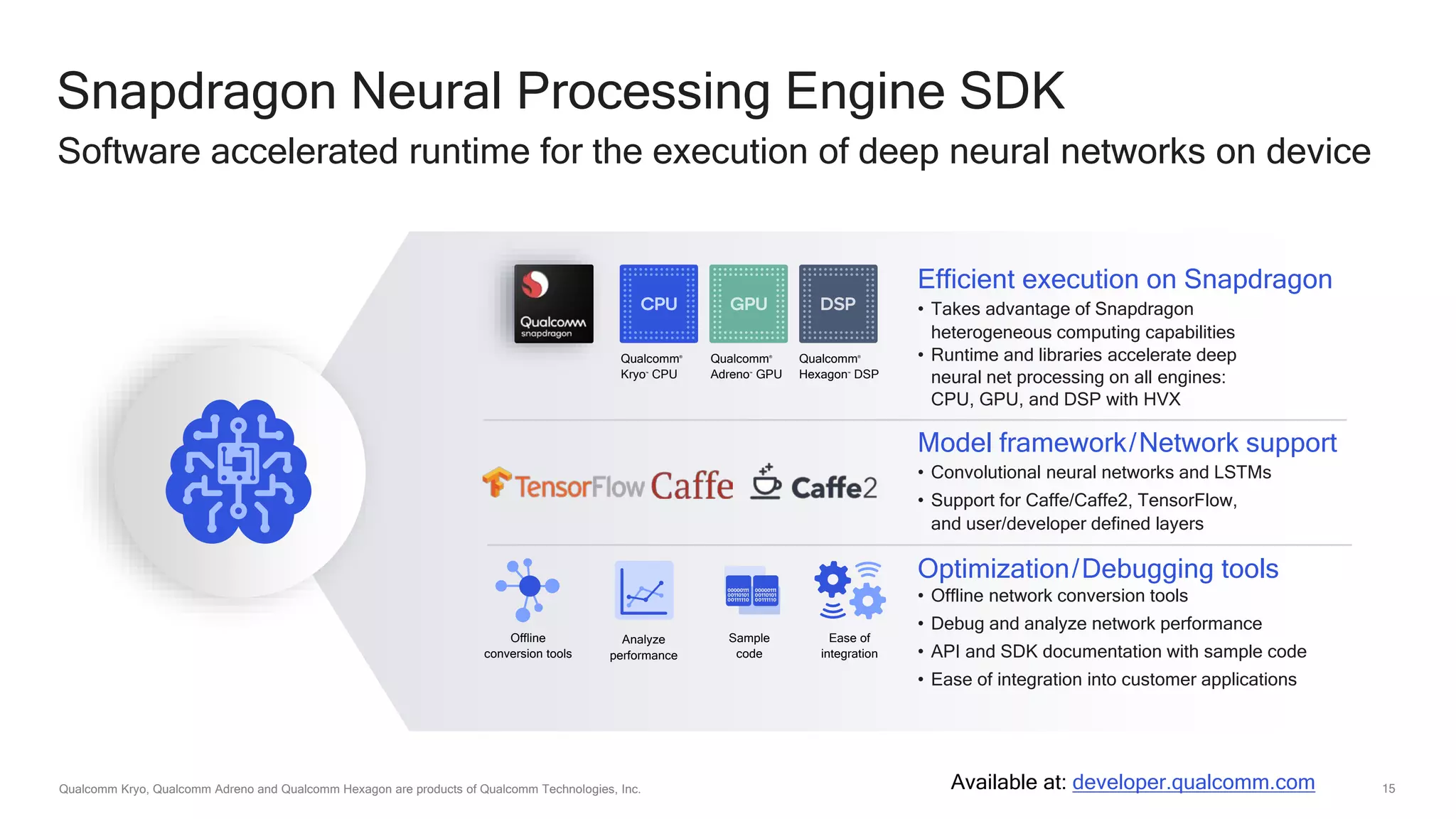

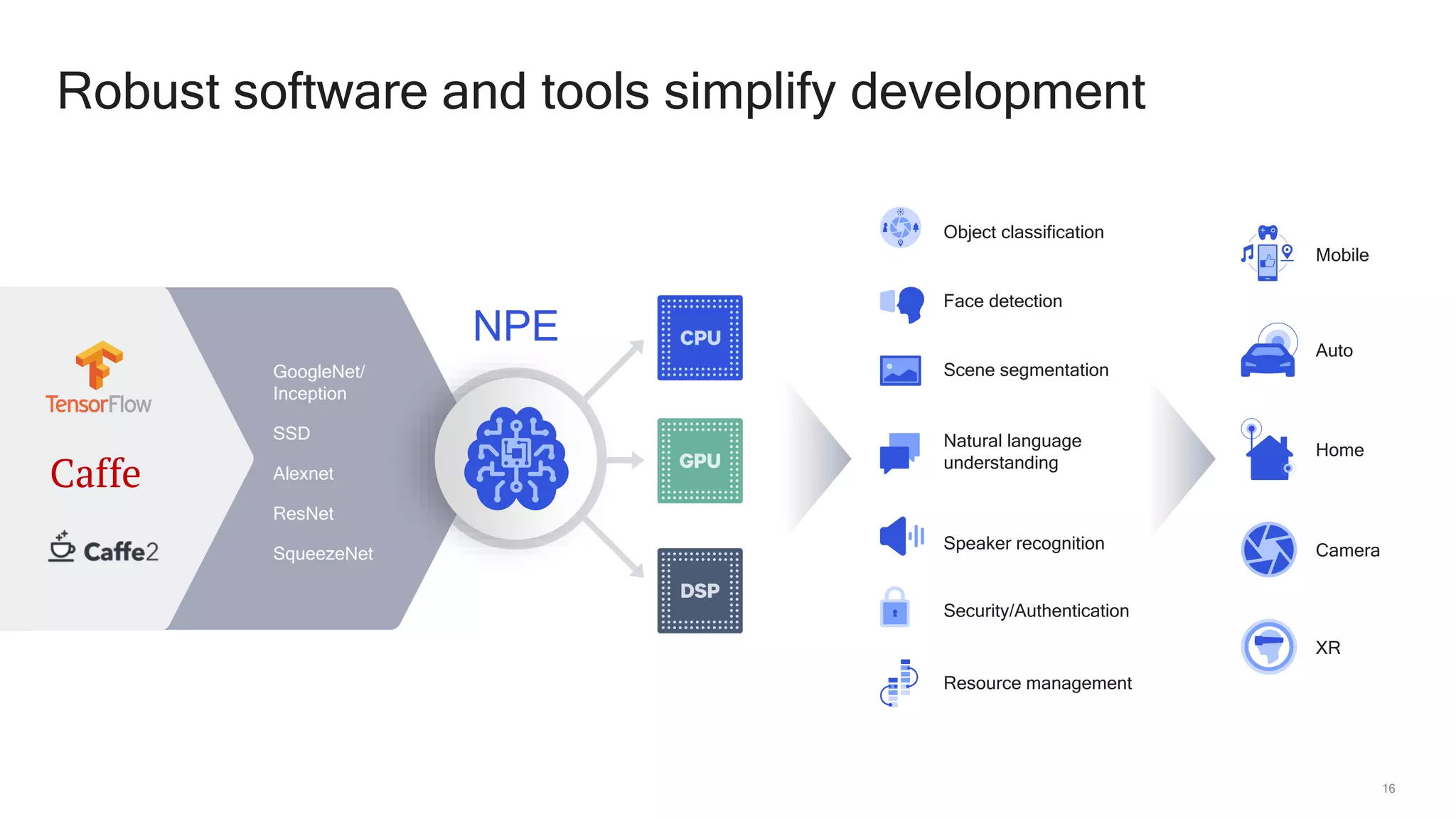

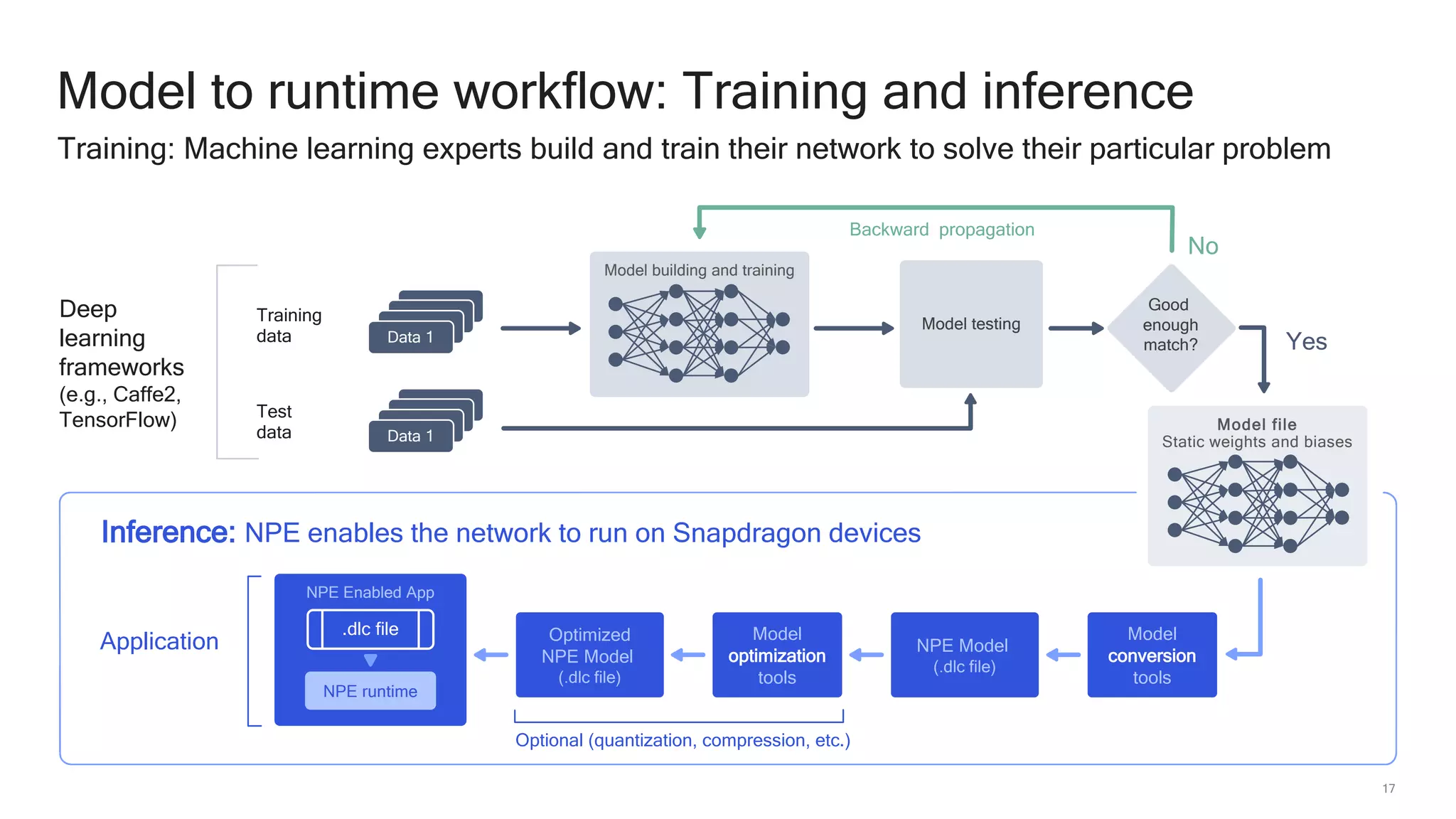

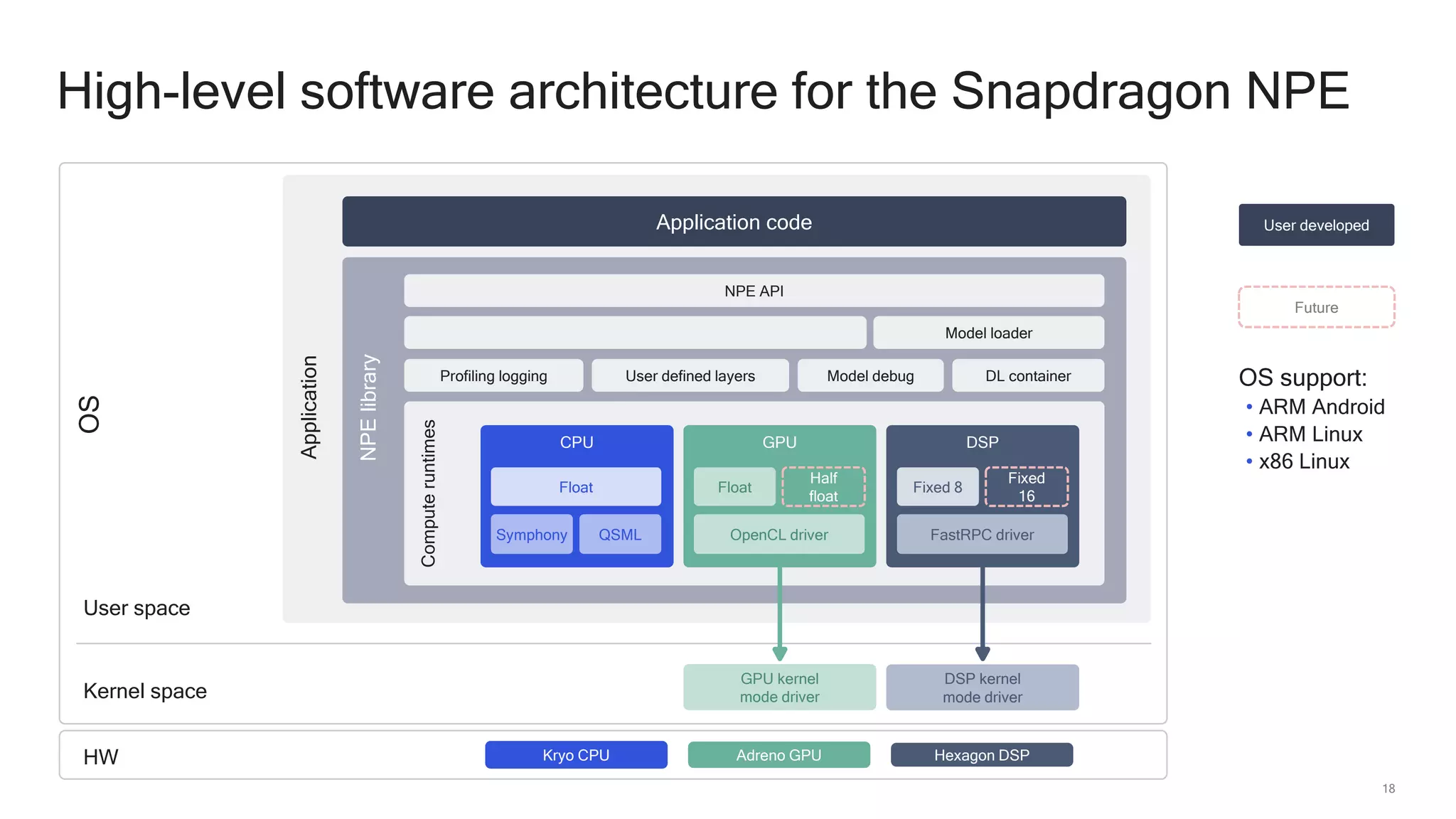

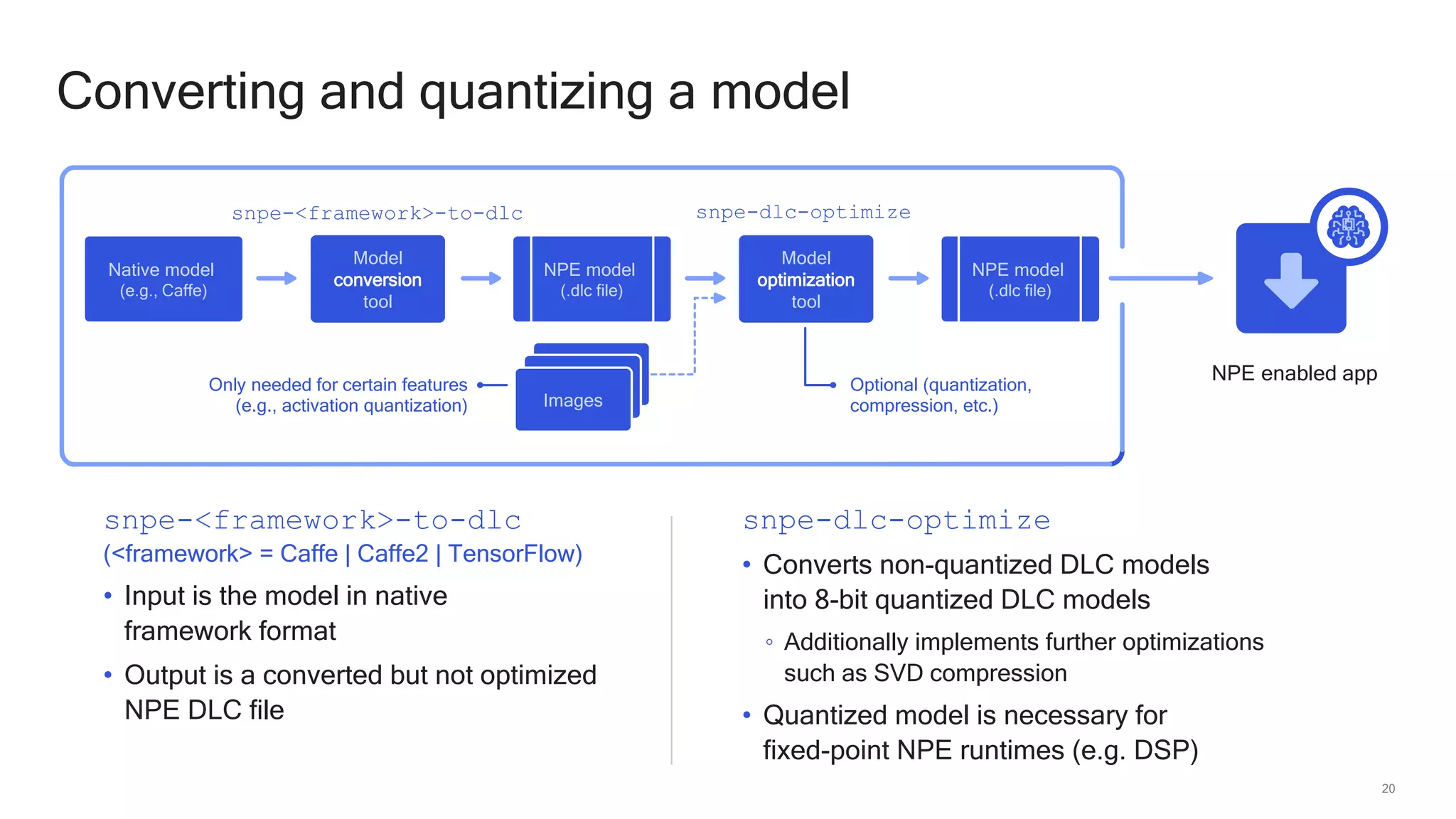

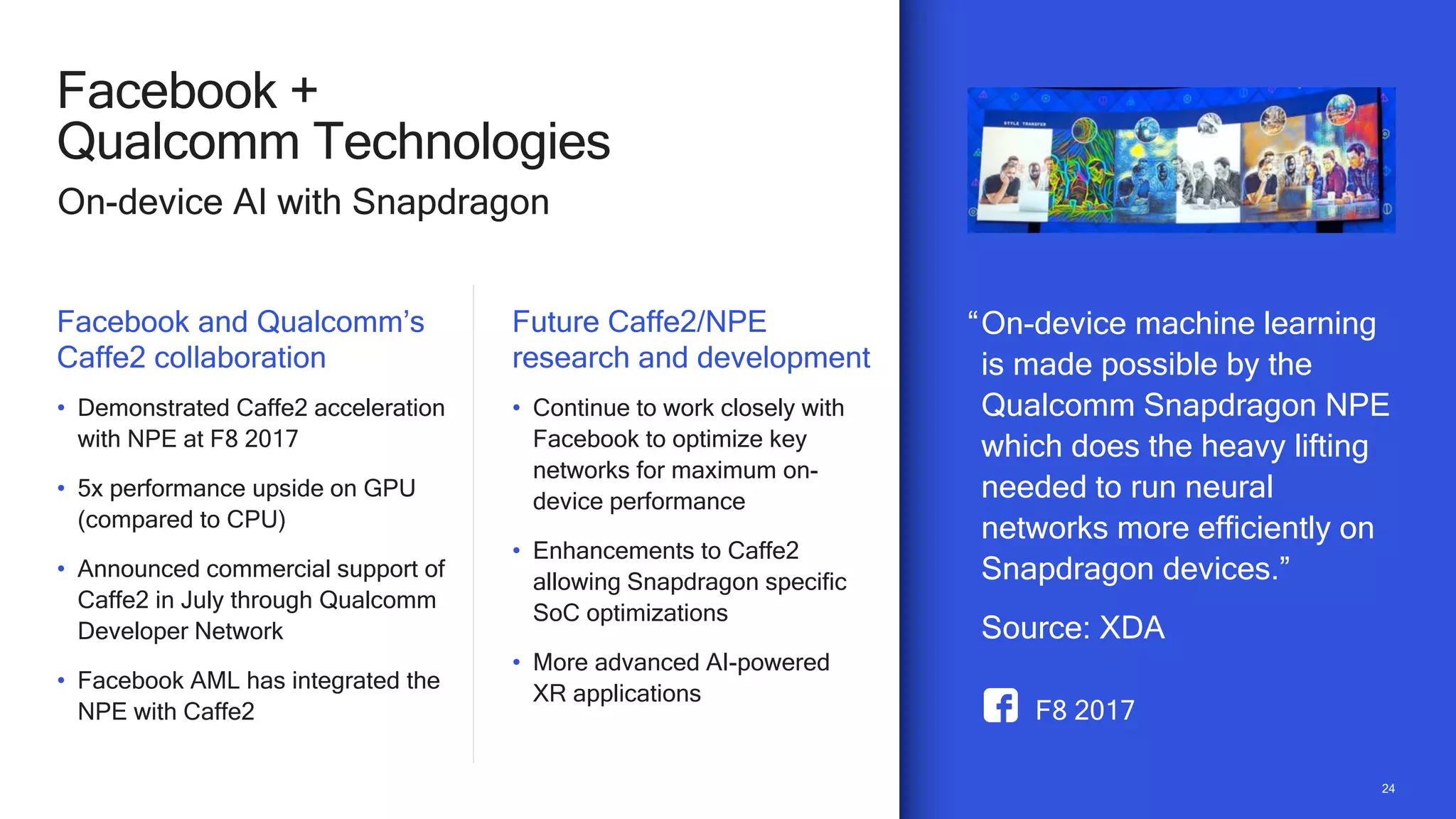

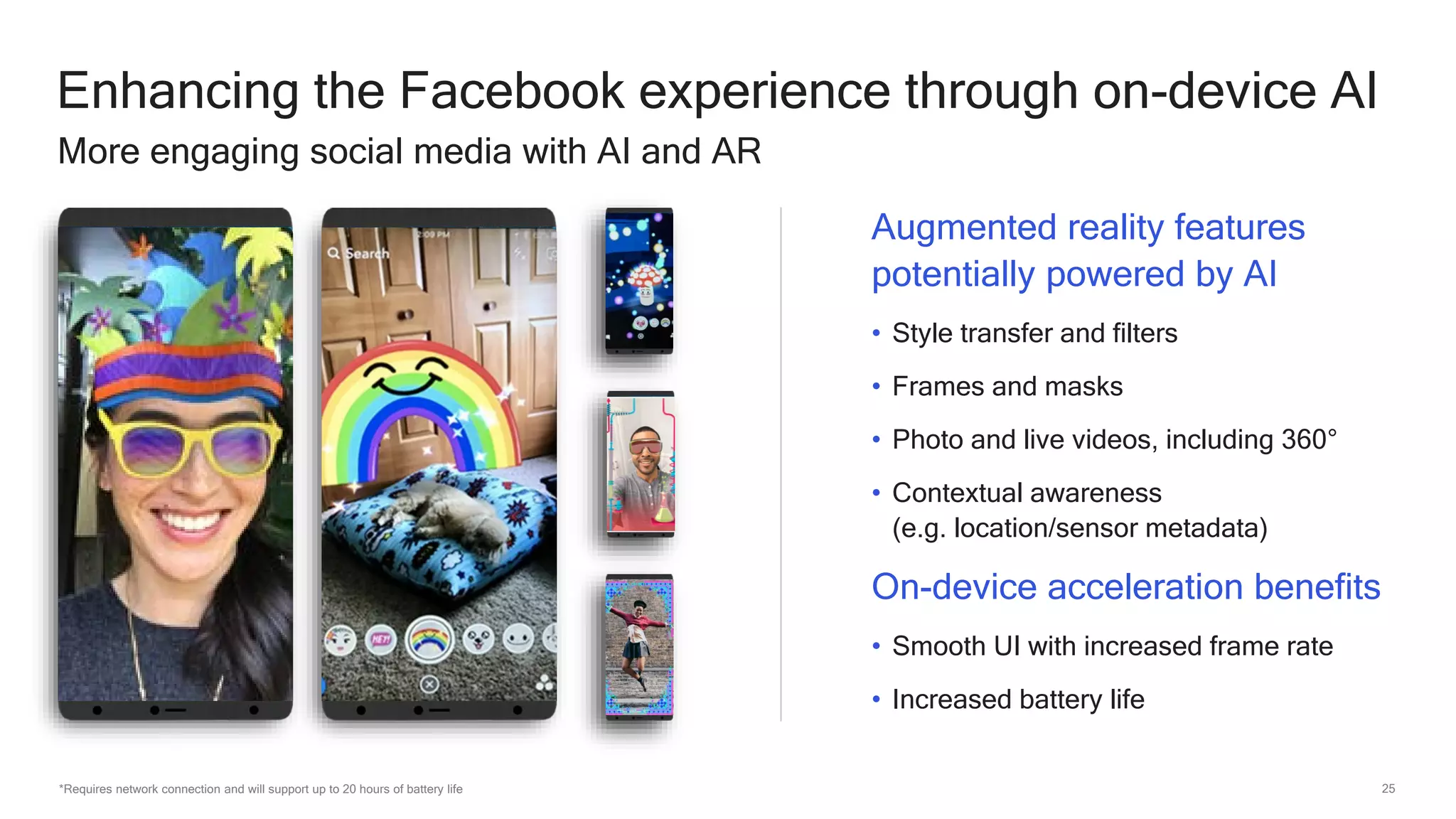

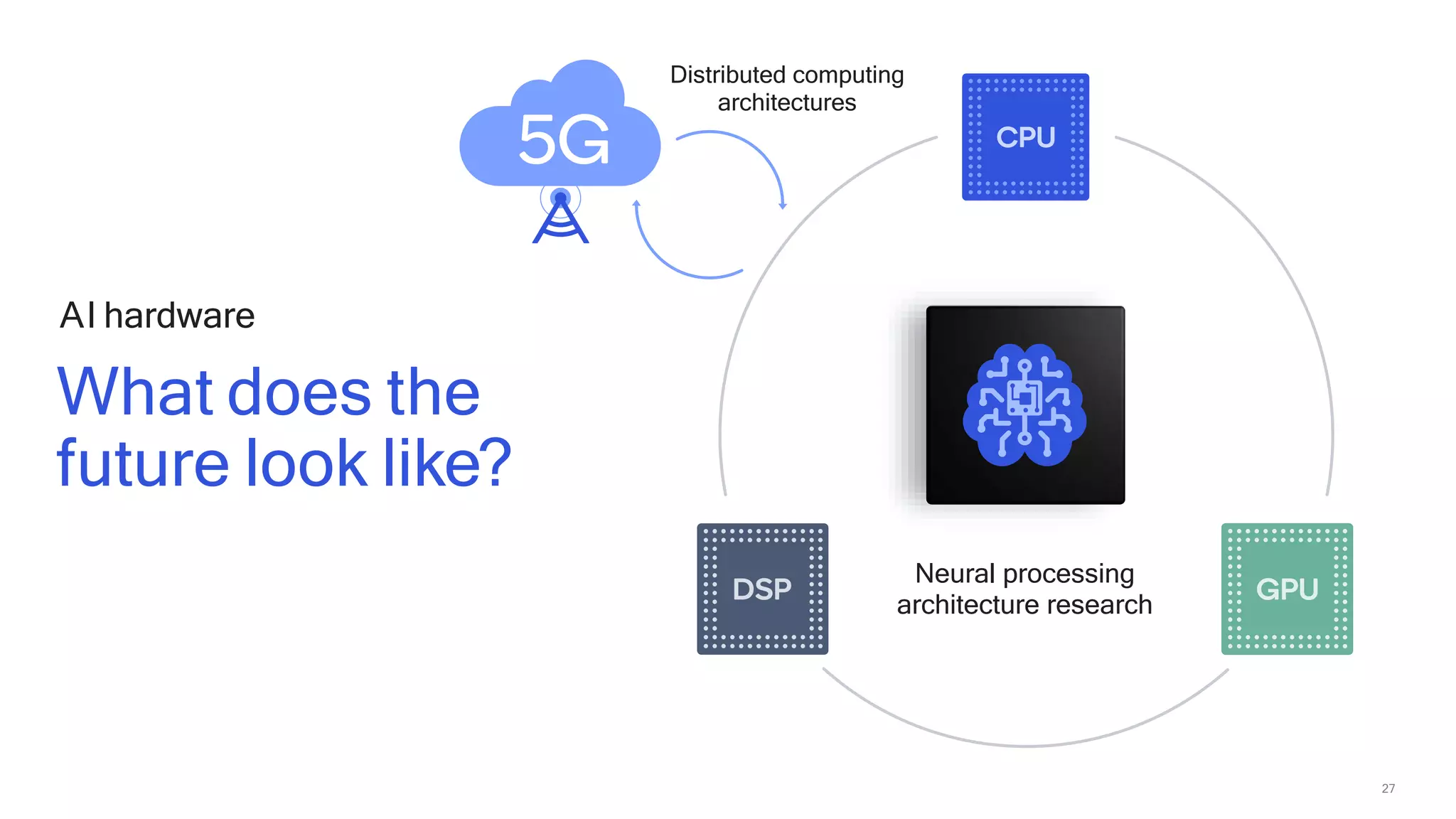

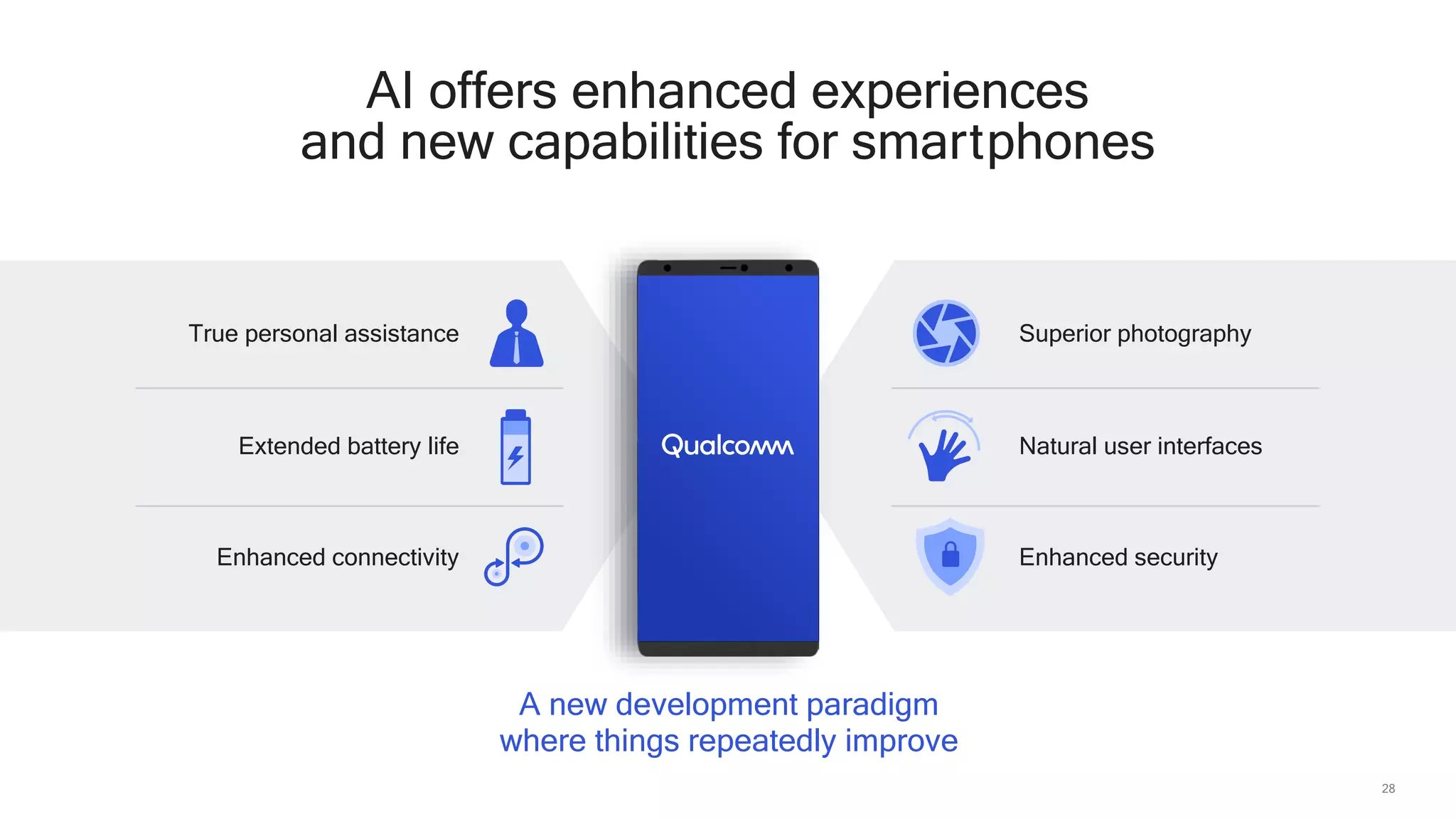

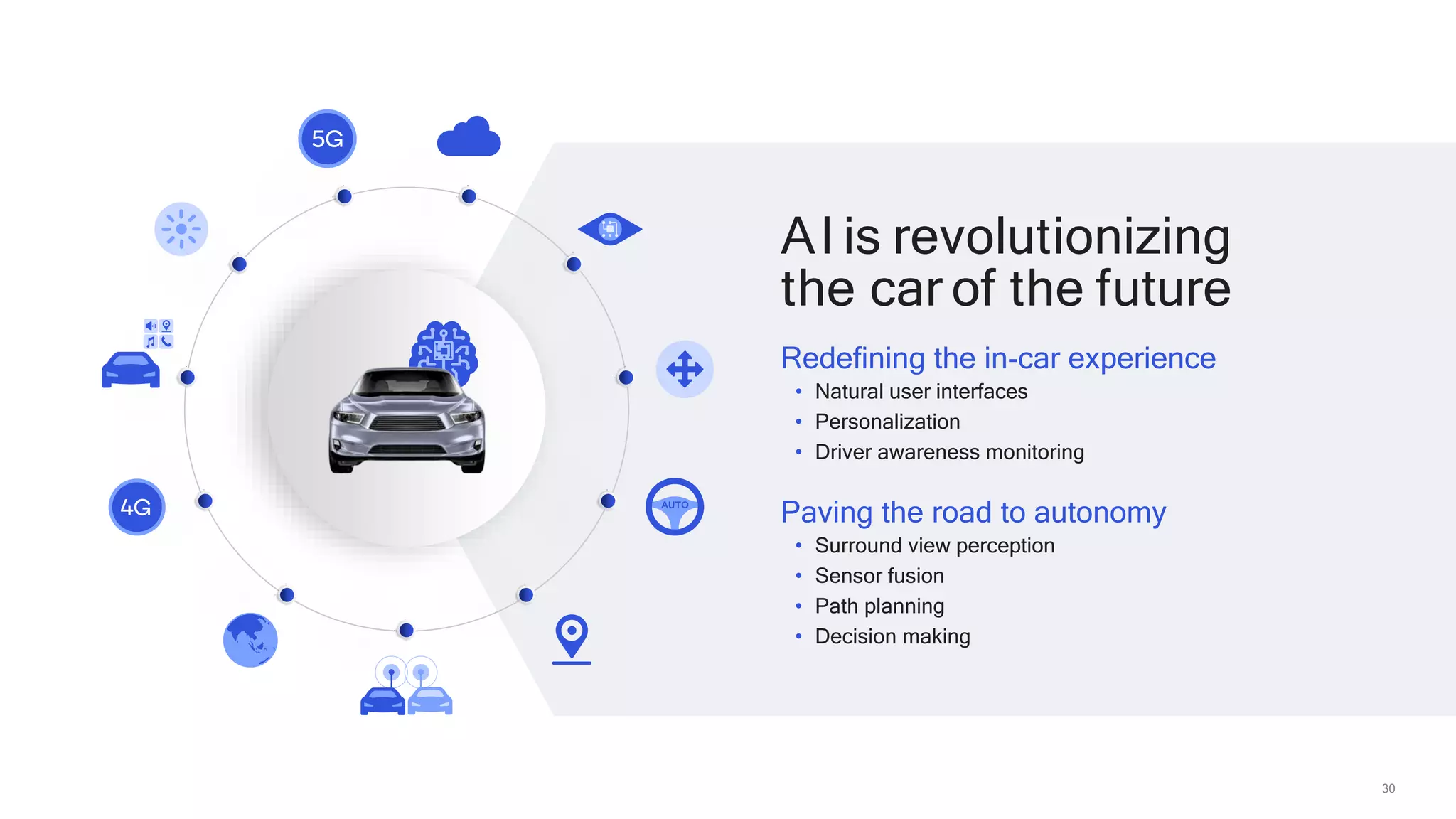

Qualcomm Technologies is advancing mobile AI, emphasizing the Snapdragon platform's capacity for efficient, high-performance on-device machine learning. Combining advanced algorithms with heterogeneous computing improves the user experience while prioritizing privacy and low latency. This work supports applications ranging from augmented reality to automotive technology, illustrating the transformative potential of AI on mobile devices.