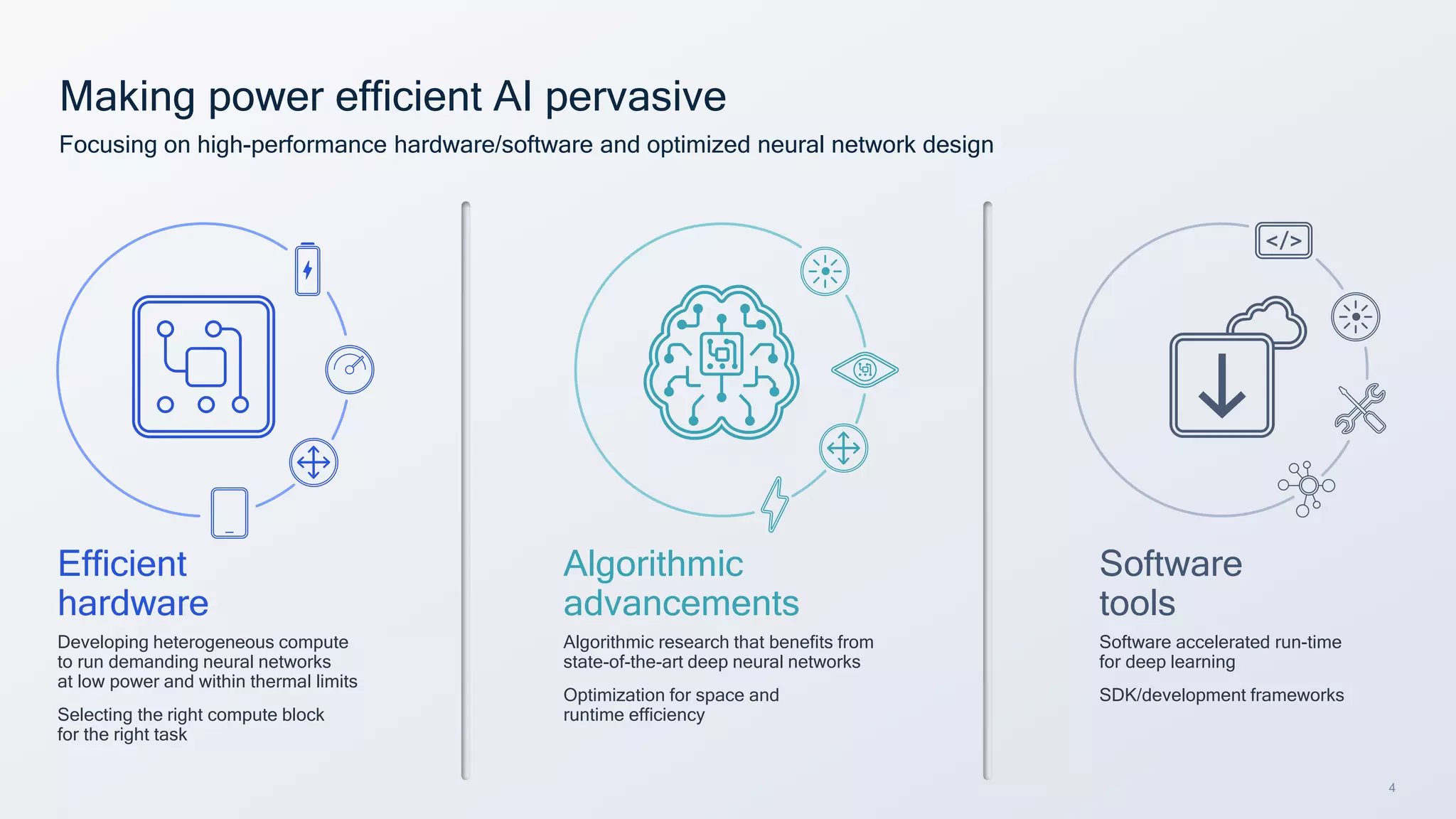

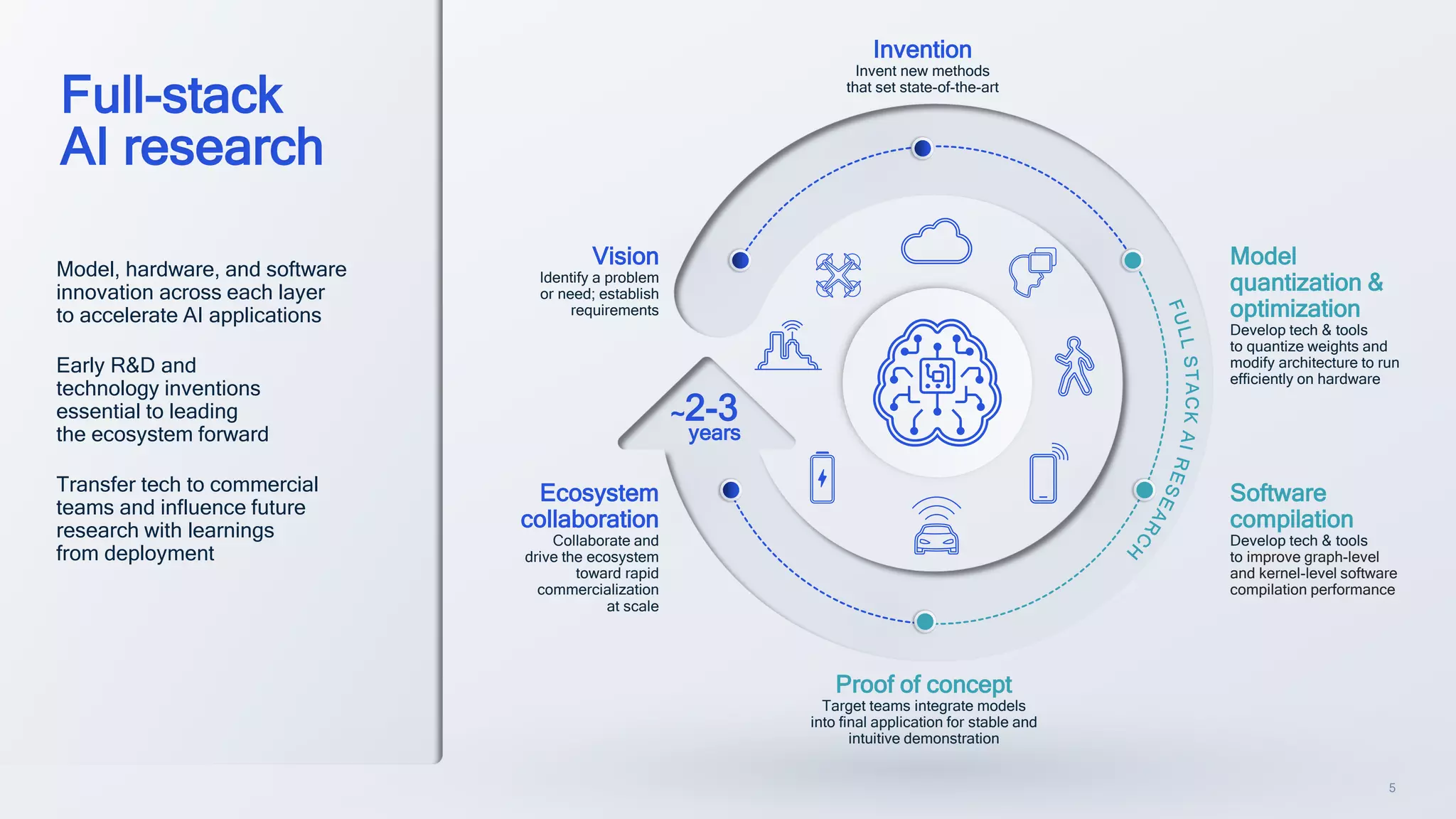

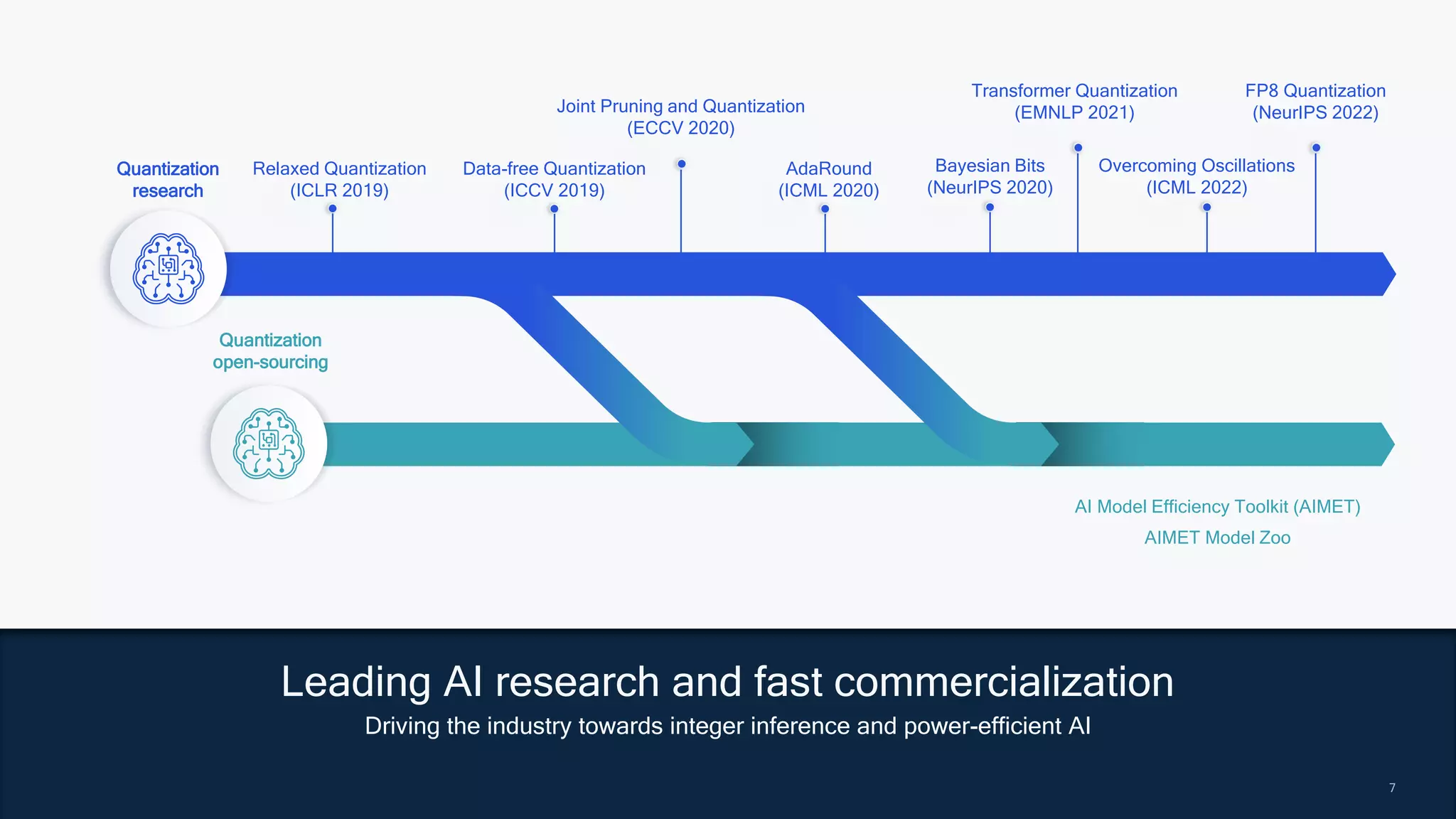

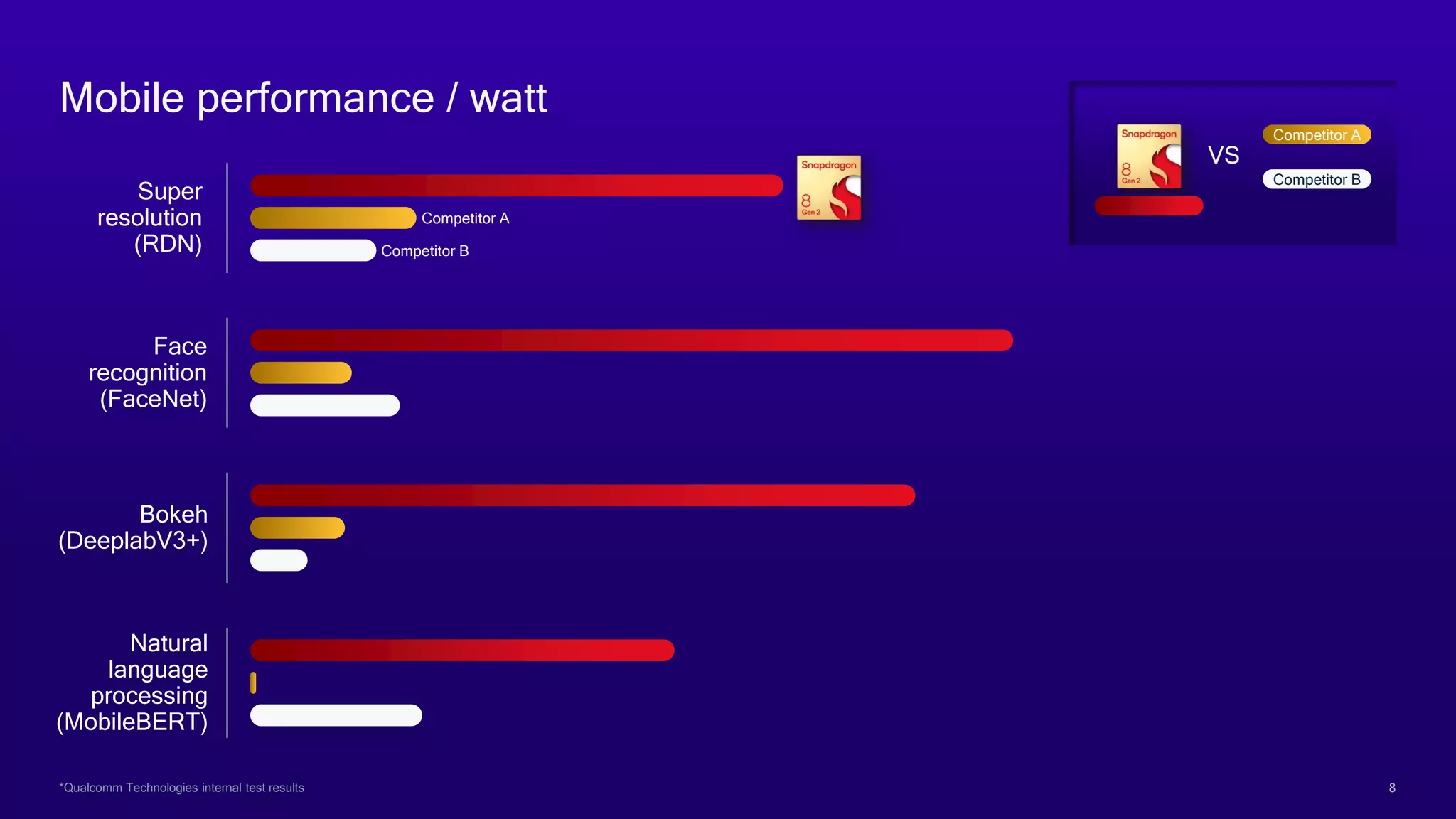

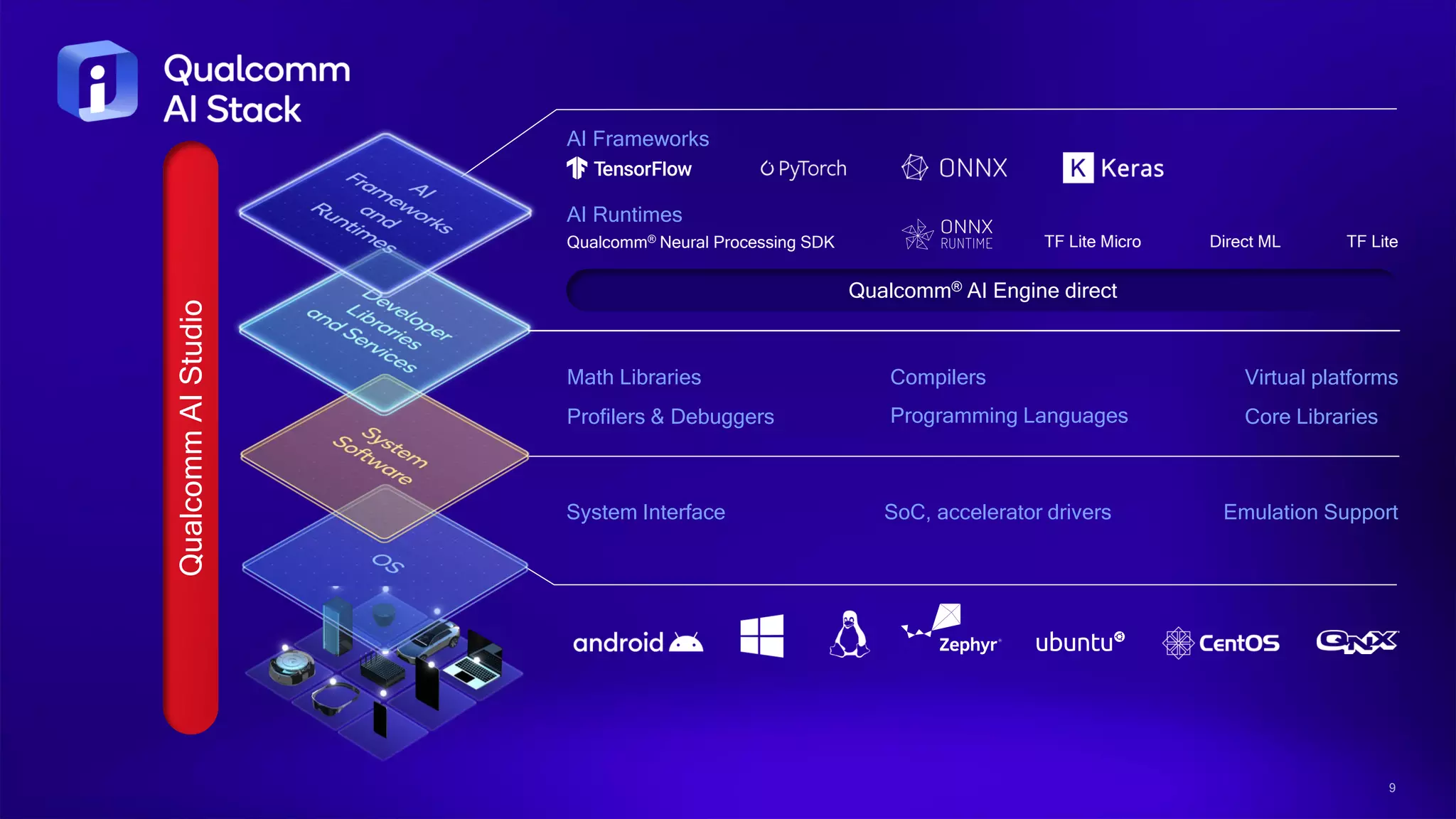

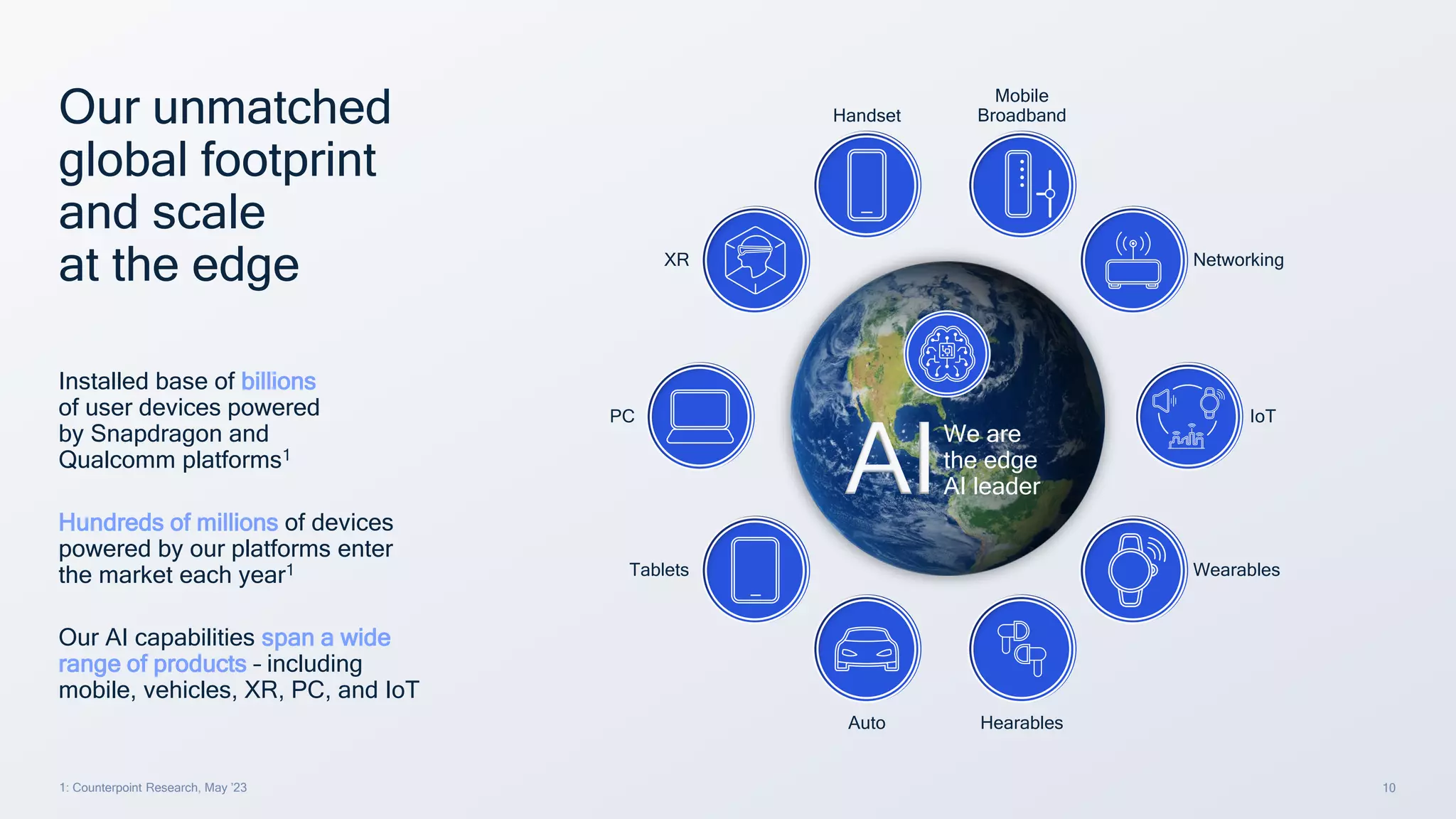

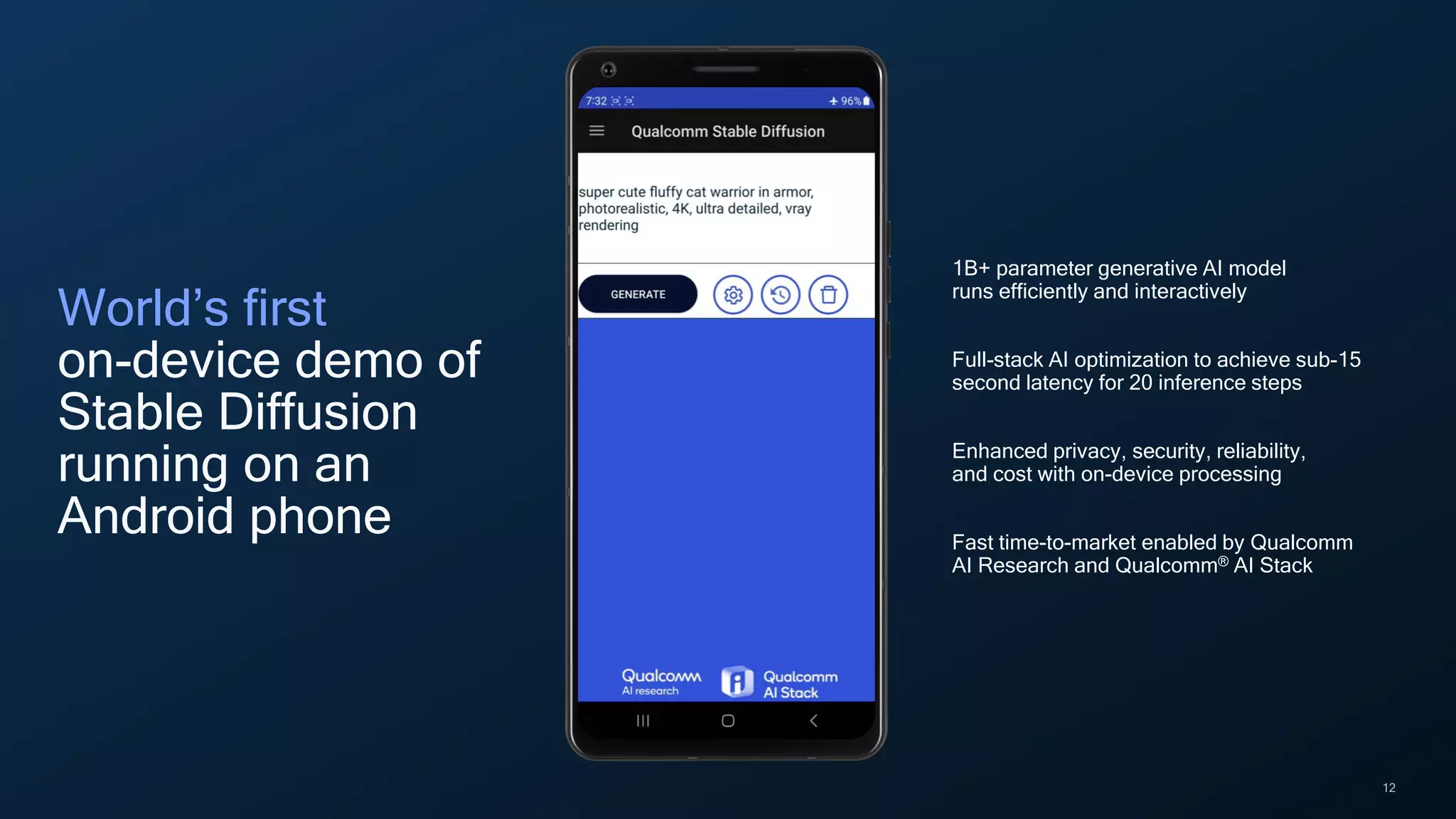

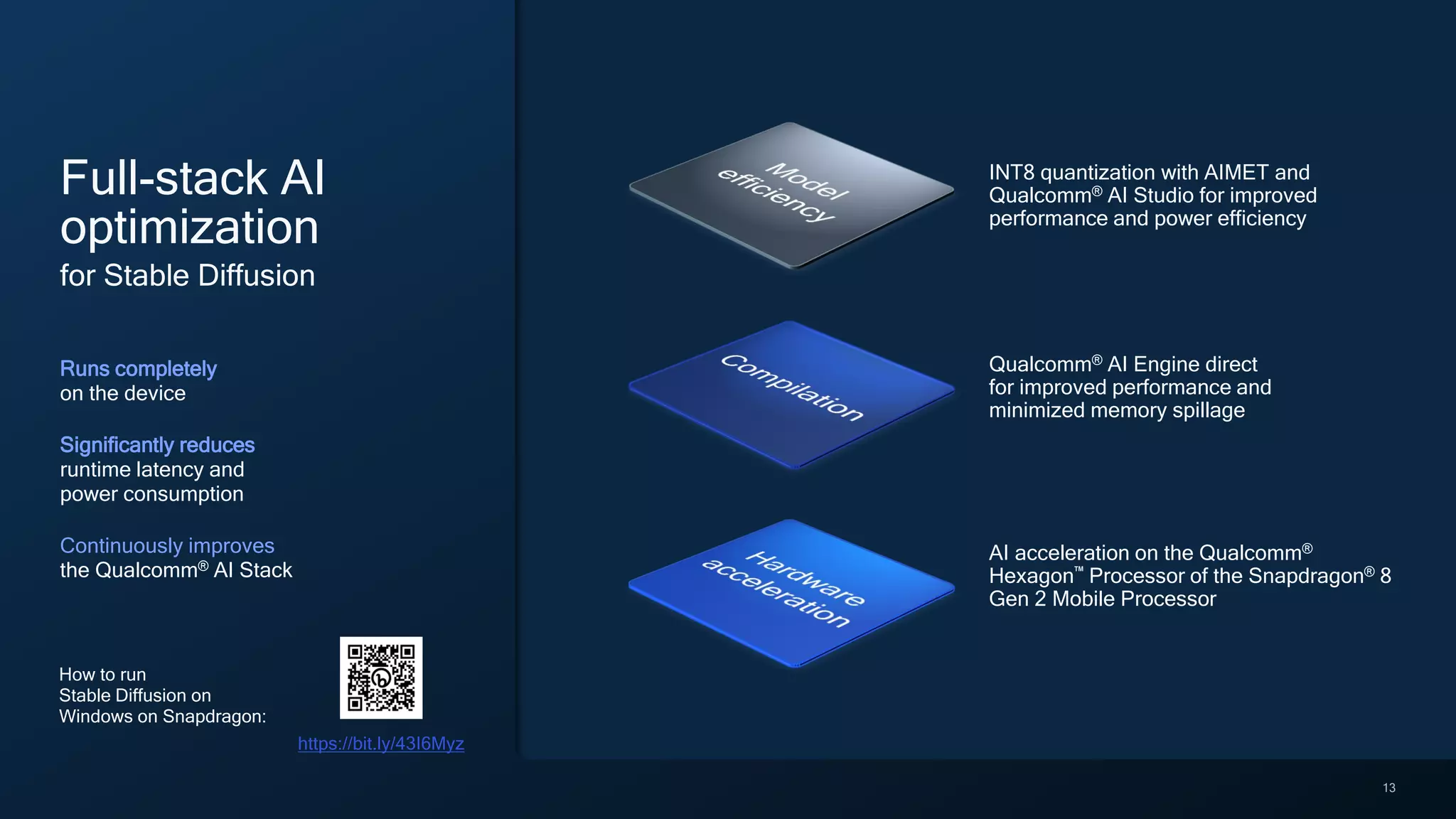

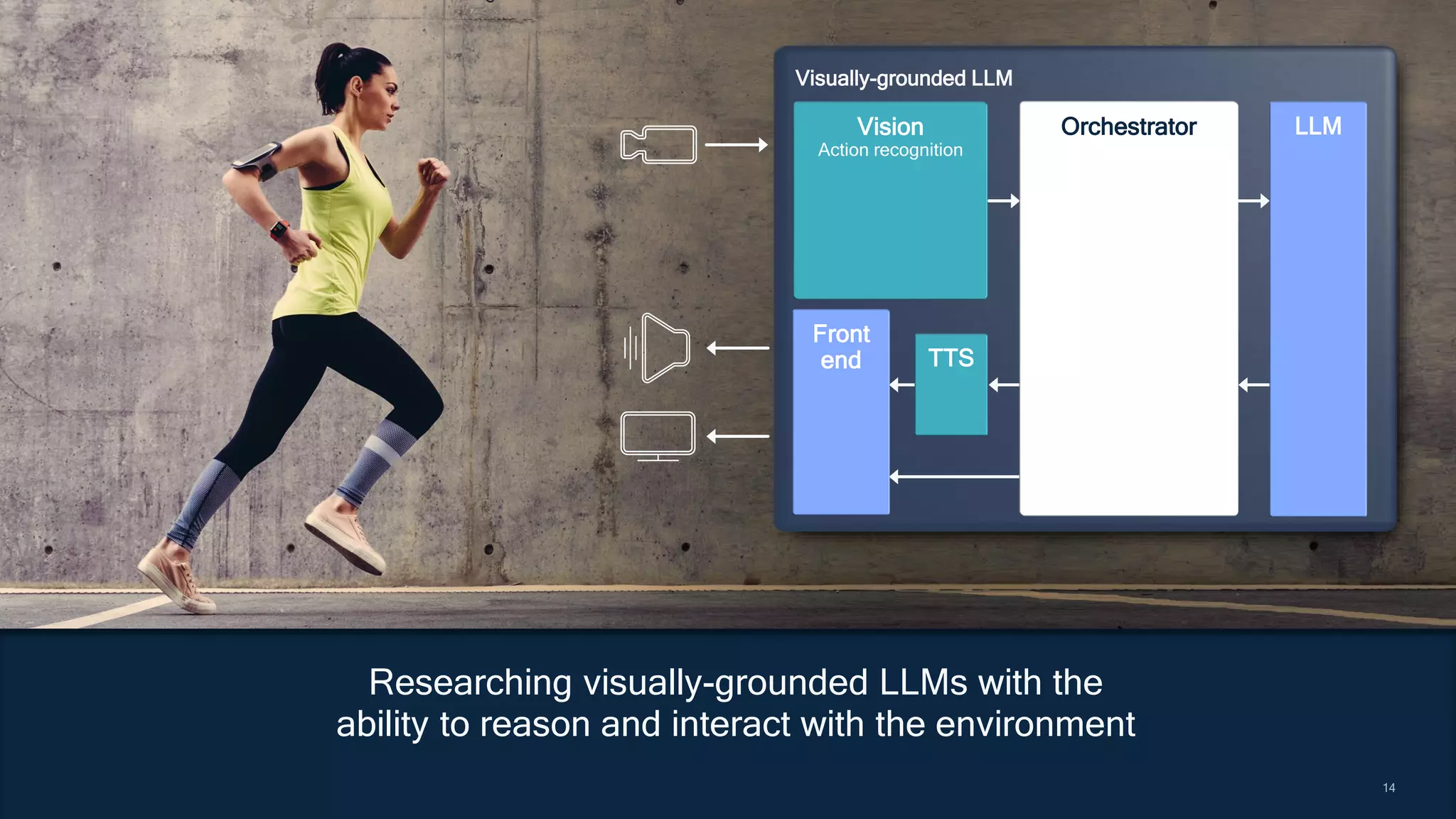

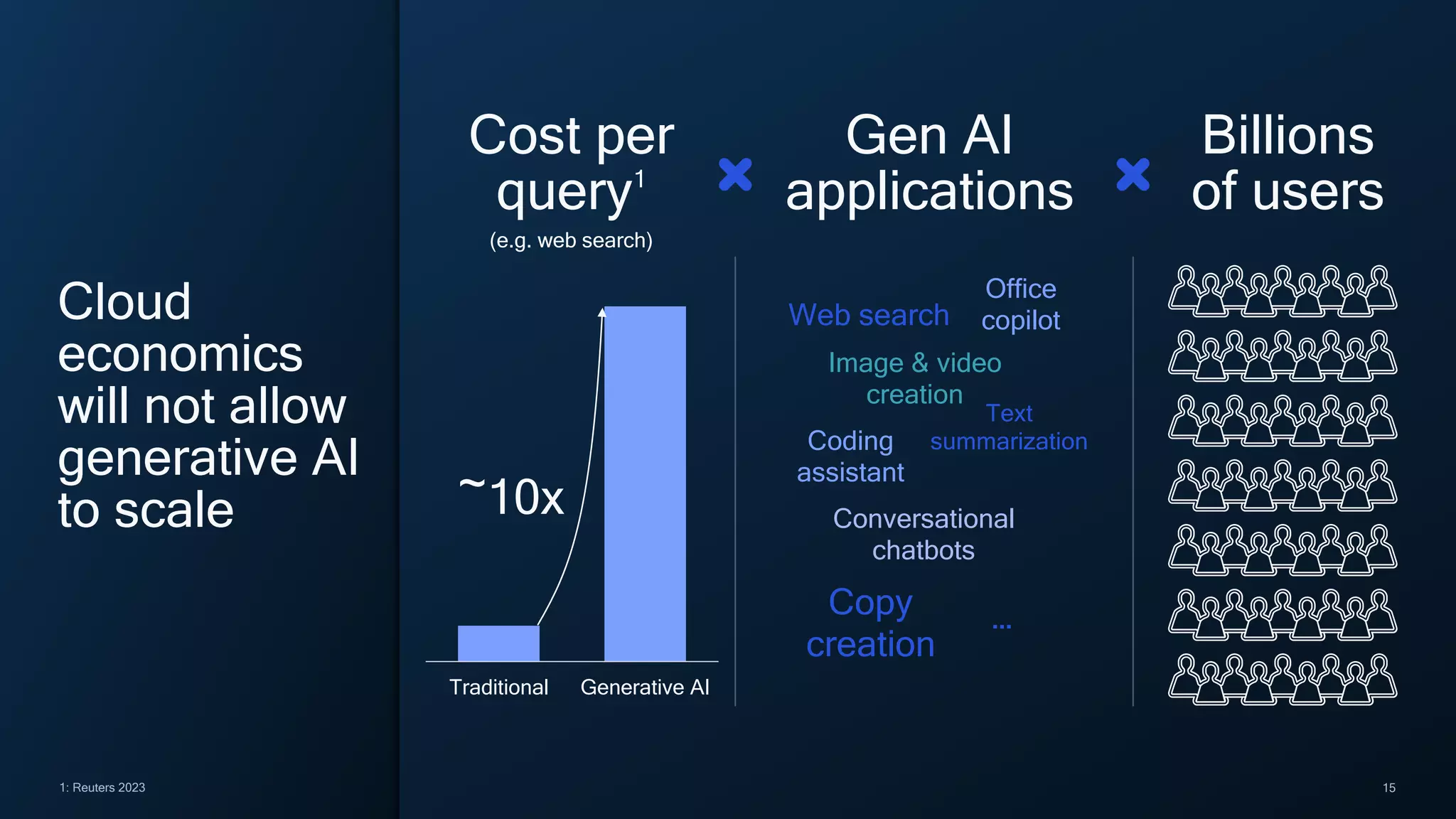

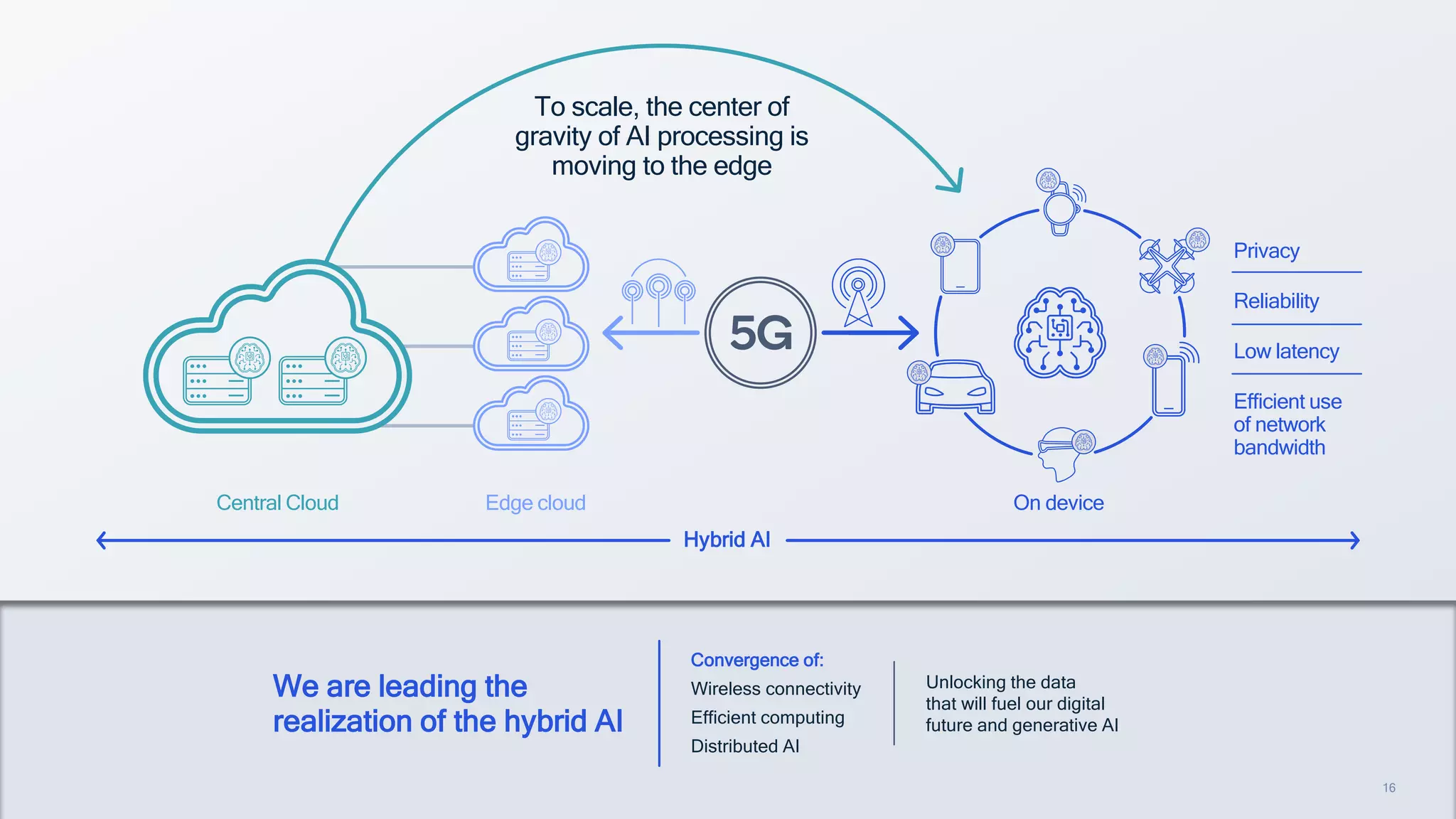

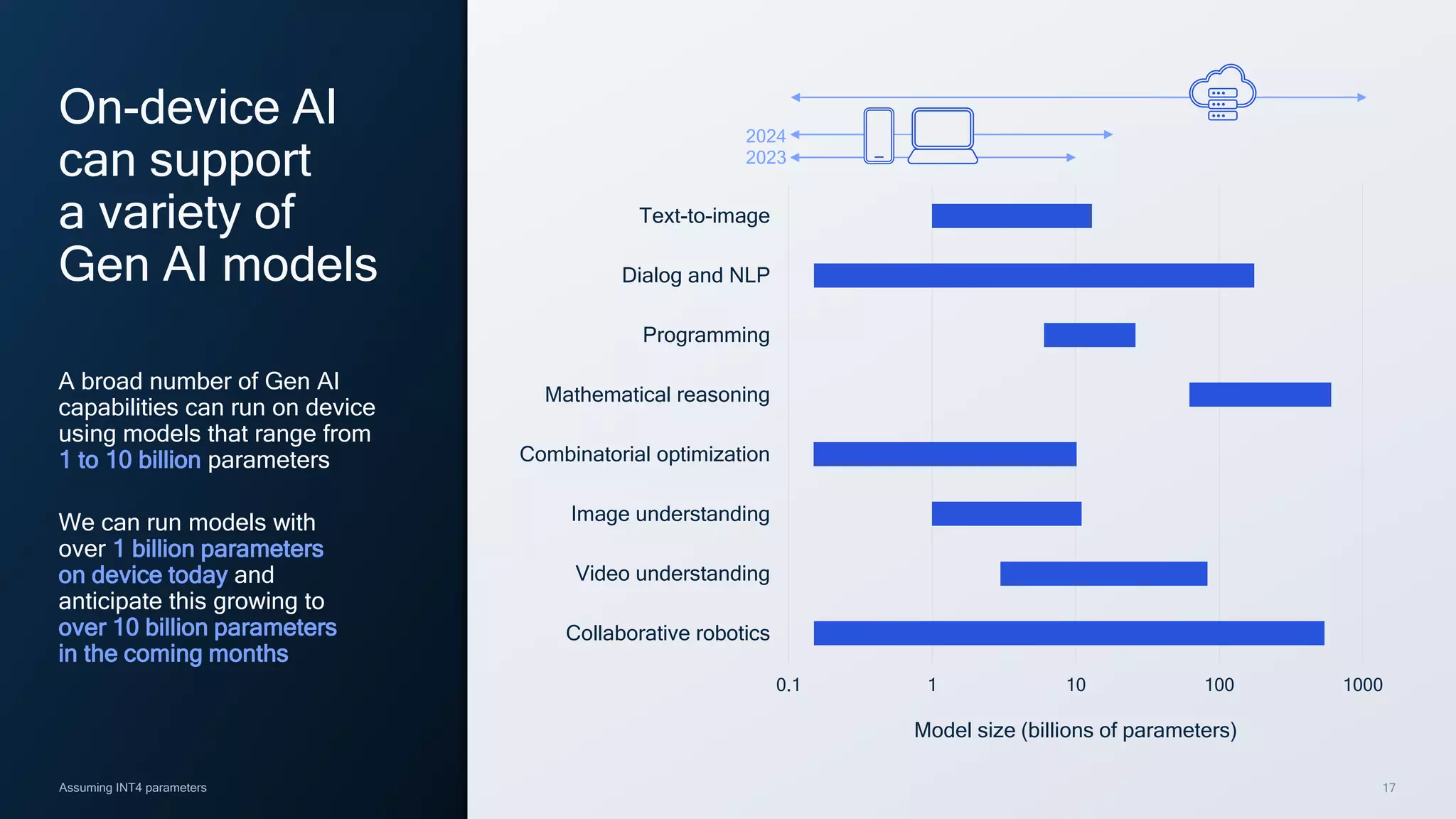

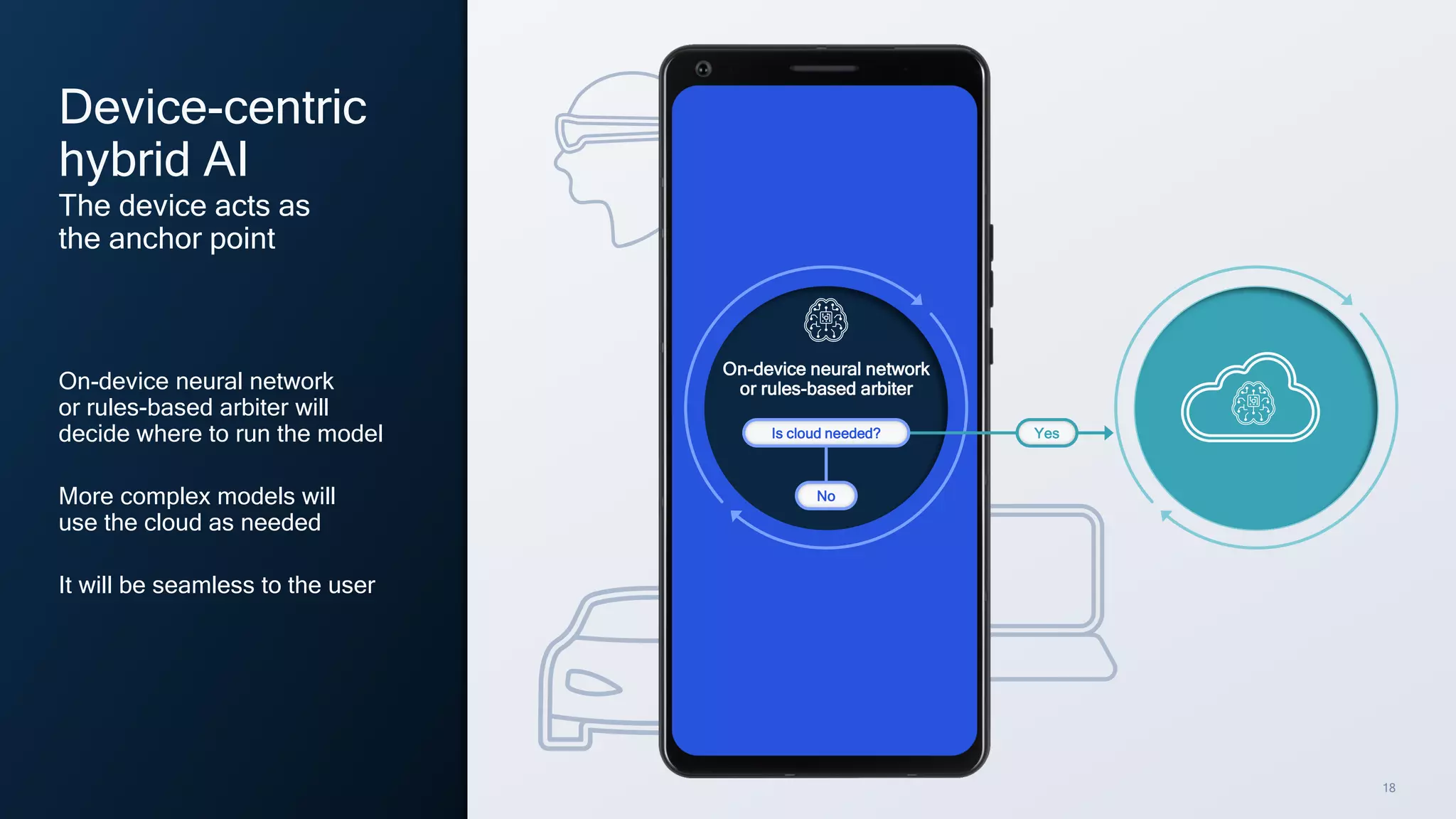

Qualcomm presents on-device artificial intelligence (AI) as essential for privacy, reliability, and efficiency. It describes the future of AI as hybrid, combining on-device intelligence with cloud resources to improve performance across applications, including generative AI. The company aims to lead in AI by developing power-efficient models and optimizing neural networks for widespread on-device deployment.