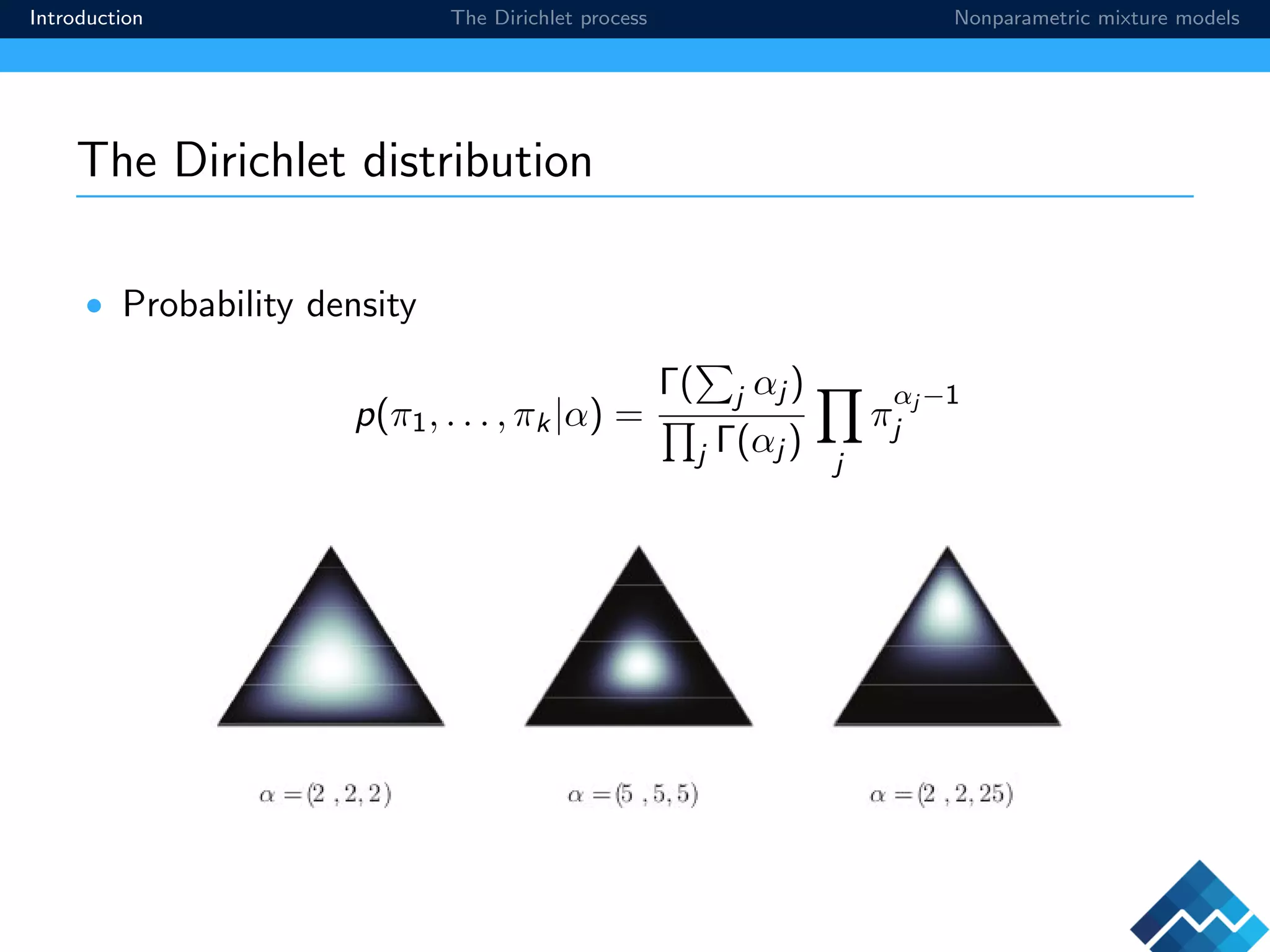

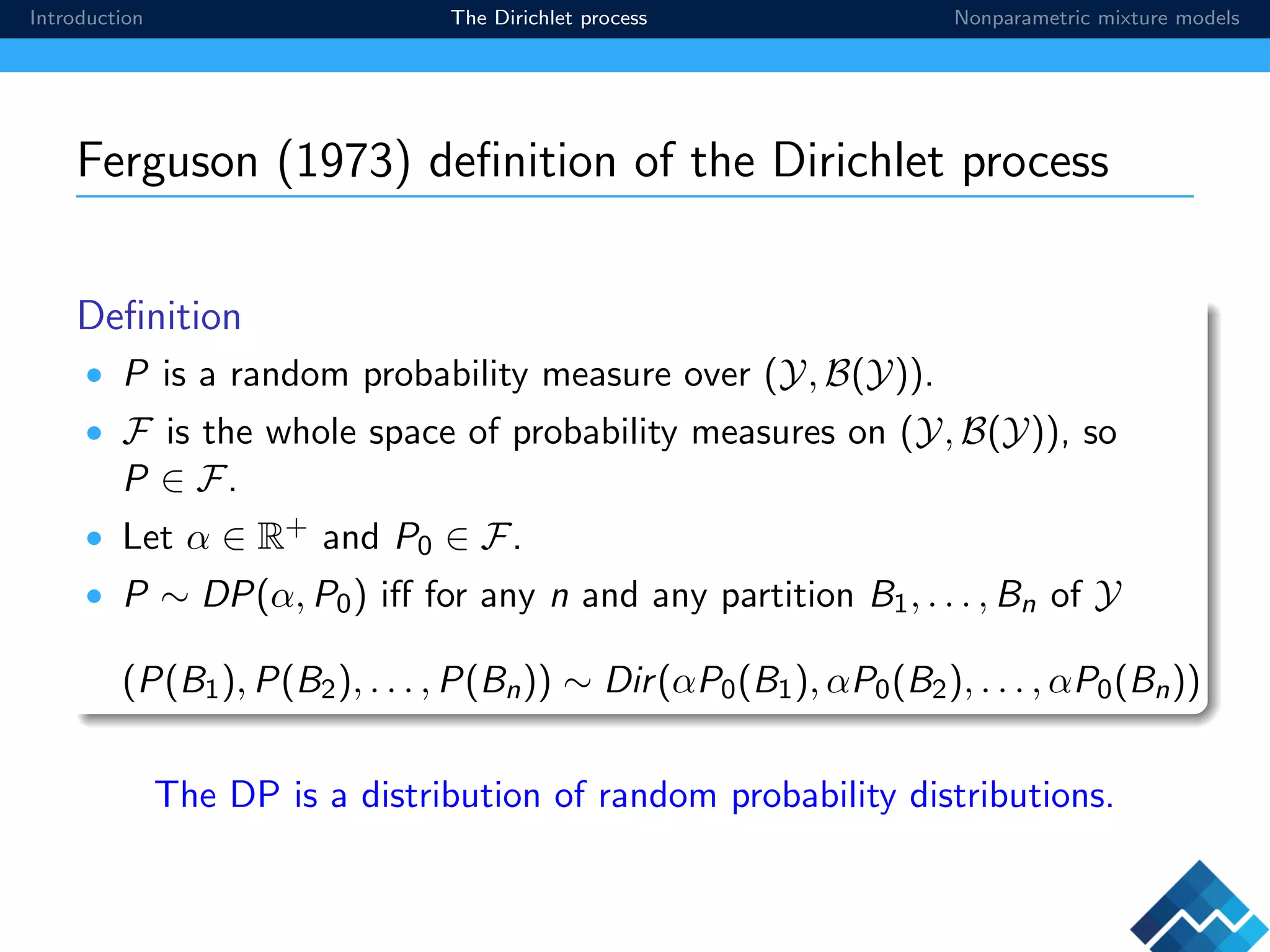

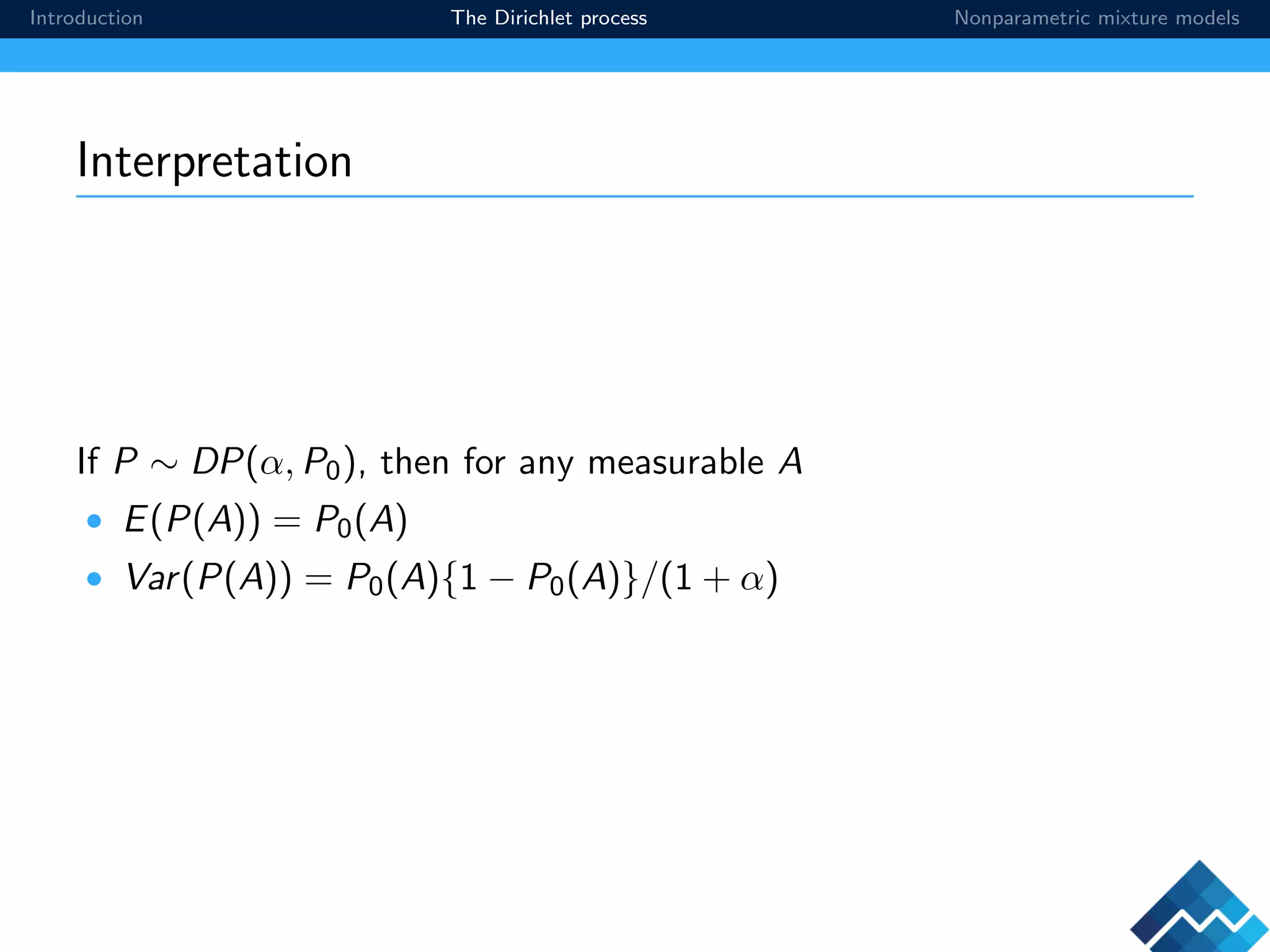

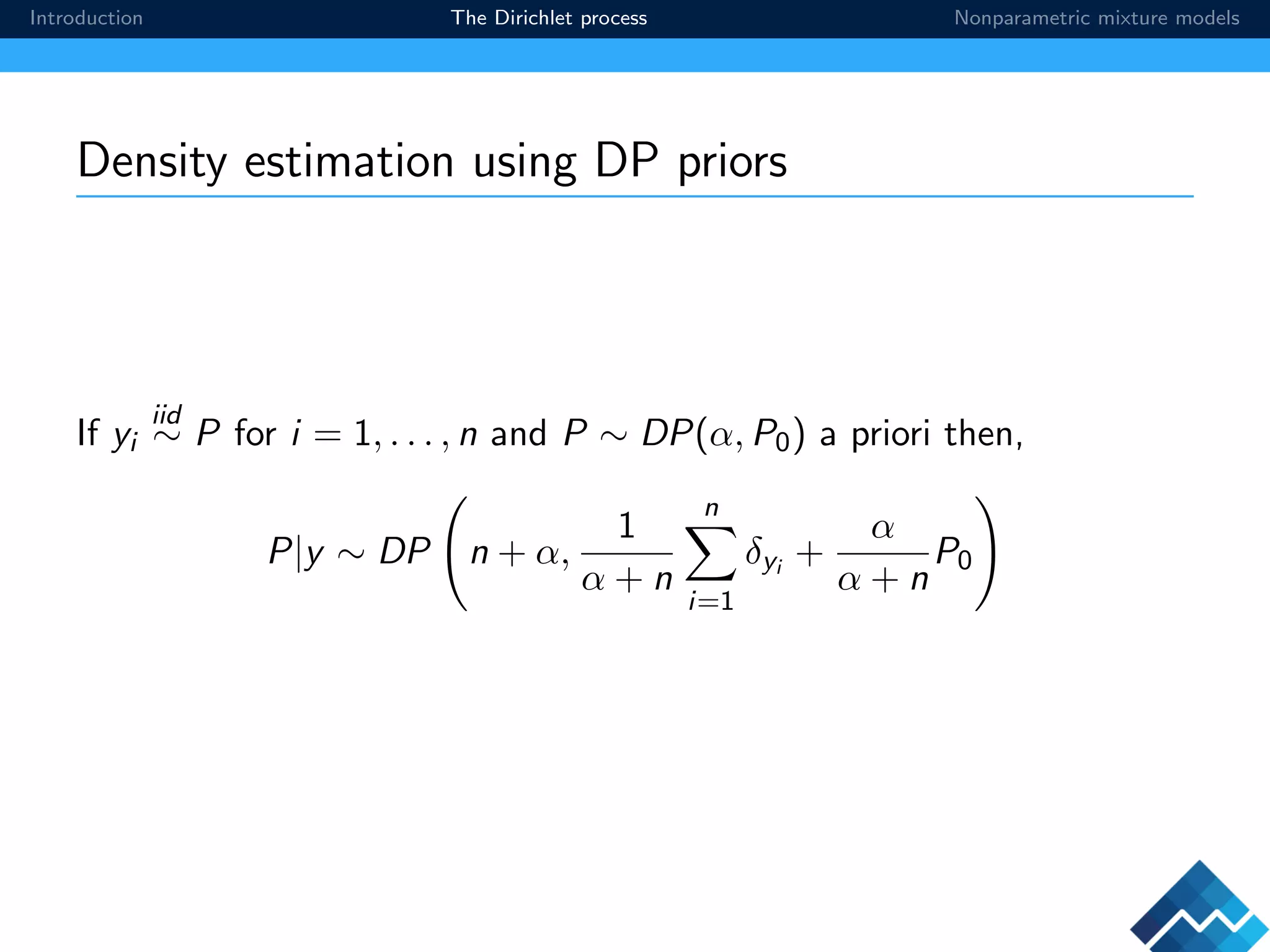

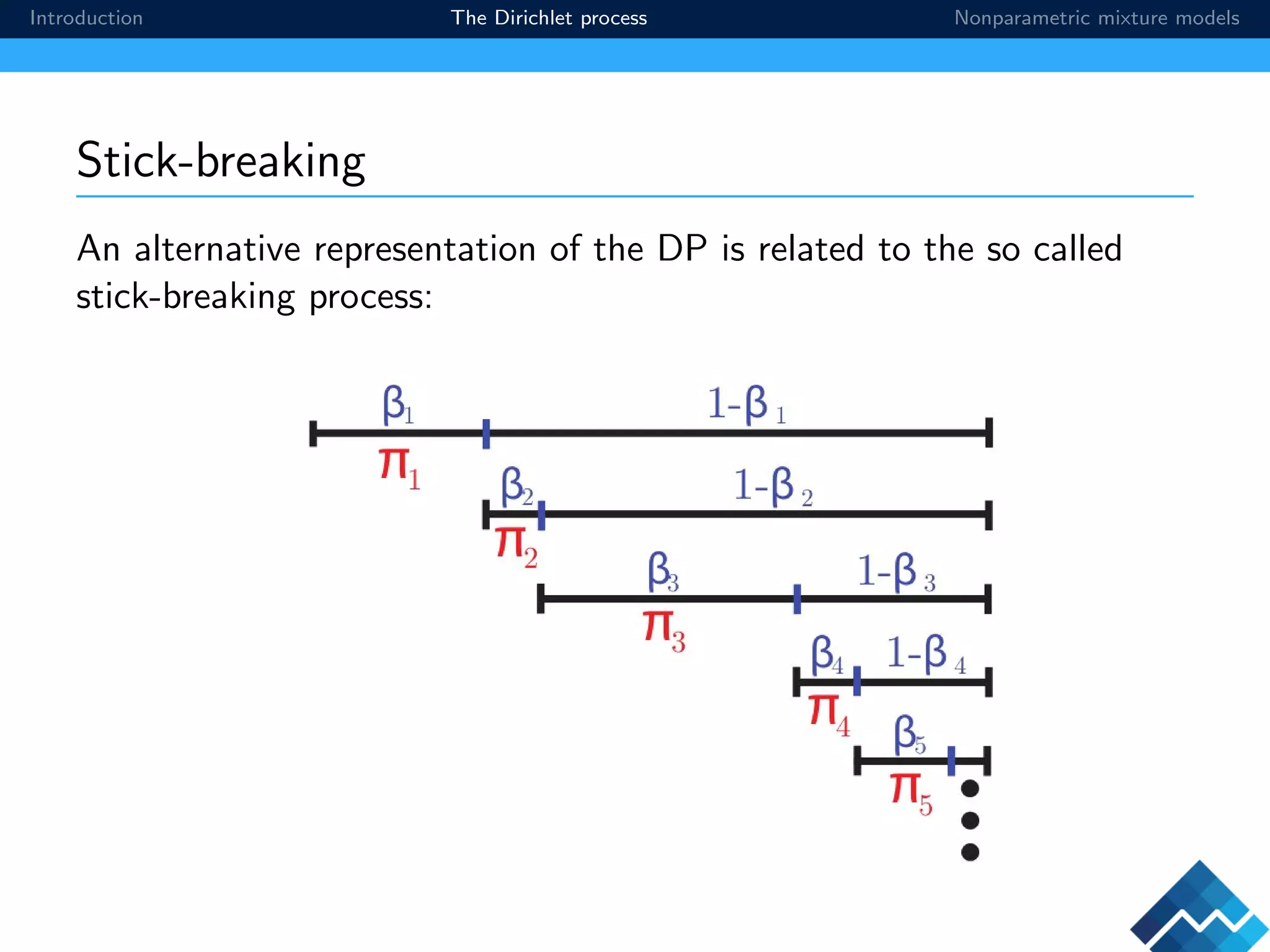

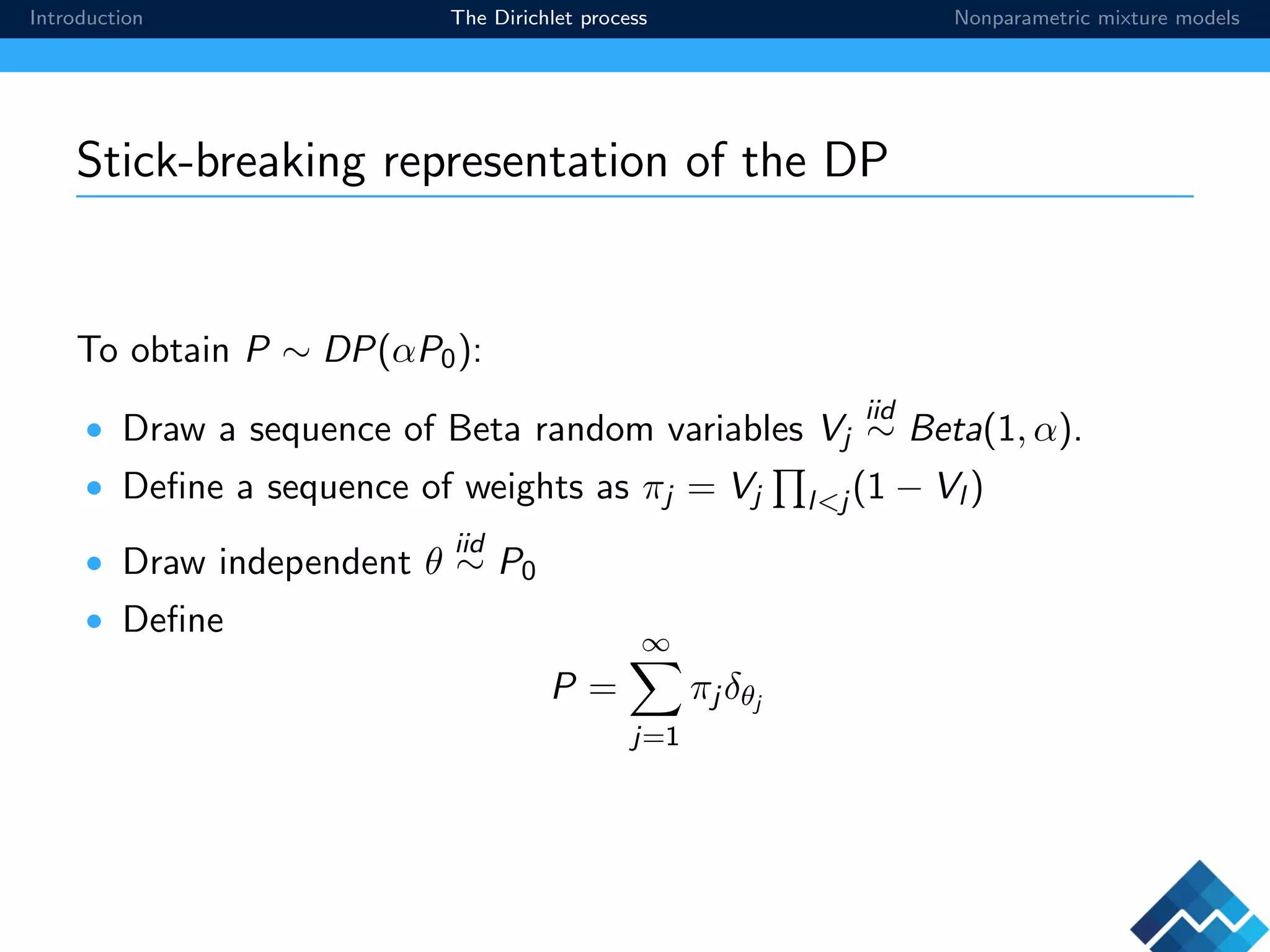

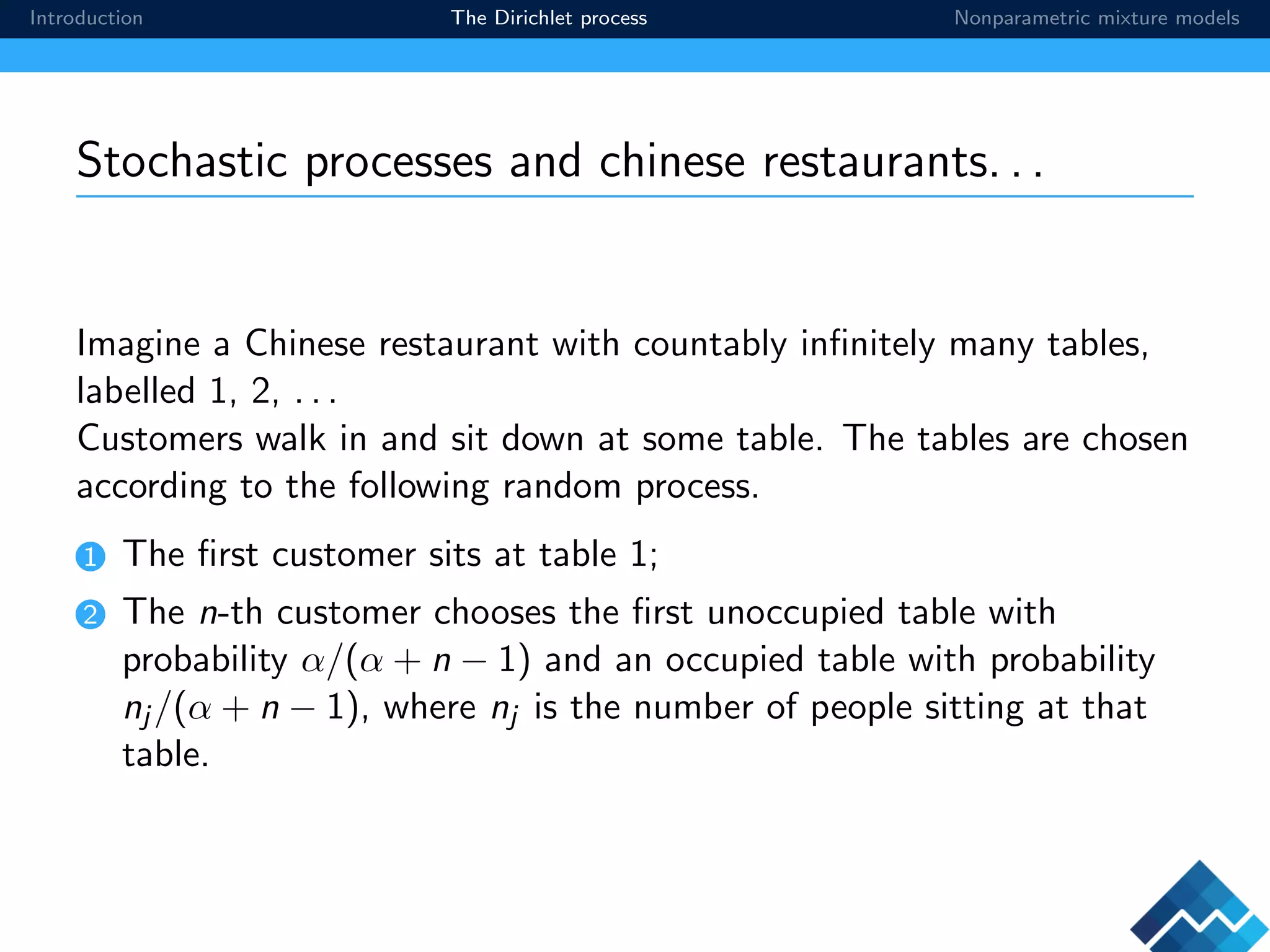

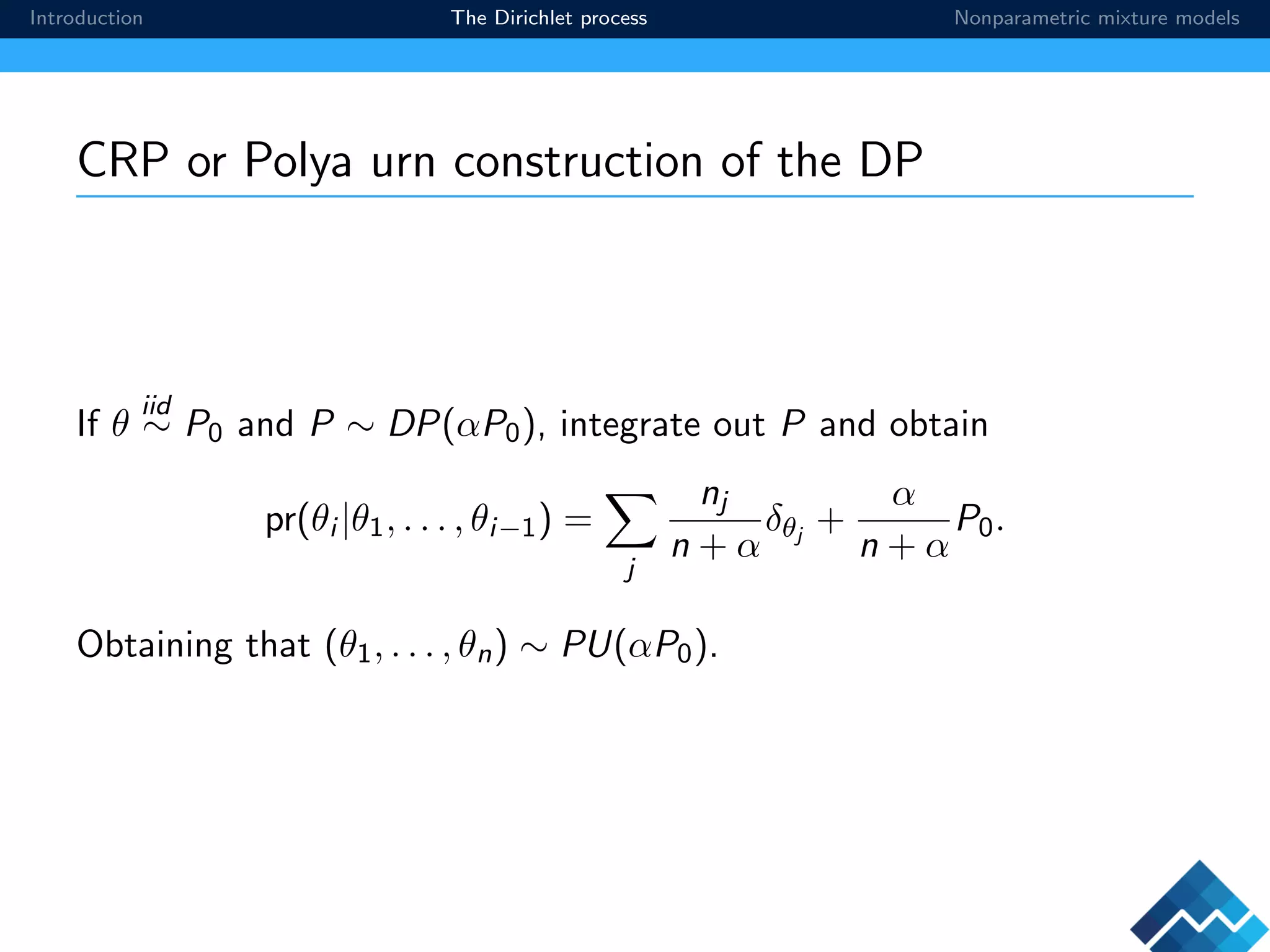

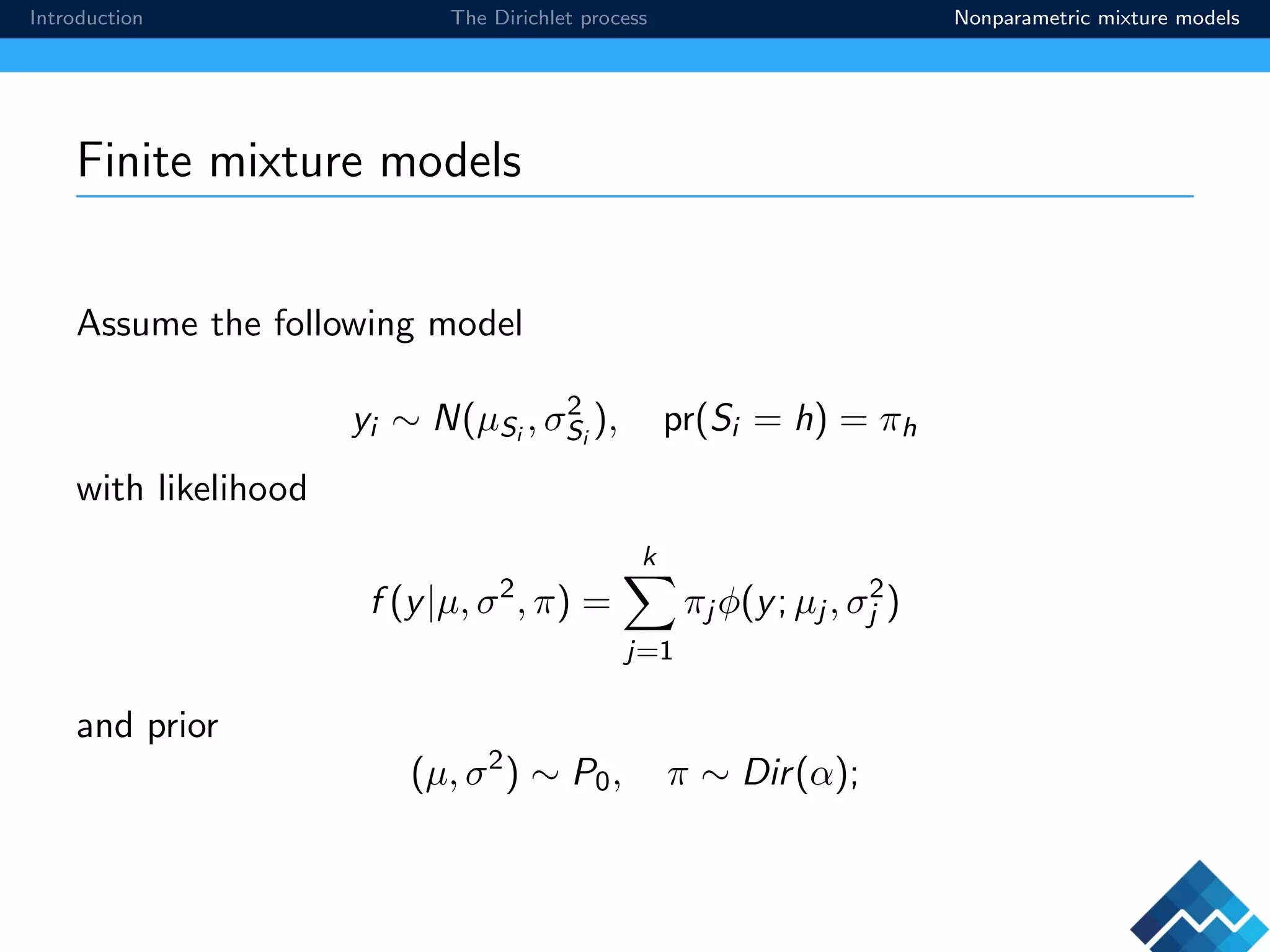

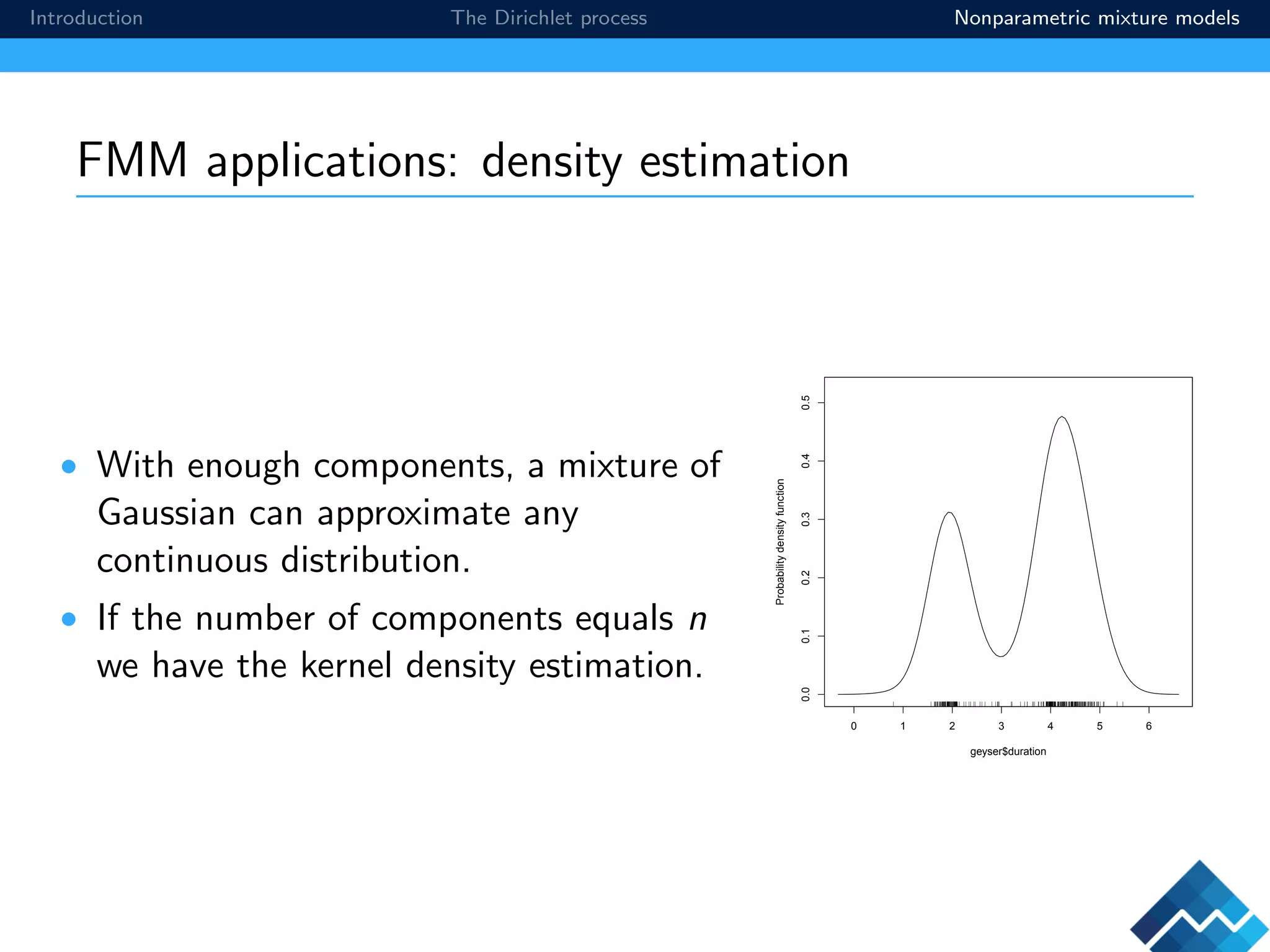

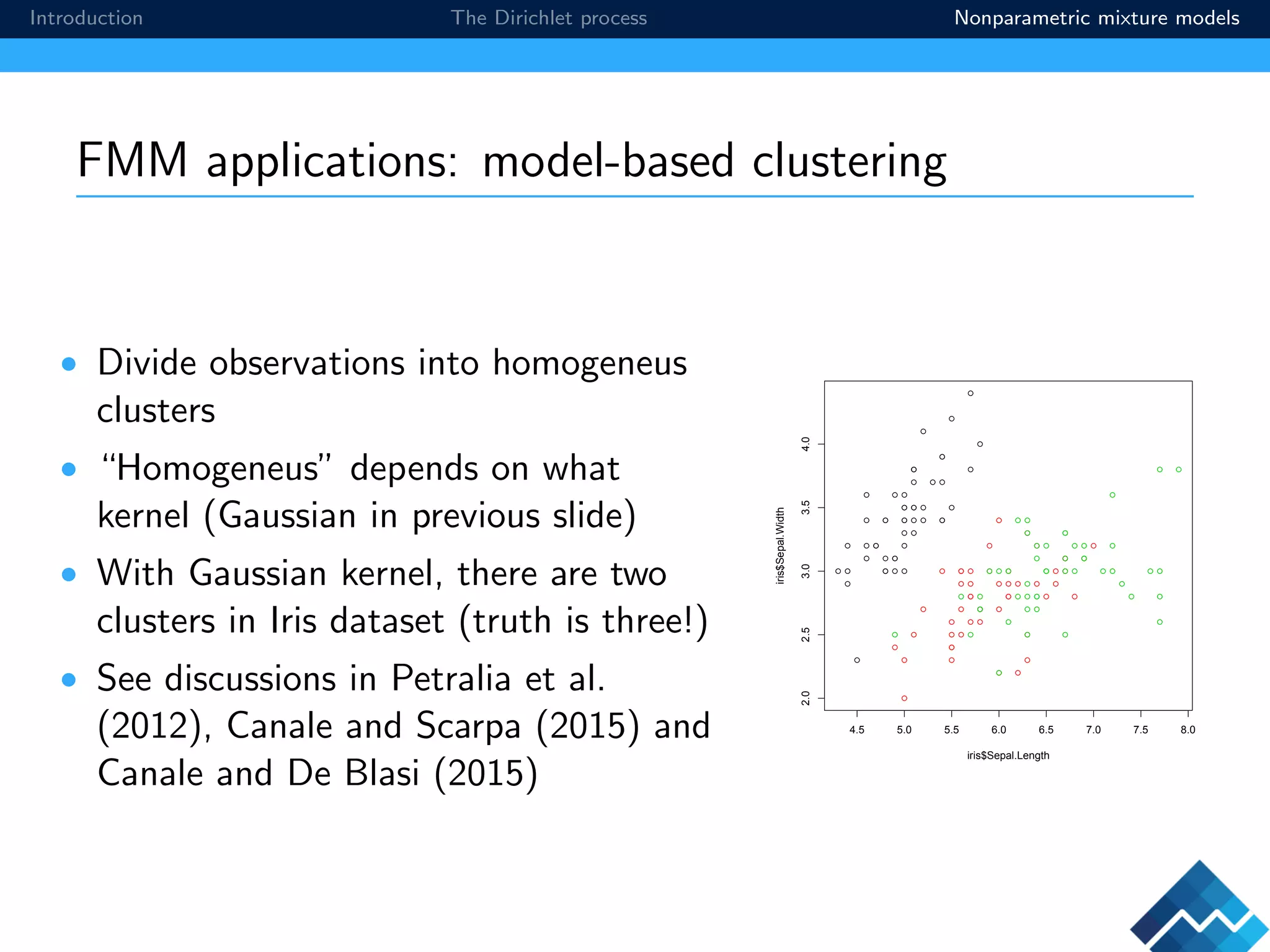

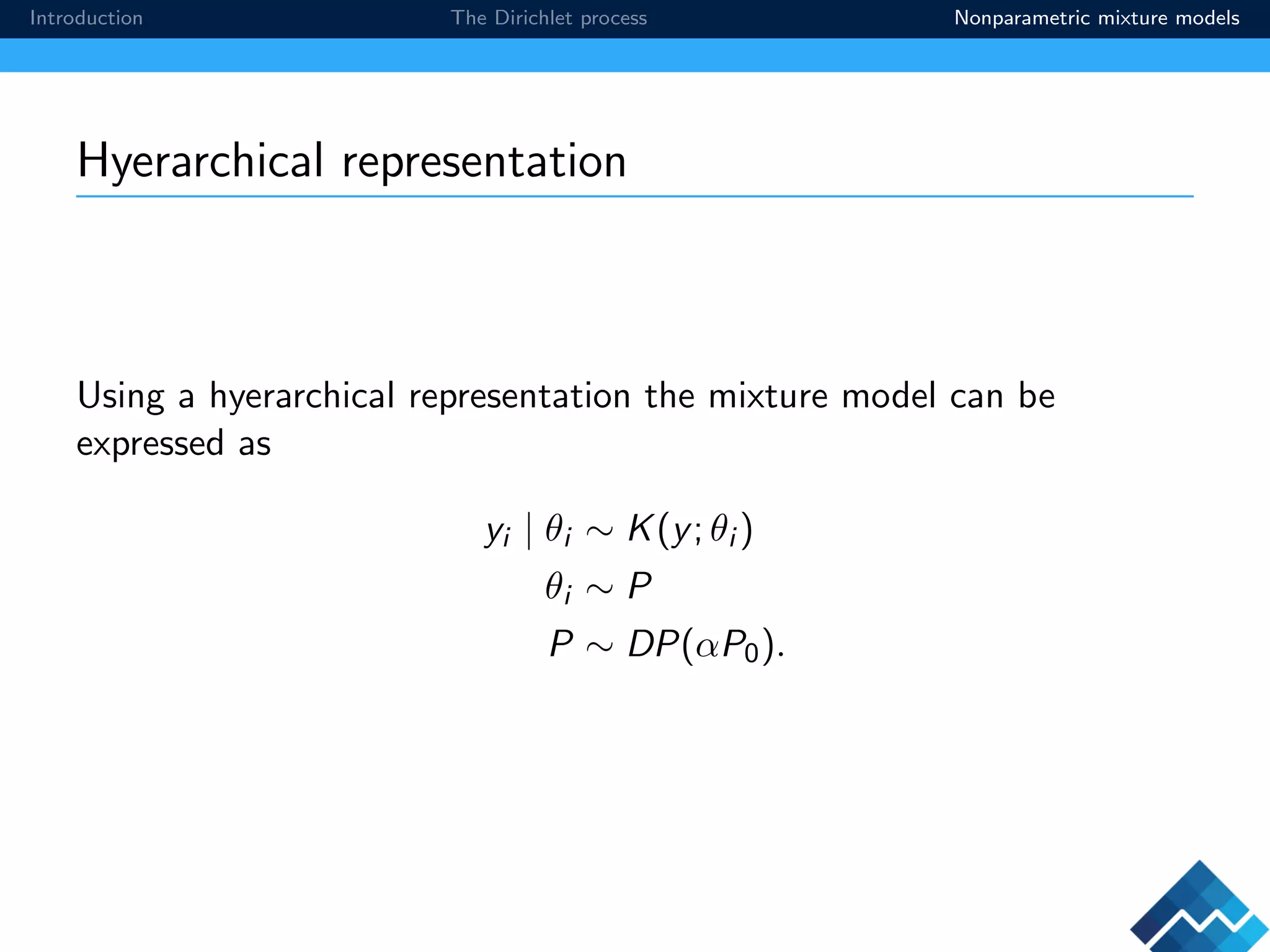

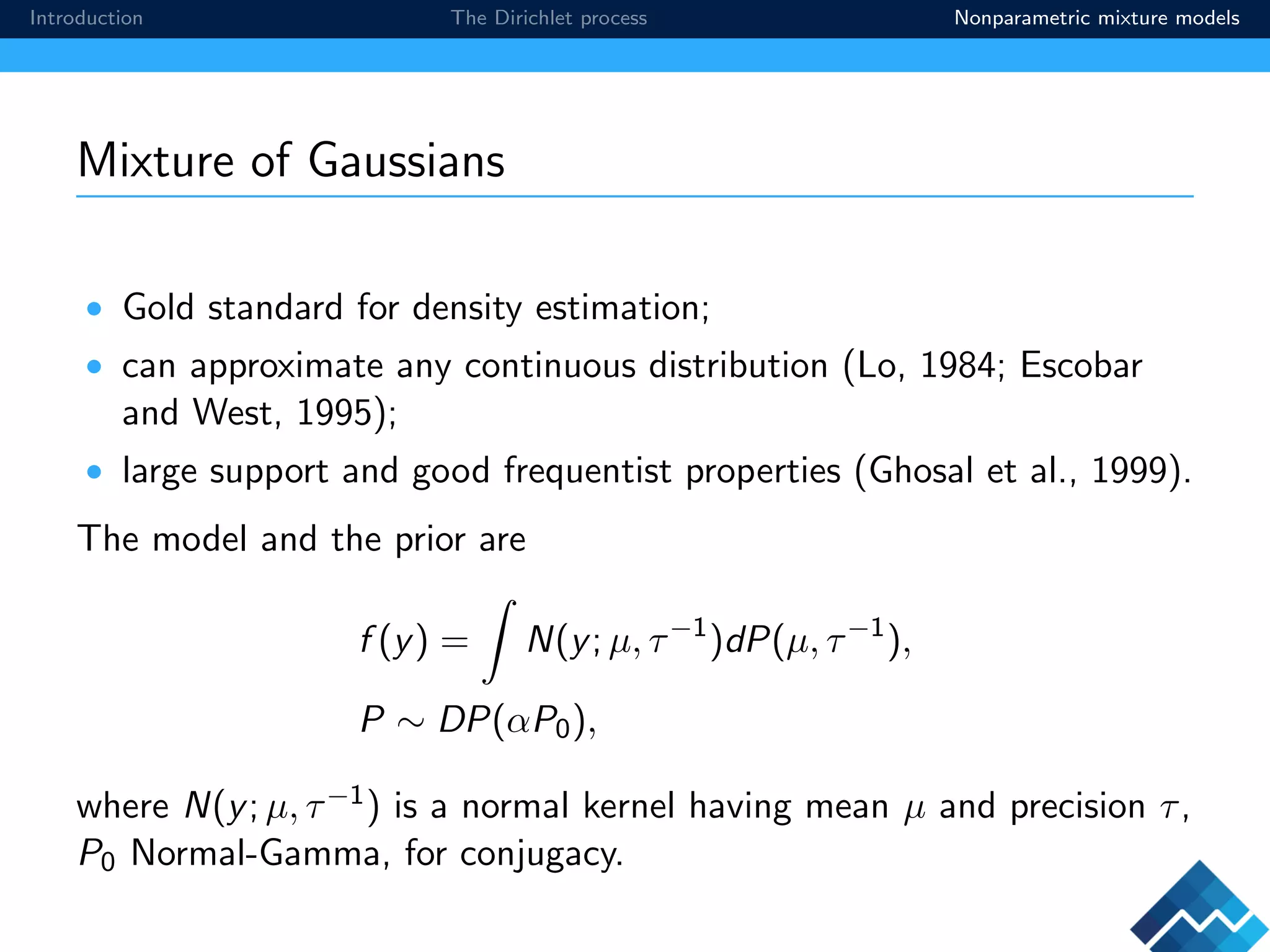

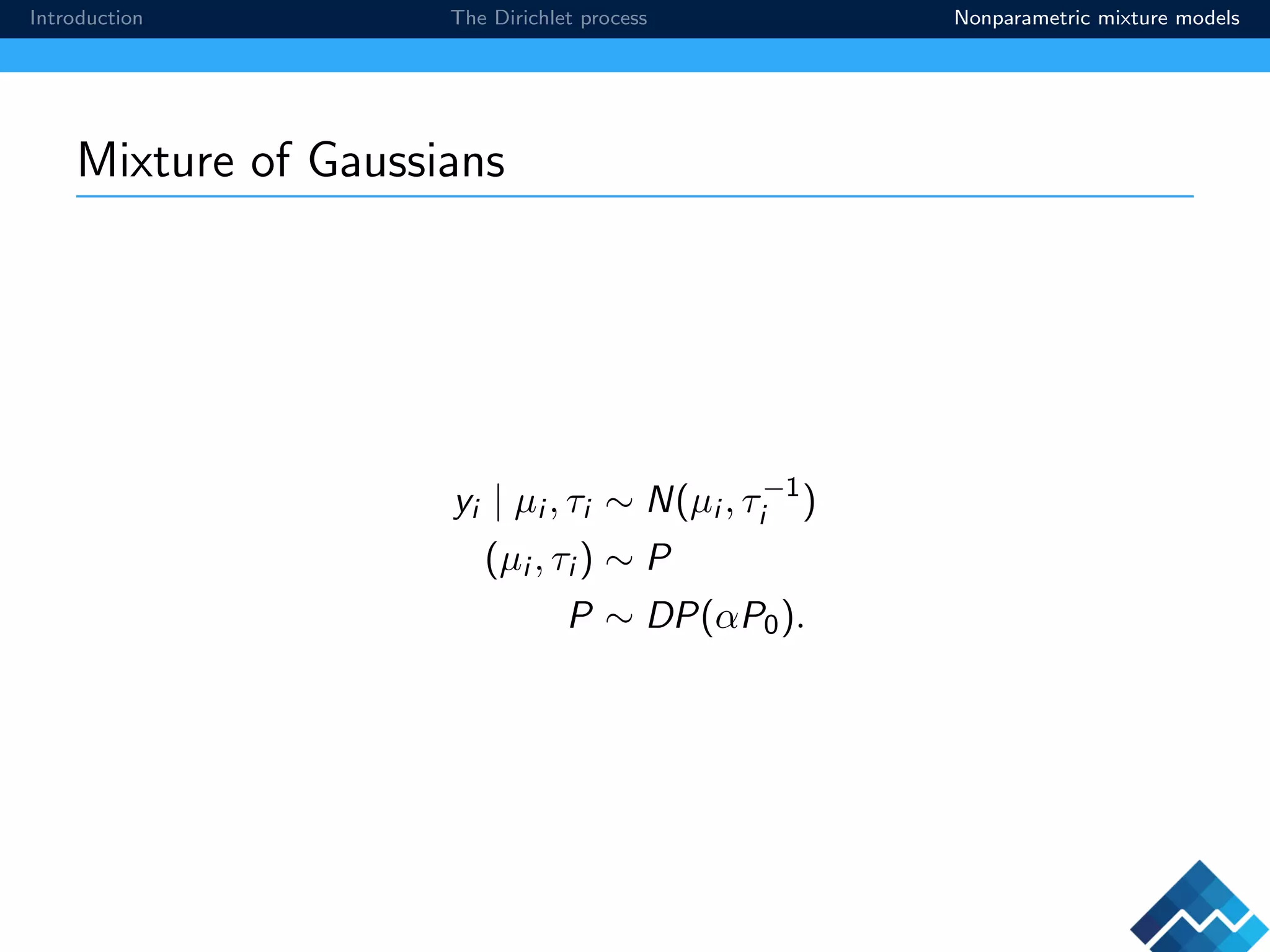

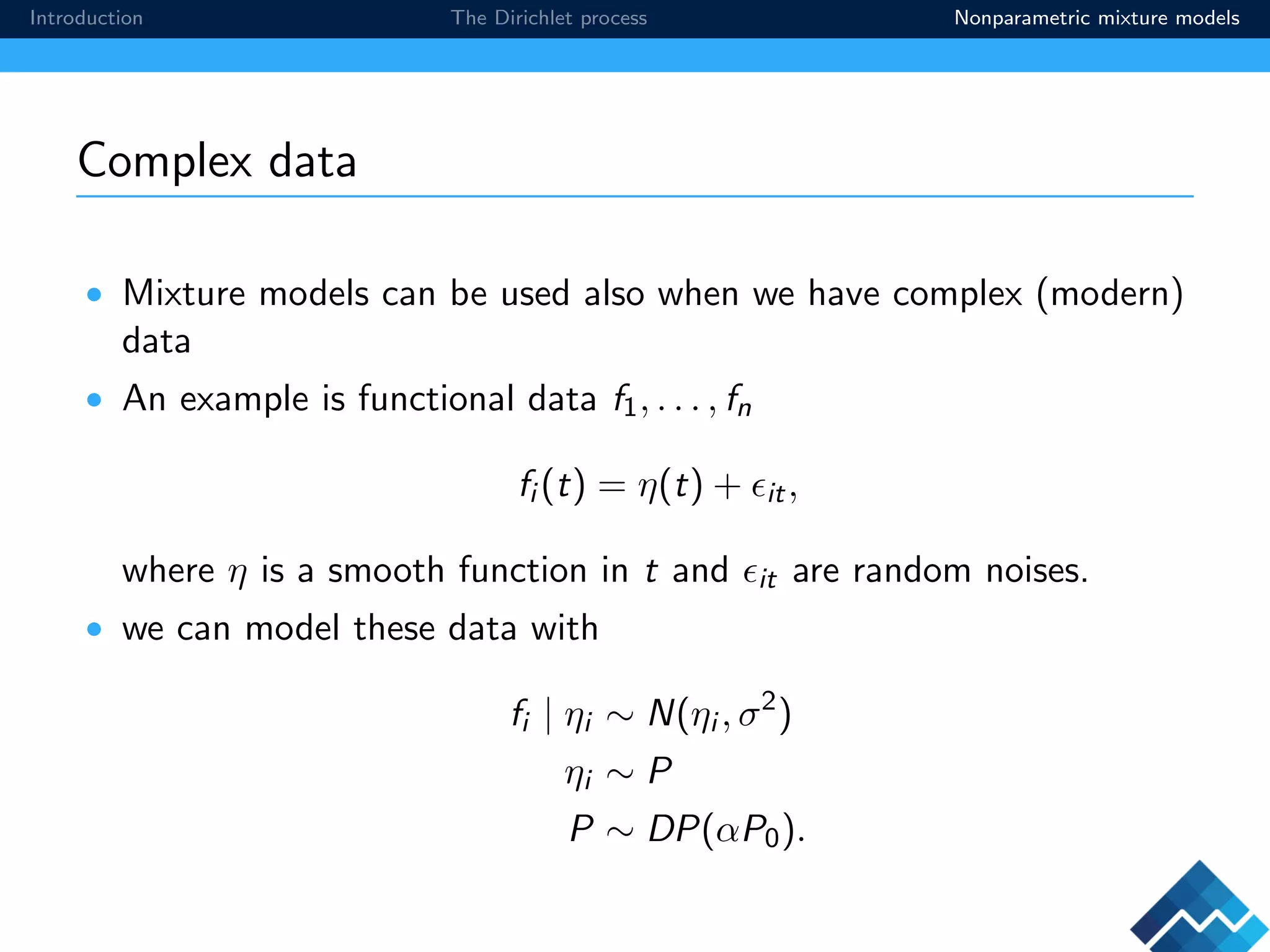

The document introduces Bayesian nonparametrics and the Dirichlet process. Bayesian nonparametric methods fit models whose complexity adapts to the data instead of being fixed in advance; for example, the number of mixture components does not have to be chosen beforehand. The Dirichlet process is a prior distribution over the space of probability distributions. A draw from it is itself a discrete distribution with countably infinitely many atoms, so the model has an unbounded number of parameters, although only finitely many of them are used to explain any finite dataset. Nonparametric mixture models built on the Dirichlet process therefore give a flexible approach to density estimation and clustering, with the number of clusters inferred from the data.
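To make "a prior on the space of all probability distributions" concrete, here is a minimal sketch that uses two standard representations of the Dirichlet process. The first is the stick-breaking construction: it draws $\beta_k \sim \mathrm{Beta}(1, \alpha)$, sets the weights to $\pi_k = \beta_k \prod_{j<k} (1 - \beta_j)$, and pairs each weight with an atom drawn from a base distribution $H$. The second is the Chinese restaurant process, which gives the clustering induced on the data points once the random distribution is integrated out. The function names, the Gaussian base distribution in the usage note, and the finite truncation level are illustrative assumptions and are not taken from the slides. Truncating the stick at a finite number of atoms is only an approximation of a true DP draw.

```python
import random


def stick_breaking_weights(alpha, truncation, rng):
    """Truncated stick-breaking (GEM) weights for a DP with concentration alpha.

    beta_k ~ Beta(1, alpha) and pi_k = beta_k * prod_{j<k} (1 - beta_j).
    Hypothetical helper: the last weight absorbs the leftover stick so the
    truncated weights sum to 1.
    """
    weights = []
    remaining = 1.0  # length of stick not yet broken off
    for _ in range(truncation - 1):
        beta = rng.betavariate(1.0, alpha)
        weights.append(beta * remaining)
        remaining *= 1.0 - beta
    weights.append(remaining)  # truncation: leftover mass goes to the final atom
    return weights


def sample_dp(alpha, base_sampler, truncation, rng):
    """Approximate draw G ~ DP(alpha, H) as a list of (atom, weight) pairs.

    base_sampler(rng) draws one atom from the base distribution H (assumed).
    """
    weights = stick_breaking_weights(alpha, truncation, rng)
    atoms = [base_sampler(rng) for _ in weights]
    return list(zip(atoms, weights))


def chinese_restaurant_process(n, alpha, rng):
    """Cluster assignments for n points under a CRP(alpha) prior.

    Point i joins existing cluster k with probability counts[k] / (i + alpha)
    and starts a new cluster with probability alpha / (i + alpha).
    """
    assignments = []
    counts = []  # number of points currently in each cluster
    for i in range(n):
        r = rng.uniform(0.0, i + alpha)
        cumulative = 0.0
        choice = len(counts)  # default: open a new cluster
        for k, c in enumerate(counts):
            cumulative += c
            if r < cumulative:
                choice = k
                break
        if choice == len(counts):
            counts.append(1)
        else:
            counts[choice] += 1
        assignments.append(choice)
    return assignments, counts
```

As a usage example, `sample_dp(2.0, lambda r: r.gauss(0.0, 1.0), 50, random.Random(0))` returns 50 weighted atoms whose weights sum to 1 up to floating-point error. `chinese_restaurant_process(100, 1.0, random.Random(0))` returns a partition of 100 points. In this partition the number of occupied clusters is not fixed in advance and tends to grow slowly as `n` increases. A larger `alpha` spreads mass over more atoms and produces more clusters.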