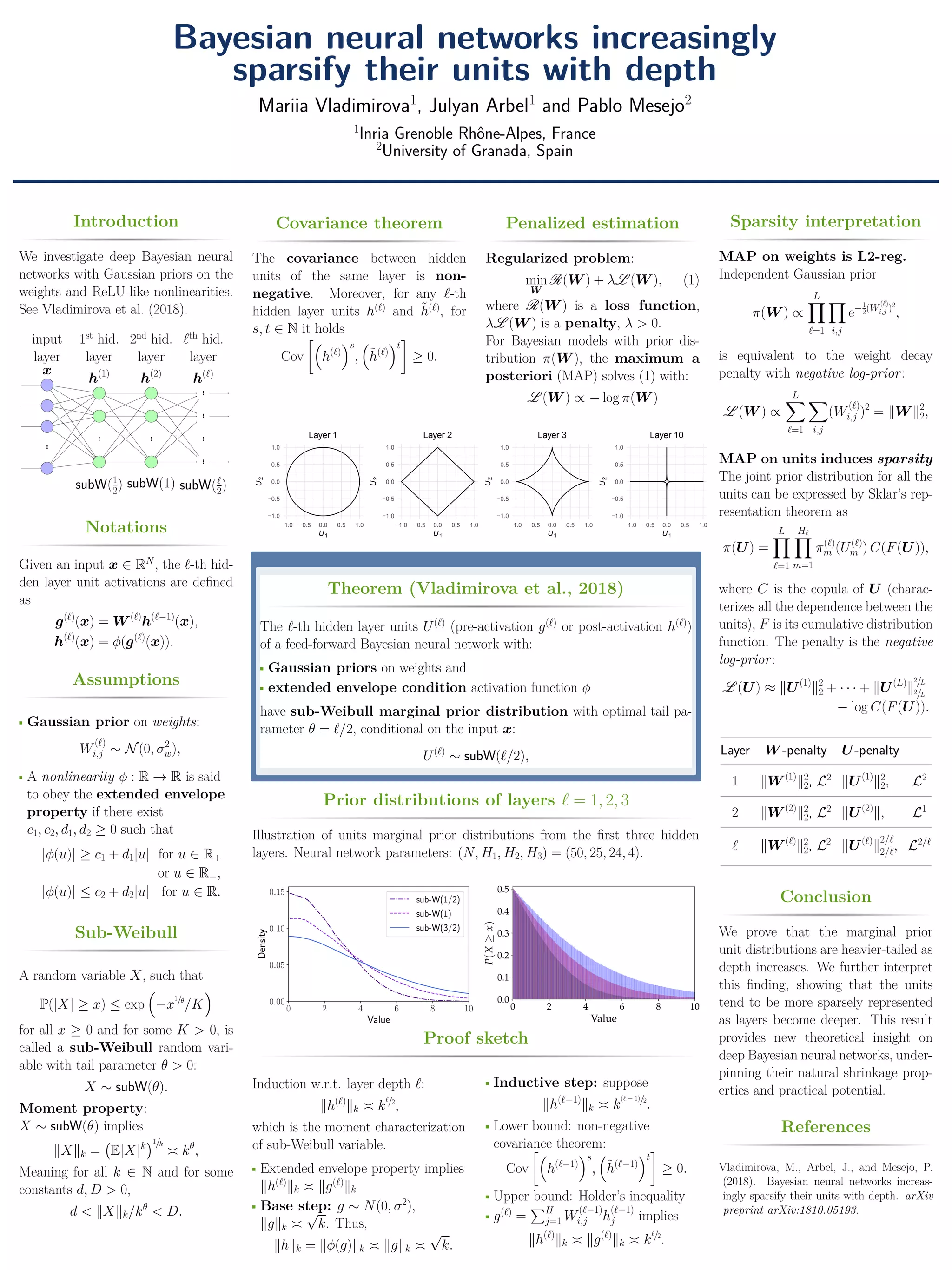

This document analyzes deep Bayesian neural networks with Gaussian priors on the weights and ReLU-like activation functions. It proves that the marginal prior distributions of the hidden units become heavier-tailed with increasing layer depth: the units are sub-Weibull, with an optimal tail parameter equal to the layer depth divided by 2, so first-layer units are sub-Gaussian and second-layer units are sub-exponential. This suggests that units in deeper layers are more sparsely represented under maximum a posteriori estimation, which helps explain the natural shrinkage properties of these networks.
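As a rough numerical illustration of the claim that tails get heavier with depth, the following Python sketch samples hidden-unit pre-activations from the prior of a small fully connected ReLU network and compares their excess kurtosis layer by layer. Everything specific here is an assumption made for the example, not part of the analysis itself: the function names `sample_prior_units` and `excess_kurtosis`, the width, the all-ones input, and the weight variance of `2 / width`. Excess kurtosis is only a convenient proxy for tail heaviness; it does not estimate the sub-Weibull tail parameter.

```python
import numpy as np


def sample_prior_units(depth=3, width=5, n_samples=100_000, seed=0):
    """Sample one pre-activation per layer from the prior of a ReLU network.

    All weights are redrawn for every sample, so each row of the result
    holds draws from the marginal prior of a unit in that layer.
    Returns an array of shape (depth, n_samples).
    """
    rng = np.random.default_rng(seed)
    # Fixed input vector (hypothetical choice for this illustration).
    h = np.ones((n_samples, width))
    units = []
    for _ in range(depth):
        # Independent Gaussian prior on every weight; the 2 / width
        # variance is an assumed scaling that keeps the unit variance
        # roughly constant across layers.
        W = rng.normal(0.0, np.sqrt(2.0 / width), size=(n_samples, width, width))
        g = np.einsum("nij,nj->ni", W, h)  # pre-activations of this layer
        units.append(g[:, 0])              # keep the first unit of the layer
        h = np.maximum(g, 0.0)             # ReLU activation
    return np.stack(units)


def excess_kurtosis(x):
    """Sample excess kurtosis (zero for a Gaussian, positive for heavier tails)."""
    z = x - x.mean()
    return np.mean(z**4) / np.mean(z**2) ** 2 - 3.0


if __name__ == "__main__":
    samples = sample_prior_units()
    for layer, row in enumerate(samples, start=1):
        print(f"layer {layer}: excess kurtosis = {excess_kurtosis(row):.2f}")
```

With a fixed input, the first-layer unit is exactly Gaussian, so its excess kurtosis should be close to zero, while deeper units are scale mixtures of Gaussians and should show increasingly positive values.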