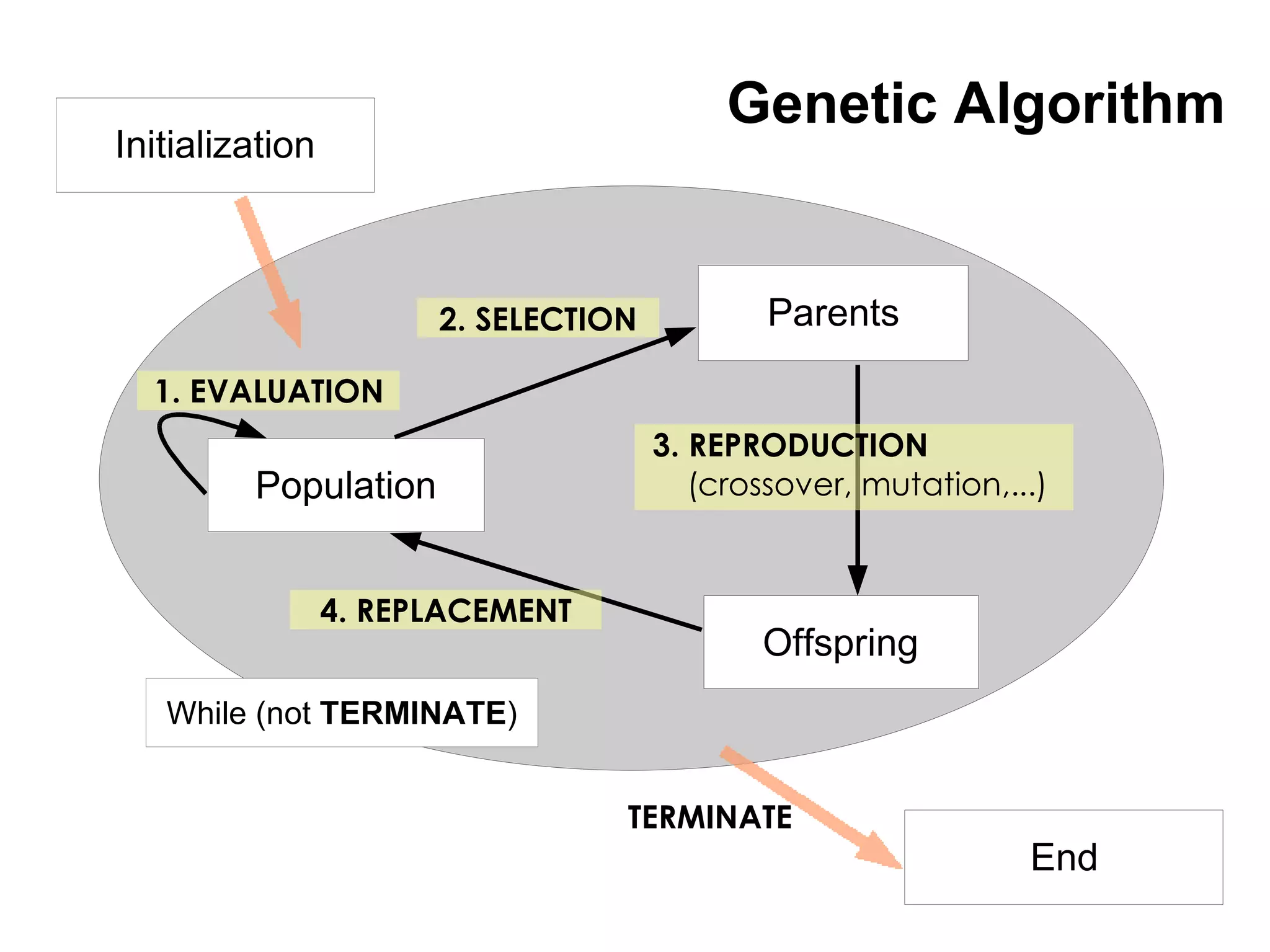

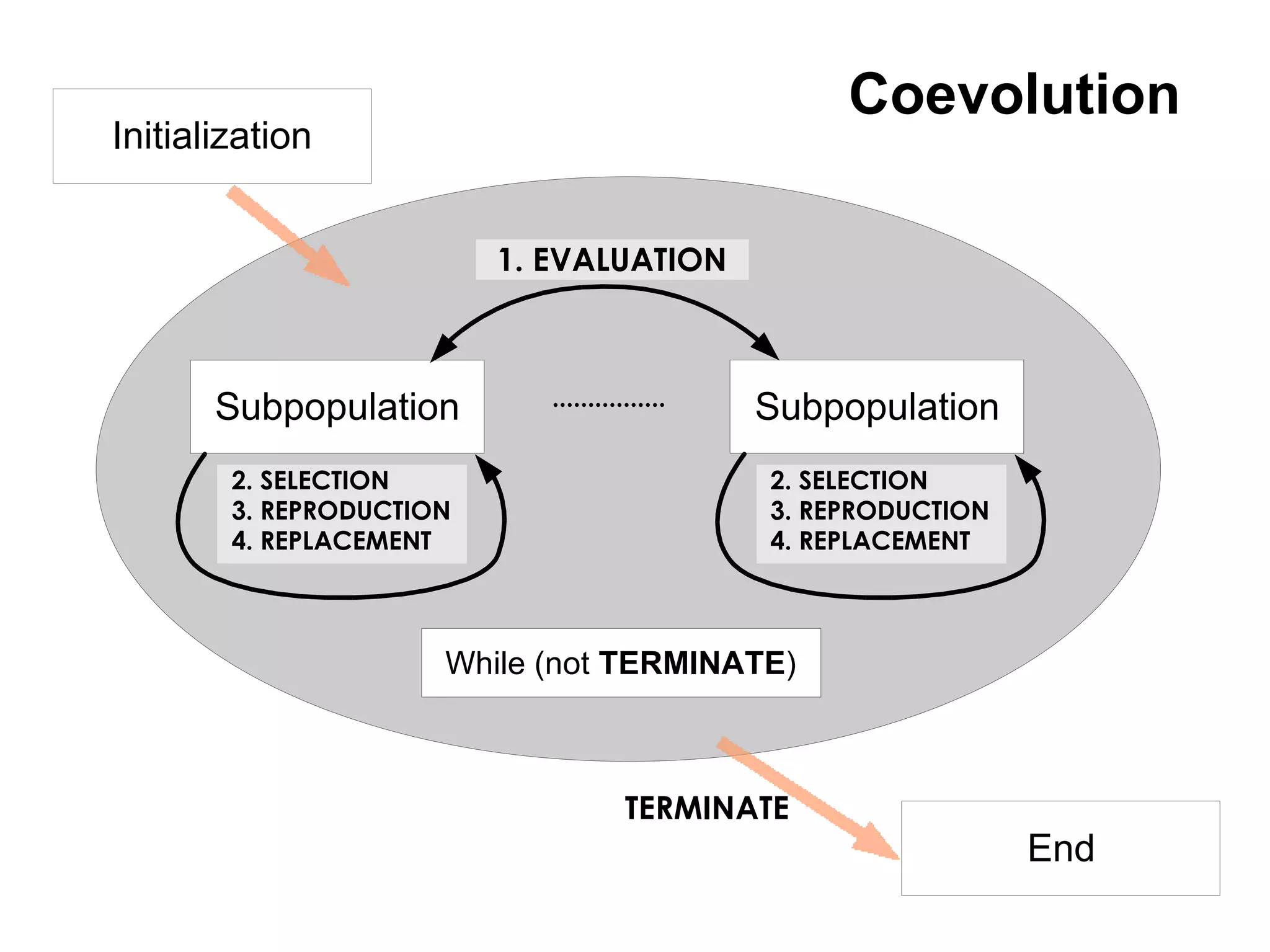

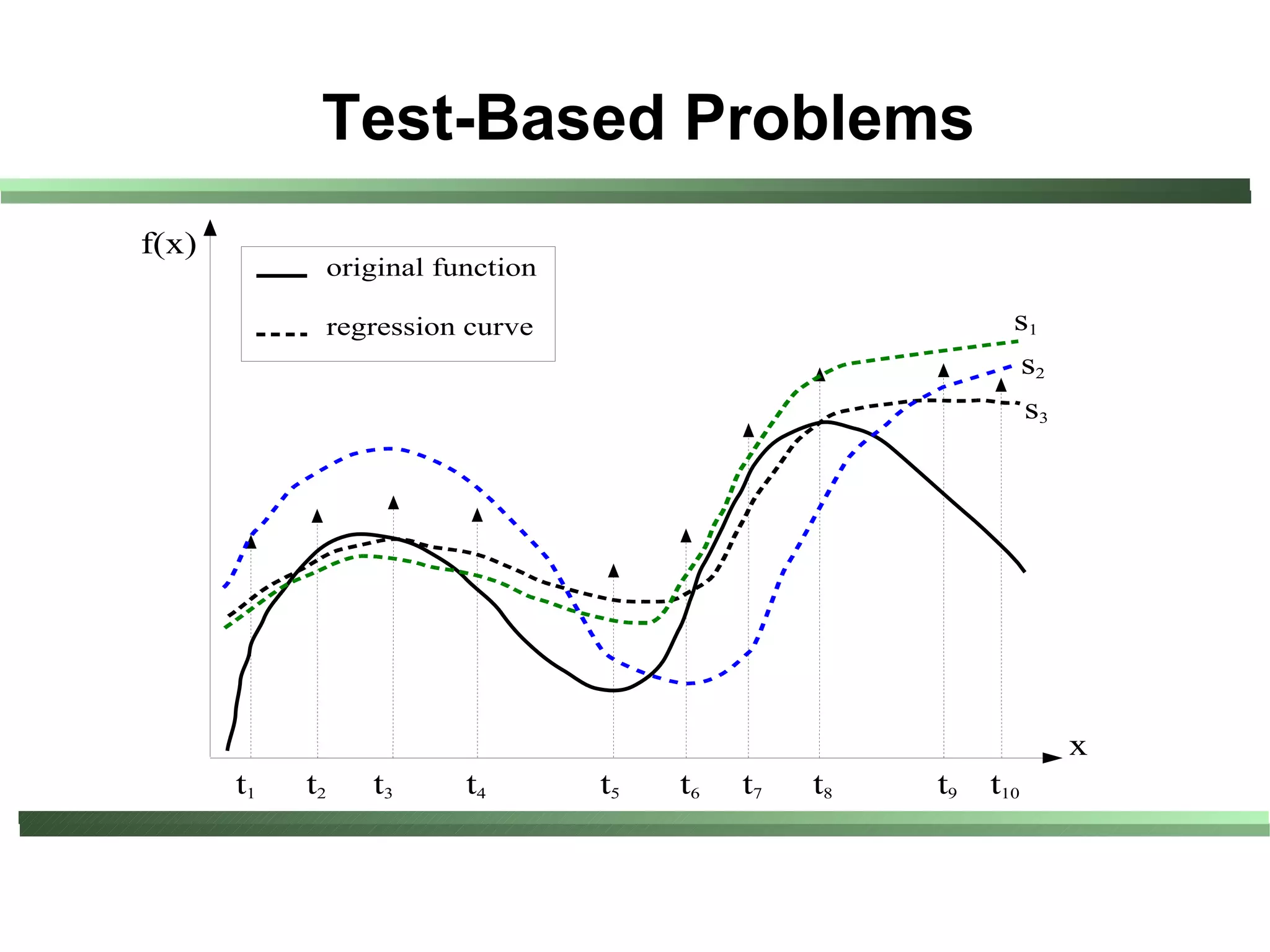

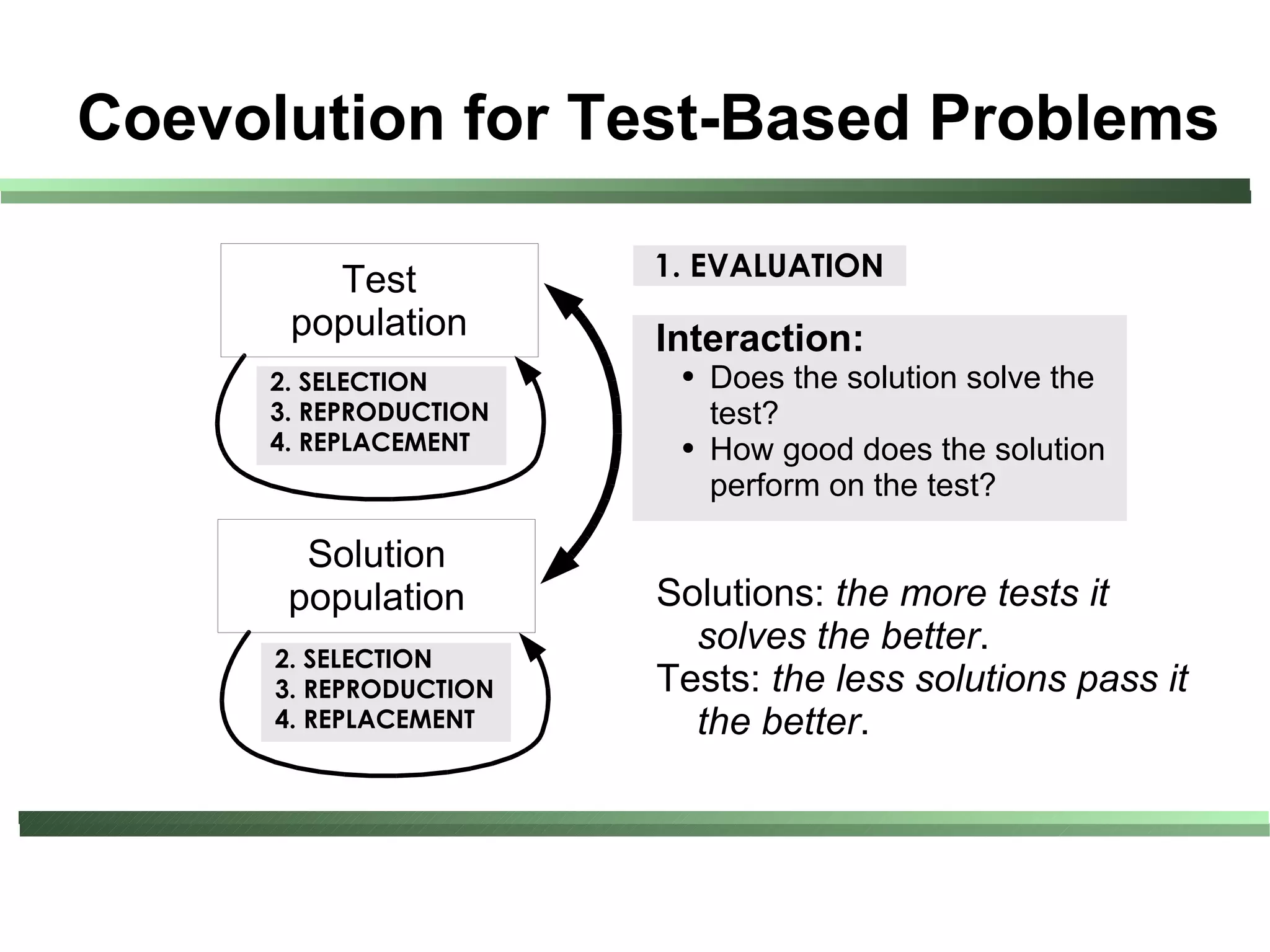

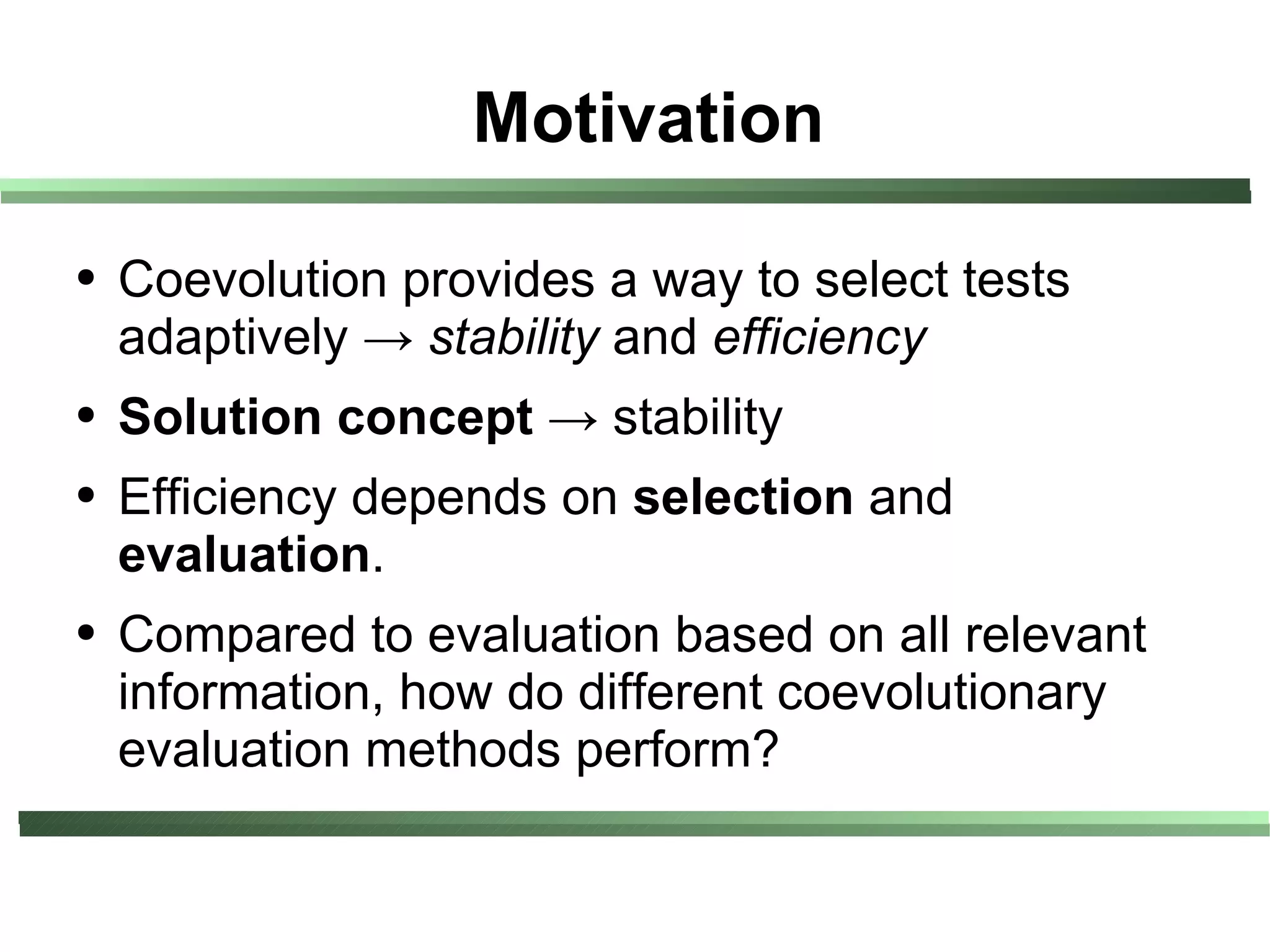

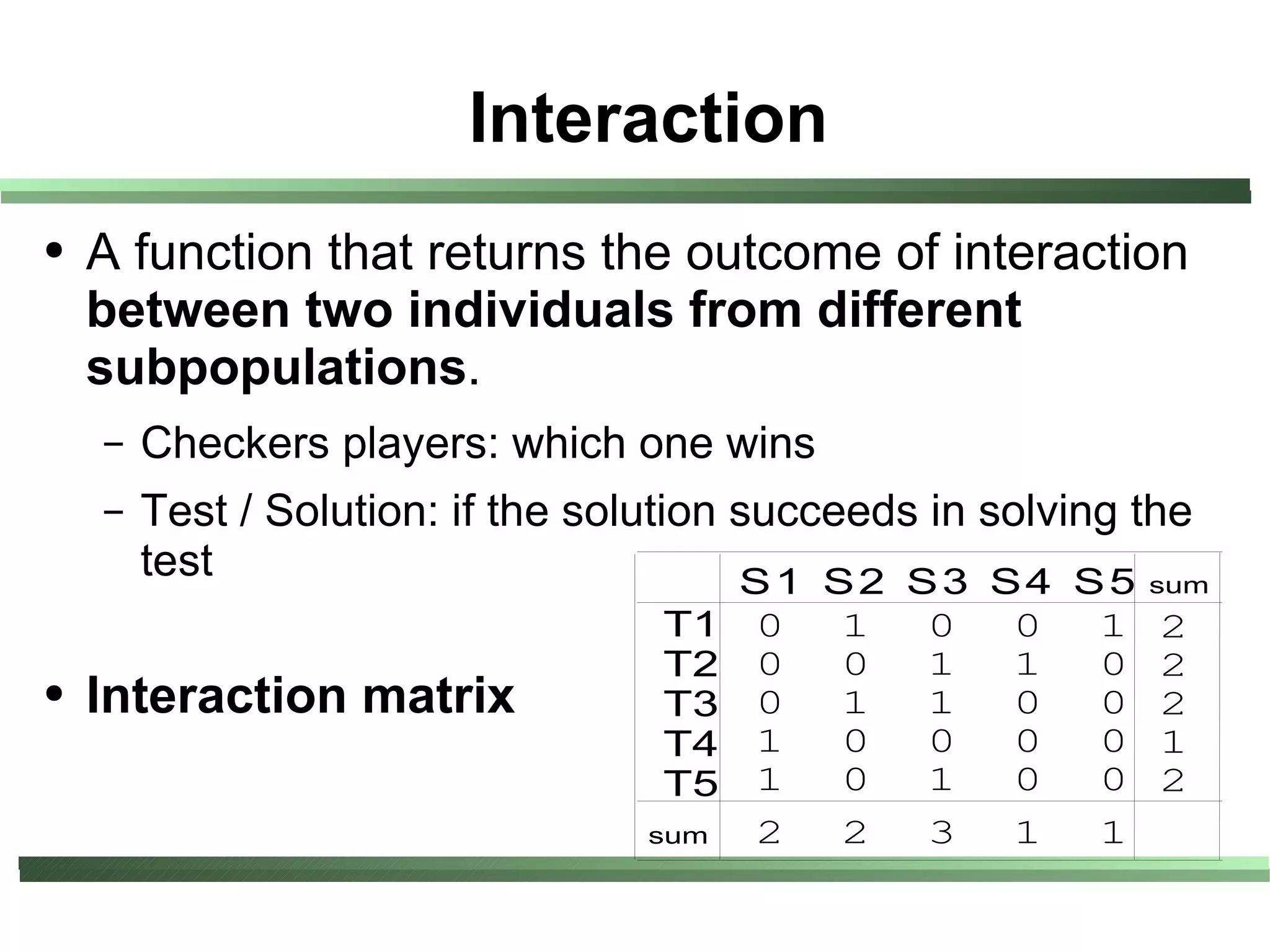

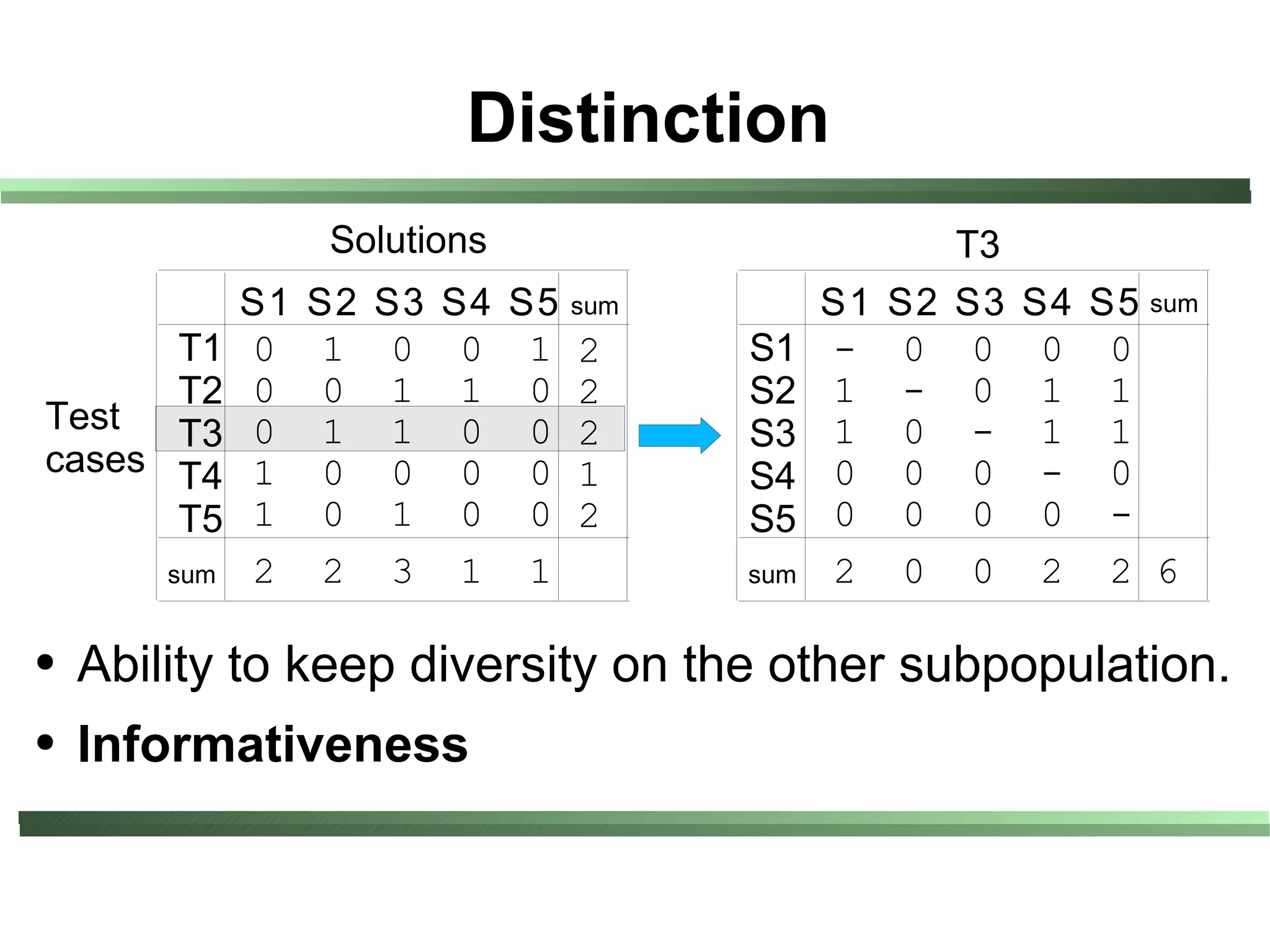

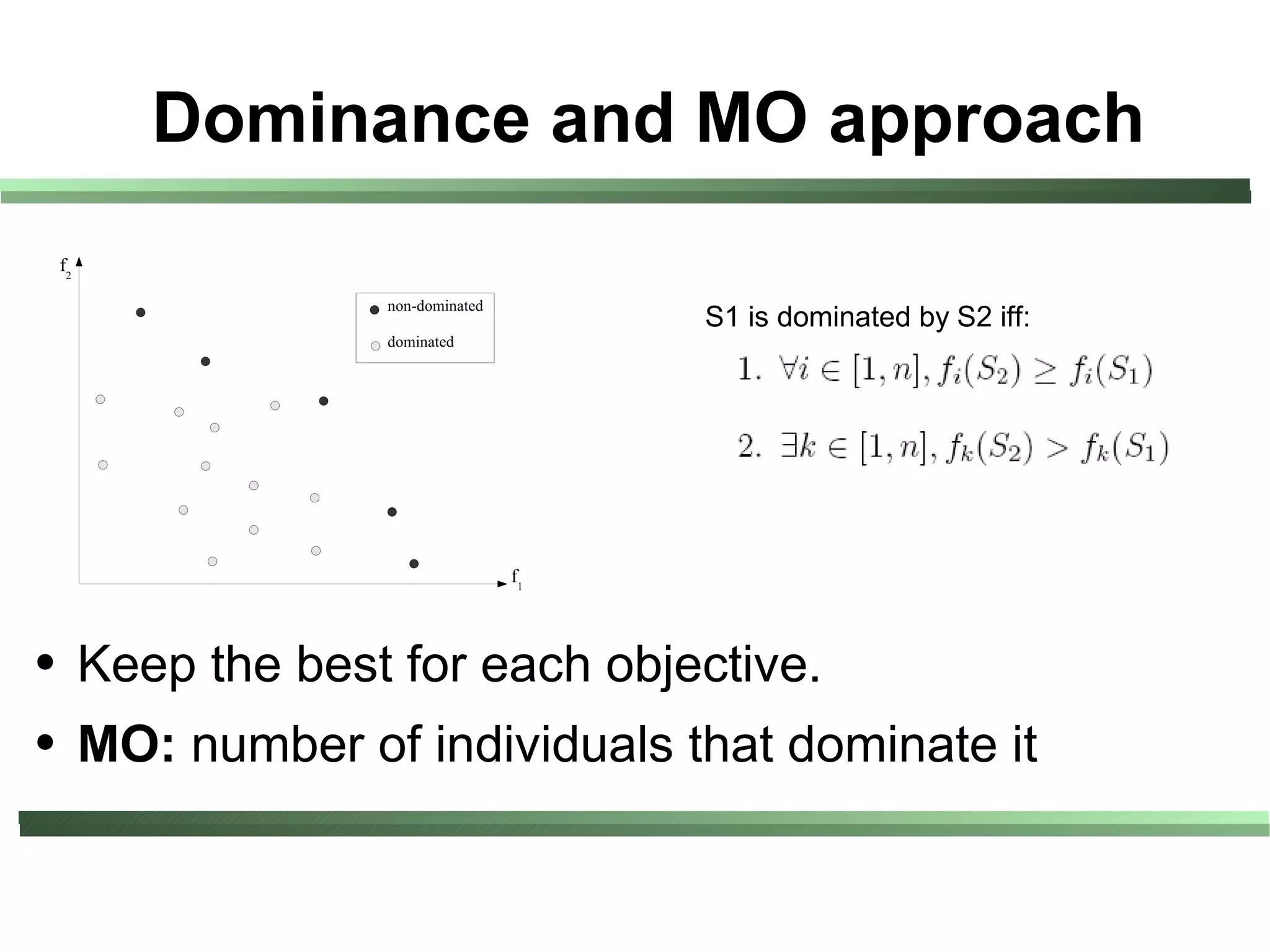

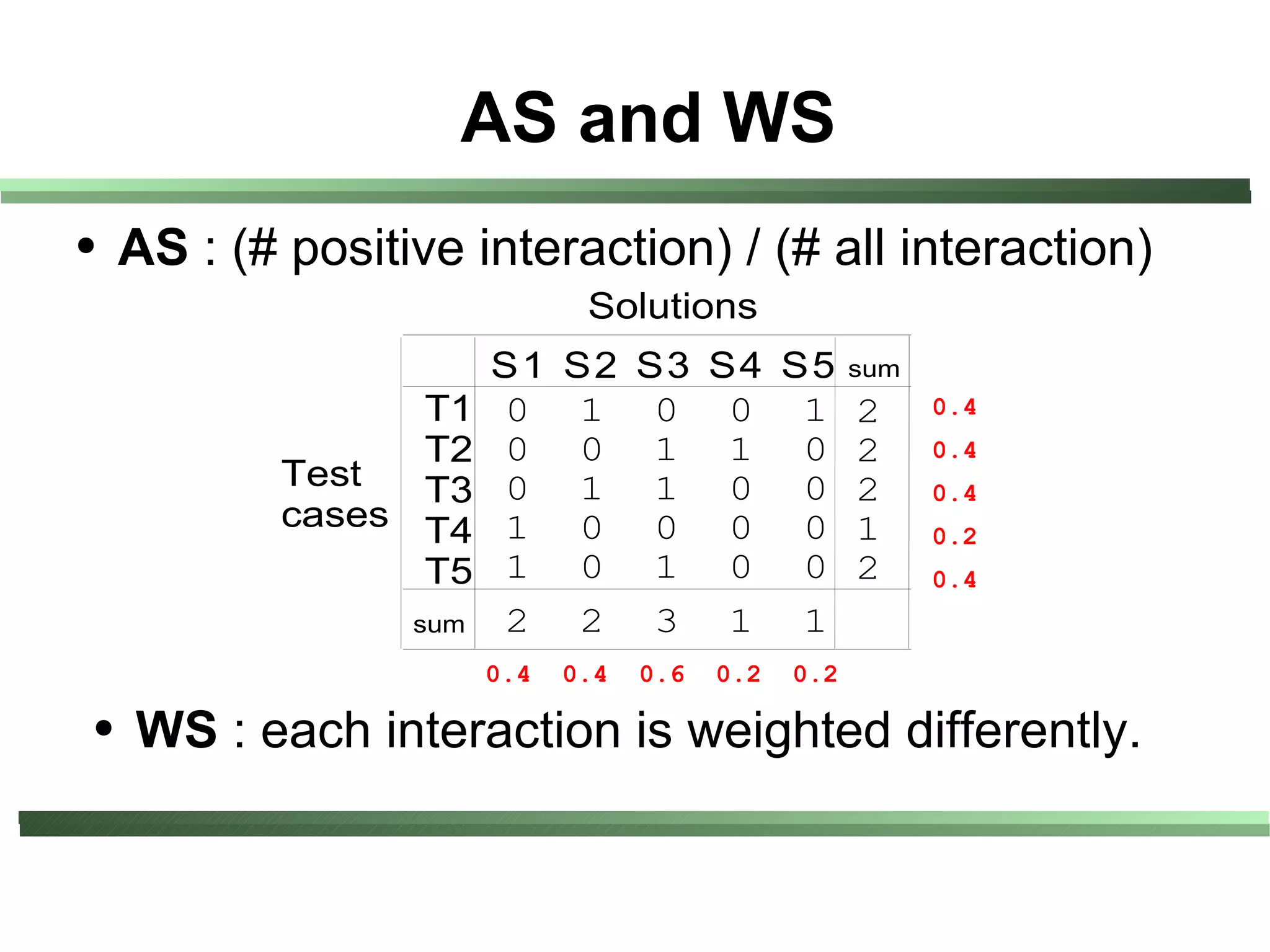

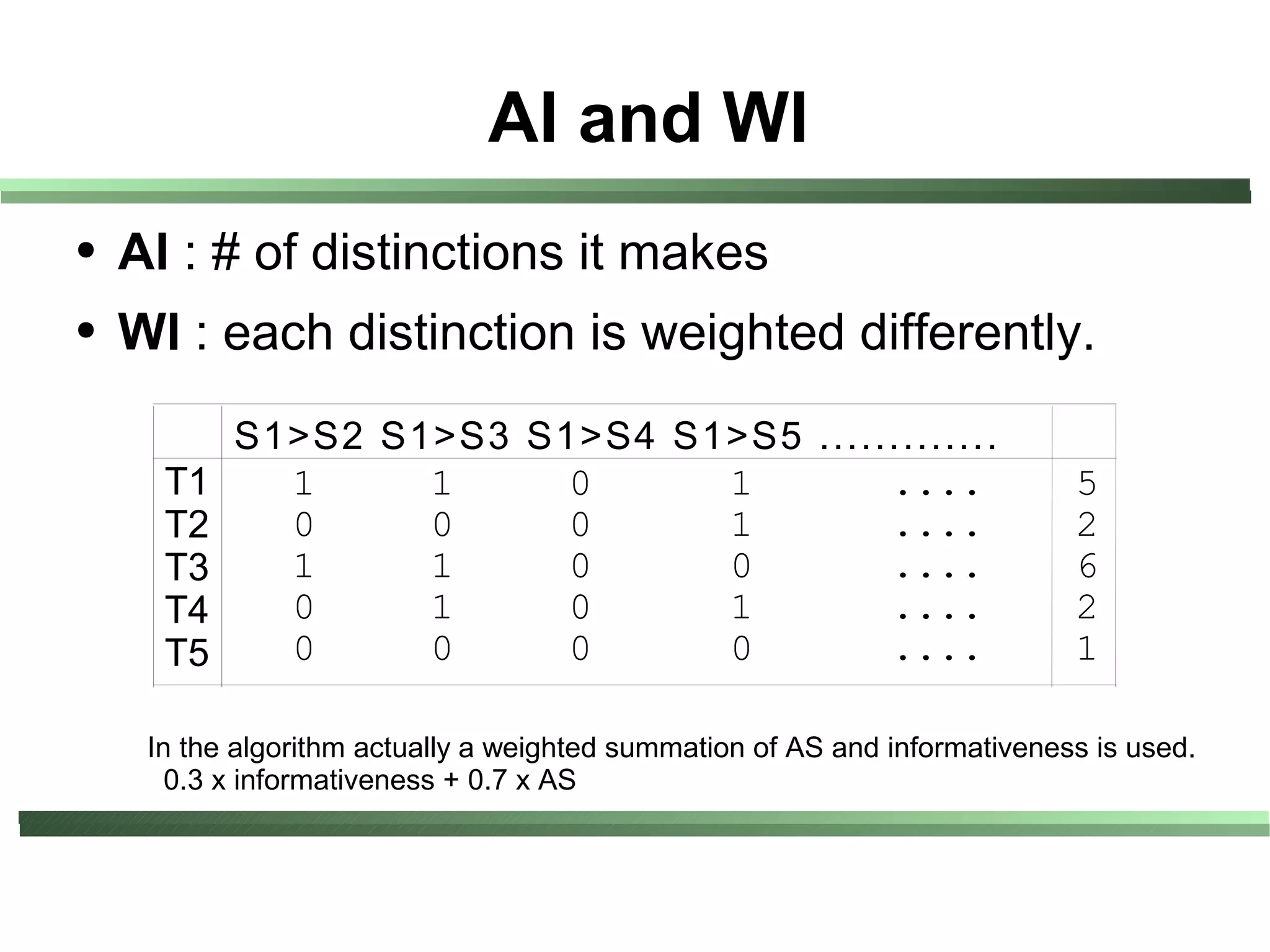

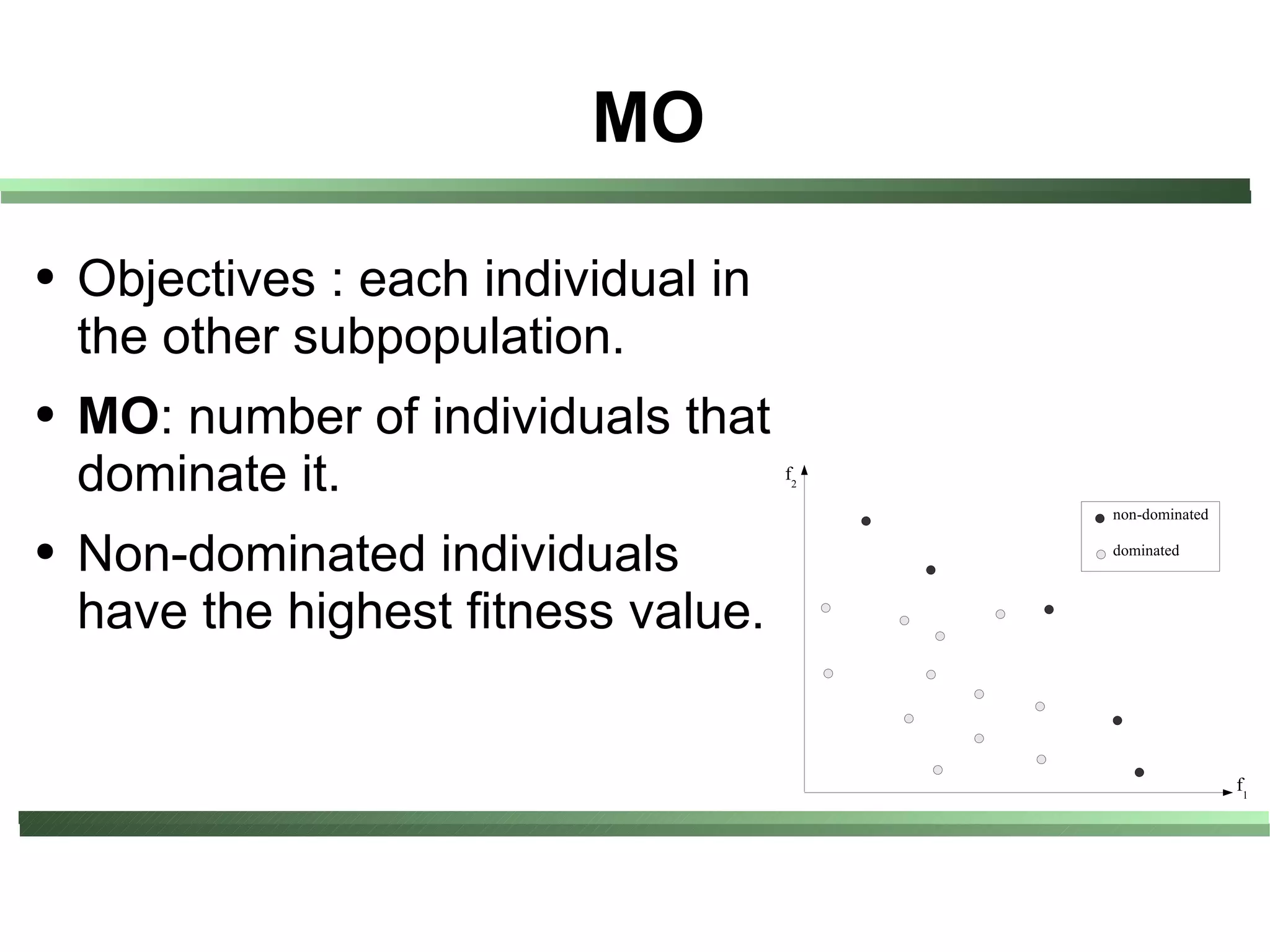

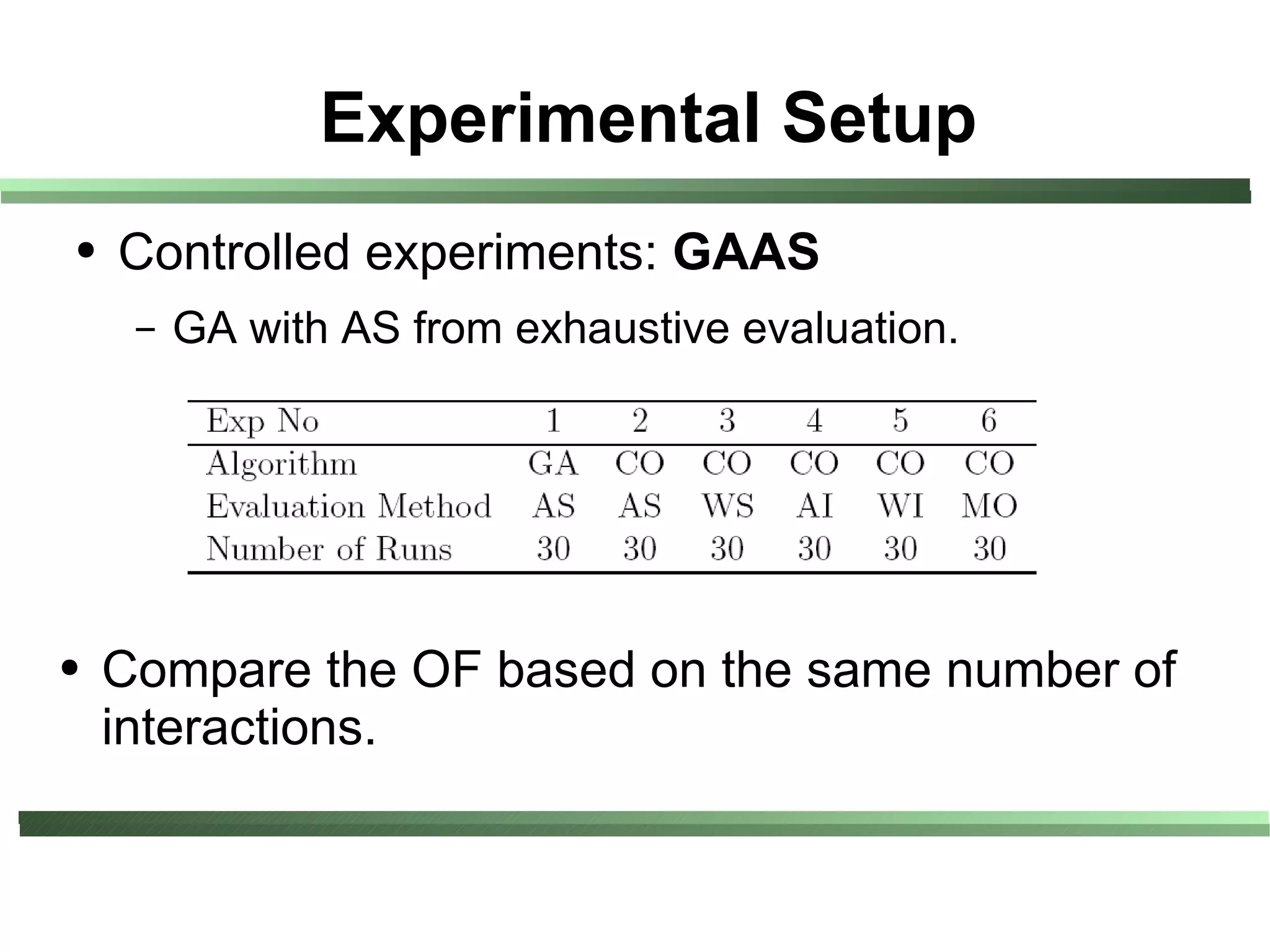

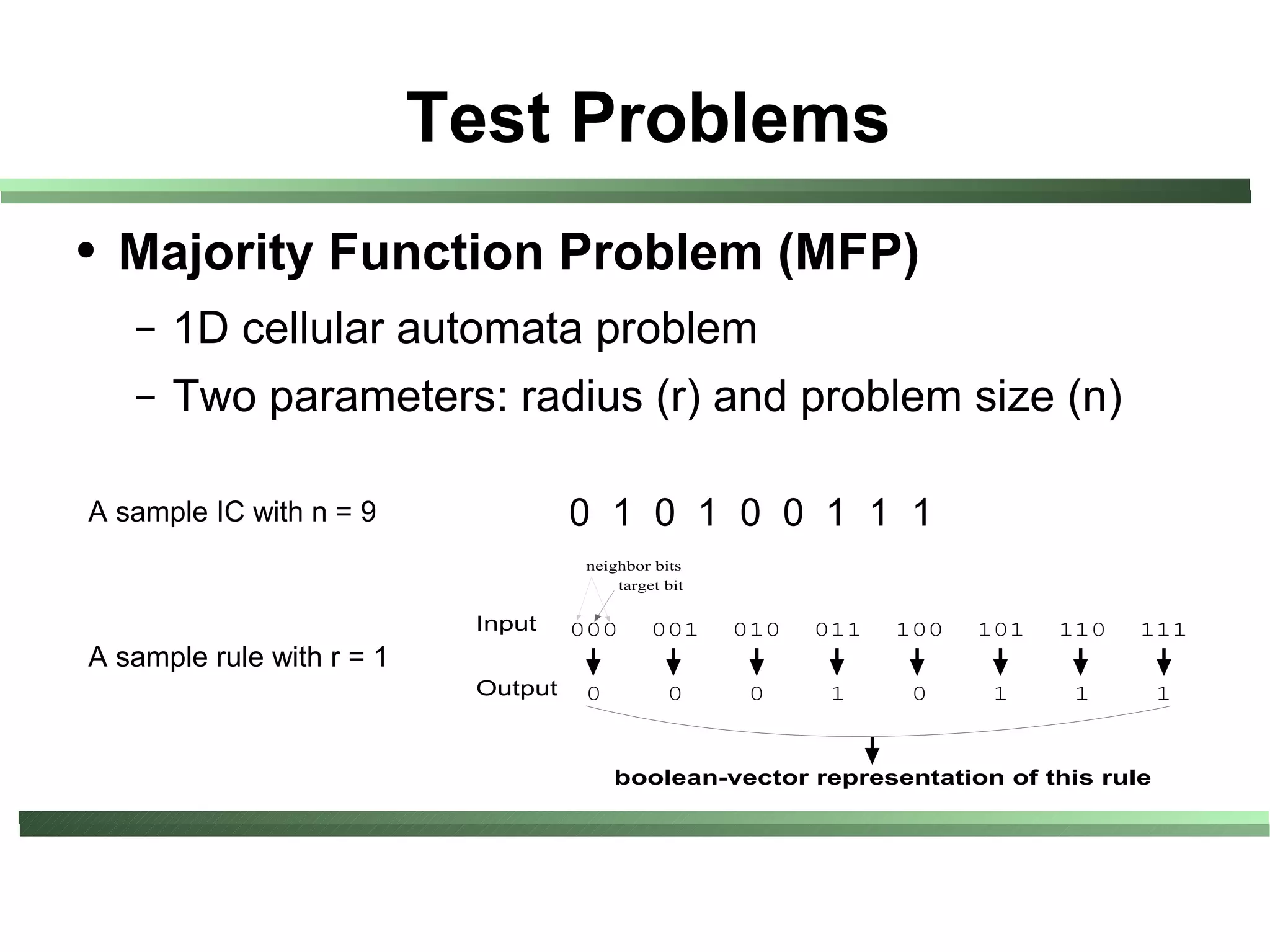

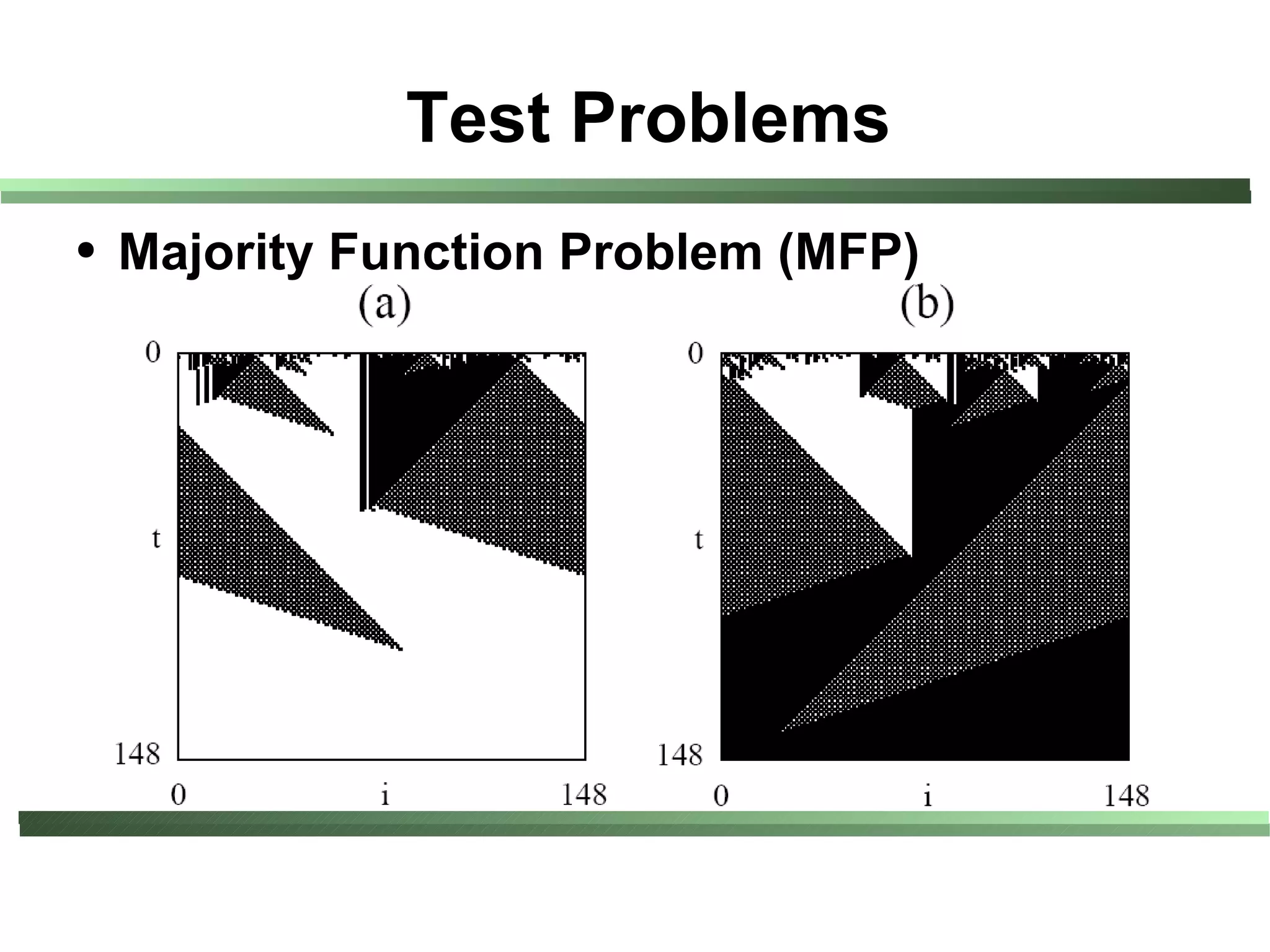

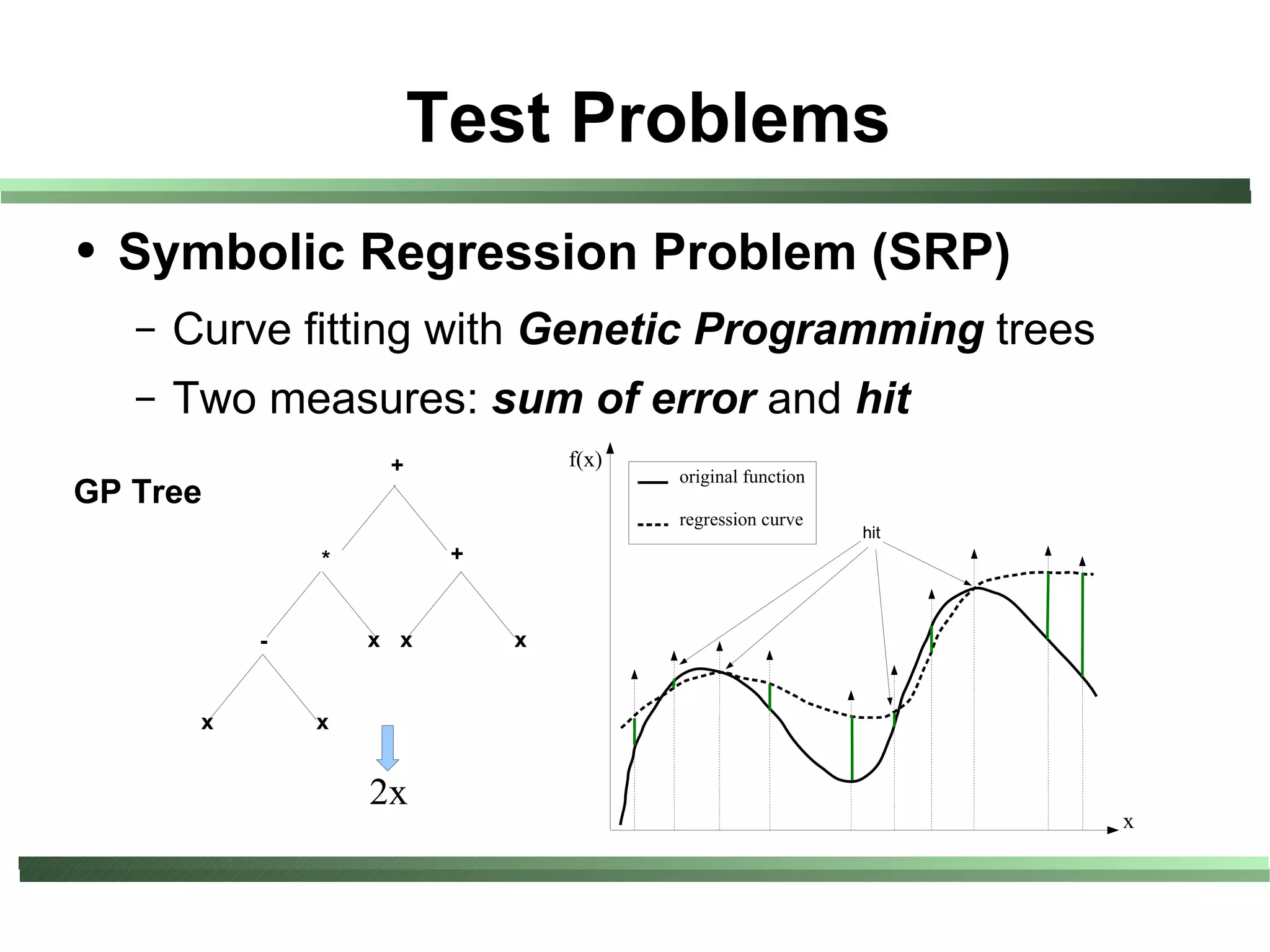

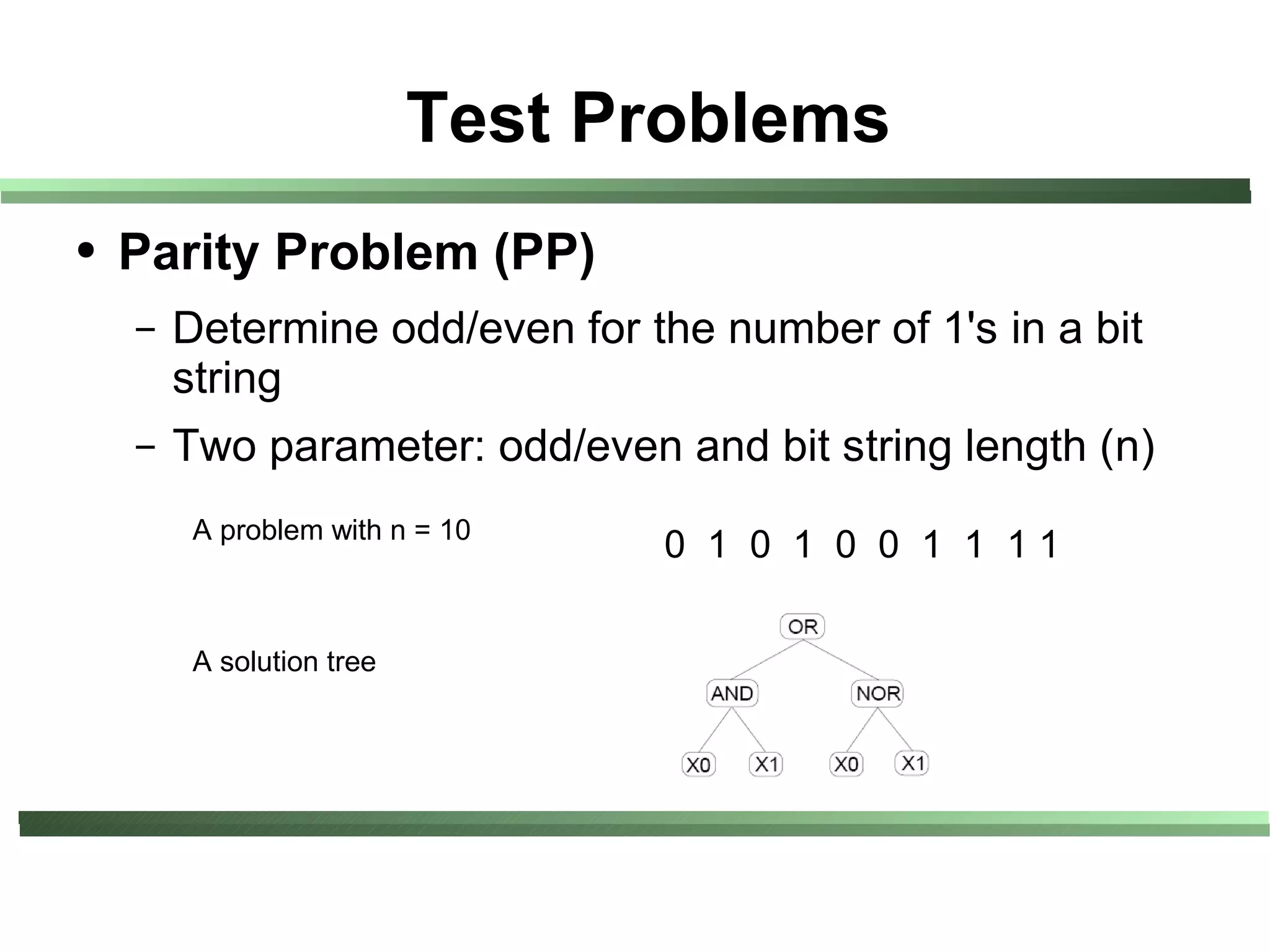

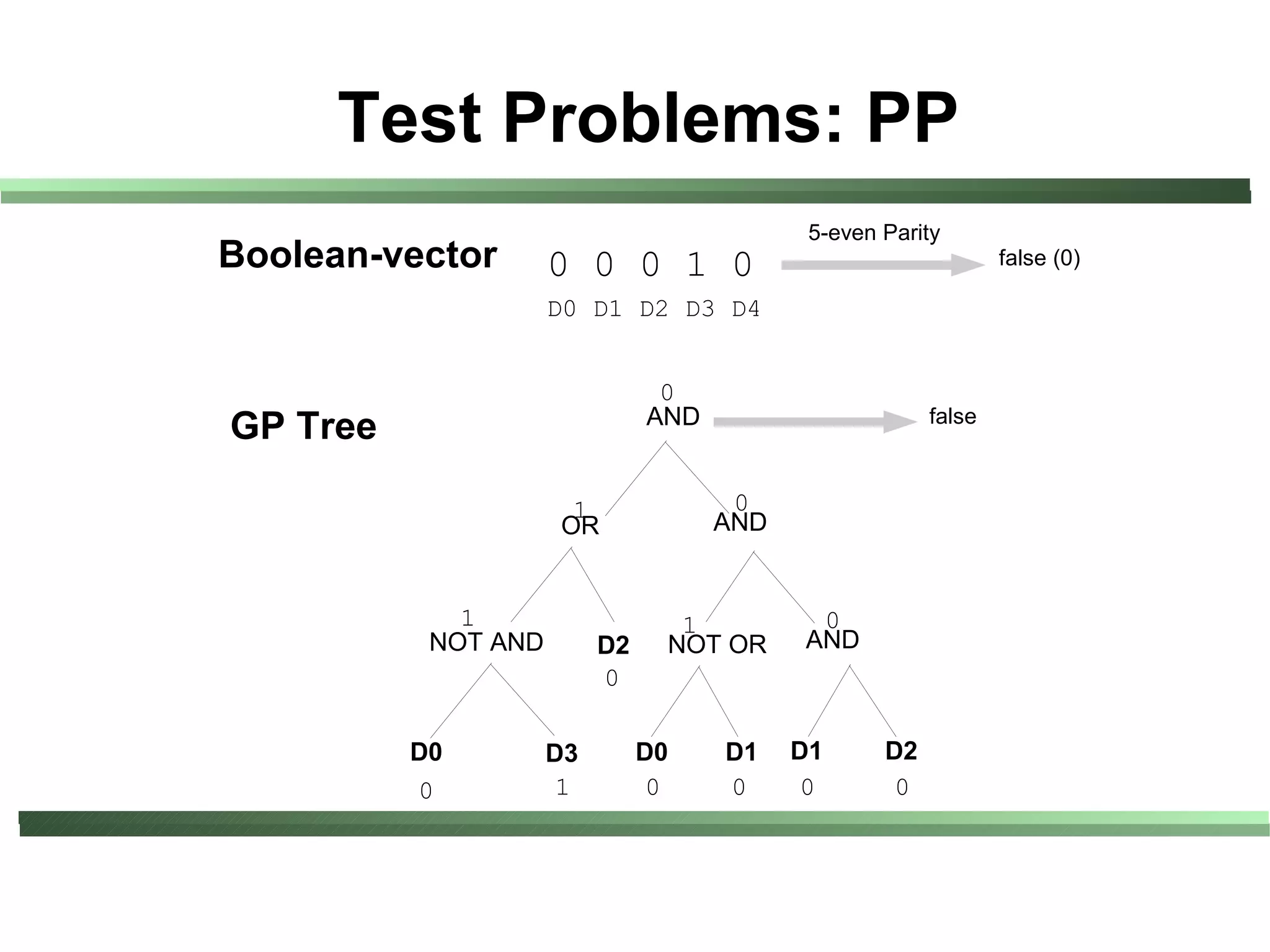

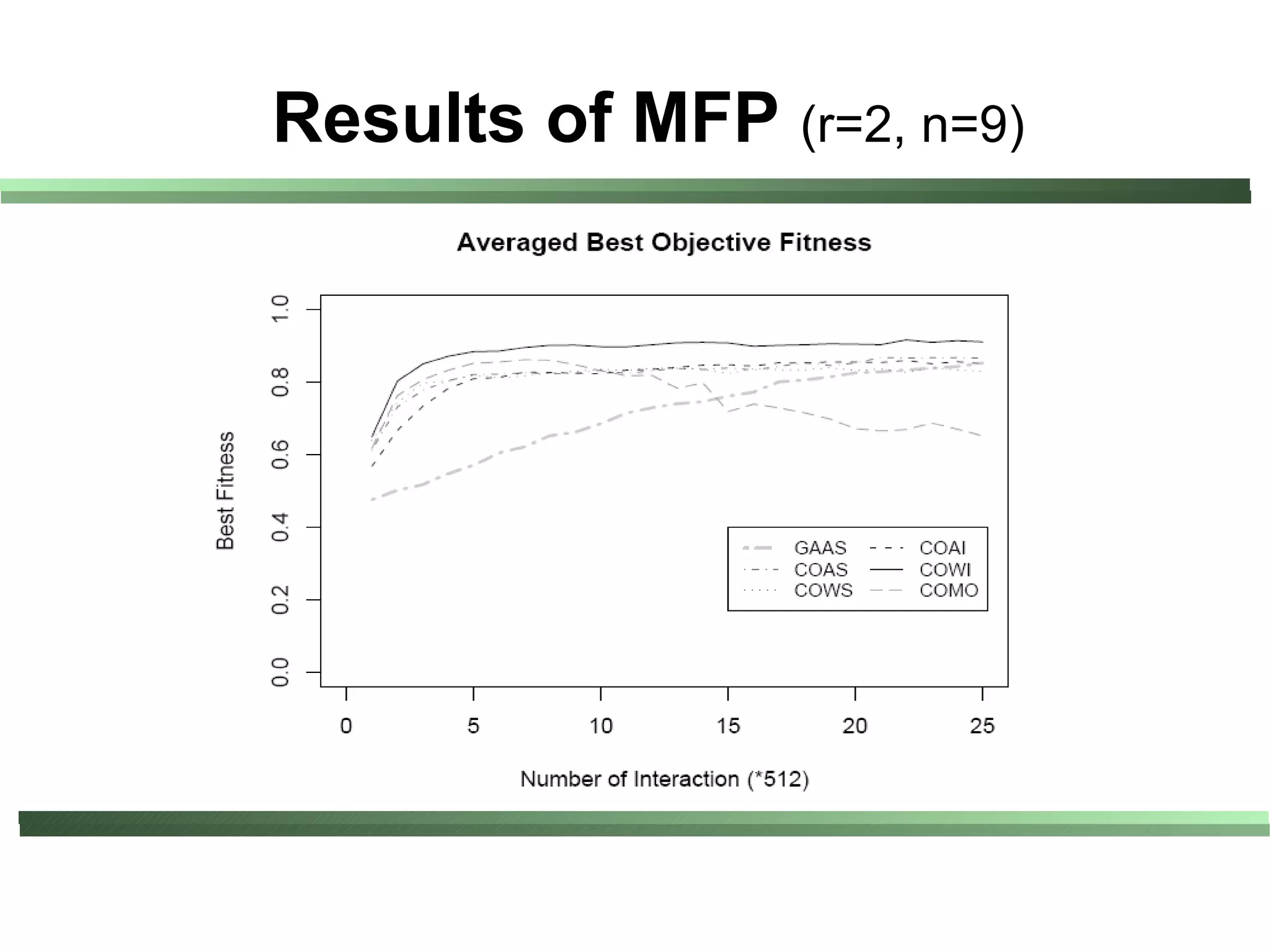

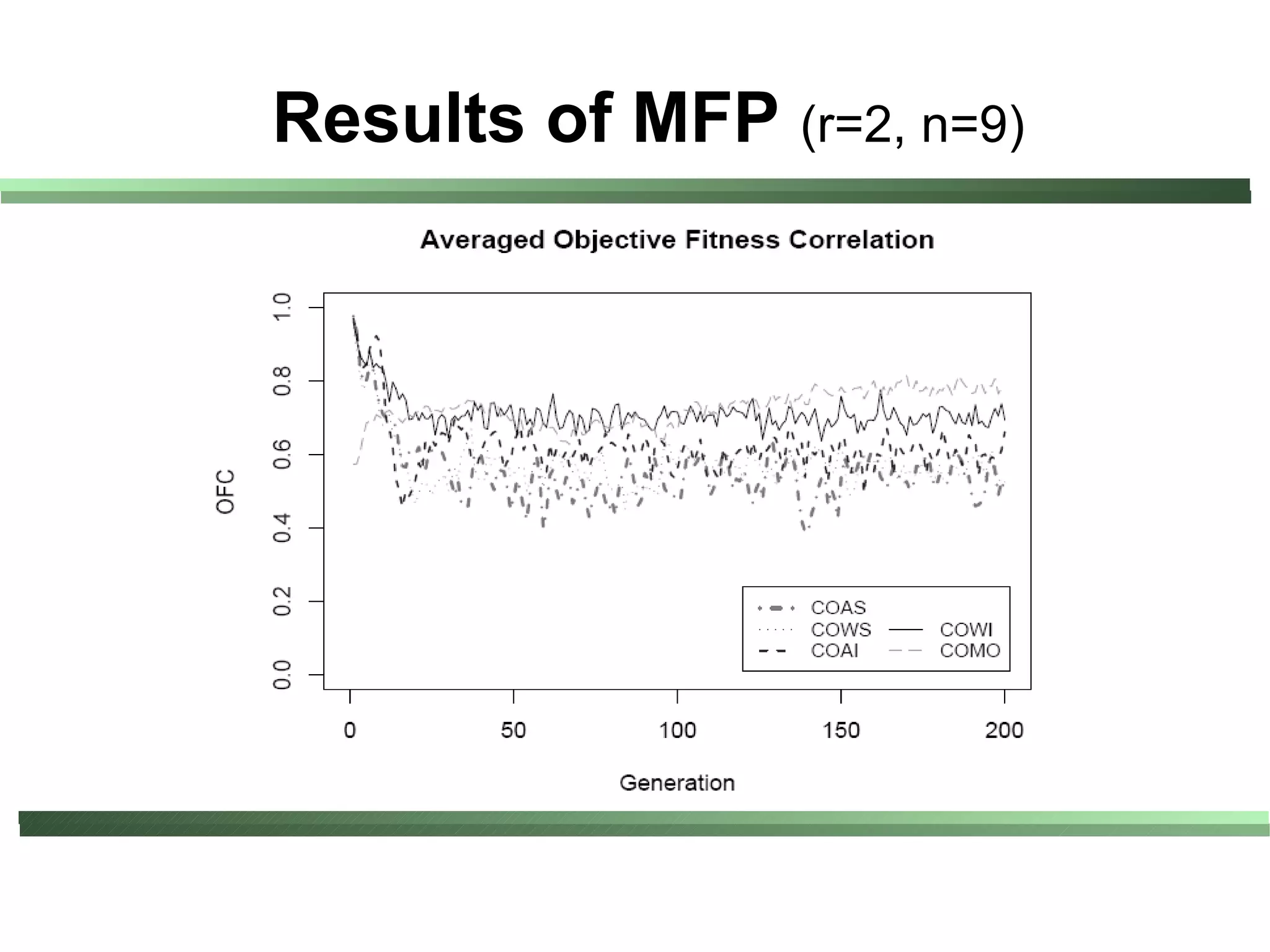

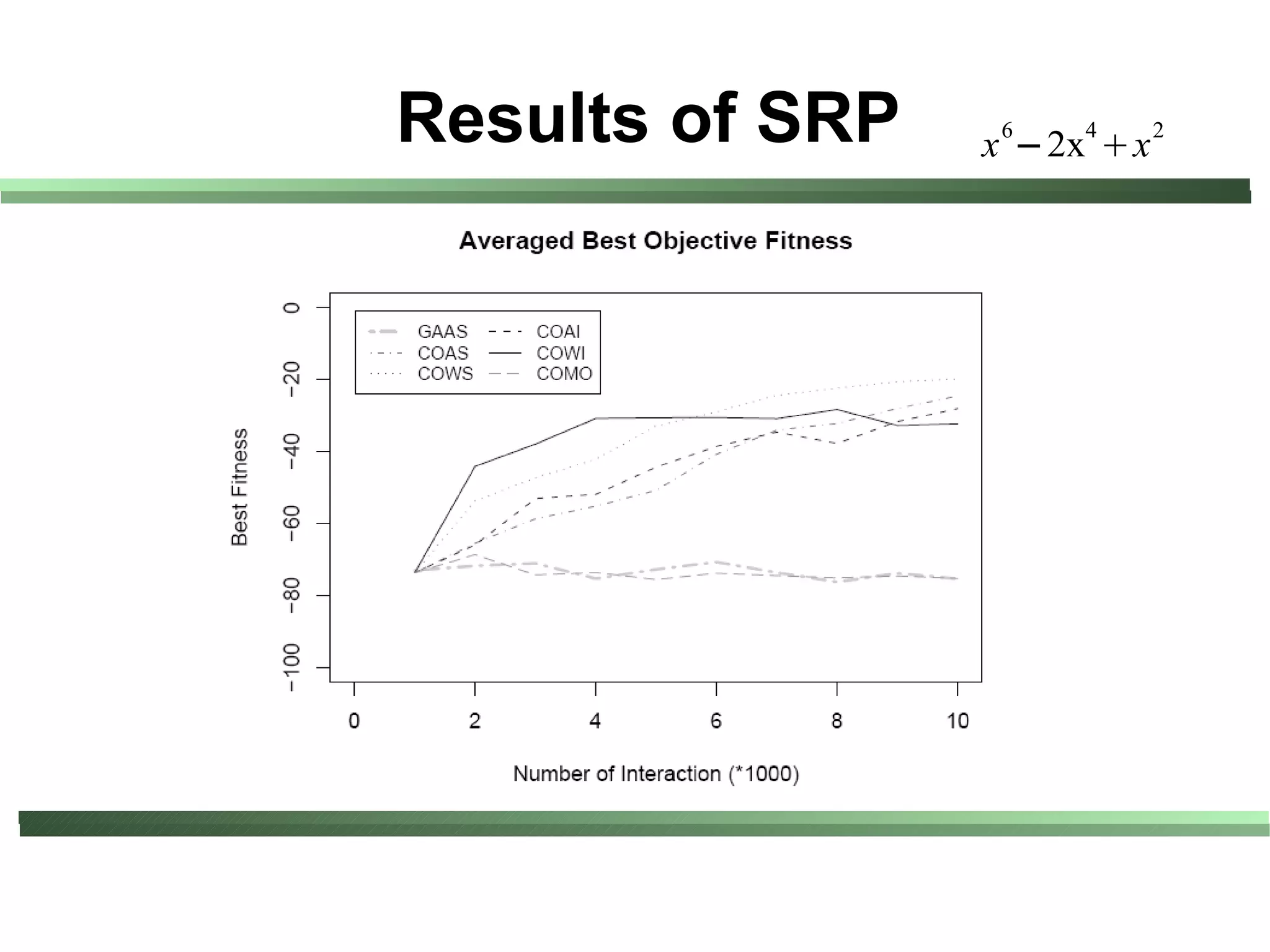

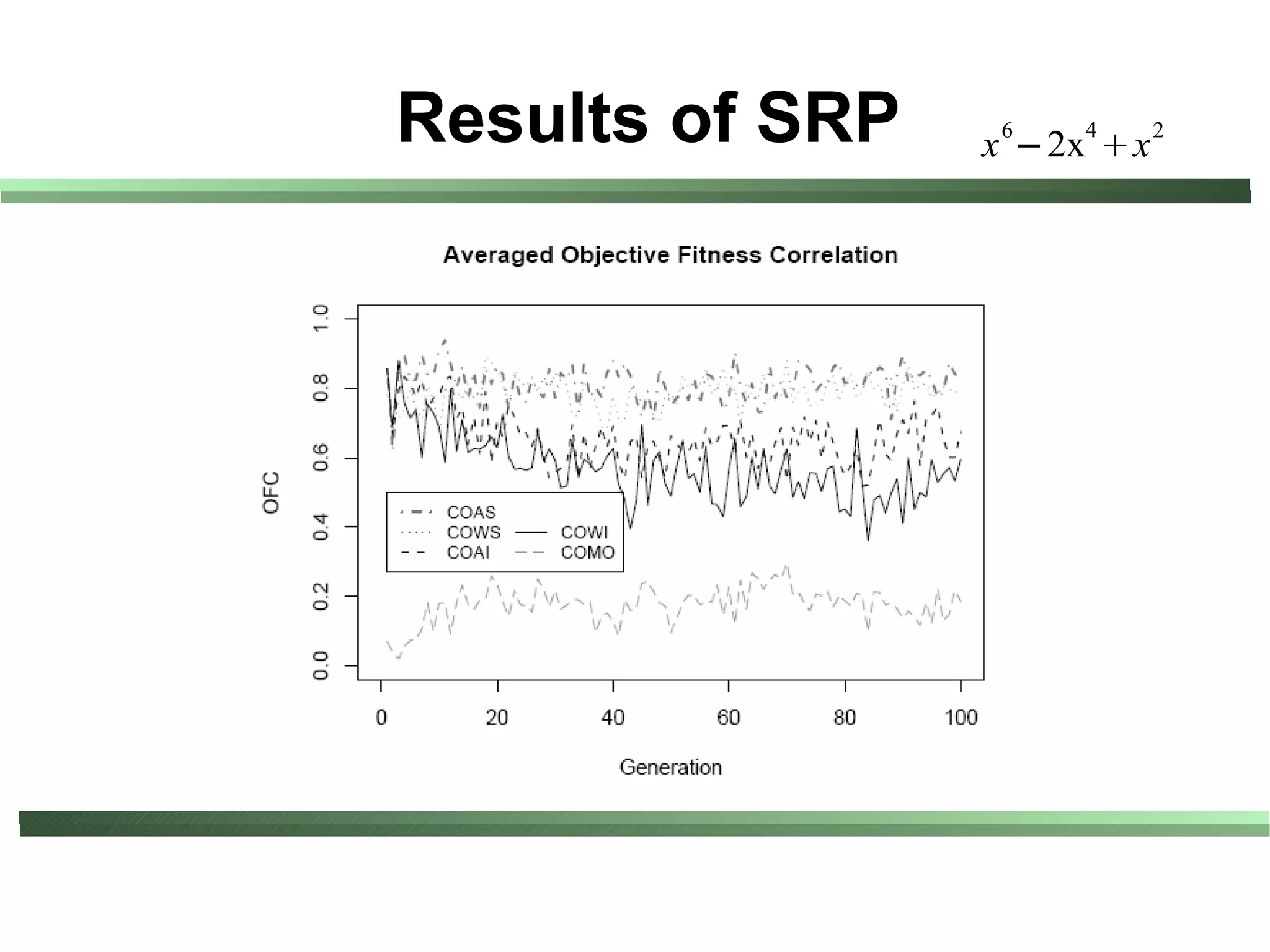

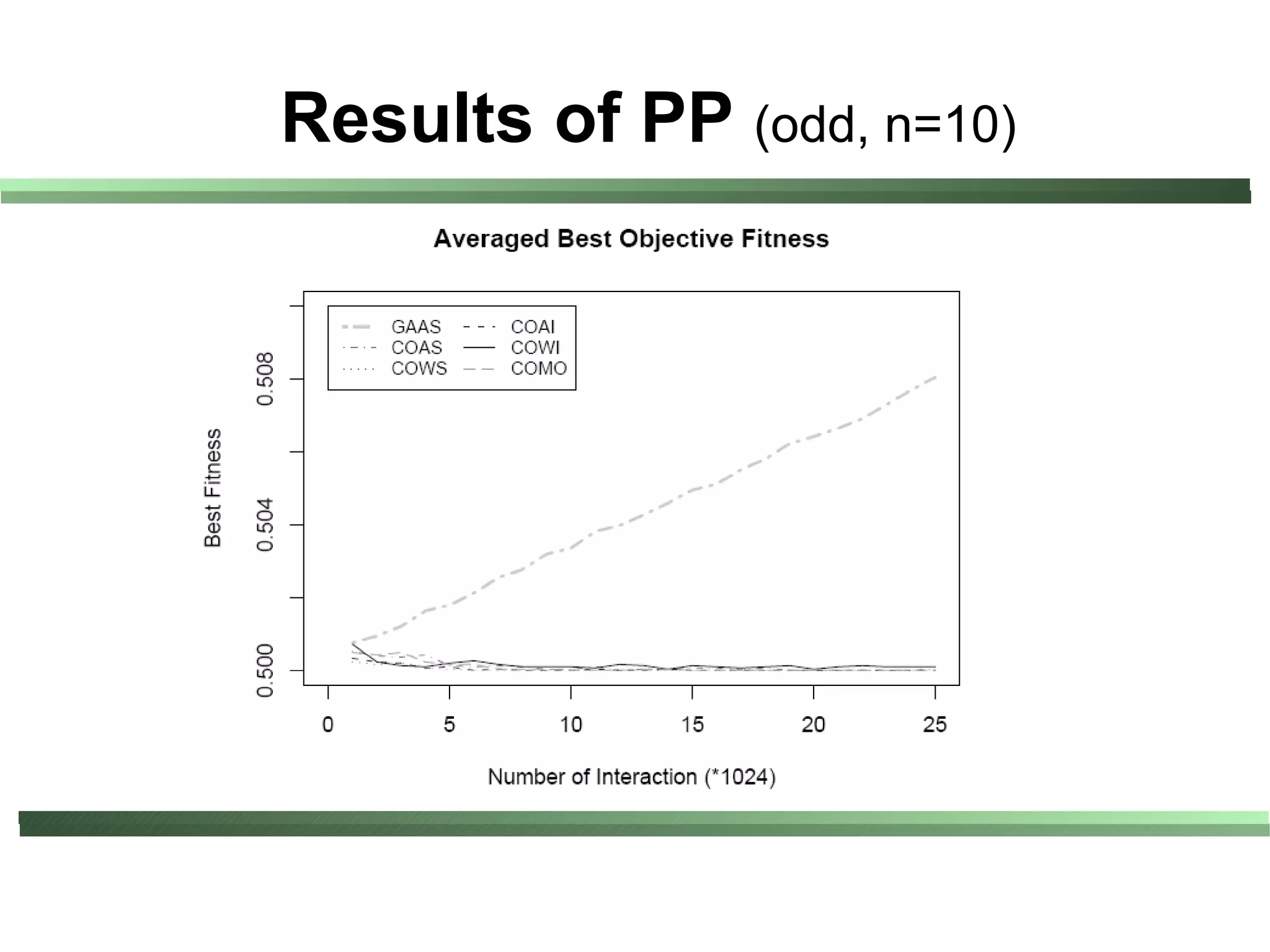

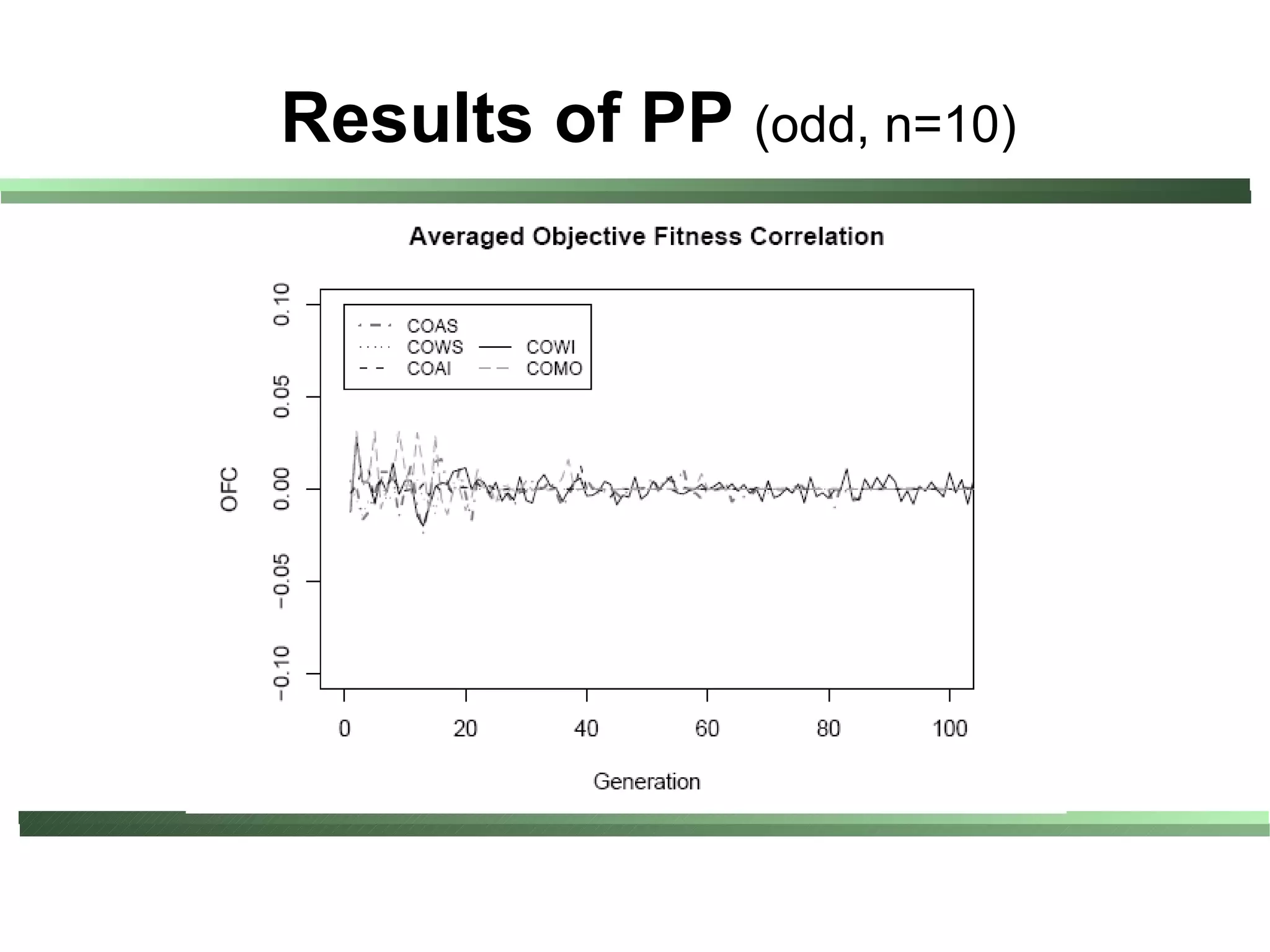

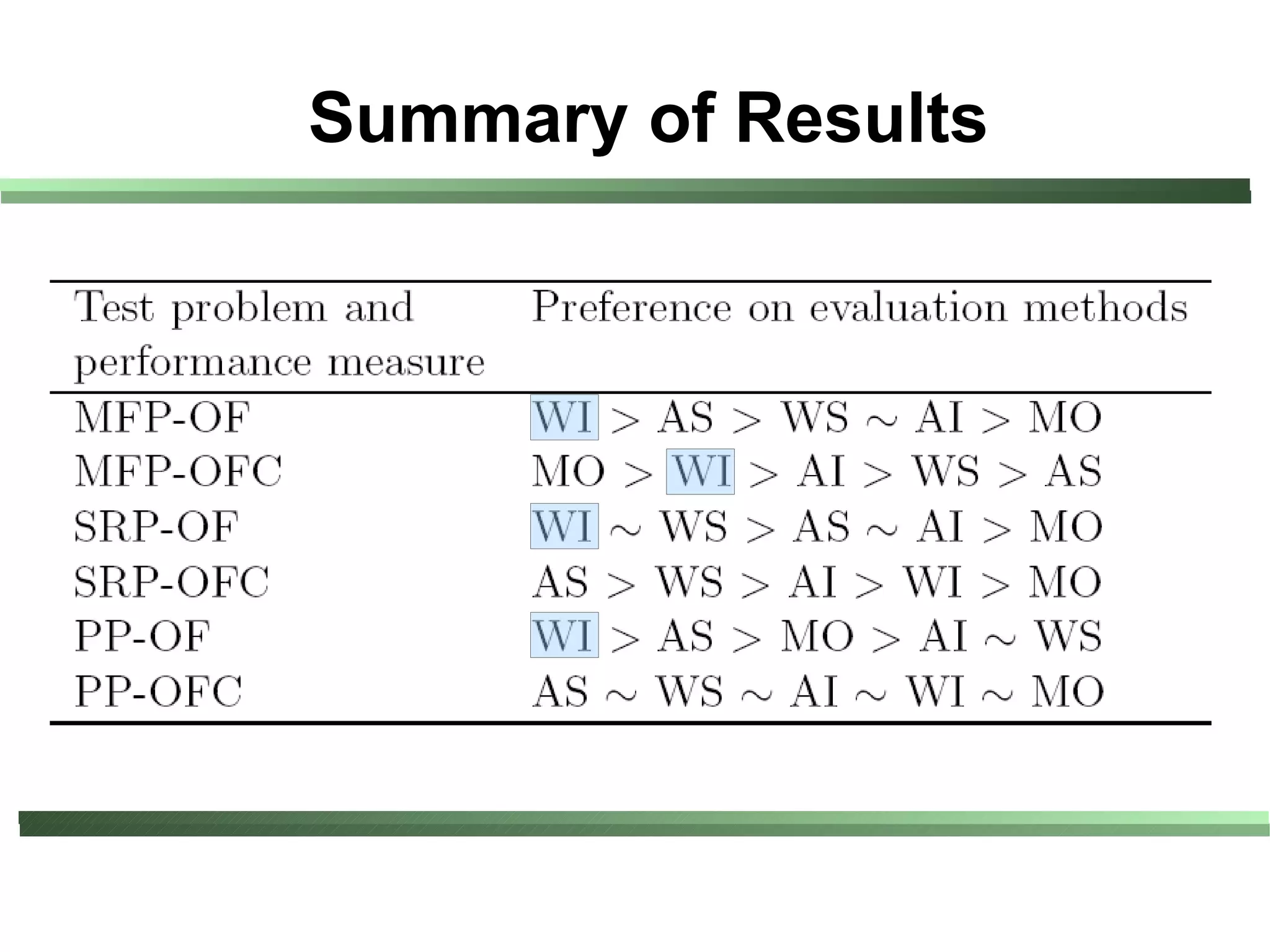

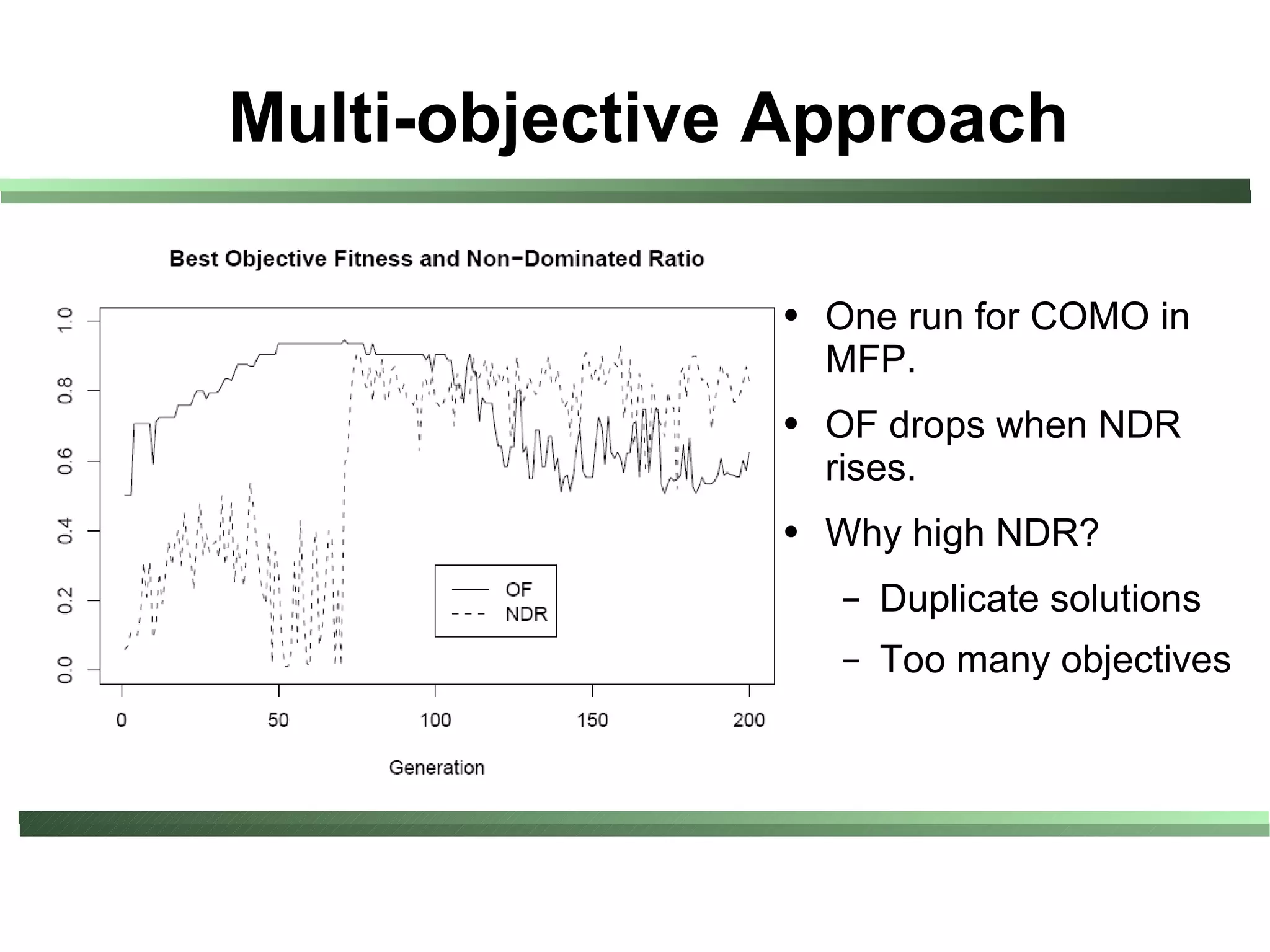

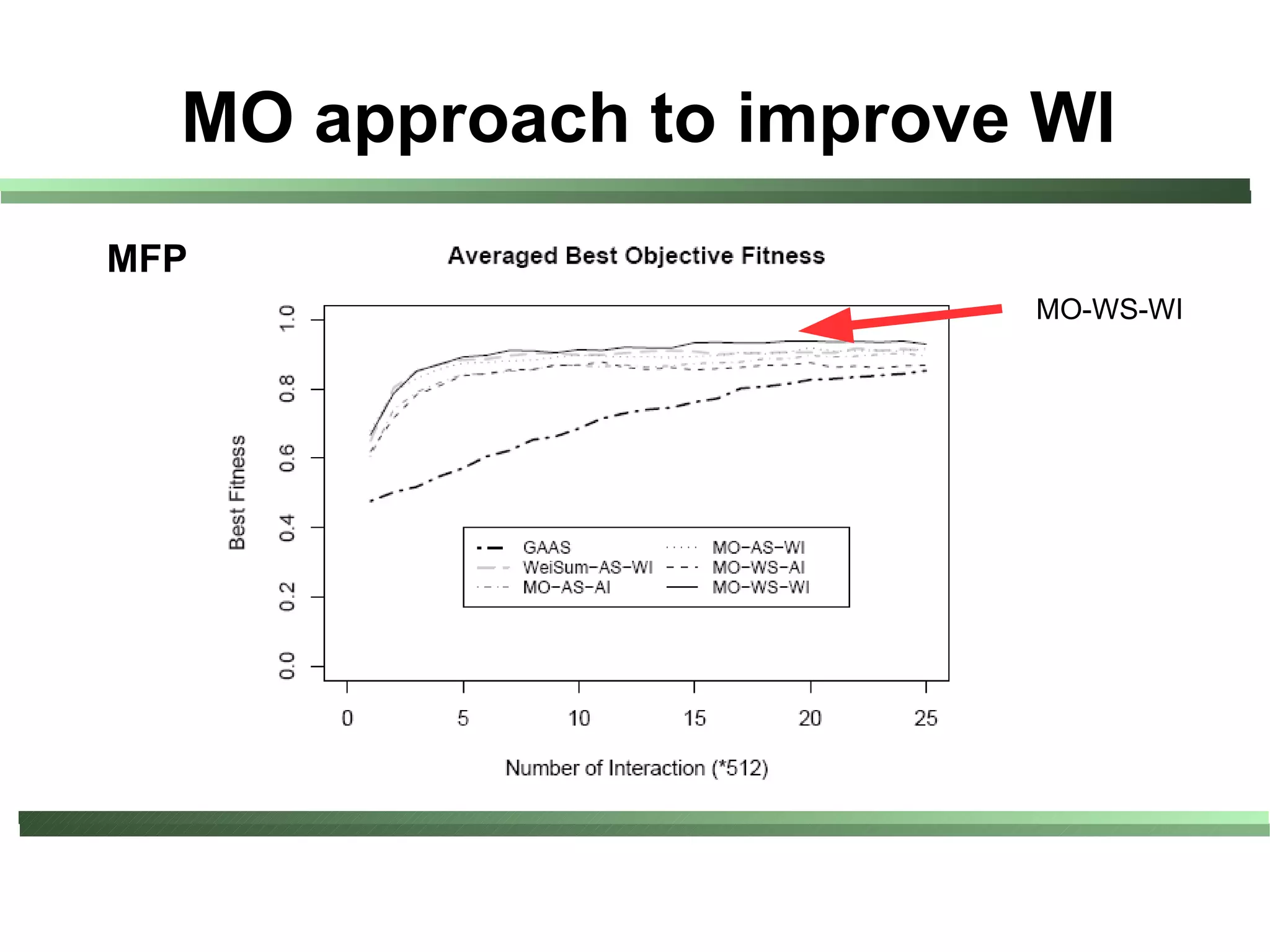

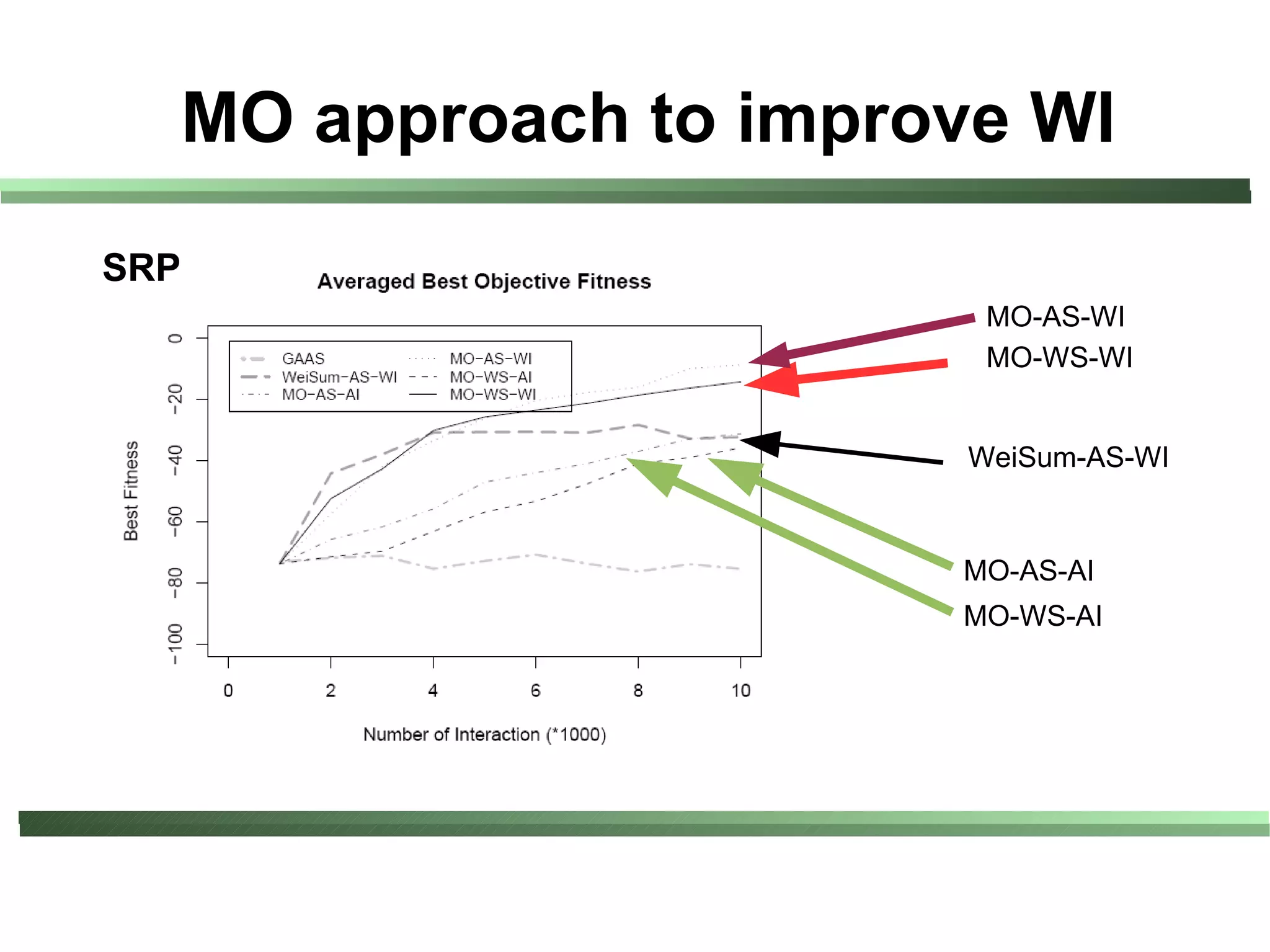

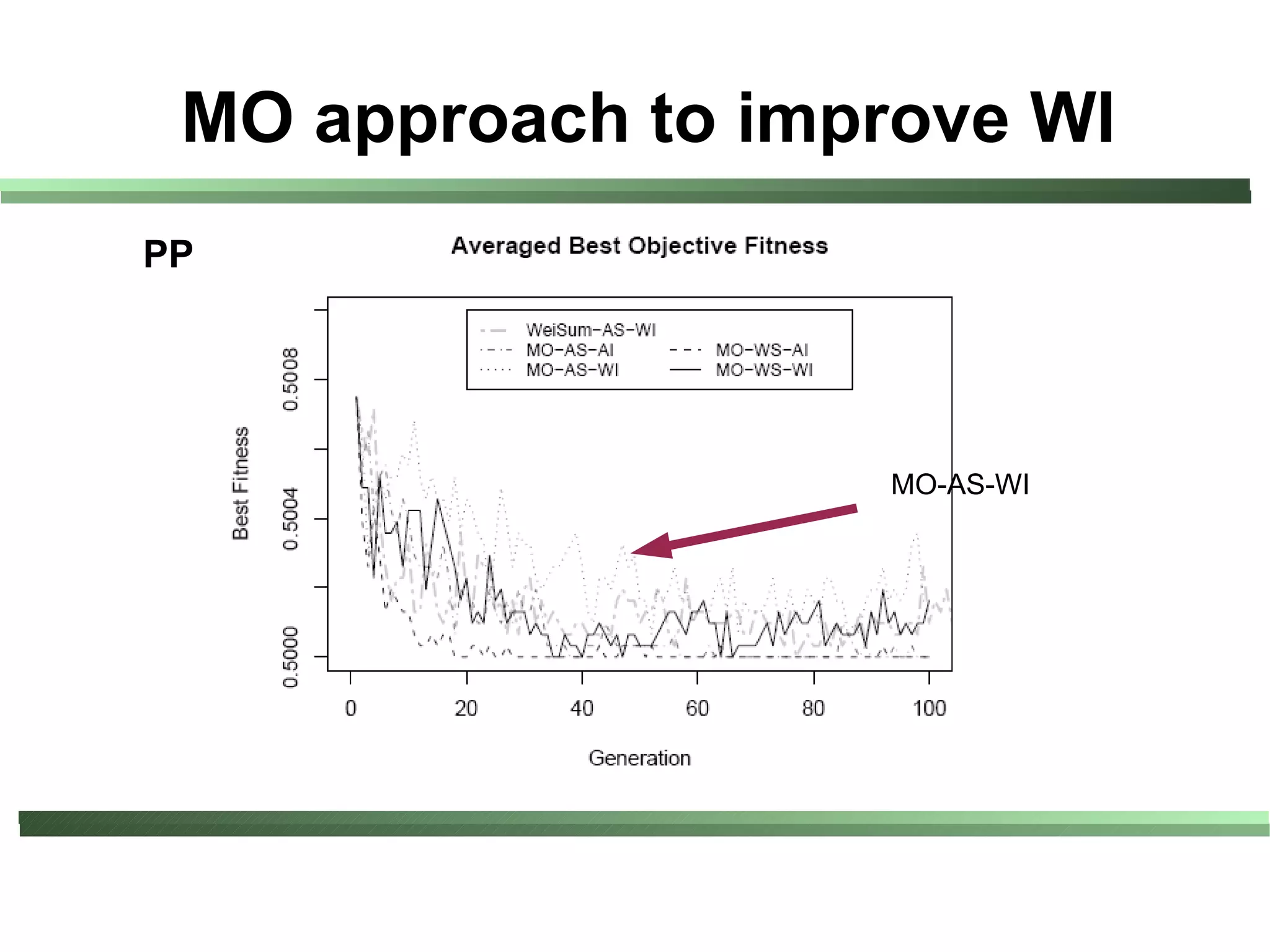

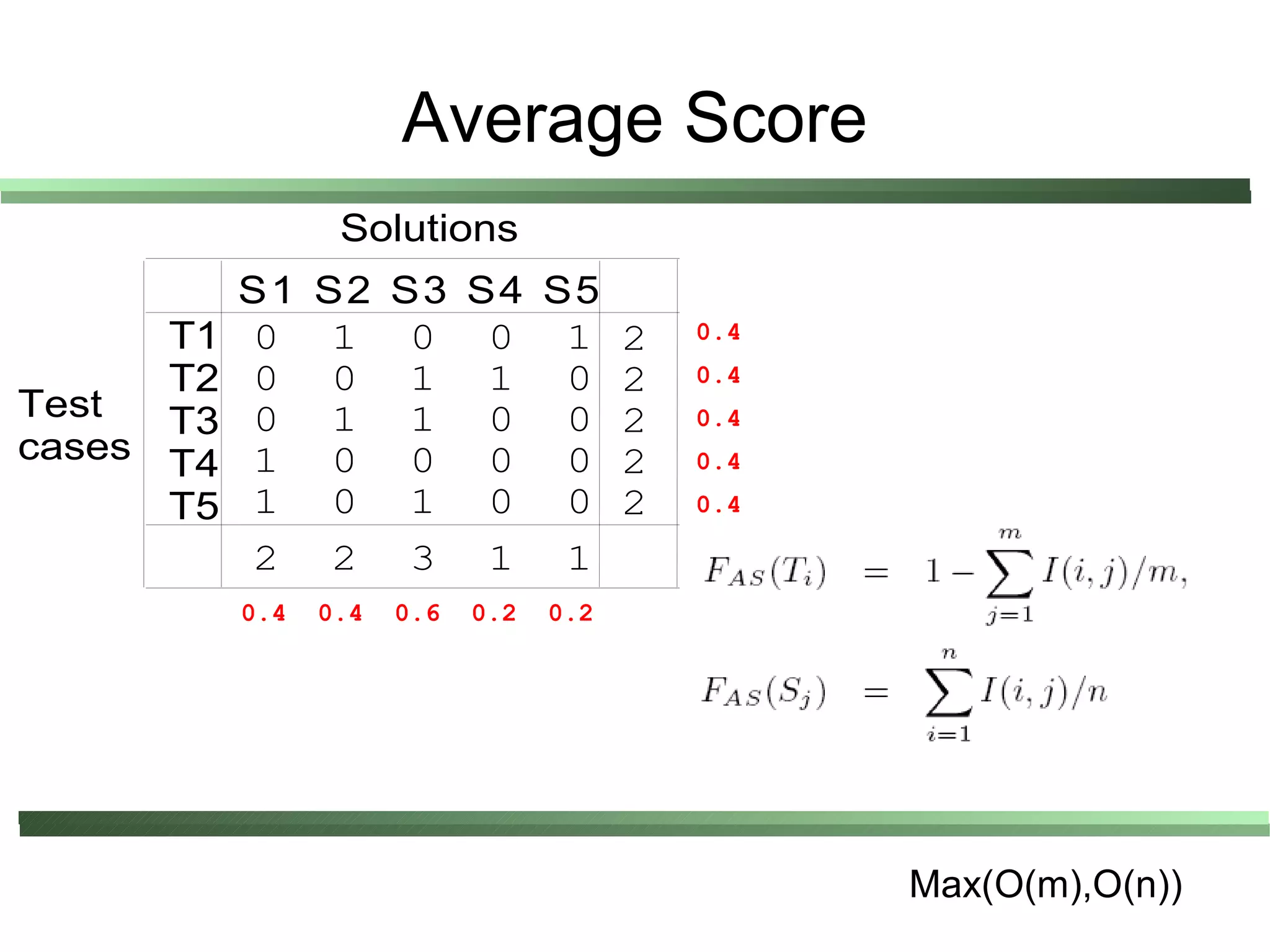

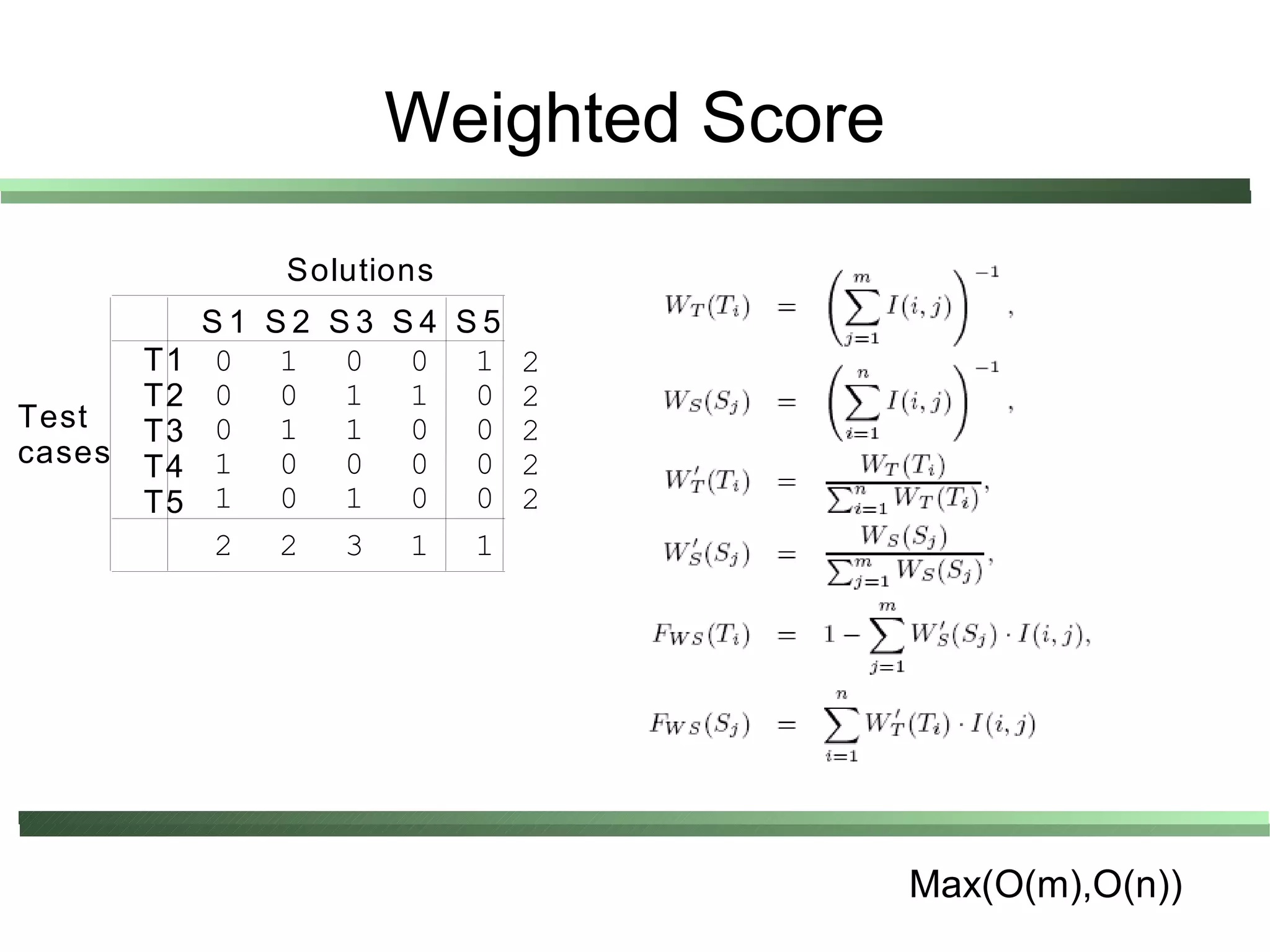

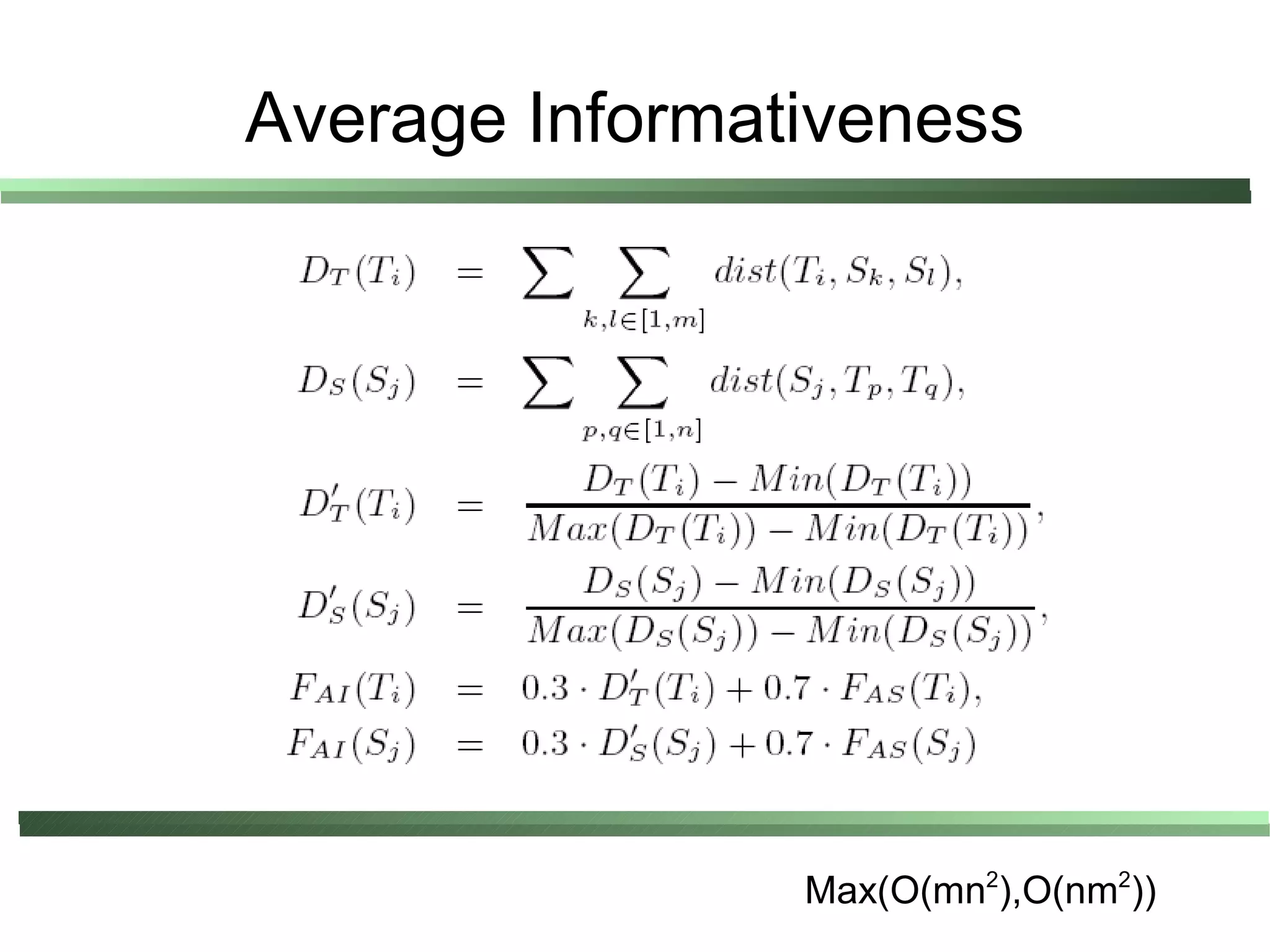

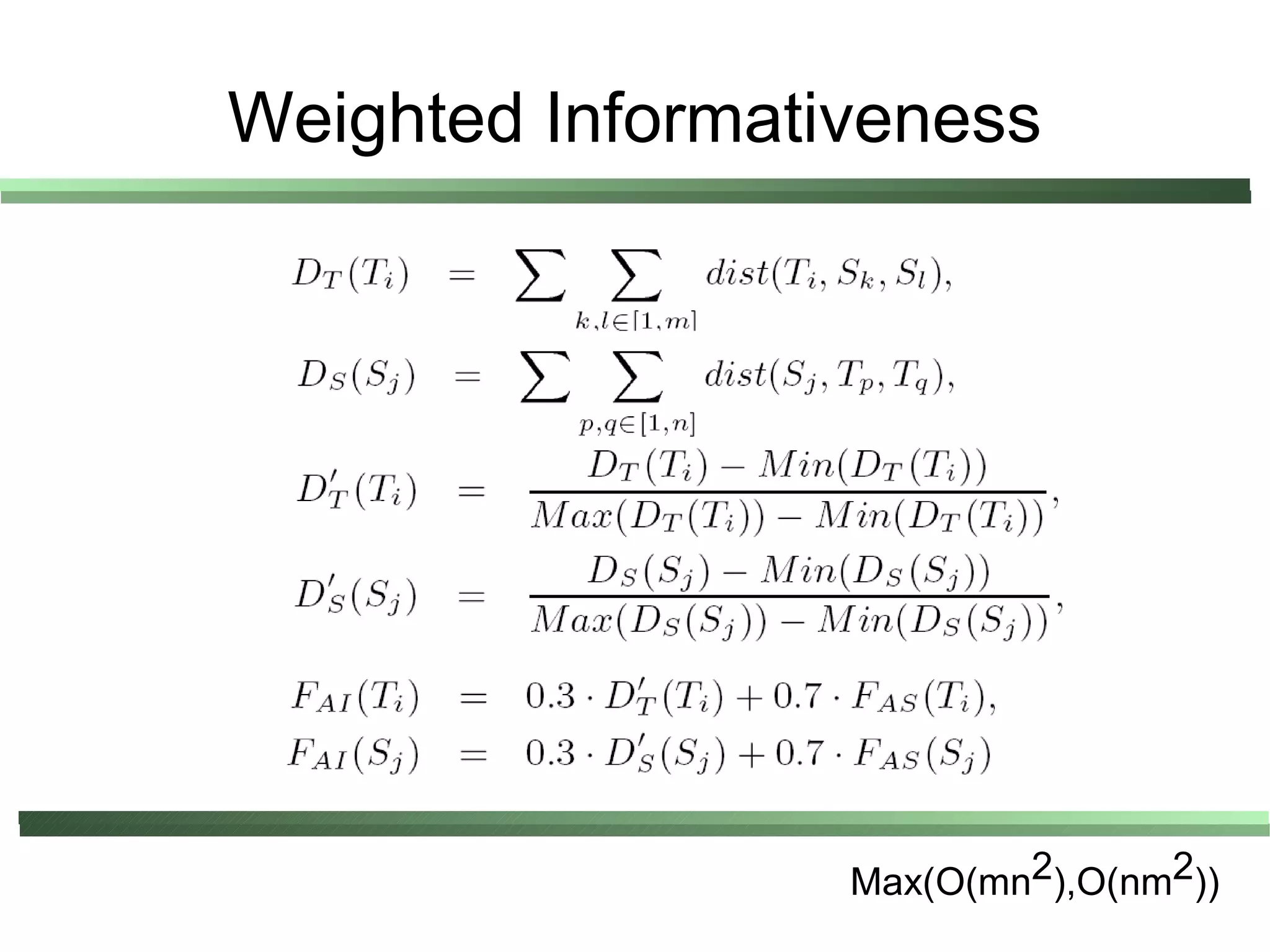

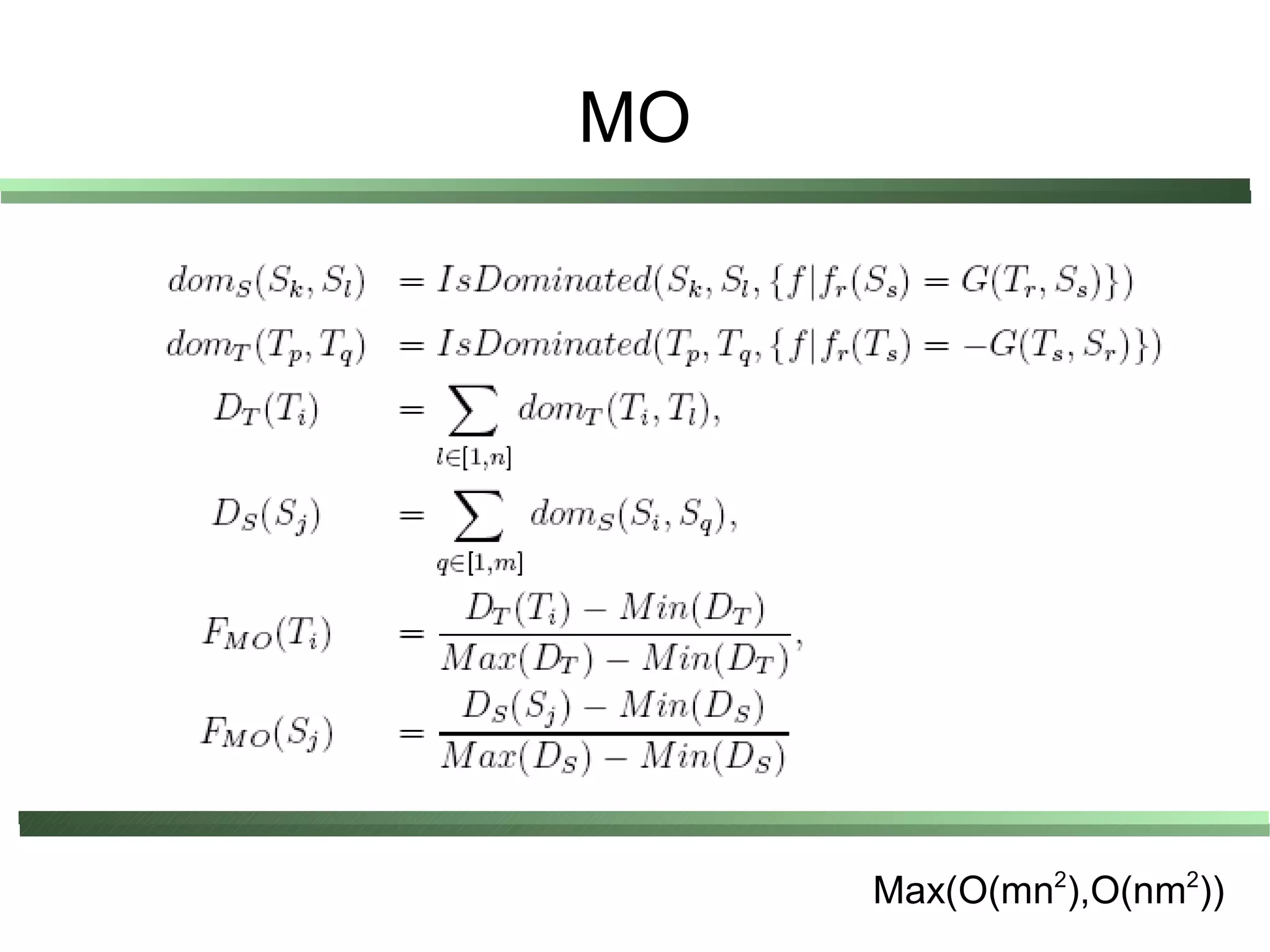

This document compares evaluation methods for coevolution, including averaged score, weighted score, averaged informativeness, weighted informativeness, and a multi-objective approach. It presents results on three test problems: the majority function problem, the symbolic regression problem, and the parity problem. Of the methods compared, the multi-objective approach achieves the best objective fitness and the strongest correlation between subjective and objective fitness.
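To make the evaluation methods named above more concrete, here is a minimal Python sketch on a toy candidate-by-test outcome matrix. The summary does not give the exact definitions, so everything here is an assumption: `averaged_score` is taken to be the fraction of tests passed, `weighted_score` uses a fitness-sharing style weight (each test is worth 1 divided by the number of candidates passing it), `distinctions` counts the candidate pairs a test separates as a rough stand-in for informativeness, and the multi-objective approach is read as Pareto dominance with one objective per test. The `outcomes` matrix and all function names are hypothetical and chosen only for illustration.

```python
from math import sqrt

# Hypothetical toy data: outcomes[i][j] is 1 if candidate i passes test j, else 0.
outcomes = [
    [1, 1, 0, 0],
    [1, 0, 1, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 1],
]


def averaged_score(outcomes):
    """Assumed definition: fraction of tests each candidate passes."""
    return [sum(row) / len(row) for row in outcomes]


def weighted_score(outcomes):
    """Assumed definition: each passed test contributes 1 / (number of passers)."""
    n_tests = len(outcomes[0])
    passers = [sum(row[j] for row in outcomes) for j in range(n_tests)]
    return [
        sum(1 / passers[j] for j in range(n_tests) if row[j])
        for row in outcomes
    ]


def distinctions(outcomes):
    """Assumed informativeness proxy: ordered candidate pairs (a, b) per test
    where a passes the test and b fails it."""
    n = len(outcomes)
    n_tests = len(outcomes[0])
    passers = [sum(row[j] for row in outcomes) for j in range(n_tests)]
    return [p * (n - p) for p in passers]


def dominates(a, b):
    """a Pareto-dominates b: at least as good on every test, better on one."""
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(outcomes):
    """Indices of candidates that no other candidate dominates."""
    return [
        i
        for i, a in enumerate(outcomes)
        if not any(dominates(b, a) for k, b in enumerate(outcomes) if k != i)
    ]


def pearson(xs, ys):
    """Pearson correlation, e.g. between subjective and objective fitness.
    Assumes both sequences have nonzero variance."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


if __name__ == "__main__":
    print("averaged score:", averaged_score(outcomes))
    print("weighted score:", weighted_score(outcomes))
    print("distinctions per test:", distinctions(outcomes))
    print("Pareto front:", pareto_front(outcomes))
```

On this toy matrix, the averaged score ranks the last candidate lowest because it passes only one test, whereas the Pareto view keeps it on the front because it is the only candidate passing that test. This is one common argument for treating tests as separate objectives, though whether it matches the setup behind the reported results is not stated in the summary.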